Method, apparatus and device for achieving co-location of voices and images and medium

A sound-and-image technology in the field of sound-image co-location that addresses a weak sense of presence in video and a poor video playback effect.


Examples

Embodiment 1

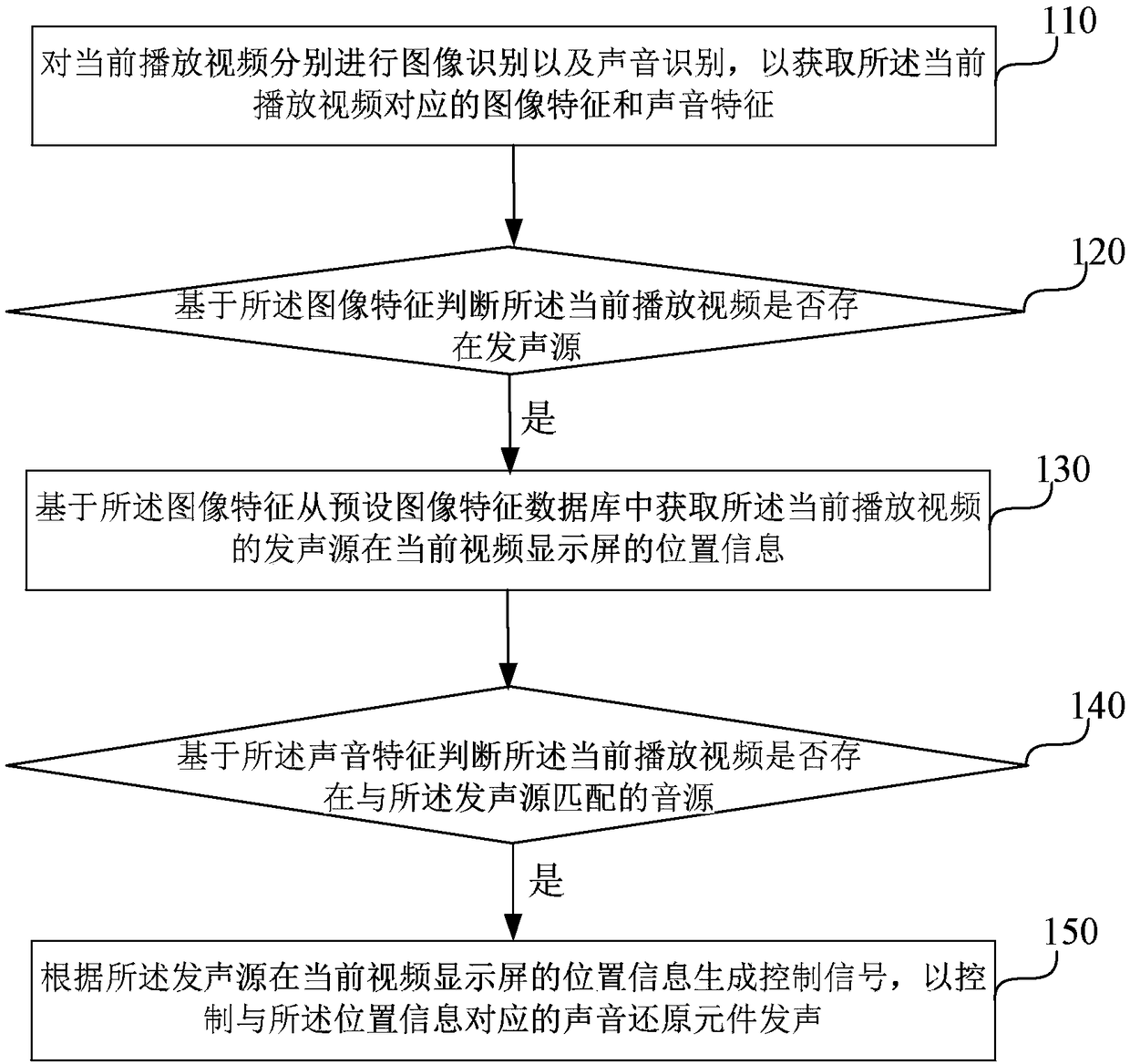

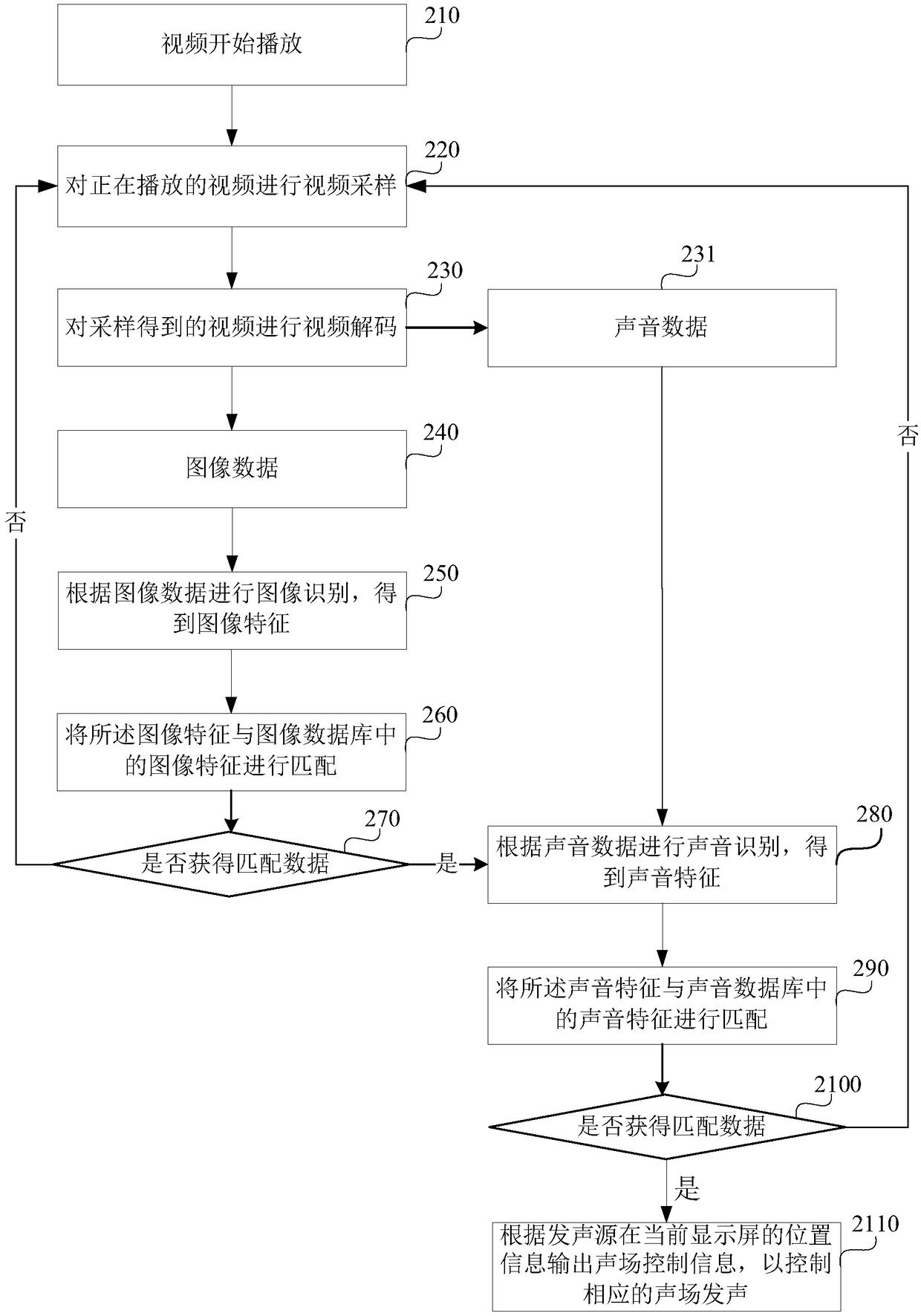

[0048] Figure 1 is a schematic flow chart of a method for achieving sound-image co-location provided by Embodiment 1 of the present invention. The method provided by this embodiment can be applied to electronic products with large display screens, such as televisions of 65 inches and above; … that is, the distance between the sound sources is relatively short, so the sound-image co-location effect cannot be made prominent. The method is suitable for playing videos whose sounds have clearly directional attributes, for example videos containing characters who speak, quarrel or sing; videos containing animals that make sounds; or videos containing objects that make knocking sounds (such as iron, electric welding...
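The applicability conditions in [0048] (a large screen and sounds with clear direction) can be expressed as a simple gate. The sketch below is illustrative only: it assumes 65 inches is used as a hard threshold and that some classifier has already labeled the video's sound types; the label names in `DIRECTIONAL_SOUND_TYPES` and the function `colocation_applicable` are hypothetical.

```python
from dataclasses import dataclass

# Assumed threshold based on the 65-inch example in [0048]; the text does
# not state that this is a hard cut-off.
MIN_SCREEN_INCHES = 65

# Hypothetical labels paraphrasing the directional sounds listed in [0048].
DIRECTIONAL_SOUND_TYPES = {
    "speech", "quarrel", "singing", "animal", "knocking", "welding",
}


@dataclass
class PlaybackContext:
    screen_inches: float
    sound_types: set[str]  # labels from some sound classifier (assumed)


def colocation_applicable(ctx: PlaybackContext) -> bool:
    """Return True when the screen is large enough and the video contains
    at least one sound type with an obvious direction."""
    large_screen = ctx.screen_inches >= MIN_SCREEN_INCHES
    directional = bool(ctx.sound_types & DIRECTIONAL_SOUND_TYPES)
    return large_screen and directional
```

For example, a 75-inch set playing a scene labeled `{"speech", "music"}` passes the gate, while a 55-inch set does not, regardless of content.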

Embodiment 2

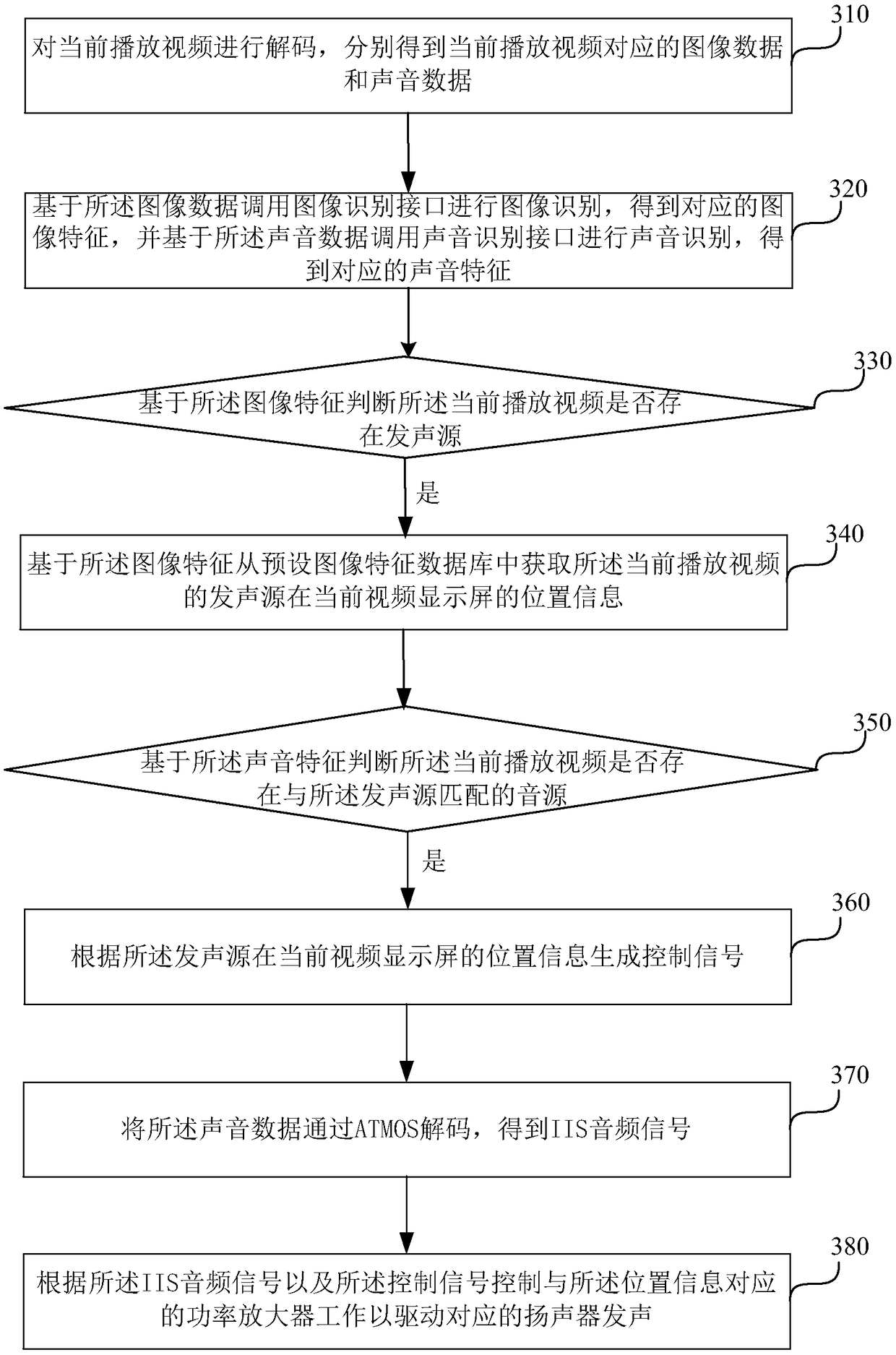

[0101] Figure 3 is a schematic flow chart of a method for achieving sound-image co-location provided by Embodiment 2 of the present invention. Building on the above embodiments, this embodiment describes the process of restoring the sound of the sound source. As shown in Figure 3, the method specifically includes the following steps:

[0102] 310. Decode the currently playing video to obtain the image data and sound data corresponding to it.

[0103] 320. Call an image recognition interface to perform image recognition on the image data to obtain the corresponding image features, and call a sound recognition interface to perform sound recognition on the sound data to obtain the corresponding sound features.

[0104] 330. Determine, based on the image features, whether there is a sound source in the currently playing video; if there is, continue to step 340...
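Steps 310 to 330 form a short pipeline: decode, recognize, then gate on whether the image features contain a sound source. A minimal sketch follows, assuming the decoder and both recognition interfaces are injected as plain callables returning string labels; the label set `SOUND_SOURCE_LABELS` and the function name are hypothetical, and step 340 onward is left to the caller because the text is truncated there.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical image labels treated as potential sound sources.
SOUND_SOURCE_LABELS = {"person", "dog", "bird", "hammer"}


@dataclass
class Features:
    image: list[str] = field(default_factory=list)  # detected object labels
    sound: list[str] = field(default_factory=list)  # detected sound labels


def run_steps_310_to_330(
    video: bytes,
    decode: Callable[[bytes], tuple[object, object]],
    recognize_image: Callable[[object], list[str]],
    recognize_sound: Callable[[object], list[str]],
) -> Optional[Features]:
    # Step 310: decode the currently playing video into image and sound data.
    image_data, sound_data = decode(video)
    # Step 320: call the image and sound recognition interfaces.
    features = Features(
        image=recognize_image(image_data),
        sound=recognize_sound(sound_data),
    )
    # Step 330: judge from the image features whether a sound source exists.
    if any(label in SOUND_SOURCE_LABELS for label in features.image):
        return features  # the caller would continue with step 340
    return None  # no sound source detected: skip co-location processing
```

Passing stub callables (for instance, an image recognizer that returns `["person", "sofa"]`) is enough to exercise the branch logic without a real decoder.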

Embodiment 3

[0127] Figure 6 is a schematic structural diagram of a device for achieving sound-image co-location provided in Embodiment 3 of the present invention. As shown in Figure 6, the device includes: an identification module 610, a sound source judgment module 620, an acquisition module 630, a sound source judgment module 640 and a control module 650;

[0128] Among them, the identification module 610 is configured to perform image recognition and sound recognition on the currently playing video, so as to obtain the image features and sound features corresponding to the currently playing video; the sound source judgment module 620 is configured to judge, based on the image features, whether there is a sound source in the currently playing video; the acquisition module 630 is configured to obtain, based on the image features, the sound source of the currently playing video from the preset image feature database if there is a sound source in the currently playing video in the current v...
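The division of responsibilities among modules 610, 620 and 630 can be sketched as small cooperating classes. This is an assumption-laden illustration: the "preset image feature database" is modeled as a plain dictionary from image-feature labels to sound-source descriptions (its real schema is not given), recognition is stubbed out, and modules 640 and 650 are omitted because their descriptions are truncated.

```python
from typing import Optional

# Hypothetical stand-in for the preset image feature database:
# image feature label -> sound source description.
PRESET_IMAGE_FEATURE_DB = {
    "person": "human voice",
    "dog": "dog bark",
    "hammer": "knocking",
}


class IdentificationModule:  # module 610
    def identify(self, image_labels, sound_labels):
        # Stub: real image/sound recognition would happen here.
        return list(image_labels), list(sound_labels)


class SoundSourceJudgmentModule:  # module 620
    def has_sound_source(self, image_features, db) -> bool:
        return any(f in db for f in image_features)


class AcquisitionModule:  # module 630
    def acquire(self, image_features, db) -> Optional[str]:
        # Return the first sound source matched in the database.
        for f in image_features:
            if f in db:
                return db[f]
        return None


class ColocationDevice:
    def __init__(self, db: Optional[dict] = None):
        self.db = db if db is not None else PRESET_IMAGE_FEATURE_DB
        self.identification = IdentificationModule()
        self.judgment = SoundSourceJudgmentModule()
        self.acquisition = AcquisitionModule()

    def process(self, image_labels, sound_labels) -> Optional[str]:
        # Sound features are produced but unused here, since the truncated
        # text does not say how later modules consume them.
        image_features, _sound_features = self.identification.identify(
            image_labels, sound_labels
        )
        if not self.judgment.has_sound_source(image_features, self.db):
            return None
        return self.acquisition.acquire(image_features, self.db)
```

Keeping each module as its own class mirrors the module numbering in Figure 6 and lets a real recognizer or database be swapped in without touching the orchestration in `process`.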
