Human activity gesture recognition method based on multi-level end-to-end neural network

This neural-network-based human activity recognition technology, applied in the medical field, addresses three problems: neural networks requiring large amounts of training data, difficulty in distinguishing between human activities, and limited recognition accuracy. Its effects are reduced computational complexity and power consumption and improved recognition accuracy.


Embodiment

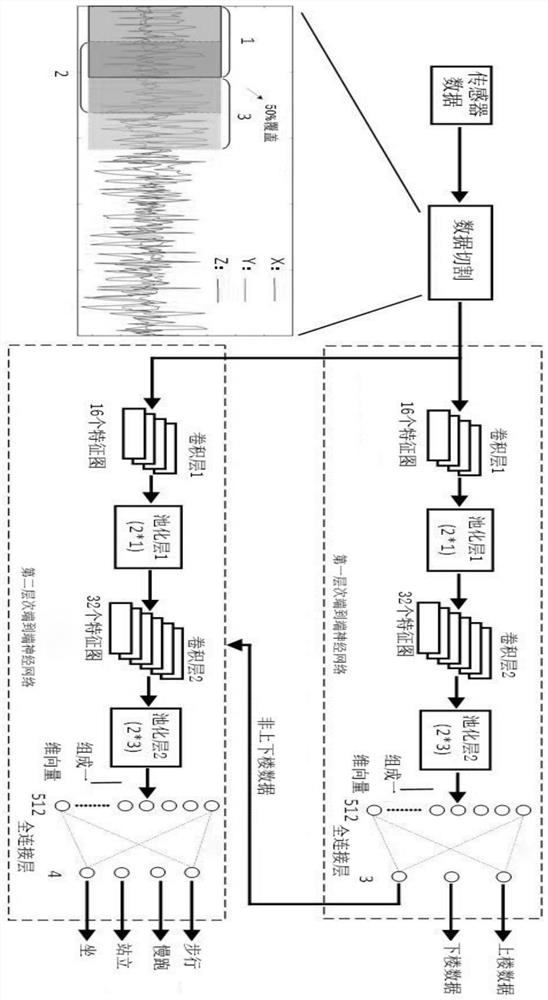

[0045] As shown in Figures 1 and 2, this embodiment provides a human activity gesture recognition method based on a multi-level end-to-end neural network. It uses several motion sensors arranged on the surface of the human body to collect raw data and labeled data of human activity gestures. The motion sensors include, but are not limited to, accelerometers, gyroscopes, and magnetometers. Note that ordinal terms such as "first" and "second" in this embodiment serve only to distinguish similar components or terms. The types of human activity gestures in this embodiment include, but are not limited to, going upstairs, going downstairs, walking, jogging, standing, and sitting. The specific steps are as follows:

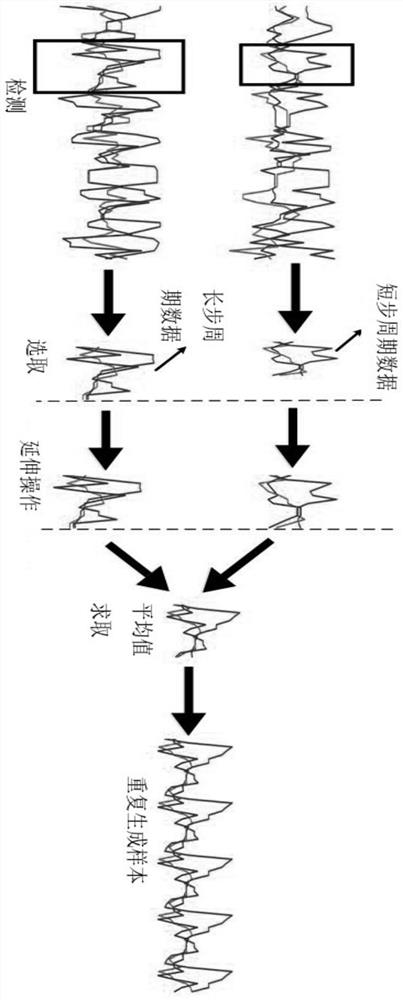

[0046] Step 1, training the multi-level end-to-end neural network: use the motion sensors to collect labeled data of human activity gestures, segment the labeled data with a sliding window, and obtain the first labeled ...
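The sliding-window segmentation mentioned in this step can be illustrated with a minimal sketch. The patent text available here does not give the window length, the stride, or the rule for assigning a label to each window, so `window_size`, `stride`, and the majority-vote labeling below are assumptions made for illustration only. The function name `sliding_windows` is likewise hypothetical.

```python
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[float, ...]  # one multi-axis sensor reading, e.g. (ax, ay, az)


def sliding_windows(
    samples: Sequence[Sample],
    labels: Sequence[str],
    window_size: int,
    stride: int,
) -> List[Tuple[List[Sample], str]]:
    """Cut a labeled sensor stream into fixed-length, possibly overlapping windows.

    Assumption: each window takes the most frequent per-sample label inside it.
    The source does not specify how window labels are chosen.
    """
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have the same length")
    if window_size <= 0 or stride <= 0:
        raise ValueError("window_size and stride must be positive")

    windows = []
    # Stop early enough that the final window is still full length.
    for start in range(0, len(samples) - window_size + 1, stride):
        end = start + window_size
        majority_label = Counter(labels[start:end]).most_common(1)[0][0]
        windows.append((list(samples[start:end]), majority_label))
    return windows


# Hypothetical 3-axis accelerometer stream: 5 "walking" then 5 "sitting" samples.
demo_samples = [(0.1 * i, 0.0, 9.8) for i in range(10)]
demo_labels = ["walking"] * 5 + ["sitting"] * 5
demo_windows = sliding_windows(demo_samples, demo_labels, window_size=4, stride=2)
```

With a stride smaller than the window size, consecutive windows overlap, which yields more training segments from the same recording. Whether the method described here uses overlapping windows is not stated in the visible text.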
