Video behavior identification method based on an Attention-LSTM network

A video behavior recognition method based on an Attention-LSTM network, applied in the field of computer vision. It addresses problems such as time-consuming action localization and low time efficiency, and has the effect of improving recognition accuracy.


Embodiment Construction

[0037] The technical solutions of the present invention will be described in detail below in conjunction with the accompanying drawings.

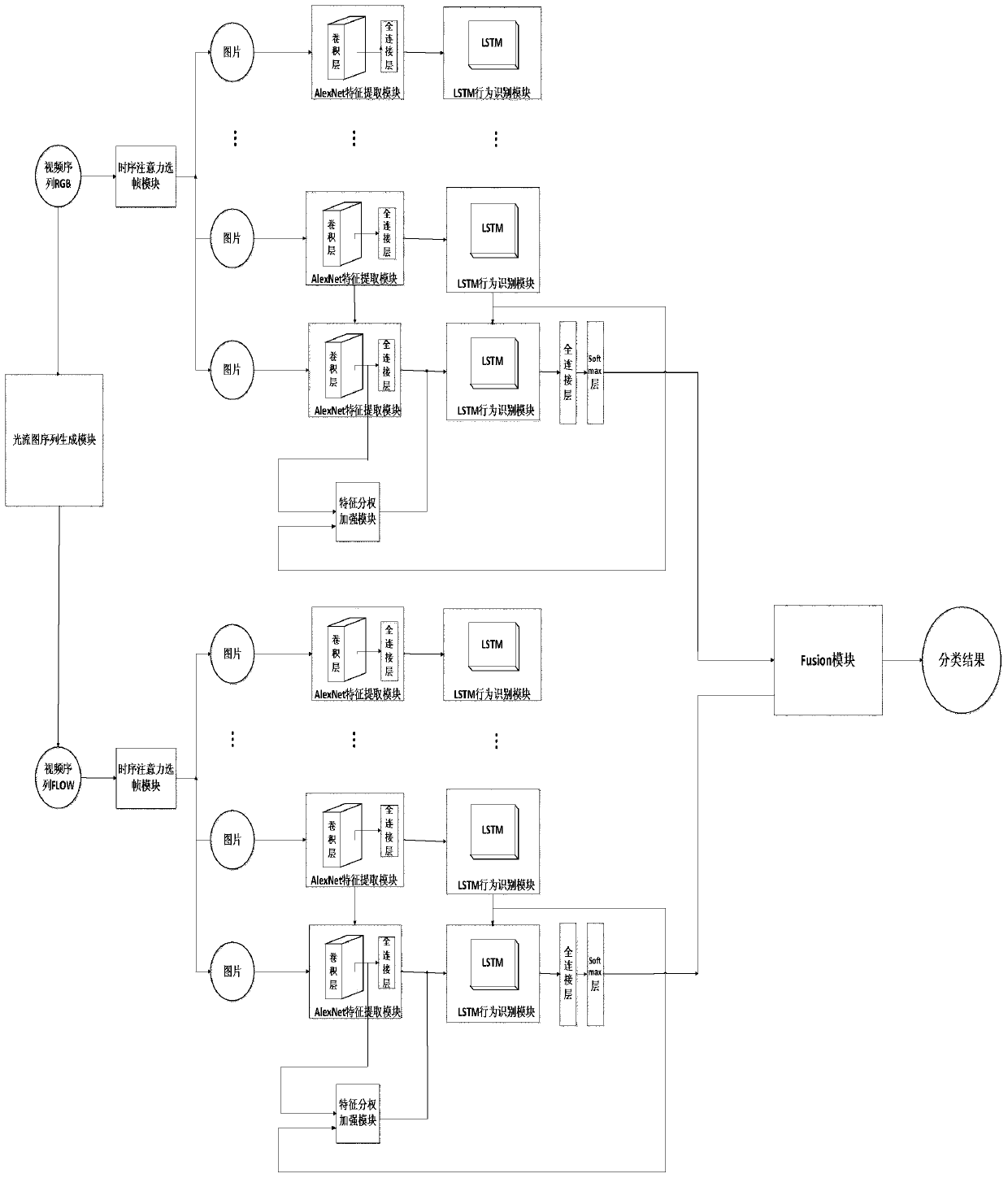

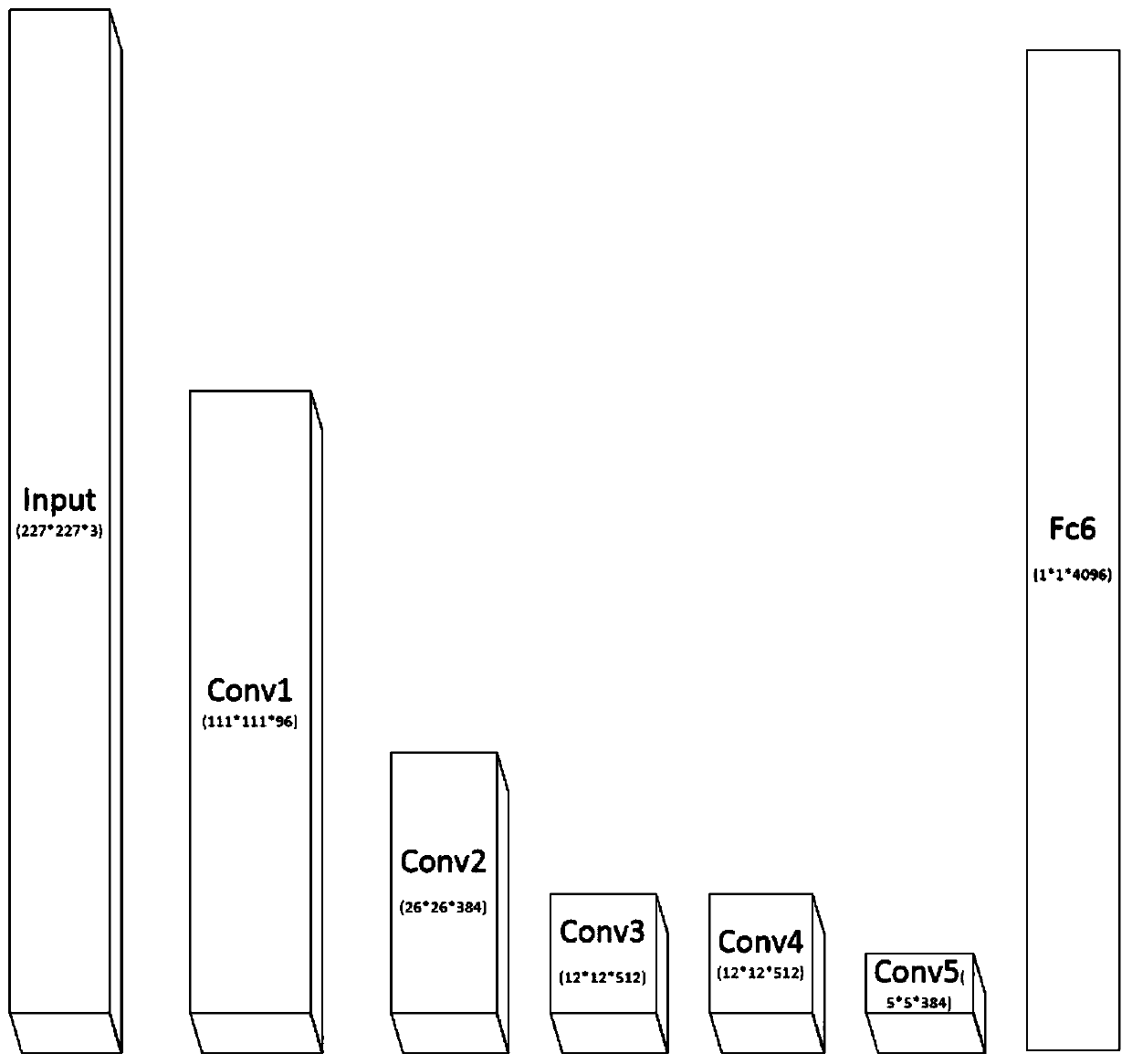

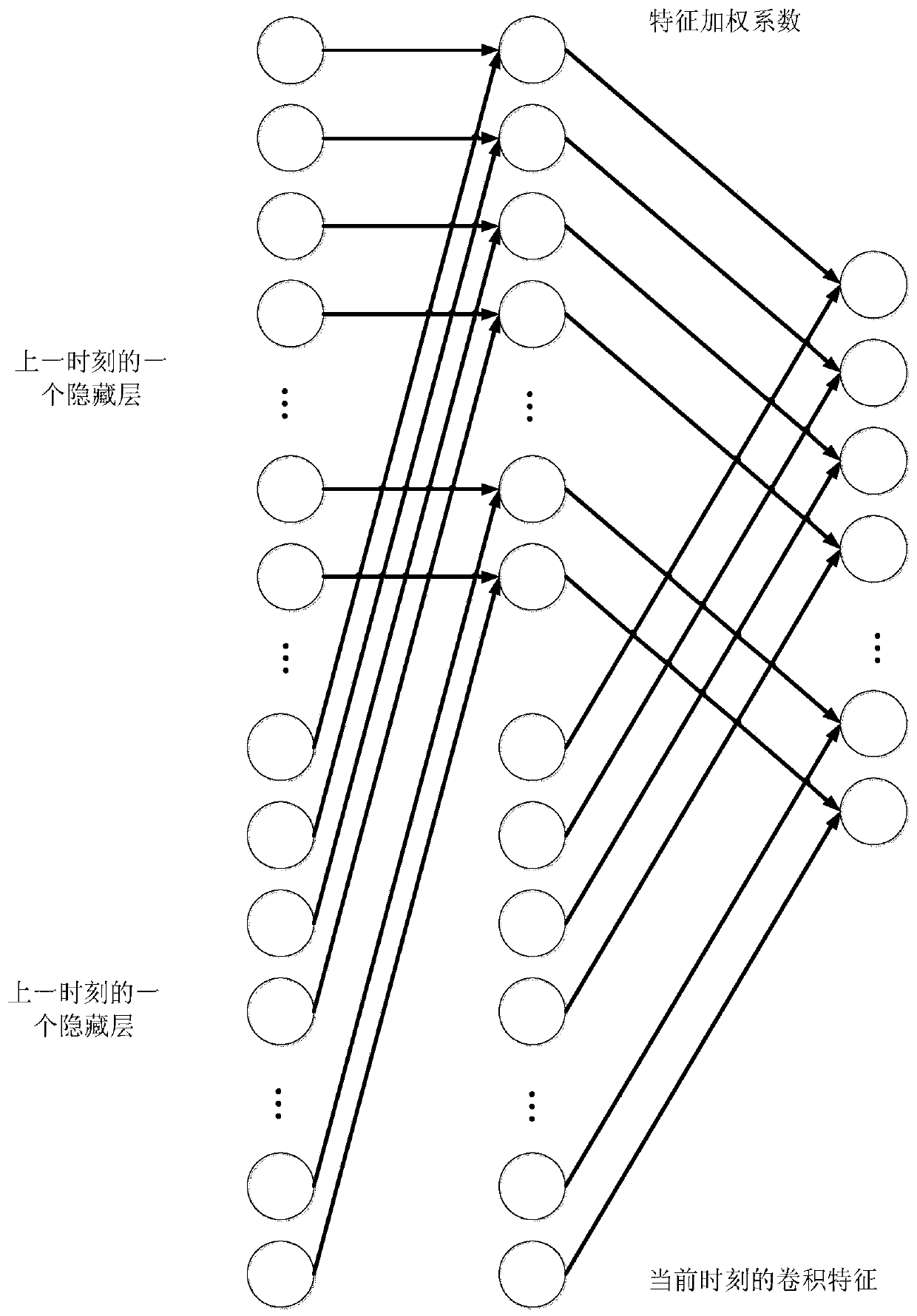

[0038] A video behavior recognition method based on an Attention-LSTM network is shown in Figure 1. First, the input RGB image sequence is transformed by the optical flow image sequence generation module to obtain the optical flow image sequence. Second, the obtained optical flow image sequence and the original RGB image sequence are input into the temporal attention frame acquisition module, which selects the non-redundant key frames in the two image sequences. Then, the key frame sequences of the two types of images are input into the AlexNet network feature extraction module, which extracts the temporal and spatial features of the two types of frame images respectively. At the same time, between the last convolutional layer and the fully connected layer of the AlexNet network, the feature decentralization module is used to strengthen the feature map...
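The first two stages described above (deriving a motion sequence from the RGB frames, then keeping only non-redundant key frames from both sequences) can be illustrated with a minimal sketch. It rests on loudly stated assumptions: the text does not specify how the optical flow module or the temporal attention frame acquisition module work internally, so simple frame differencing stands in for optical flow and a difference threshold stands in for temporal attention. The names `motion_proxy`, `select_key_frames`, `two_stream_key_frames`, and the `threshold` parameter are hypothetical and do not come from the patent.

```python
from typing import List, Tuple

Frame = List[List[float]]  # one grayscale frame as rows of pixel intensities


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two equally sized frames."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count if count else 0.0


def motion_proxy(frames: List[Frame]) -> List[Frame]:
    """ASSUMPTION: stand-in for the optical flow generation module.

    Returns the per-pixel difference of consecutive frames. Real optical
    flow estimates displacement vectors; this is only a crude motion cue.
    """
    return [
        [[pb - pa for pa, pb in zip(ra, rb)] for ra, rb in zip(prev, cur)]
        for prev, cur in zip(frames, frames[1:])
    ]


def select_key_frames(frames: List[Frame], threshold: float) -> List[int]:
    """ASSUMPTION: stand-in for the temporal attention frame acquisition module.

    Keeps the first frame, then keeps each later frame only if it differs
    from the most recently kept frame by more than `threshold`.
    """
    if not frames:
        return []
    kept = [0]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[kept[-1]], frames[i]) > threshold:
            kept.append(i)
    return kept


def two_stream_key_frames(
    frames: List[Frame], threshold: float = 0.1
) -> Tuple[List[int], List[int]]:
    """Return key-frame indices for the RGB stream and the motion stream."""
    flow = motion_proxy(frames)
    return select_key_frames(frames, threshold), select_key_frames(flow, threshold)


# Toy sequence: two identical dark frames followed by two identical bright frames.
toy_frames = [[[0.0, 0.0]], [[0.0, 0.0]], [[1.0, 1.0]], [[1.0, 1.0]]]
rgb_keys, flow_keys = two_stream_key_frames(toy_frames)
```

In the method as described, the two key frame sequences would then be passed to the AlexNet feature extraction module; that stage is omitted here because the source gives no further detail on it.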
