An action video extraction and classification method based on moving object detection

A technology for moving object detection and action classification, applied in the fields of character and pattern recognition, instruments, and computing components. It addresses the inability to effectively locate the starting frame of an action, which increases both the amount of computation and the difficulty of action recognition, and it achieves real-time video extraction and classification.
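The summary credits the improvement to locating an action's starting frame through moving object detection, but it does not say which detector is used. As an illustration only, here is a minimal sketch that swaps in plain frame differencing, which is not necessarily the patent's method: a frame is flagged as the start of motion when the fraction of pixels whose grayscale intensity changed by more than `pixel_thresh` relative to the previous frame exceeds `area_ratio`. The function names and both threshold defaults are hypothetical.

```python
from typing import List, Optional, Sequence

# A grayscale frame as rows of 0-255 intensities (hypothetical representation).
Frame = Sequence[Sequence[int]]


def changed_fraction(prev: Frame, curr: Frame, pixel_thresh: int) -> float:
    """Fraction of pixels whose absolute intensity change exceeds pixel_thresh."""
    total = 0
    changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += 1
            if abs(c - p) > pixel_thresh:
                changed += 1
    return changed / total if total else 0.0


def find_motion_start(frames: List[Frame], pixel_thresh: int = 25,
                      area_ratio: float = 0.02) -> Optional[int]:
    """Index of the first frame that differs enough from its predecessor.

    Frame differencing is an illustrative stand-in, not the patent's stated
    detector; the threshold defaults are assumptions, not published values.
    """
    for i in range(1, len(frames)):
        if changed_fraction(frames[i - 1], frames[i], pixel_thresh) > area_ratio:
            return i
    return None  # no motion found in the sequence


if __name__ == "__main__":
    blank = [[0] * 10 for _ in range(10)]
    moved = [row[:] for row in blank]
    for r in range(3, 6):          # a 3x3 bright "skater" appears in frame 3
        for c in range(3, 6):
            moved[r][c] = 200
    print(find_motion_start([blank, blank, blank, moved]))  # → 3
```

Skipping frames before the detected start is what would reduce the computation passed on to the recognition stage; a production system would more likely use a background-subtraction model that tolerates camera motion and lighting changes.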


Embodiment

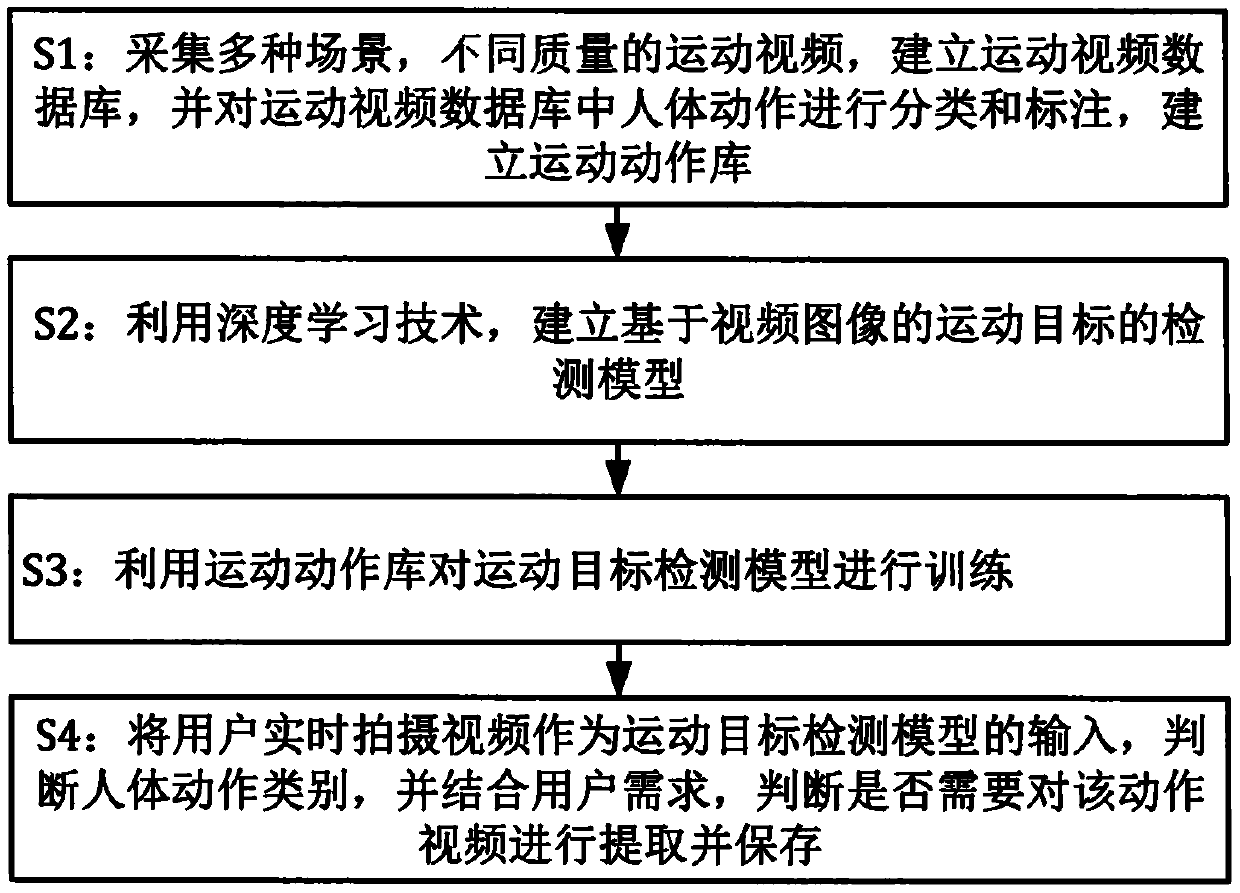

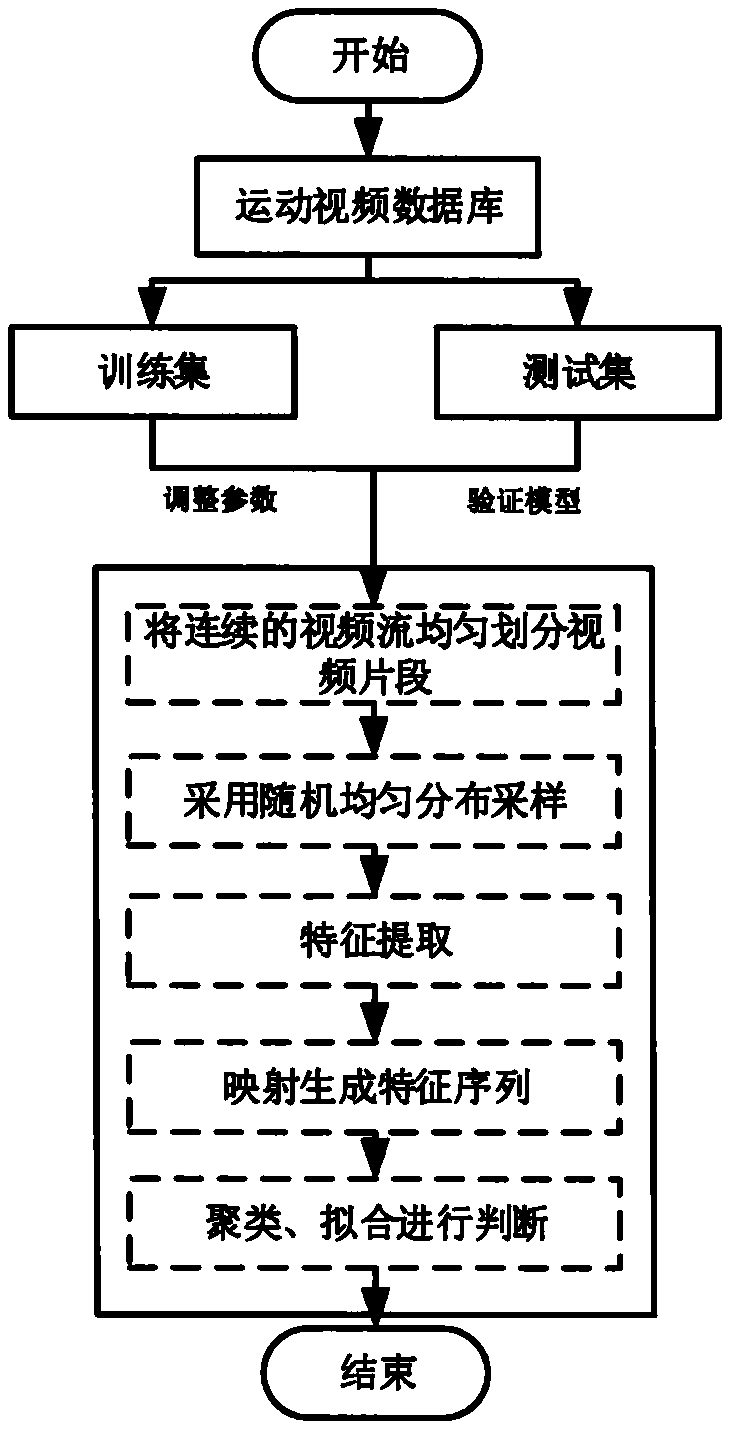

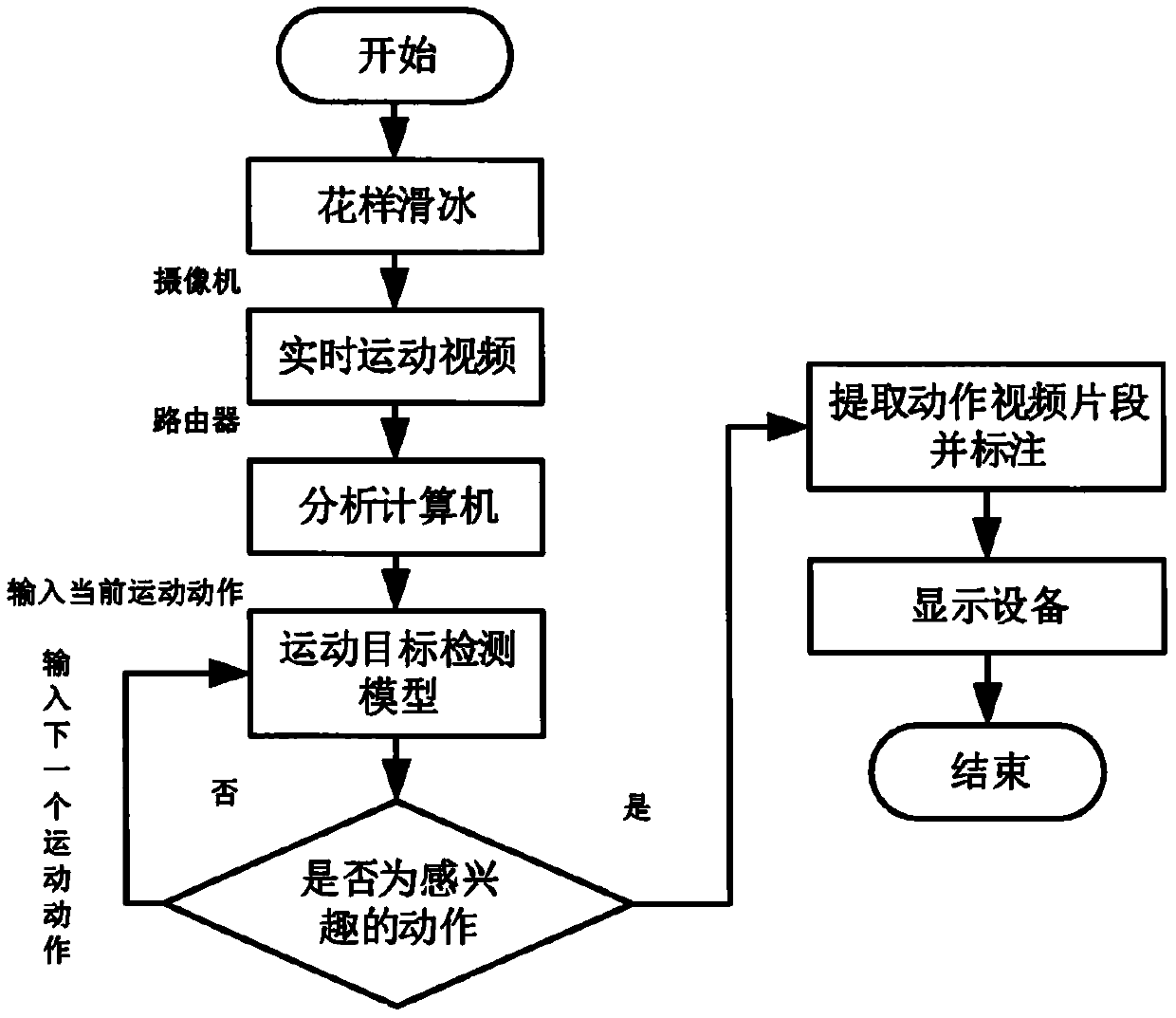

[0030] When a figure skating coach explains the key points of an athlete's movements or analyzes an opponent's movements, it is often necessary to record video of the athletes and clip out the movements of interest, such as forward outside jumps. This embodiment provides an action video extraction and classification method based on moving object detection. With reference to Figure 1, the method comprises the following steps:

[0031] Step One:

[0032] Collect figure skating videos from event cameras and online sources that vary in scene, resolution, frame rate, contrast, shooting angle, number of people filmed, shooting distance, and other factors, and build a sports video database. Identify the human body movements in this database as jumps, spins, lifts, footwork, twists, and so on, then label them and store them by category in a sports action library;
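Step One amounts to building a labeled, categorized store of clips. A minimal sketch of such a sports action library follows, assuming each clip is described by the capture factors listed in [0032] plus a start/end frame range and one action label. All class and field names are hypothetical, and the category set holds only the five actions the text names explicitly (the source's "and so on" implies there may be more).

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Only the categories named in [0032]; the source implies the list is open-ended.
ACTION_CLASSES = {"jumping", "spinning", "lifting", "footwork", "twisting"}


@dataclass
class ClipRecord:
    """One labeled clip; fields are hypothetical, mirroring the listed factors."""
    video_id: str
    source: str                  # e.g. "event_camera" or "network_video"
    resolution: Tuple[int, int]  # (width, height) in pixels
    frame_rate: float            # frames per second
    contrast: float
    shooting_angle: str
    num_people: int              # number of people in frame
    shooting_distance: str
    start_frame: int
    end_frame: int
    action: str                  # must be one of ACTION_CLASSES


class ActionLibrary:
    """Stores labeled clips grouped by action category."""

    def __init__(self) -> None:
        self._by_action: Dict[str, List[ClipRecord]] = defaultdict(list)

    def add(self, clip: ClipRecord) -> None:
        # Reject labels outside the known categories and inverted frame ranges.
        if clip.action not in ACTION_CLASSES:
            raise ValueError(f"unknown action label: {clip.action!r}")
        if clip.end_frame < clip.start_frame:
            raise ValueError("end_frame must not precede start_frame")
        self._by_action[clip.action].append(clip)

    def clips(self, action: str) -> List[ClipRecord]:
        # .get() avoids creating empty entries for categories never added.
        return list(self._by_action.get(action, []))

    def counts(self) -> Dict[str, int]:
        return {a: len(c) for a, c in self._by_action.items()}
```

Grouping by label keeps per-category retrieval cheap when later steps need, for example, every stored jump for training or review, while the metadata fields preserve the diversity of capture conditions that the database is meant to cover.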

[0033] Step Two: Combine ...
