A data caching method of a distributed storage system

This technology relates to distributed storage and data caching and is classified under input/output to record carriers. It addresses problems of inaccuracy and problems that existing approaches are unable to solve, with the effects of improving service quality, optimizing utilization, and avoiding cache pollution.

AI Technical Summary

Problems solved by technology

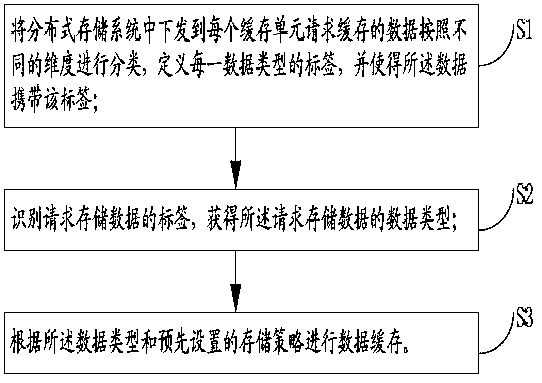

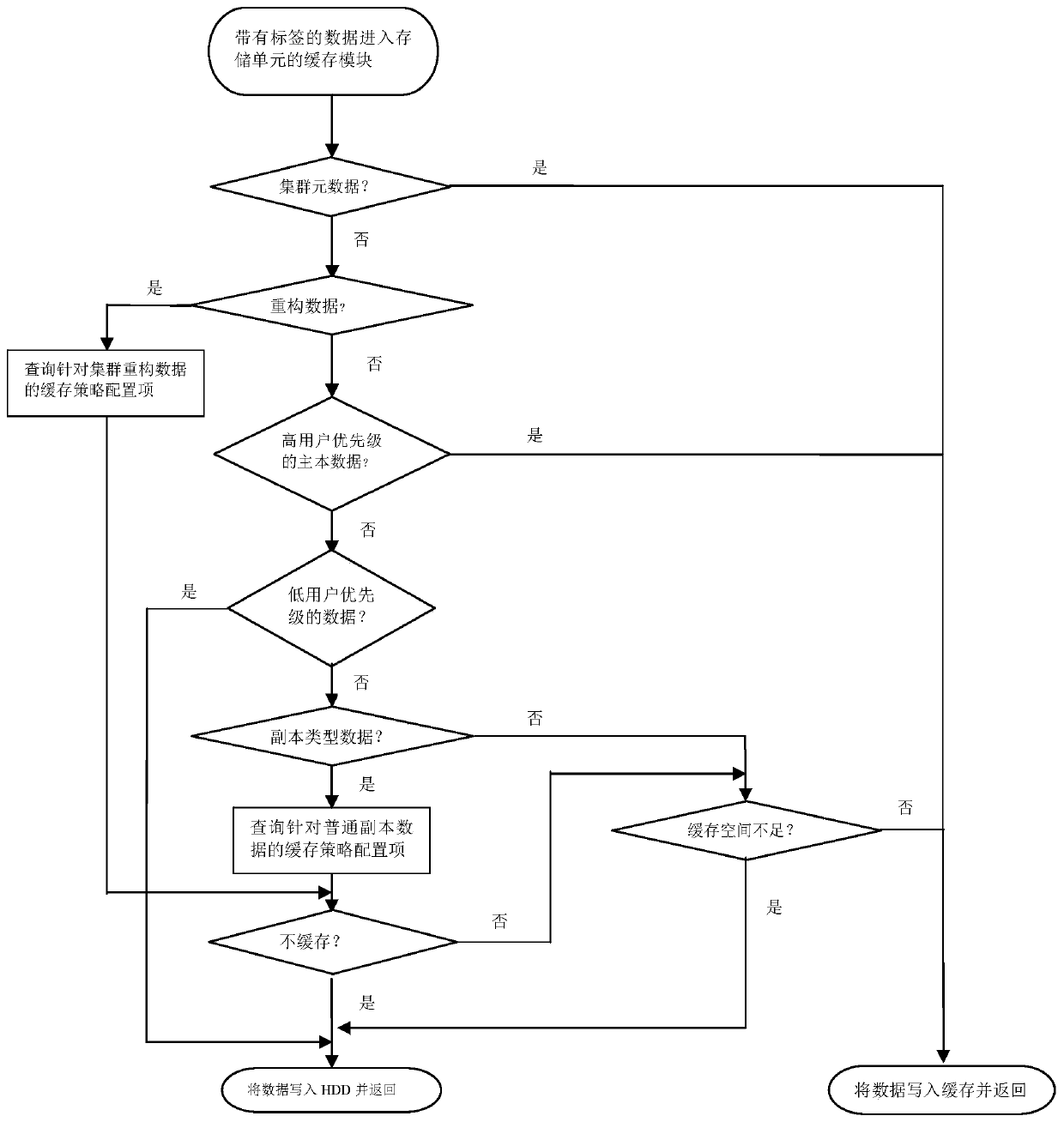

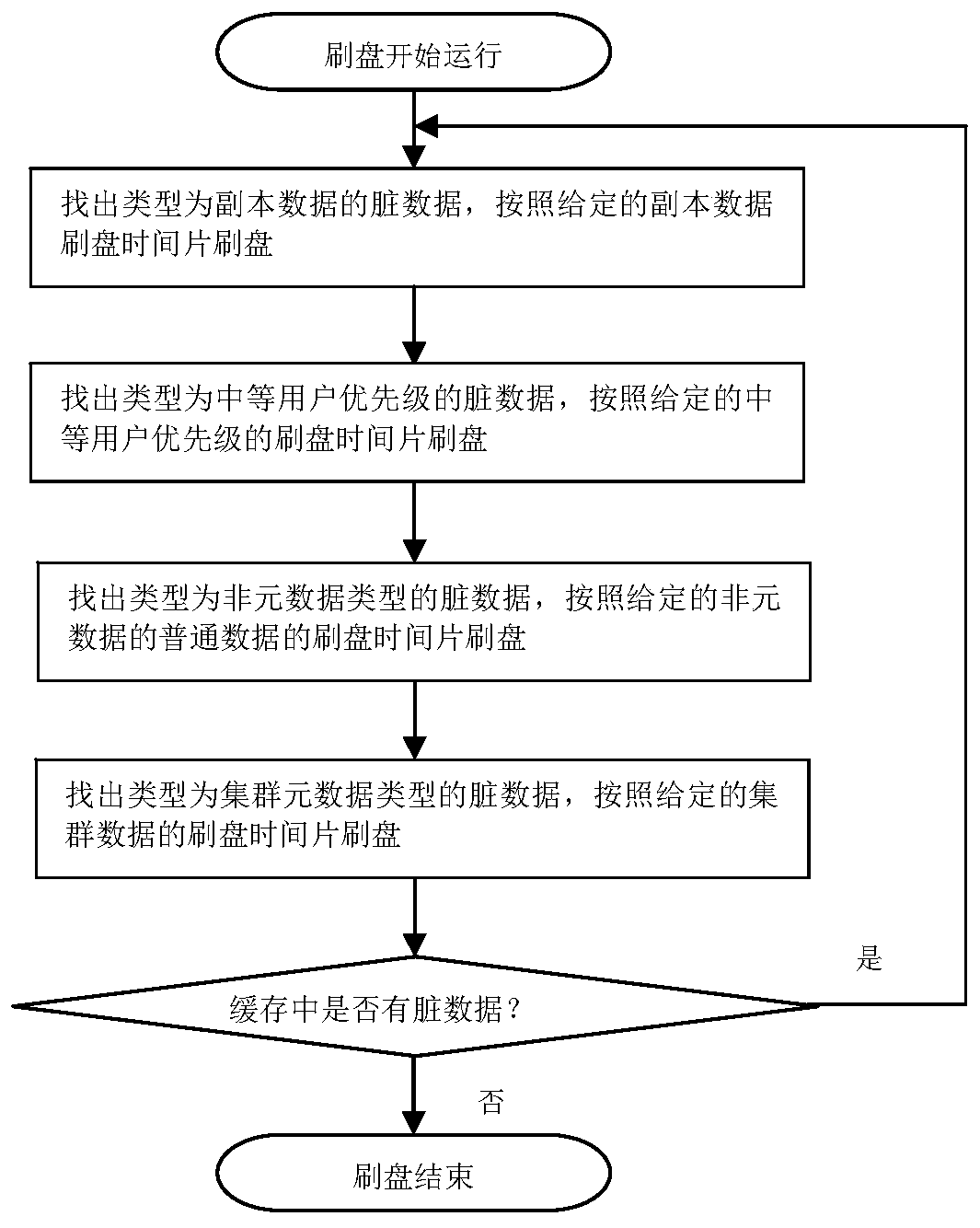

Method used

Image

Examples

Embodiment Construction

[0036] The following descriptions of various embodiments refer to the accompanying drawings to illustrate specific embodiments in which the present invention can be implemented.

[0037] Storage systems commonly use caching to improve performance. Caches are found throughout computer systems, for example between the CPU and main memory, and between main memory and external hard disks. A cache typically has a small capacity but is faster than the low-speed device it sits in front of. Placing a cache in the system speeds up reads from and writes to the low-speed device and improves the performance of the whole system.
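
The basic read path of such a cache can be sketched as a read-through cache: on a hit the fast tier serves the data, on a miss the slow device is read and a copy is kept in the fast tier. This is a minimal illustration, not the method of this patent; the class `ReadThroughCache`, the function `hdd_read`, and the integer block IDs are hypothetical, and capacity is treated as unbounded so that eviction is left out.

```python
from typing import Callable, Dict, List


class ReadThroughCache:
    """Hypothetical read-through cache in front of a slow backing device.

    Capacity is unbounded here, so nothing is ever swapped out.
    """

    def __init__(self, backing_read: Callable[[int], bytes]) -> None:
        self._backing_read = backing_read  # assumed slow-device read (e.g. HDD)
        self._store: Dict[int, bytes] = {}  # assumed fast tier (e.g. SSD)
        self.hits = 0
        self.misses = 0

    def read(self, block_id: int) -> bytes:
        if block_id in self._store:
            # Hit: served from the fast tier; the slow device is not touched.
            self.hits += 1
            return self._store[block_id]
        # Miss: read from the slow device, then keep a copy in the cache.
        self.misses += 1
        data = self._backing_read(block_id)
        self._store[block_id] = data
        return data


# Usage: record which block reads actually reach the slow device.
slow_reads: List[int] = []


def hdd_read(block_id: int) -> bytes:
    slow_reads.append(block_id)
    return f"block-{block_id}".encode()


cache = ReadThroughCache(hdd_read)
for block in [1, 2, 1, 1, 3, 2]:
    cache.read(block)

print(cache.hits, cache.misses, slow_reads)  # 3 3 [1, 2, 3]
```

Six reads reach the slow device only three times; the repeated reads of blocks 1 and 2 are absorbed by the fast tier.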

[0038] Because the cache is much smaller than the low-speed device, data must be swapped into and out of the cache. Taking an HDD as the low-speed device and a small SSD as the cache as an example, the data read from the HDD and stored on the SSD is the same as th...
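
Swapping data in and out of a fixed-size cache, and the cache pollution that the summary says this method aims to avoid, can be illustrated with least-recently-used (LRU) replacement. LRU is a common baseline chosen here as an assumption; the replacement policy actually claimed in this patent is not shown in this excerpt. The class `LRUCache`, the capacity of 3, and the block IDs are hypothetical.

```python
from collections import OrderedDict
from typing import Callable, List


class LRUCache:
    """Hypothetical fixed-capacity cache: swap in on a miss, swap out the
    least recently used block when full (assumed baseline policy)."""

    def __init__(self, capacity: int, backing_read: Callable[[int], bytes]) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._backing_read = backing_read
        self._store: "OrderedDict[int, bytes]" = OrderedDict()  # oldest first
        self.hits = 0
        self.misses = 0
        self.evicted: List[int] = []

    def read(self, block_id: int) -> bytes:
        if block_id in self._store:
            self.hits += 1
            self._store.move_to_end(block_id)  # mark as most recently used
            return self._store[block_id]
        self.misses += 1
        data = self._backing_read(block_id)
        self._store[block_id] = data  # swap in
        if len(self._store) > self.capacity:
            victim, _ = self._store.popitem(last=False)  # swap out the LRU block
            self.evicted.append(victim)
        return data

    def contents(self) -> List[int]:
        return list(self._store)


cache = LRUCache(capacity=3, backing_read=lambda b: f"block-{b}".encode())

# A small hot working set is read repeatedly and fits in the cache.
for block in [1, 2, 3, 1, 2, 3]:
    cache.read(block)
hot_hits = cache.hits  # 3

# A one-off sequential scan of cold blocks pushes the whole hot set out.
for block in [100, 101, 102, 103]:
    cache.read(block)

print(cache.contents())  # [101, 102, 103]
print(cache.evicted)     # [1, 2, 3, 100]
```

After the scan, the cache holds only blocks that were read once, and the next reads of the hot blocks 1, 2 and 3 all miss. This is the kind of pollution a smarter admission or replacement policy tries to prevent.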
