Emotion recognition method and device based on multi-modal emotion model

An emotion recognition technology based on multi-modal information, applied in character and pattern recognition, neural learning methods, biological neural network models, etc. It addresses problems such as susceptibility to interference, incomplete single-modal information, and untimely feedback, and achieves more complete information.


Detailed Description of Embodiments

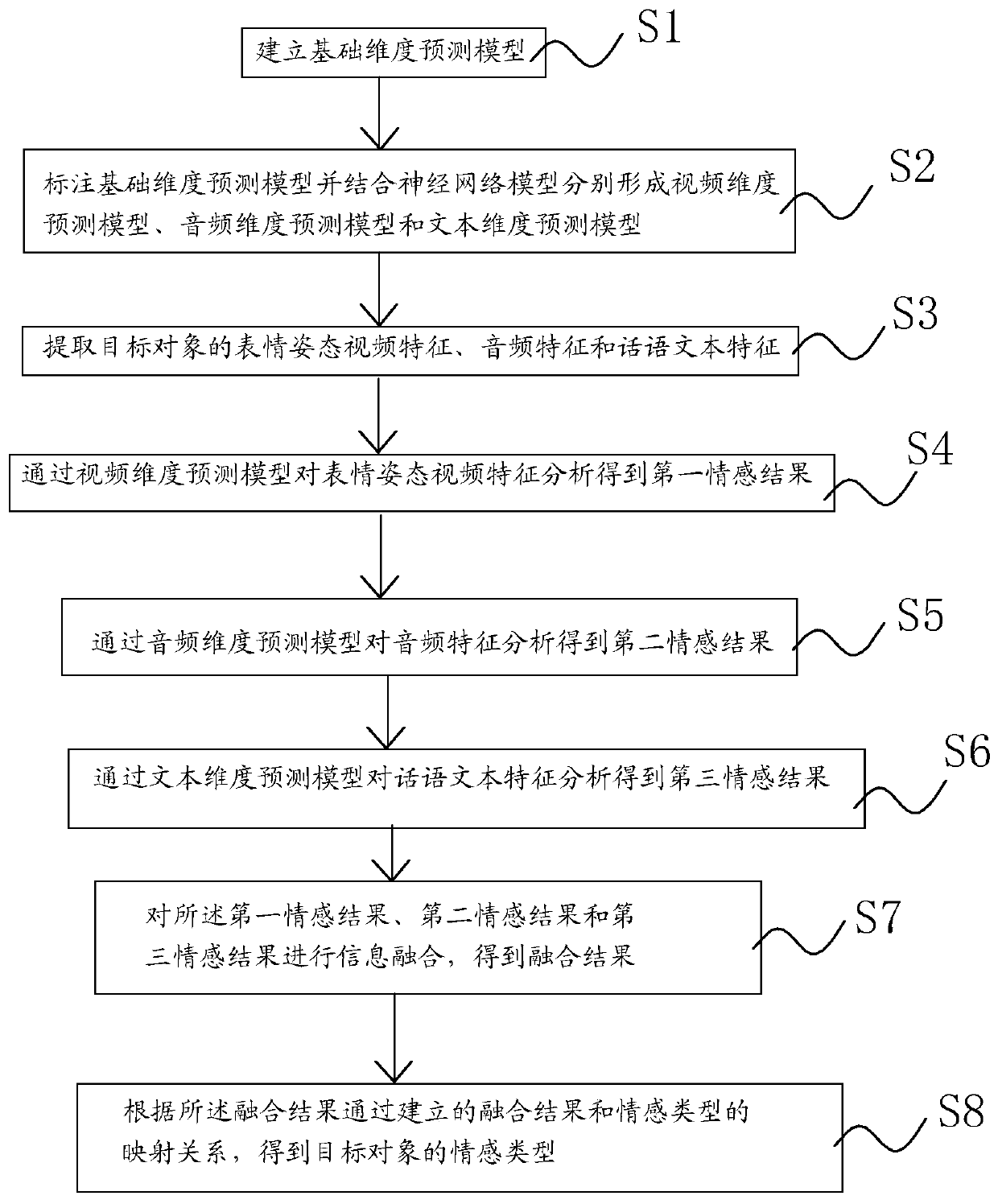

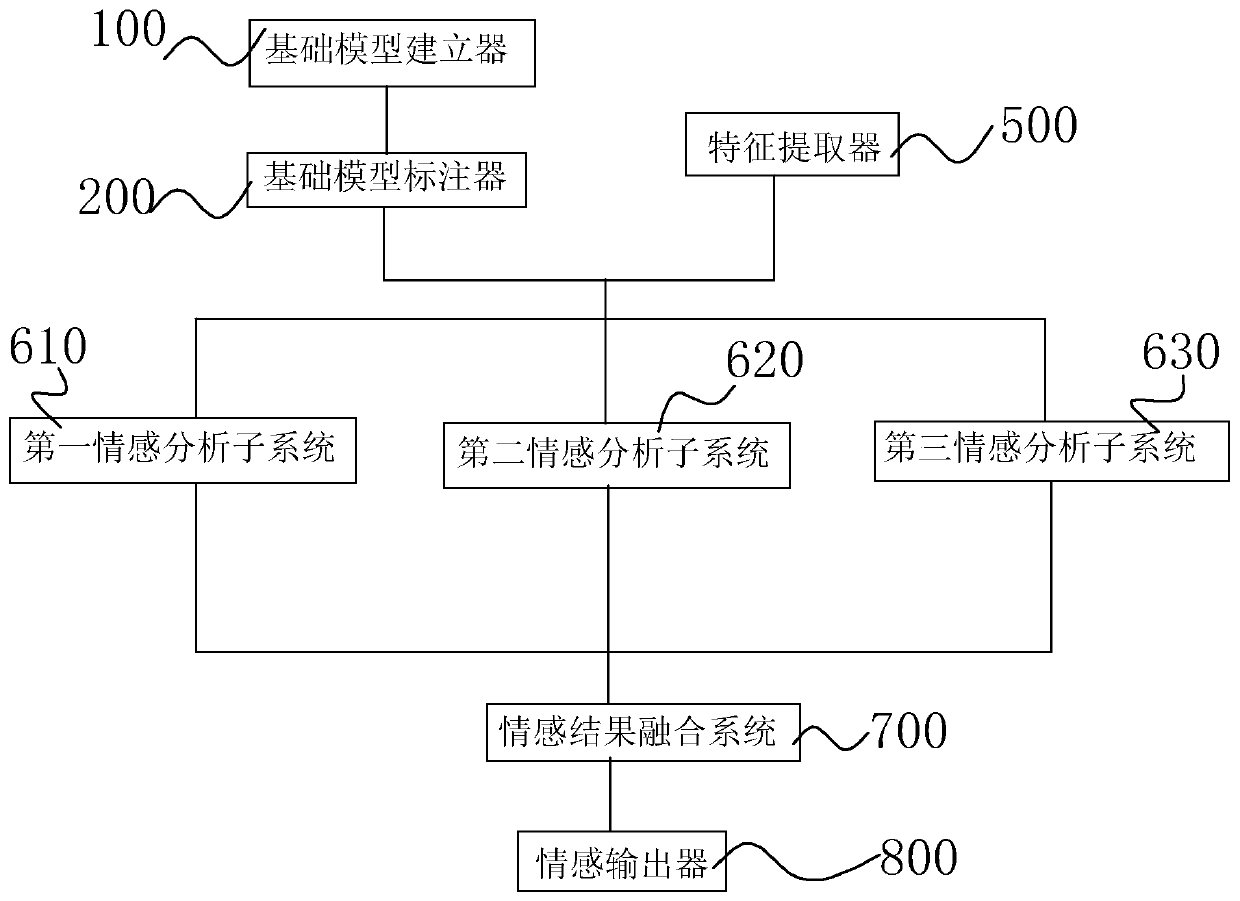

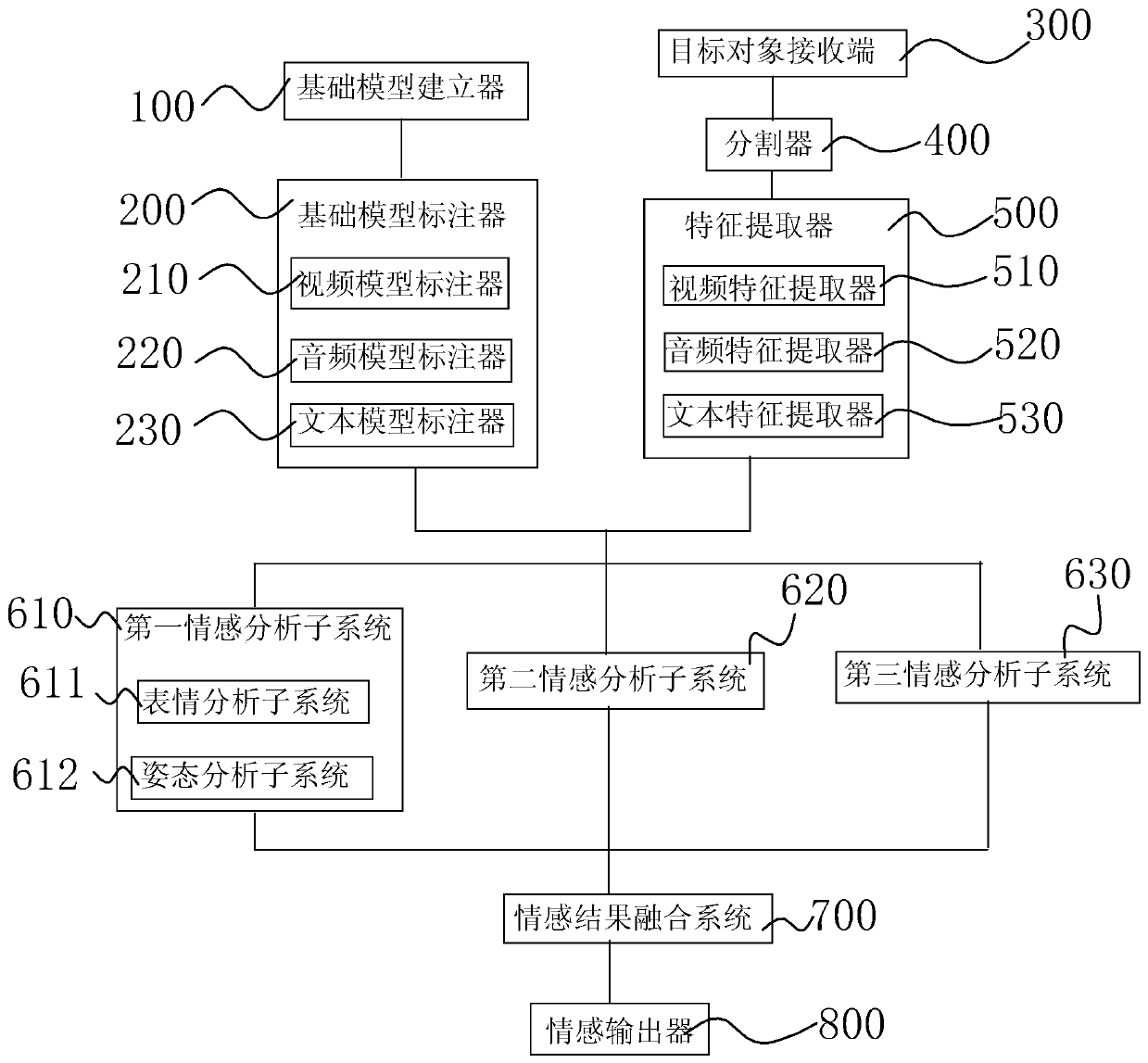

[0022] Referring to Figure 1, a first aspect of the present invention provides an emotion recognition method based on a multimodal emotion model, characterized in that it comprises the following steps:

[0023] S1. Establish a basic dimension prediction model;

[0024] S2. Label the basic dimension prediction model and combine it with a neural network model to form, respectively, a video dimension prediction model, an audio dimension prediction model, and a text dimension prediction model;

[0025] S3. Extract the facial expression and posture video features, the audio features, and the speech text features of the target object;

[0026] S4. Obtain the first emotion result by analyzing the facial expression and posture video features through the video dimension prediction model;

[0027] S5. Obtain a second emotion result by analyzing the audio features through the audio dimension prediction model;

[0028] S6. Obtain a third emotion result by analyzing the characteristics of the utt...
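The excerpt does not specify the model architecture, which emotion dimensions are predicted, or how the three emotion results are combined (that part falls in the truncated text). The Python below is a minimal sketch of the S1 to S6 flow under stated assumptions: a linear scorer stands in for each dimension prediction model, the dimensions are assumed to be valence and arousal, and `average_fusion` is a hypothetical plain average that is not taken from the patent. All class and function names are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence

# ASSUMPTION: the excerpt does not name the predicted dimensions.
DIMENSIONS = ("valence", "arousal")


@dataclass
class DimensionPredictionModel:
    """Hypothetical stand-in for a dimension prediction model (S1/S2):
    a linear scorer producing one score per emotion dimension."""
    weights: Dict[str, List[float]]  # one weight vector per dimension
    bias: Dict[str, float]           # one bias term per dimension

    def predict(self, features: Sequence[float]) -> Dict[str, float]:
        scores = {}
        for dim in DIMENSIONS:
            w = self.weights[dim]
            if len(w) != len(features):
                raise ValueError(f"expected {len(w)} features, got {len(features)}")
            scores[dim] = sum(wi * xi for wi, xi in zip(w, features)) + self.bias[dim]
        return scores


def recognize(video_feats, audio_feats, text_feats,
              models: Dict[str, DimensionPredictionModel]) -> Dict[str, Dict[str, float]]:
    """S4-S6: run each modality's model on the features extracted in S3."""
    return {
        "video": models["video"].predict(video_feats),  # first emotion result
        "audio": models["audio"].predict(audio_feats),  # second emotion result
        "text": models["text"].predict(text_feats),     # third emotion result
    }


def average_fusion(results: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """HYPOTHETICAL late fusion by plain averaging; not described in the excerpt."""
    n = len(results)
    return {dim: sum(r[dim] for r in results.values()) / n for dim in DIMENSIONS}
```

Keeping one model object per modality mirrors S2, where a video, an audio, and a text model are each derived from the same basic model; in a real system each `predict` would be a trained neural network rather than a linear scorer.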
