Image-text cross-modal retrieval based on a multi-level semantic deep hashing algorithm

A hashing algorithm and cross-modal technique, applied in the field of cross-modal retrieval. It addresses two problems that degrade retrieval results: real data often carries multiple labels, and the associations between data items cannot be fully preserved. The effect is improved retrieval accuracy.

Embodiment Construction

[0018] The present invention is further described below with reference to a specific example.

[0019] In the present invention, the image and text modalities are taken as the example for discussion.

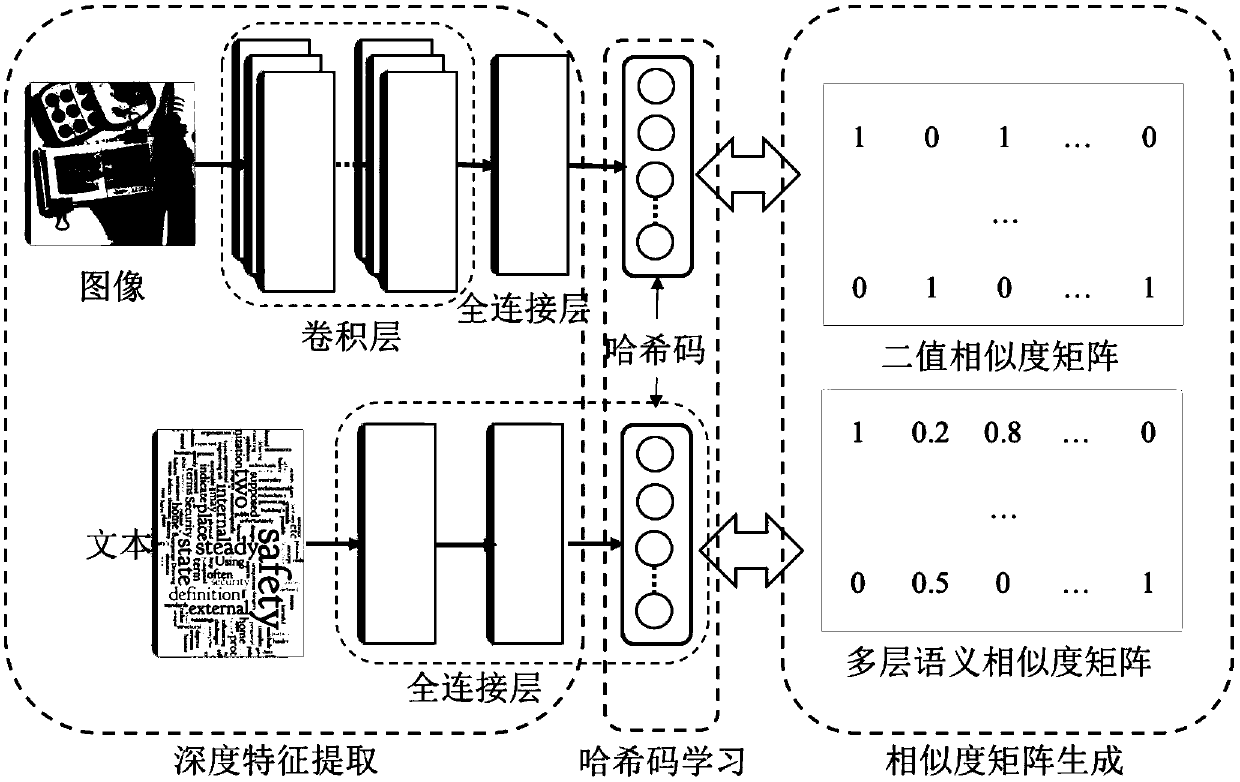

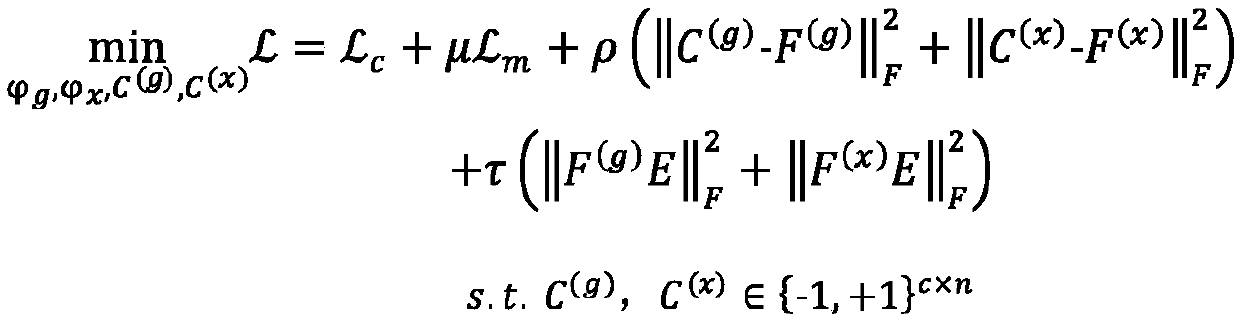

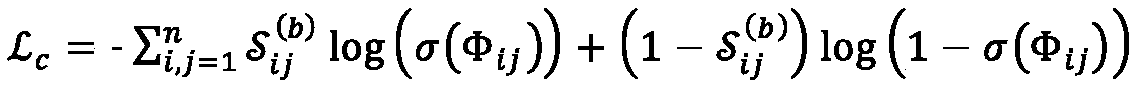

[0020] The present invention provides an image-text cross-modal retrieval method based on a multi-level semantic deep hashing algorithm (Deep Multi-Level Semantic Hashing for Cross-modal Retrieval, DMSH). As shown in Figure 1, the method comprises three modules: a deep feature extraction module, a similarity matrix generation module, and a hash code learning module.
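The roles of the similarity matrix generation module and the hash code learning module can be illustrated with a minimal sketch. This excerpt does not state how DMSH computes its similarity matrix or its hash codes, so everything below is an assumption made for illustration: similarity is taken as the cosine similarity between binary multi-label vectors (one common way to obtain graded rather than 0/1 similarity), hash codes are obtained by taking the sign of real-valued network outputs, and the function names and sample values are hypothetical.

```python
import math


def label_similarity(labels_a, labels_b):
    """Cosine similarity between two binary multi-label vectors.

    Assumption: this is one possible graded similarity measure,
    not necessarily the one defined in the patent.
    """
    dot = sum(a * b for a, b in zip(labels_a, labels_b))
    norm_a = math.sqrt(sum(a * a for a in labels_a))
    norm_b = math.sqrt(sum(b * b for b in labels_b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an item without labels is treated as dissimilar to everything
    return dot / (norm_a * norm_b)


def similarity_matrix(labels):
    """Pairwise similarity matrix over all items in the label list."""
    return [[label_similarity(x, y) for y in labels] for x in labels]


def to_hash_code(outputs):
    """Binarize real-valued outputs to a {-1, +1} code via the sign function."""
    return [1 if v >= 0 else -1 for v in outputs]


def hamming_distance(code_a, code_b):
    """Number of positions in which two equal-length codes differ."""
    return sum(1 for a, b in zip(code_a, code_b) if a != b)


# Hypothetical multi-label annotations for three items.
labels = [
    [1, 1, 0],  # item 0 carries labels 0 and 1
    [1, 0, 0],  # item 1 carries label 0 only
    [0, 0, 1],  # item 2 carries label 2 only
]
S = similarity_matrix(labels)

# Hypothetical real-valued outputs standing in for the image and text networks.
image_code = to_hash_code([0.3, -1.2, 0.8, -0.1])
text_code = to_hash_code([0.5, -0.4, -0.9, -0.2])
distance = hamming_distance(image_code, text_code)
```

With graded similarity, items 0 and 1, which share one of two labels, receive a value between 0 and 1 instead of being forced into "similar" or "dissimilar". At retrieval time, a query code from one modality would be compared against stored codes from the other modality by Hamming distance.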

[0021] Table 1 Image feature extraction network structure

[0022]

[0023]

[0024] The deep feature extraction module uses deep neural networks to extract features from the image and text data. For image feature extraction, the CNN-F deep convolutional neural network is used; its configuration is shown in Table 1. In the text feature extraction stage, the text data is first modeled...
