High dynamic range image tone mapping method and system based on deep learning

A high dynamic range (HDR) image tone mapping technology in the field of computer vision. It addresses the tendency of existing methods to lose a large amount of detail and local image contrast, and it solves boundary problems.


Examples

Specific Embodiment 1

[0130] The first embodiment is applied to high dynamic range image tone mapping.

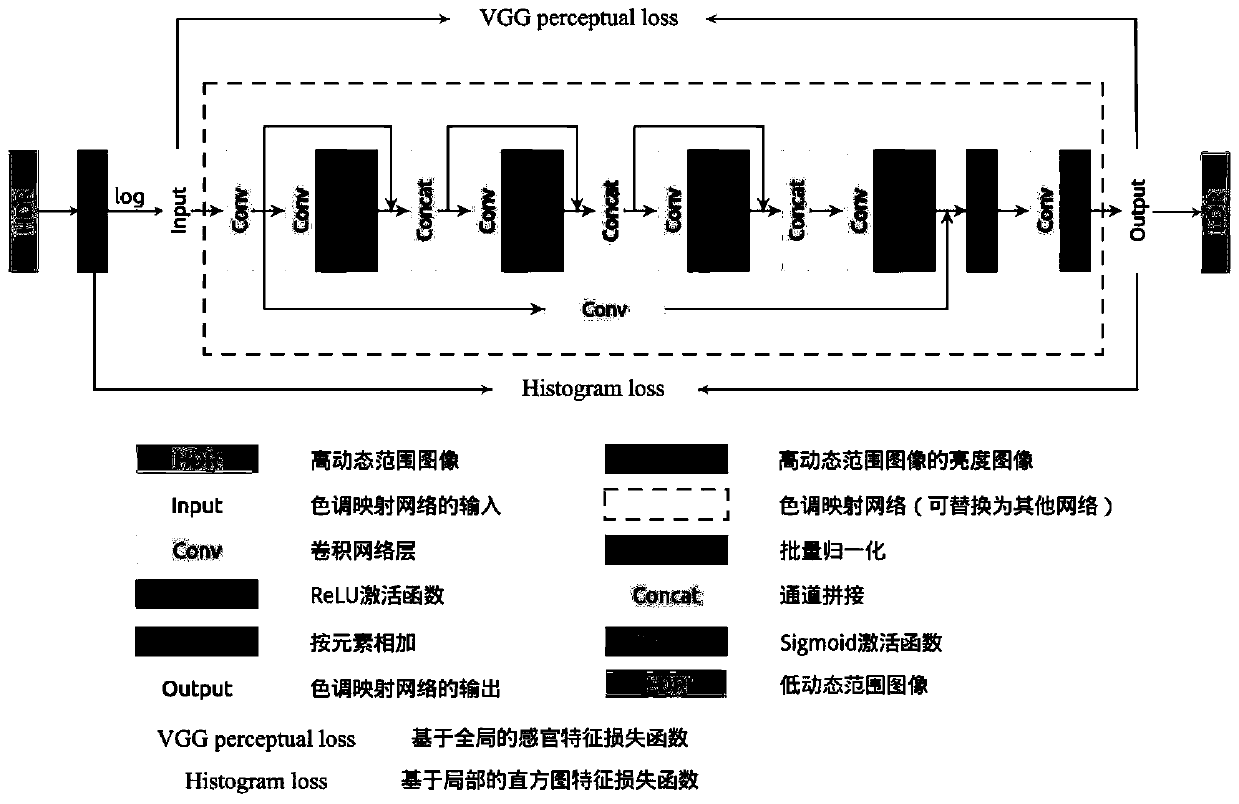

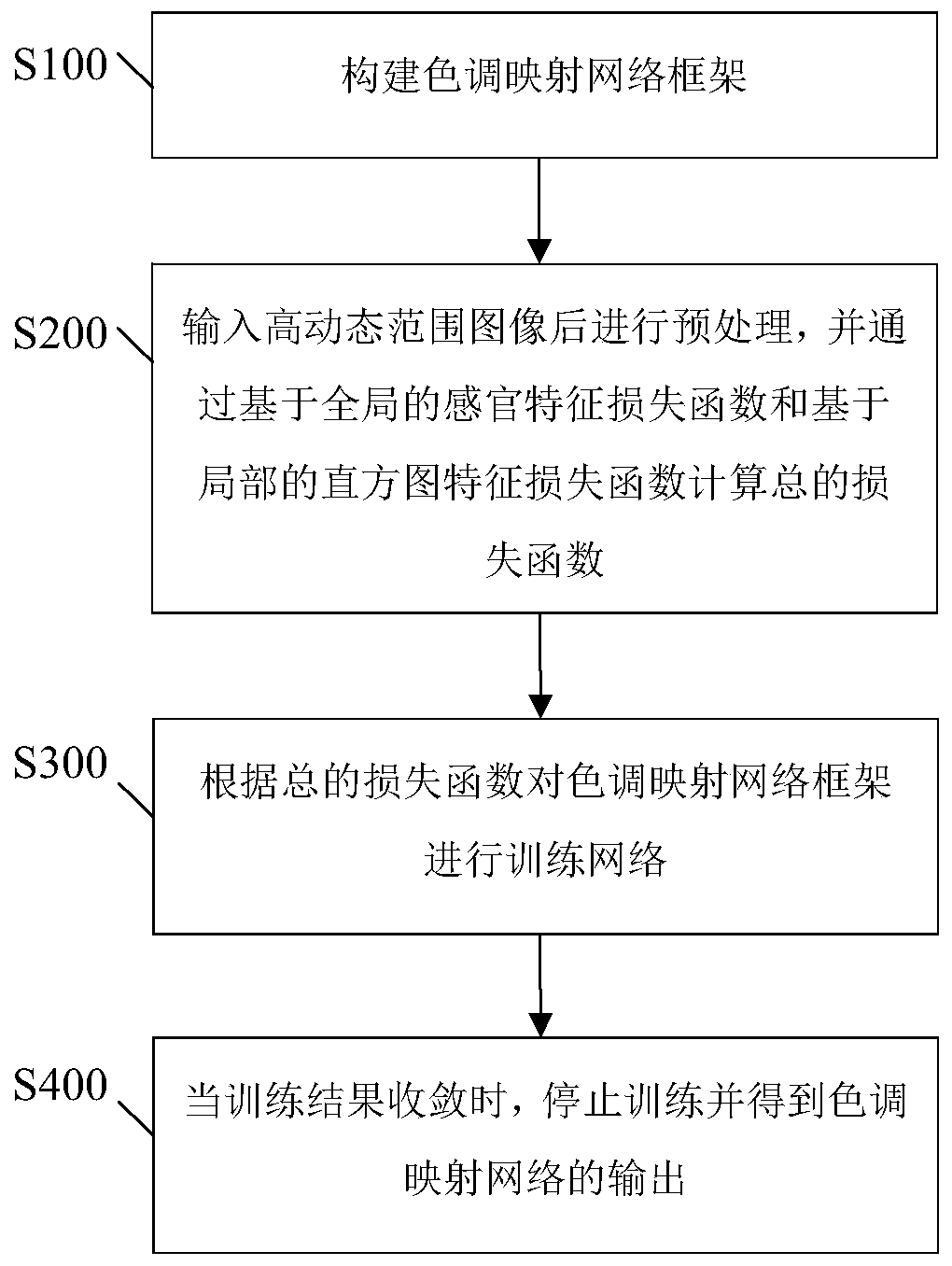

[0131] ① Set up the tone mapping network. The network structure is the part of the framework from Input to Output in Figure 1. Its input is single-channel data, and its output is also single-channel data.

[0132] ② Set up the networks that compute image features. VGGNet computes deep perceptual features of the image, and LHN computes image histogram features.

[0133] (a) VGGNet takes 3-channel input, but the tone mapping network outputs only 1 channel, so that output is replicated three times along the channel dimension to form the VGGNet input.

[0134] (b) LHN takes 1-channel input. The output of the tone mapping network is divided evenly into 15×15 = 225 small regions, whose length and width are each 1/15 of the length and width of the output of the tone mapping networ...
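The two preprocessing steps in (a) and (b) can be sketched as follows. This is a minimal illustration, not the patent's implementation: images are assumed to be nested lists of floats (H rows of W values), the function names are hypothetical, and H and W are assumed to be divisible by 15, since the source does not say how other sizes are handled.

```python
from typing import List

Channel = List[List[float]]  # one channel, stored as H rows of W values


def stack_to_three_channels(y: Channel) -> List[Channel]:
    """Repeat a 1-channel map three times along the channel axis,
    giving a (3, H, W) nested list suitable for a 3-channel network such as VGGNet."""
    return [[row[:] for row in y] for _ in range(3)]


def split_into_regions(y: Channel, n: int = 15) -> List[Channel]:
    """Split an H x W map into an n x n grid of equal regions, in row-major order.

    Assumption: H and W are divisible by n; other sizes are rejected here.
    """
    h, w = len(y), len(y[0])
    if h % n or w % n:
        raise ValueError(f"{h}x{w} cannot be split into an even {n}x{n} grid")
    rh, rw = h // n, w // n  # each region is 1/n of the height and width
    return [
        [row[j * rw:(j + 1) * rw] for row in y[i * rh:(i + 1) * rh]]
        for i in range(n)
        for j in range(n)
    ]
```

With a 30×45 output, `split_into_regions` returns 225 regions of 2×3 values each, and each region would then be fed to the LHN. In a PyTorch pipeline, a `(N, 1, H, W)` tensor can be replicated to three channels with `tensor.repeat(1, 3, 1, 1)`.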

Specific Embodiment 2

[0156] The second embodiment is applied to the enhancement of ordinary low-light images and does not involve ground truth.

[0157] The difference from the first embodiment is that in ②, the output of the tone mapping network is not divided evenly into small regions but is used directly as the input of the LHN. All other operations are the same as in Embodiment 1.
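The source does not describe the internals of the LHN. As a stand-in only, the sketch below computes a soft histogram with triangular kernels, one common way to make histogram counting differentiable. Each bin k has a fixed centre `(k + 0.5) / bins`, and each value contributes `max(0, 1 - |x - centre| / width)` to every bin, where `width = 1 / bins`. In a learnable histogram, the centres and widths would be trained parameters; here they are fixed. The [0, 1] value range, the 16-bin default, and the function name are all assumptions.

```python
from typing import List, Sequence


def soft_histogram(values: Sequence[float], bins: int = 16) -> List[float]:
    """Triangular-kernel soft histogram of values assumed to lie in [0, 1].

    Stand-in for the LHN (assumption, not the patent's network). Bin centres
    and kernel width are fixed here; a learnable version would train them.
    """
    if not values:
        raise ValueError("values must be non-empty")
    width = 1.0 / bins
    centres = [(k + 0.5) * width for k in range(bins)]
    hist = [0.0] * bins
    for x in values:
        for k, c in enumerate(centres):
            # A value adds weight to a bin that falls off linearly with distance,
            # reaching zero one bin spacing away from the centre.
            hist[k] += max(0.0, 1.0 - abs(x - c) / width)
    n = len(values)
    return [h / n for h in hist]  # normalise by the number of values
```

For Embodiment 2 the whole output map would be flattened first, for example `soft_histogram([v for row in y for v in row])`. For any value lying between the first and last bin centres, the kernel weights sum to 1, so the histogram also sums to 1.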

[0158] Also unlike Embodiment 1, color compensation is performed in ⑥: the Cr and Cb channels of the original low-light image are combined with the output of the tone mapping network to form a complete three-channel YCbCr image, which is then converted back to the RGB color space to obtain the final result.
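The color compensation step can be sketched per pixel as follows. This sketch makes several assumptions. The network output is treated as the Y (luma) channel. Values are treated as 8-bit and full-range. The full-range ITU-R BT.601 conversion used by JPEG/JFIF is applied. The patent does not state which YCbCr convention it uses, so the coefficients below are an assumption, and the function names are hypothetical.

```python
from typing import List, Tuple

Channel = List[List[float]]  # H rows of W values
RGB = Tuple[float, float, float]


def _clamp(v: float) -> float:
    """Keep a component within the 8-bit range [0, 255]."""
    return max(0.0, min(255.0, v))


def ycbcr_to_rgb(y: float, cb: float, cr: float) -> RGB:
    """Full-range BT.601 (JPEG/JFIF) YCbCr -> RGB; the convention is an assumption."""
    r = y + 1.402 * (cr - 128.0)
    g = y - 0.344136 * (cb - 128.0) - 0.714136 * (cr - 128.0)
    b = y + 1.772 * (cb - 128.0)
    return _clamp(r), _clamp(g), _clamp(b)


def color_compensate(y_out: Channel, cb_orig: Channel, cr_orig: Channel) -> List[List[RGB]]:
    """Combine the enhanced Y channel with the original image's Cb and Cr, then convert to RGB."""
    return [
        [ycbcr_to_rgb(y, cb, cr) for y, cb, cr in zip(y_row, cb_row, cr_row)]
        for y_row, cb_row, cr_row in zip(y_out, cb_orig, cr_orig)
    ]
```

A neutral pixel (Cb = Cr = 128) maps to equal R, G and B values, so only the brightness produced by the network changes while the original chroma is reused.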

Specific Embodiment 3

[0160] The third embodiment is applied to the enhancement of ordinary low-light images and involves ground truth.

[0161] ① Set up the image enhancement network. It suffices to modify the tone mapping network of the first embodiment to take 3-channel input and produce 3-channel output.

[0162] ② Set up the networks that compute image features. VGGNet computes deep perceptual features of the image, and LHN computes image histogram features. The output of the image enhancement network is used directly as the input of VGGNet, and each channel of that output is fed to a separate LHN.

[0163] ③ Define how the loss function is calculated.

[0164] $L_{VGG} = \lVert T_{VGG}(O) - T_{VGG}(I) \rVert_2$

[0165] $L_{Histogram} = \lVert T_{LHN}(O_R) - GTH_R \rVert_1 + \lVert T_{LHN}(O_G) - GTH_G \rVert_1 + \lVert T_{LHN}(O_B) - GTH_B \rVert_1$

[0166] $L_{total} = L_{VGG} + L_{Histogram}$

[0167] Here, $L_{Histogram}$ represents the histogram feature los...
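The three loss formulas in [0164] to [0166] can be evaluated as in the sketch below. This is a minimal illustration, not the patent's training code. The VGG features and the three per-channel LHN outputs are assumed to be computed already and flattened to lists of floats, and the networks themselves are not reproduced. Because the source's definitions of $I$ and $GTH_c$ are truncated, they are simply passed in as given inputs. The function names are hypothetical.

```python
import math
from typing import Sequence


def _check_same_length(a: Sequence[float], b: Sequence[float]) -> None:
    if len(a) != len(b):
        raise ValueError(f"length mismatch: {len(a)} vs {len(b)}")


def vgg_loss(feat_o: Sequence[float], feat_i: Sequence[float]) -> float:
    """L_VGG = ||T_VGG(O) - T_VGG(I)||_2 (unsquared Euclidean norm) on flattened features."""
    _check_same_length(feat_o, feat_i)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(feat_o, feat_i)))


def histogram_loss(hist_o: Sequence[Sequence[float]], gth: Sequence[Sequence[float]]) -> float:
    """L_Histogram = sum over c in (R, G, B) of ||T_LHN(O_c) - GTH_c||_1.

    hist_o[c] is the output of the LHN for channel c (each channel has its own LHN);
    gth[c] is the corresponding ground-truth histogram feature GTH_c.
    """
    if len(hist_o) != 3 or len(gth) != 3:
        raise ValueError("expected one histogram per R, G, B channel")
    total = 0.0
    for h, g in zip(hist_o, gth):
        _check_same_length(h, g)
        total += sum(abs(a - b) for a, b in zip(h, g))
    return total


def total_loss(
    feat_o: Sequence[float],
    feat_i: Sequence[float],
    hist_o: Sequence[Sequence[float]],
    gth: Sequence[Sequence[float]],
) -> float:
    """L_total = L_VGG + L_Histogram, an unweighted sum as written in [0166]."""
    return vgg_loss(feat_o, feat_i) + histogram_loss(hist_o, gth)
```

As a worked example, VGG features `[3.0, 0.0]` and `[0.0, 4.0]` give $L_{VGG} = \sqrt{9 + 16} = 5$. If only the G histogram differs, with `[1.0, 0.0]` against `[0.0, 1.0]`, then $L_{Histogram} = 2$, so $L_{total} = 7$.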
