Motion capture-based virtual reality sign language learning, testing and evaluating method

A motion capture and virtual reality technology, applicable to data processing input/output, user/computer interaction input/output, image data processing and capture technology, that achieves accurate sign language movements, convenient production and cost savings.


Embodiment Construction

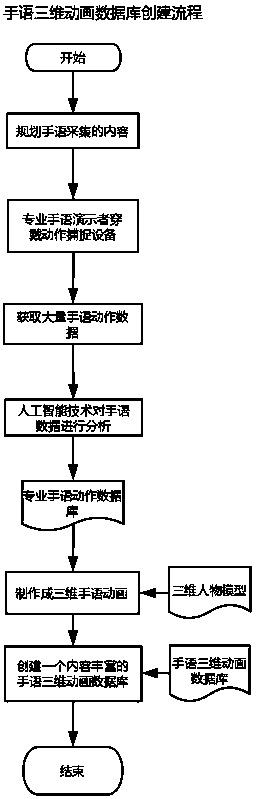

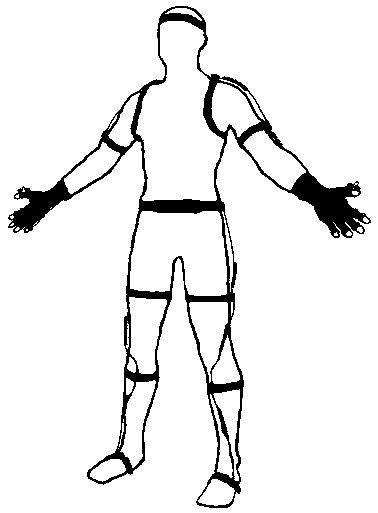

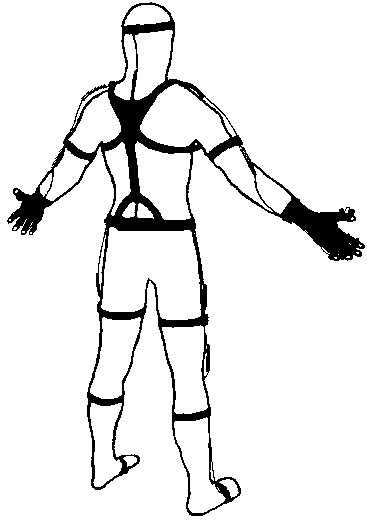

[0032] As shown in Figures 1-4, the motion capture-based virtual reality sign language learning, testing and evaluation method uses a motion comparison device to convert sign language conversation scenes into data. The motion comparison device comprises an inertial motion capture device, a data collector and a data comparison device. The inertial motion capture device comprises a plurality of data sensors, each of which is bound and affixed to a body part of a conversation participant. While the sign language conversation is performed, the data sensors record the spatial movement information of the participants' body parts, forming conversation scene data. The conversation scene data are then used to build a virtual reality scene on a 3D scene development platform, and sign language students learn sign language through that virtual reality scene.
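The data path described in [0032] (sensors bound to body parts, samples collected into conversation scene data, and a data comparison device) can be illustrated with a minimal Python sketch. The patent does not specify the sensor output format or how the comparison is computed, so everything below is an assumption: each sample is taken to be a unit quaternion orientation for one body part at one timestamp, samples are grouped by participant and body part, and the hypothetical `mean_deviation` score compares a learner's track with a reference track by the average per-frame rotation angle. The names `SensorSample`, `SceneData`, `angle_between` and `mean_deviation` are illustrative only.

```python
import math
from dataclasses import dataclass, field


# Hypothetical sample format (assumption): the source does not say what the
# inertial sensors output, so each sample is modelled as a unit quaternion
# (w, x, y, z) giving the orientation of one body part at one instant.
@dataclass
class SensorSample:
    timestamp: float    # seconds since the start of the recording
    participant: str    # conversation participant, e.g. "A" or "B"
    body_part: str      # body part the sensor is bound to
    orientation: tuple  # unit quaternion (w, x, y, z)


@dataclass
class SceneData:
    """Conversation scene data: samples grouped by (participant, body_part)."""
    tracks: dict = field(default_factory=dict)

    def record(self, sample: SensorSample) -> None:
        # Append the sample to the track of its participant and body part.
        key = (sample.participant, sample.body_part)
        self.tracks.setdefault(key, []).append(sample)


def angle_between(q1: tuple, q2: tuple) -> float:
    """Smallest rotation angle in radians between two unit quaternions."""
    # abs() because q and -q describe the same rotation.
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    dot = min(1.0, dot)  # guard against rounding slightly above 1
    return 2.0 * math.acos(dot)


def mean_deviation(reference: list, attempt: list) -> float:
    """Hypothetical comparison score: mean per-frame rotation angle.

    Assumes both tracks are already time-aligned and sampled at the same
    rate; frames beyond the shorter track are ignored.
    """
    n = min(len(reference), len(attempt))
    if n == 0:
        raise ValueError("cannot compare an empty track")
    total = sum(
        angle_between(reference[i].orientation, attempt[i].orientation)
        for i in range(n)
    )
    return total / n
```

A lower `mean_deviation` would indicate that the learner's movement is closer to the reference signer's. A practical system would also need proper time alignment (for example resampling or dynamic time warping) before a frame-by-frame comparison; this sketch simply truncates to the shorter track.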

[0033] The conversation scene can be a conversation scene involving more than ...
