Image annotation method and device and storage medium

An image labeling technology, applied in the field of image labeling methods, devices, and storage media. It addresses the heavy labeling noise that results from subjective manual scoring of face images, which is unfavorable to model training.

Embodiment Construction

[0033] Embodiments of the present application are described below in conjunction with the accompanying drawings.

[0034] At present, face images are mostly labeled by having an annotator manually score one face image at a time. This process is highly subjective, so training samples labeled in this way contain a lot of labeling noise, which is not conducive to model training.

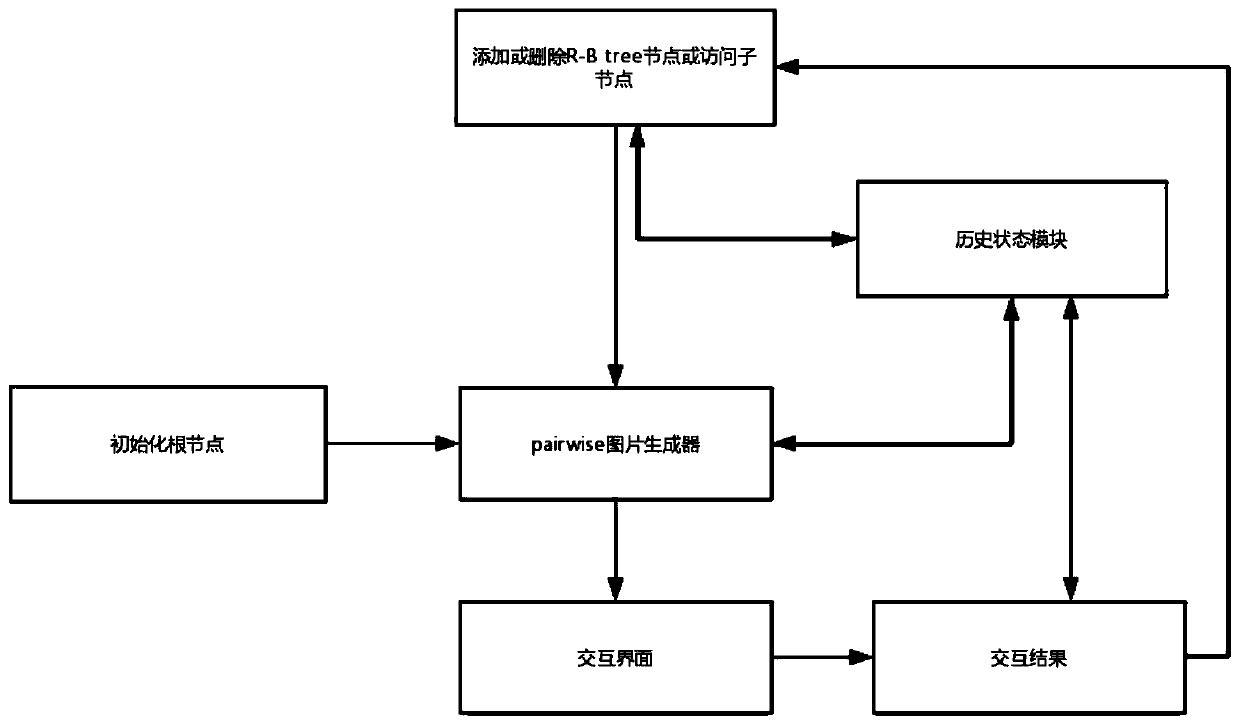

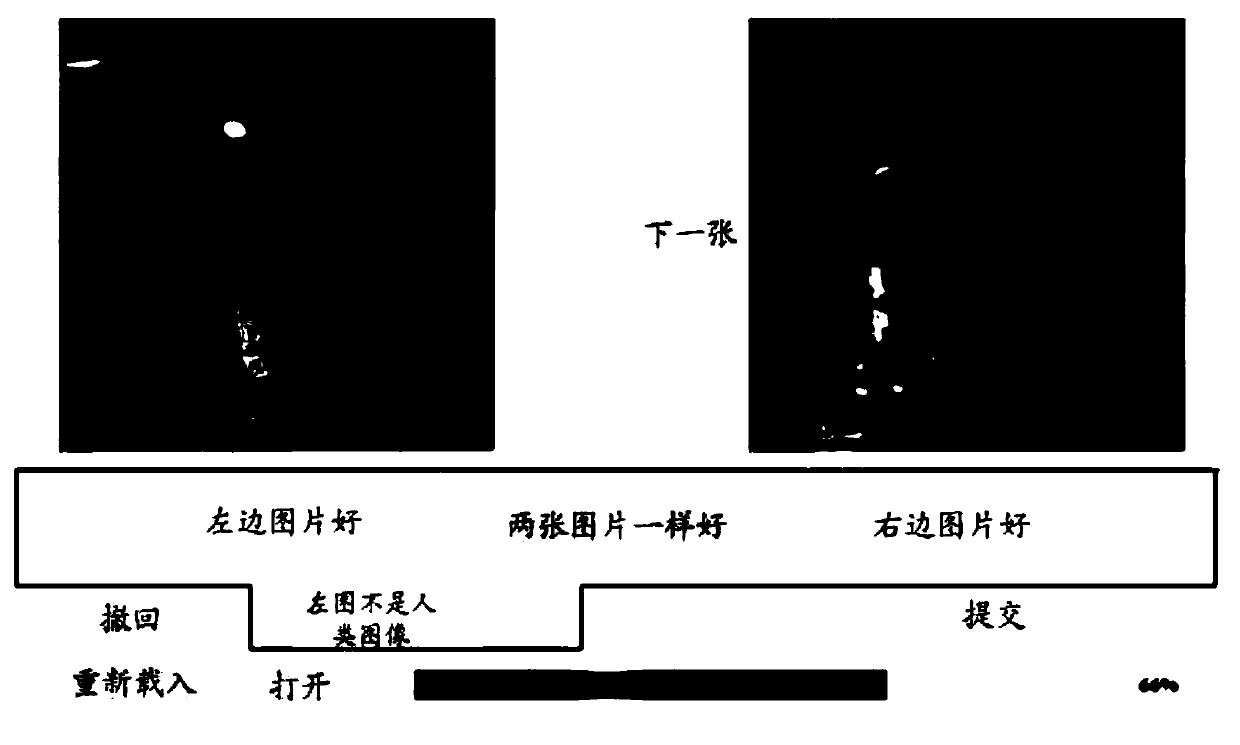

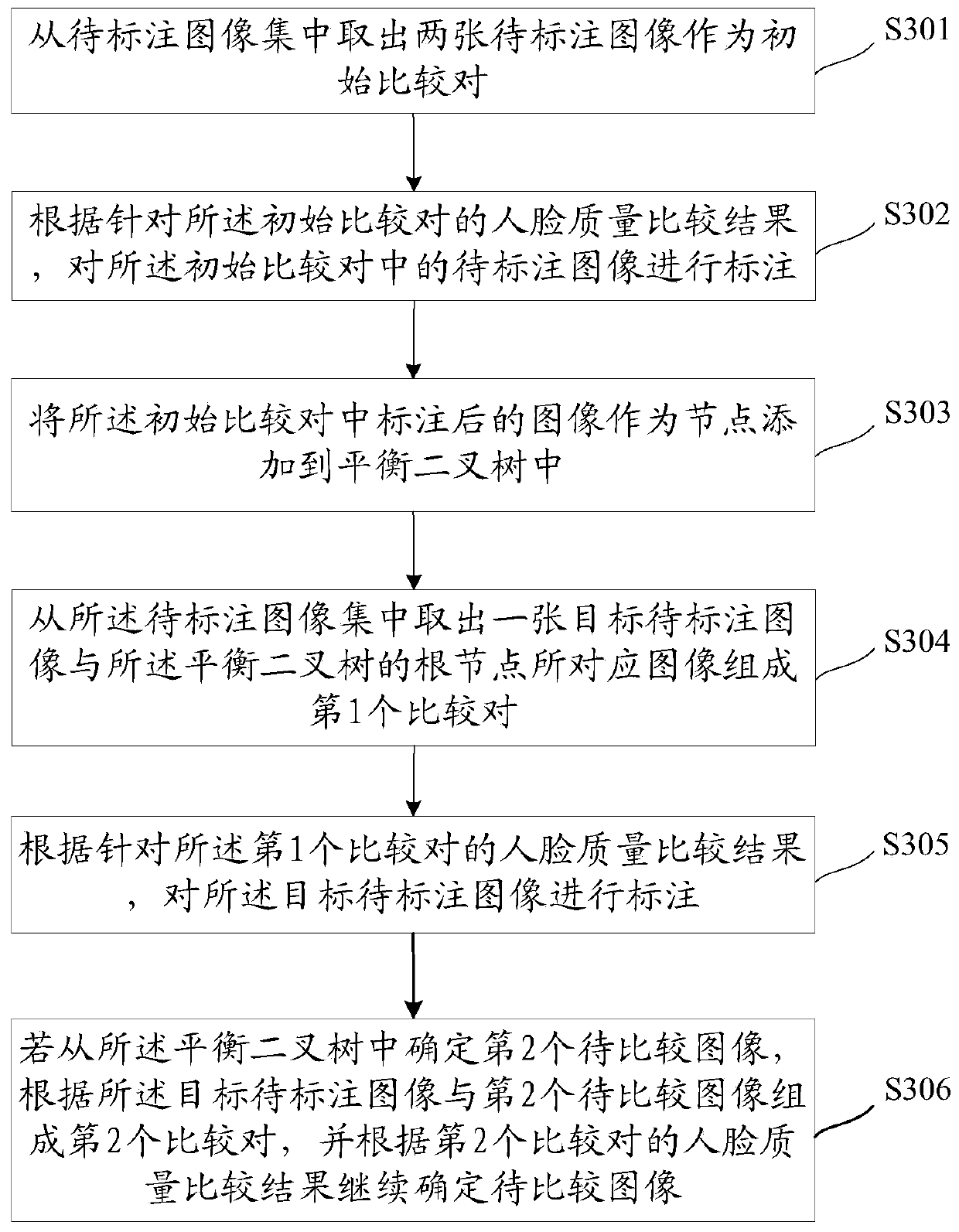

[0035] Therefore, the embodiment of the present application provides an image labeling method that differs from the traditional approach. Two images to be labeled are presented as a comparison pair for face quality comparison, which gives the user's judgment a measurable, objective standard and reduces subjective influence. Based on the comparison results, labeled images can be used as nodes to construct a balanced binary tree. For any target node in the balanced binary tree, the child nodes on different sides of the target node have different face quality comparison results with respect to that node. Therefore, when comparing the fac...
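The idea described so far, pairwise comparison results placing each labeled image as a node in a balanced binary tree, can be sketched as follows. This is only an illustration under stated assumptions and not the method as claimed: the source does not say which kind of balanced tree is used, so an AVL tree is assumed here; the names `Node`, `insert`, `ranking`, and `is_better` are hypothetical; and the convention that lower-quality images go left and higher-quality images go right is also an assumption. The `is_better` callback stands in for the human annotator's answer on one comparison pair.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Node:
    image: str                      # hypothetical image identifier
    left: Optional["Node"] = None   # assumed: images judged lower quality
    right: Optional["Node"] = None  # assumed: images judged higher quality
    height: int = 1


def _height(n: Optional[Node]) -> int:
    return n.height if n else 0


def _update(n: Node) -> None:
    n.height = 1 + max(_height(n.left), _height(n.right))


def _rotate_right(y: Node) -> Node:
    x = y.left
    y.left, x.right = x.right, y
    _update(y)
    _update(x)
    return x


def _rotate_left(x: Node) -> Node:
    y = x.right
    x.right, y.left = y.left, x
    _update(x)
    _update(y)
    return y


def _rebalance(n: Node) -> Node:
    """Standard AVL rebalancing (an assumed choice of balanced tree)."""
    _update(n)
    balance = _height(n.left) - _height(n.right)
    if balance > 1:
        if _height(n.left.left) < _height(n.left.right):
            n.left = _rotate_left(n.left)
        return _rotate_right(n)
    if balance < -1:
        if _height(n.right.right) < _height(n.right.left):
            n.right = _rotate_right(n.right)
        return _rotate_left(n)
    return n


def insert(root: Optional[Node], image: str,
           is_better: Callable[[str, str], bool]) -> Node:
    """Place `image` by comparing it pairwise with nodes on one root-to-leaf path."""
    if root is None:
        return Node(image)
    if is_better(image, root.image):   # one comparison pair shown to the annotator
        root.right = insert(root.right, image, is_better)
    else:
        root.left = insert(root.left, image, is_better)
    return _rebalance(root)


def ranking(root: Optional[Node]) -> List[str]:
    """In-order traversal: images from lowest to highest judged quality."""
    if root is None:
        return []
    return ranking(root.left) + [root.image] + ranking(root.right)


if __name__ == "__main__":
    # Simulated annotator with made-up quality scores, for demonstration only.
    scores = {"img_a": 0.2, "img_b": 0.9, "img_c": 0.5, "img_d": 0.7}
    tree = None
    for name in scores:
        tree = insert(tree, name, lambda a, b: scores[a] > scores[b])
    print(ranking(tree))
```

Because the tree stays balanced, each new image needs only about log2(n) pairwise comparisons against already labeled images, rather than a comparison against every one of them.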
