Video-based pedestrian and crowd behavior identification method

A method for recognizing pedestrian and crowd behavior, applied in the field of deep learning, that addresses problems such as low robustness and limited accuracy.

Embodiment Construction

[0027] The present invention is further described below with reference to the accompanying drawings.

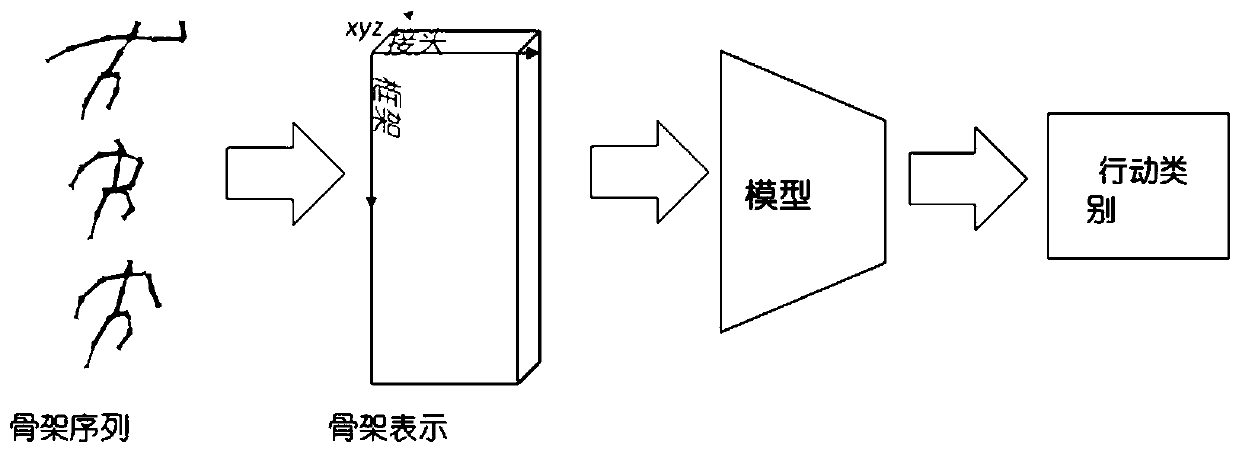

[0028] As shown in Figure 1, the video-based pedestrian and crowd behavior recognition method of the present invention comprises the following steps:

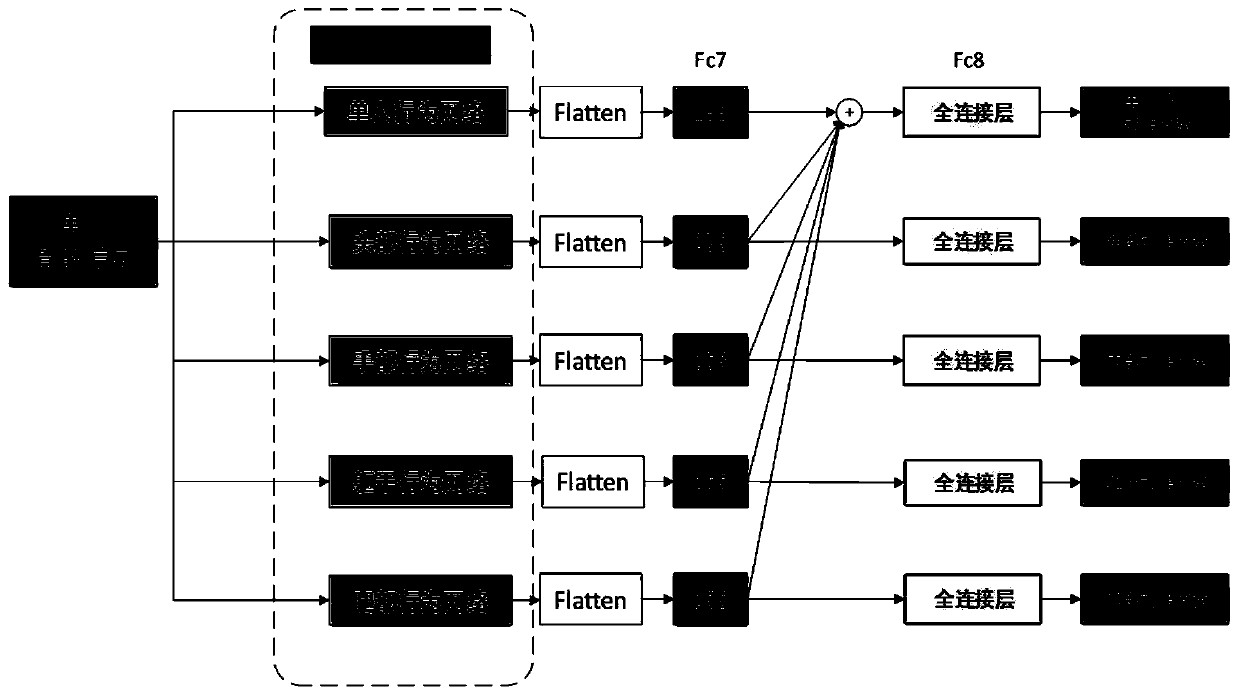

[0029] 1. A video of about 150 frames is processed by a human body pose estimation algorithm to obtain a whole-body skeleton sequence of shape 150 (frames) × 18 (key points) × 3 (3-dimensional skeleton coordinates). The 18 key points are also grouped by body part (head, arms, torso, and feet), giving four limb-part skeleton sequences, each of shape 150 (frames) × (number of key points in that part) × 3 (3-dimensional skeleton coordinates).
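The grouping in step 1 can be illustrated with a minimal NumPy sketch. The patent text does not say which key point indices belong to which body part, so the `PART_GROUPS` mapping below is an assumption based on the common OpenPose/COCO 18-keypoint ordering; the function name `split_by_part` is likewise hypothetical.

```python
import numpy as np

# ASSUMPTION: keypoint indices follow the OpenPose/COCO 18-point ordering
# (0 nose, 1 neck, 2-7 shoulders/elbows/wrists, 8 and 11 hips,
# 9-10 and 12-13 knees/ankles, 14-17 eyes/ears). The source does not
# specify the assignment of key points to parts.
PART_GROUPS = {
    "head": [0, 14, 15, 16, 17],
    "arms": [2, 3, 4, 5, 6, 7],
    "torso": [1, 8, 11],
    "feet": [9, 10, 12, 13],
}


def split_by_part(skeleton):
    """Split a (frames, 18, 3) skeleton sequence into per-part sequences.

    Returns a dict mapping each part name to an array of shape
    (frames, keypoints_in_part, 3).
    """
    skeleton = np.asarray(skeleton)
    if skeleton.ndim != 3 or skeleton.shape[1:] != (18, 3):
        raise ValueError(f"expected shape (frames, 18, 3), got {skeleton.shape}")
    # Integer-list indexing on axis 1 selects the key points of each part.
    return {name: skeleton[:, idx, :] for name, idx in PART_GROUPS.items()}


# Usage: a placeholder 150-frame sequence standing in for pose-estimator output.
whole_body = np.zeros((150, 18, 3), dtype=np.float32)
parts = split_by_part(whole_body)
for name, seq in parts.items():
    print(name, seq.shape)
```

Under this assumed mapping the four groups are disjoint and together cover all 18 key points, so the whole-body sequence and the four part sequences describe the same data at two levels of granularity.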

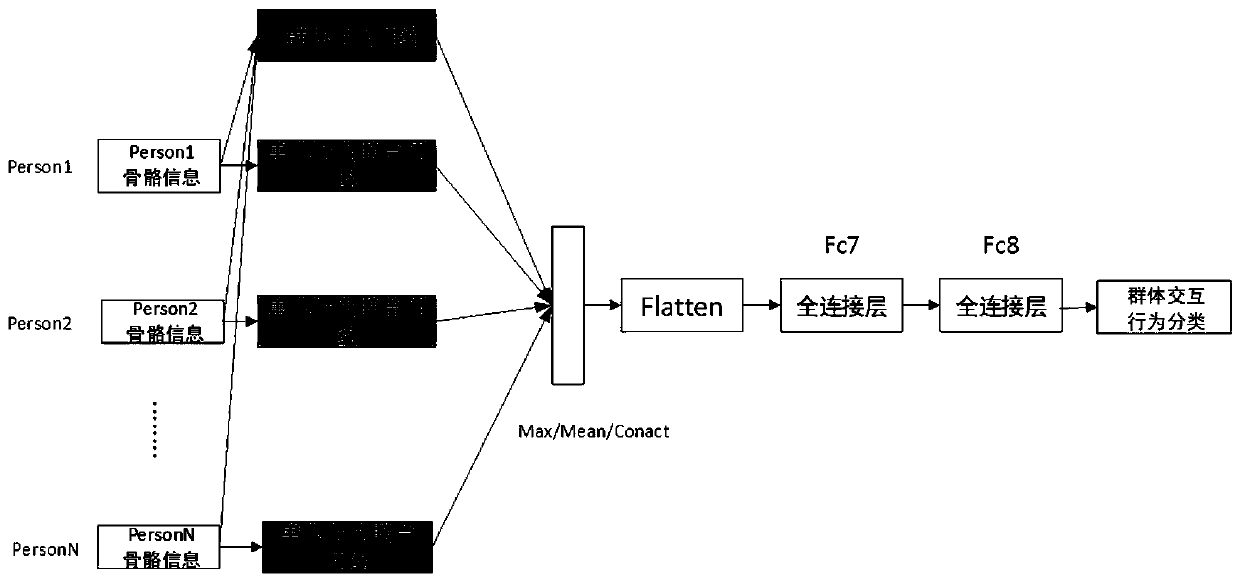

[0030] 2. Single-person whole-body behaviors are classified as falls, squats, jumps, etc., and group behaviors involve inte...
