Generation method of human body action editing model, storage medium and electronic device

A human motion editing model technology, applied in the field of human motion editing model generation. It addresses problems such as animation failure and the inability to ensure that the output motion animation is smooth and natural, and it achieves the effect of increasing the reuse value of motion data and reducing the amount of human intervention.


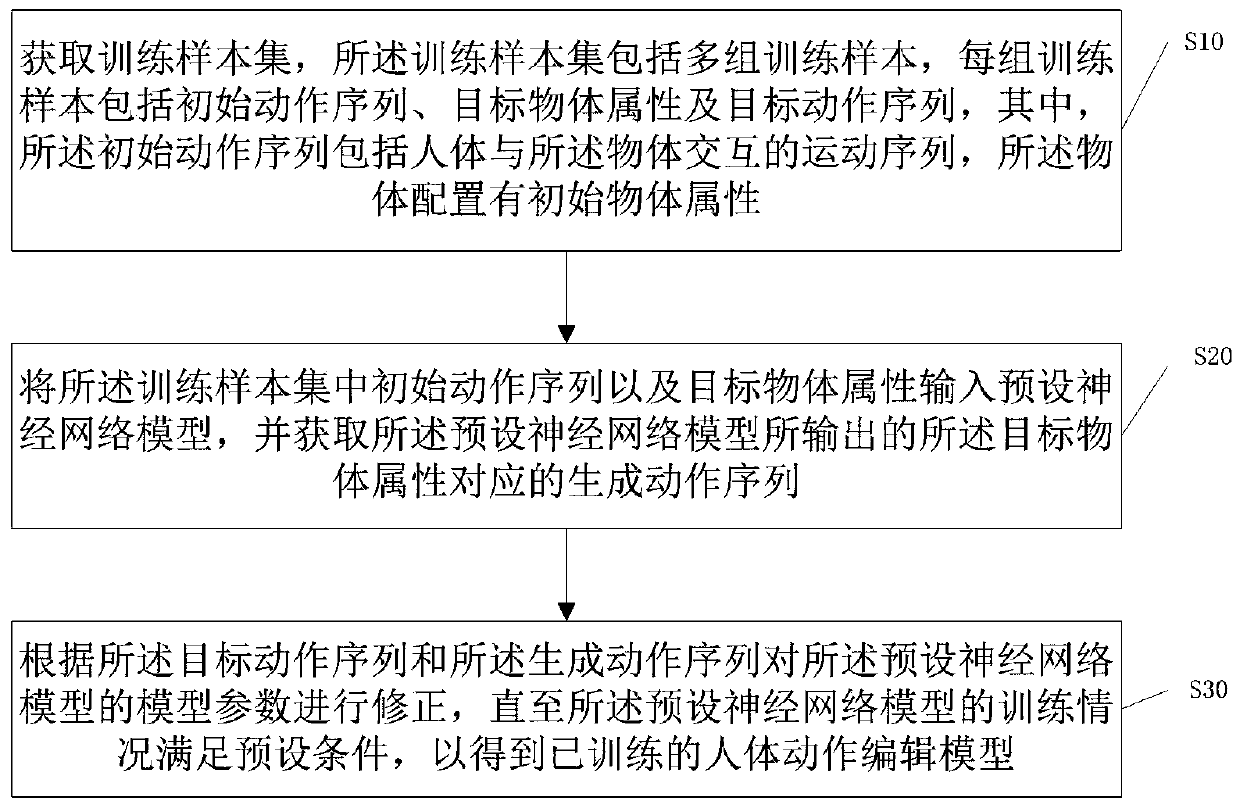

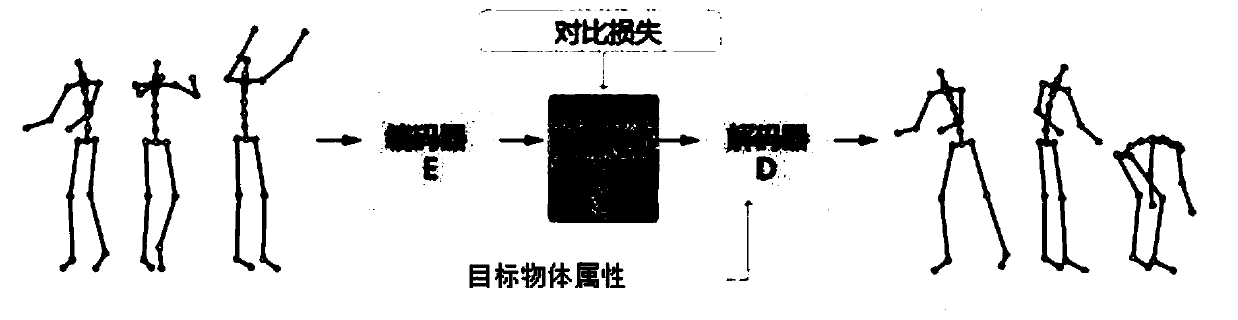

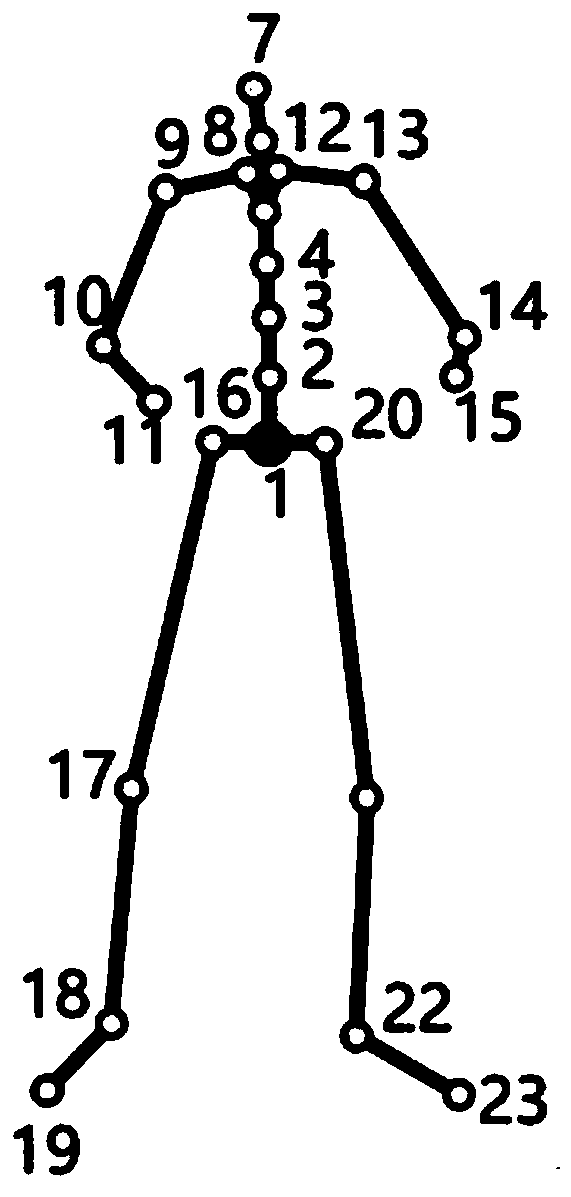


Examples

example 1

[0082] Example 1: As shown in Figure 9a, the initial action sequence is the action sequence in which the subject moves a 0 kg box from the table to the cabinet. As shown in Figure 9b, when the target object attribute is 25 kg, the human action editing model outputs the generated motion sequence of the subject moving the 25 kg box from the table to the cabinet. From the generated motion sequence in Figure 9b, it can be concluded that the subject cannot move the box to the cabinet.

example 2

[0083] Example 2: As shown in Figure 10a, the initial action sequence is the action sequence in which the subject picks up a cup in which the volume of water is equal to the volume of the cup and drinks the water. As shown in Figure 10b, when the target object attribute is that the volume of water in the cup is 0, the human action editing model outputs the generated motion sequence of the subject picking up the cup with a water volume of 0 to drink. From the generated motion sequence in Figure 10b, it can be concluded that the subject needs to raise his arm when drinking water.
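The input/output relationship in Examples 1 and 2 can be illustrated with a minimal Python sketch. It only shows the data flow (initial action sequence plus target object attribute in, generated motion sequence out). The names `EditRequest`, `synthesize_motion`, and `placeholder_model`, the pose layout, and the scaling rule are all assumptions made for illustration; they are not taken from the patent and do not represent its trained model.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical pose layout: one frame is a flat list of joint parameters.
Pose = List[float]

# Hypothetical signature for a trained editing model:
# (initial action sequence, target object attribute) -> generated sequence.
EditModel = Callable[[List[Pose], float], List[Pose]]


@dataclass
class EditRequest:
    initial_sequence: List[Pose]  # e.g. the subject moving a 0 kg box
    target_attribute: float       # e.g. 25.0 for a 25 kg box


def synthesize_motion(model: EditModel, request: EditRequest) -> List[Pose]:
    """Pass the initial sequence and target attribute to the model."""
    if not request.initial_sequence:
        raise ValueError("initial action sequence must not be empty")
    return model(request.initial_sequence, request.target_attribute)


def placeholder_model(sequence: List[Pose], attribute: float) -> List[Pose]:
    """Stand-in for a trained model, NOT the patent's network.

    Assumption for illustration only: a larger attribute value shrinks
    every pose parameter by the factor 1 / (1 + attribute / 100).
    """
    scale = 1.0 / (1.0 + attribute / 100.0)
    return [[value * scale for value in pose] for pose in sequence]


if __name__ == "__main__":
    request = EditRequest(
        initial_sequence=[[1.0, 2.0], [3.0, 4.0]],
        target_attribute=25.0,
    )
    generated = synthesize_motion(placeholder_model, request)
    print(len(generated), "frames generated")
```

In practice, `placeholder_model` would be replaced by the trained human action editing model; the wrapper only fixes the shape of the call.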

[0084] Based on the generation method of the above-mentioned human motion editing model, the present invention also provides a method for synthesizing human motion based on object attributes, which uses the human motion editing model described in the above embodiment, and the method includes:

[0085] Acquiring target object attributes and an initial action sequence, the initial action sequence...

