Memory allocation method and device of neural network

A memory allocation technology for neural networks, applied in the field of deep learning, that addresses problems such as large memory footprint and unreasonable memory allocation, with the effect of reducing the memory footprint and optimizing memory allocation.

Examples

example 1

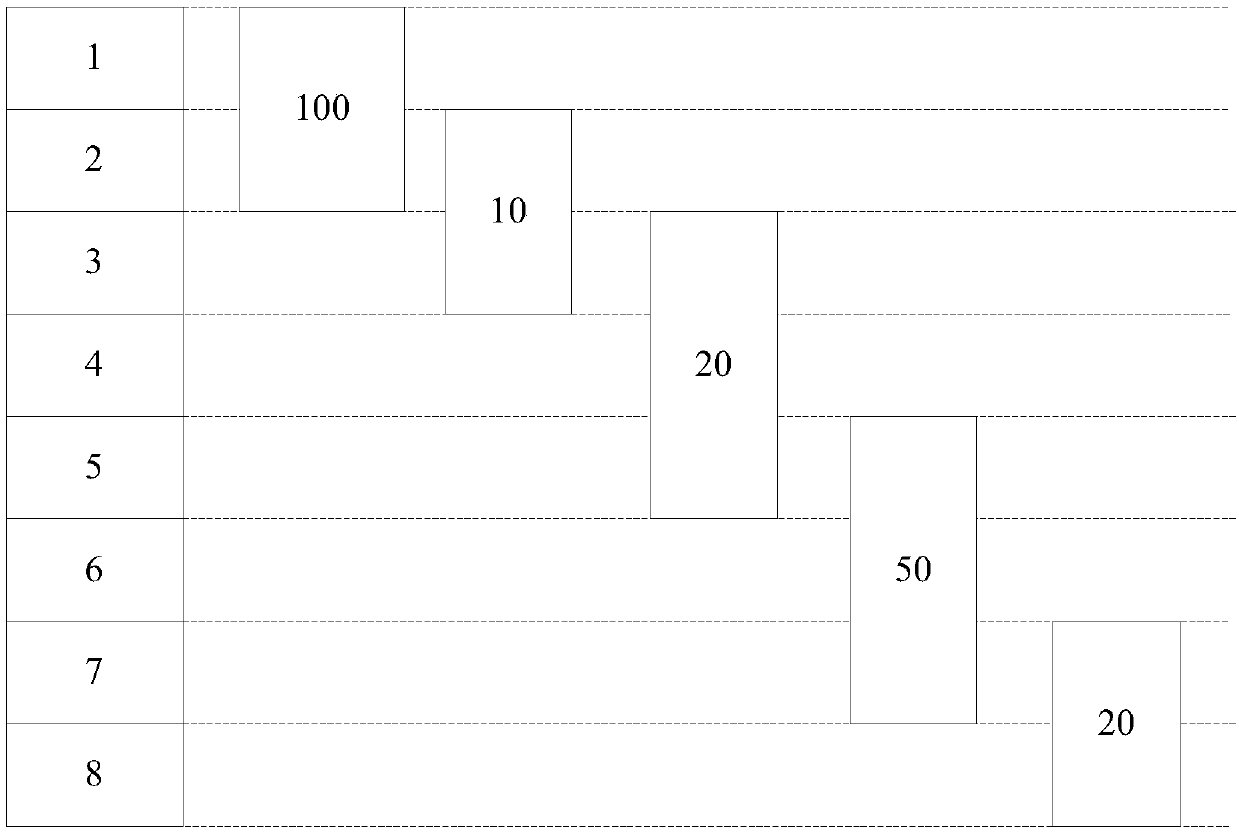

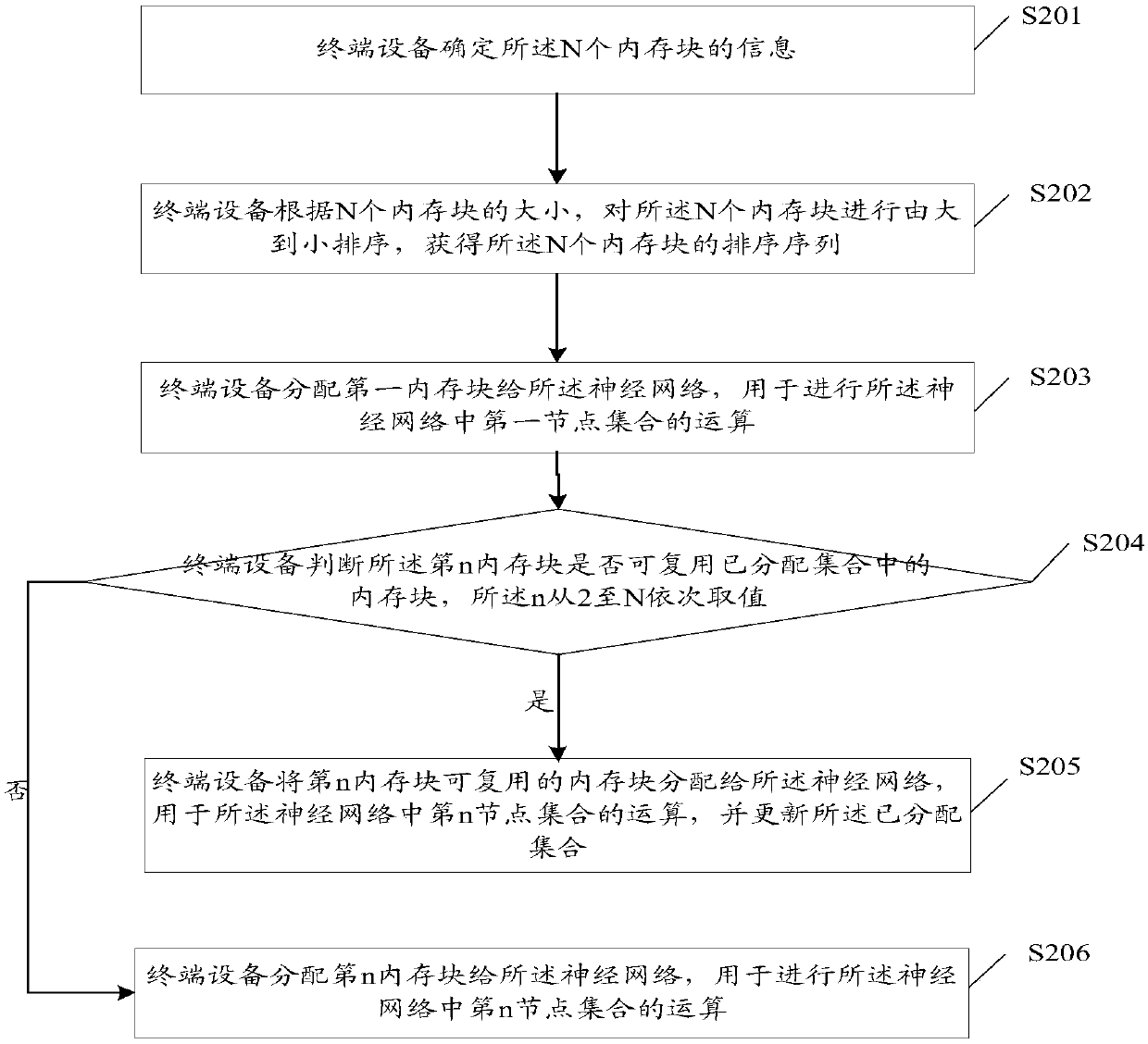

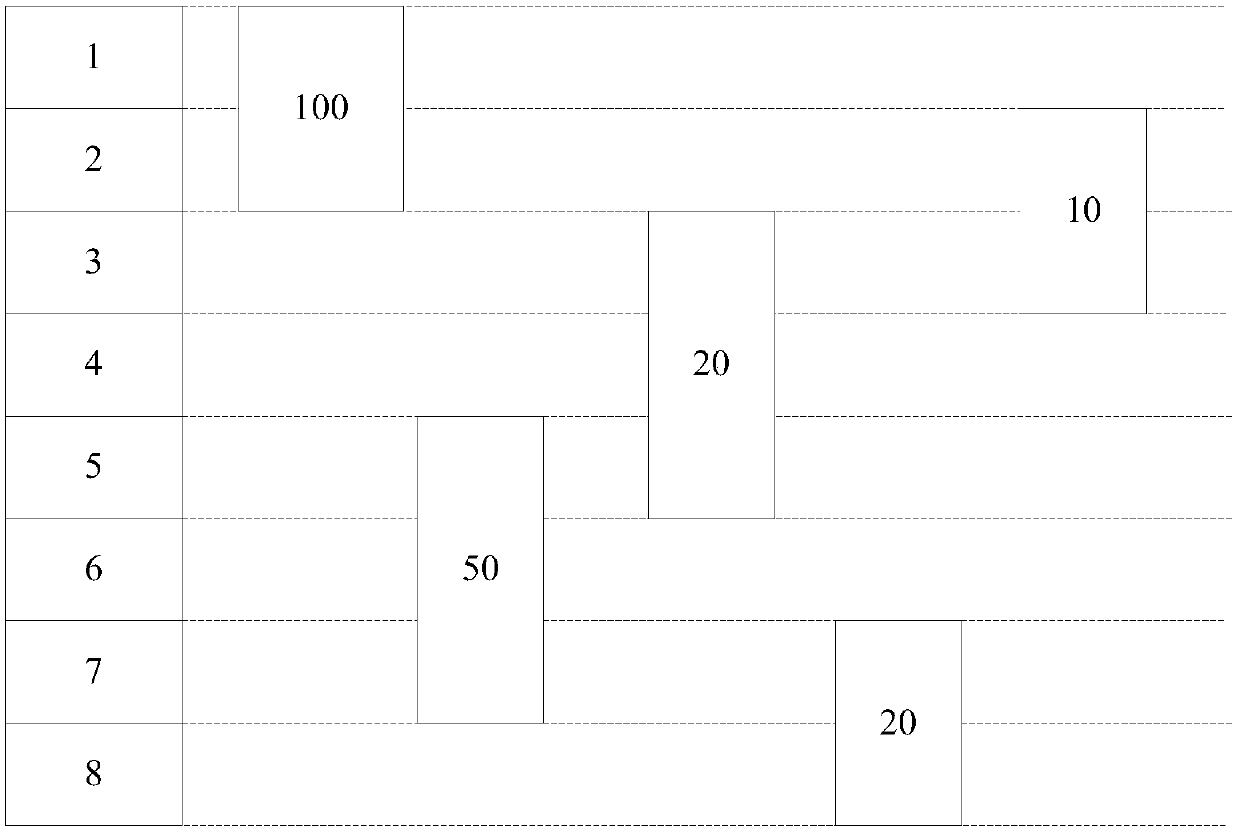

[0048] Example 1: The terminal device runs the neural network and records information about the memory blocks occupied by the input parameters, the output parameters, and the intermediate parameters of each node in the neural network. It then determines the information of the N memory blocks from the recorded information about the memory blocks occupied by the input parameters, output parameters, and intermediate parameters of each node.

[0049] In Example 1, the terminal device can simultaneously record the information of the memory blocks occupied by the input parameters, output parameters, and intermediate parameters, and generate the information of the N memory blocks in the above step S201 according to the information of the input parameters, output parameters and the memory block...
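As a rough illustration of Example 1, the sketch below gathers one record per memory block across all three parameter kinds. The `MemoryBlock` fields, the `NodeTrace` structure, and the function name `collect_blocks` are hypothetical. The text here does not spell out what the "information of the memory block" contains, so only a node name, a parameter kind, and a size are assumed.

```python
from dataclasses import dataclass, field
from typing import Dict, List

KINDS = ("input", "output", "intermediate")


@dataclass(frozen=True)
class MemoryBlock:
    node: str  # node whose parameter occupies the block (assumed field)
    kind: str  # "input", "output", or "intermediate" (assumed field)
    size: int  # block size in bytes (assumed unit)


@dataclass
class NodeTrace:
    """Block sizes recorded for one node during a run (hypothetical structure)."""
    name: str
    sizes: Dict[str, List[int]] = field(default_factory=dict)


def collect_blocks(traces: List[NodeTrace]) -> List[MemoryBlock]:
    """Return one MemoryBlock per recorded parameter of every node."""
    blocks = []
    for trace in traces:
        for kind in KINDS:
            for size in trace.sizes.get(kind, []):
                blocks.append(MemoryBlock(trace.name, kind, size))
    return blocks


# Invented figures for a two-node network.
traces = [
    NodeTrace("conv1", {"input": [1024], "output": [2048], "intermediate": [512]}),
    NodeTrace("relu1", {"input": [2048], "output": [2048]}),
]
blocks = collect_blocks(traces)
n = len(blocks)  # N, the number of memory blocks handed to the later steps
```

Keeping the parameter kind on each record is a design choice of this sketch: it lets the same trace be filtered down to one or two kinds without rerunning the network.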

example 2

[0052] Example 2: The terminal device runs the neural network and records information about the memory blocks occupied by the input parameters, the output parameters, or the intermediate parameters of each node in the neural network. It then determines the information of the N memory blocks from the information about the memory blocks occupied by the input parameters, output parameters, or intermediate parameters of each node.

[0053] In Example 2, the terminal device may record only the information of the memory blocks occupied by the input parameters of each node, generate the information of the N memory blocks in step S201, and then use the method provided in Figure 2 of this application to optimize only the memory occupied by the input parameters. Alternatively, the terminal device may record only the information of the memory blocks occupied by the output parameters of each node, generate the information of the N memory blocks in the above step S201, and then use this application's figure ...

example 3

[0054] Example 3: The terminal device runs the neural network and records information about the memory blocks occupied by any two of the input parameters, output parameters, and intermediate parameters of each node in the neural network. It then determines the information of the N memory blocks from the information about the memory blocks occupied by those two kinds of parameters of each node.

[0055] In Example 3, the terminal device may record only two of the input parameters, intermediate parameters, and output parameters of each node, and then optimize the memory occupied by those two kinds of parameters. For the entire neural network, the optimization effect of Example 3 is worse than that of Example 1, which covers all three kinds of parameters, and better than that of Example 2, which covers only one.
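One way to picture the difference between the three examples is to count how many recorded bytes each choice makes eligible for optimization. The sketch below is simplified accounting with invented figures. The `recorded` totals and the `covered_bytes` helper are hypothetical, and coverage is only a proxy for the optimization effect, not a measure taken from the source.

```python
from itertools import combinations

# Hypothetical per-kind totals (bytes) recorded over one run.
recorded = {"input": 4096, "output": 6144, "intermediate": 1024}


def covered_bytes(kinds):
    """Total bytes belonging to the parameter kinds that were recorded."""
    return sum(recorded[k] for k in kinds)


# Example 1: all three kinds are recorded.
example1 = covered_bytes(recorded)

# Example 3: any two kinds are recorded.
example3 = {pair: covered_bytes(pair) for pair in combinations(recorded, 2)}

# Example 2: a single kind is recorded.
example2 = {kind: covered_bytes([kind]) for kind in recorded}

for pair, total in example3.items():
    singles = [example2[k] for k in pair]
    print(pair, total, "covers at least", max(singles), "and at most", example1)
```

Under this accounting, each pair covers at least as much as either single kind it contains and no more than all three kinds together. A pair does not necessarily cover more than a single kind outside it.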

[0056] Step S202: The terminal device sorts the N memory blocks in descending order of size to obtain a sorted sequence of the N memory blocks.
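Step S202 can be sketched in a few lines. The `MemoryBlock` tuple, its `name` field, and the function name `sort_blocks_descending` are assumptions made for illustration. The source only requires that blocks be ordered from largest to smallest and does not say how ties are handled.

```python
from typing import List, NamedTuple


class MemoryBlock(NamedTuple):
    name: str  # hypothetical label for the block
    size: int  # block size in bytes (assumed unit)


def sort_blocks_descending(blocks: List[MemoryBlock]) -> List[MemoryBlock]:
    """Return a new list ordered from the largest block to the smallest."""
    # sorted() is stable even with reverse=True, so blocks of equal size
    # keep the order in which they were recorded.
    return sorted(blocks, key=lambda b: b.size, reverse=True)


blocks = [
    MemoryBlock("a", 512),
    MemoryBlock("b", 2048),
    MemoryBlock("c", 1024),
    MemoryBlock("d", 2048),
]
ordered = sort_blocks_descending(blocks)
print([b.name for b in ordered])
```

Returning a new list rather than sorting in place leaves the recorded sequence available if it is needed again.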

[0057] In the embodiment of this application, after sorting the N memor...
