Human body action recognition method

A human action recognition method based on image technology, applicable to character and pattern recognition, instruments, biological neural network models, and related fields, which addresses the problem of low action recognition accuracy.

Embodiment 1

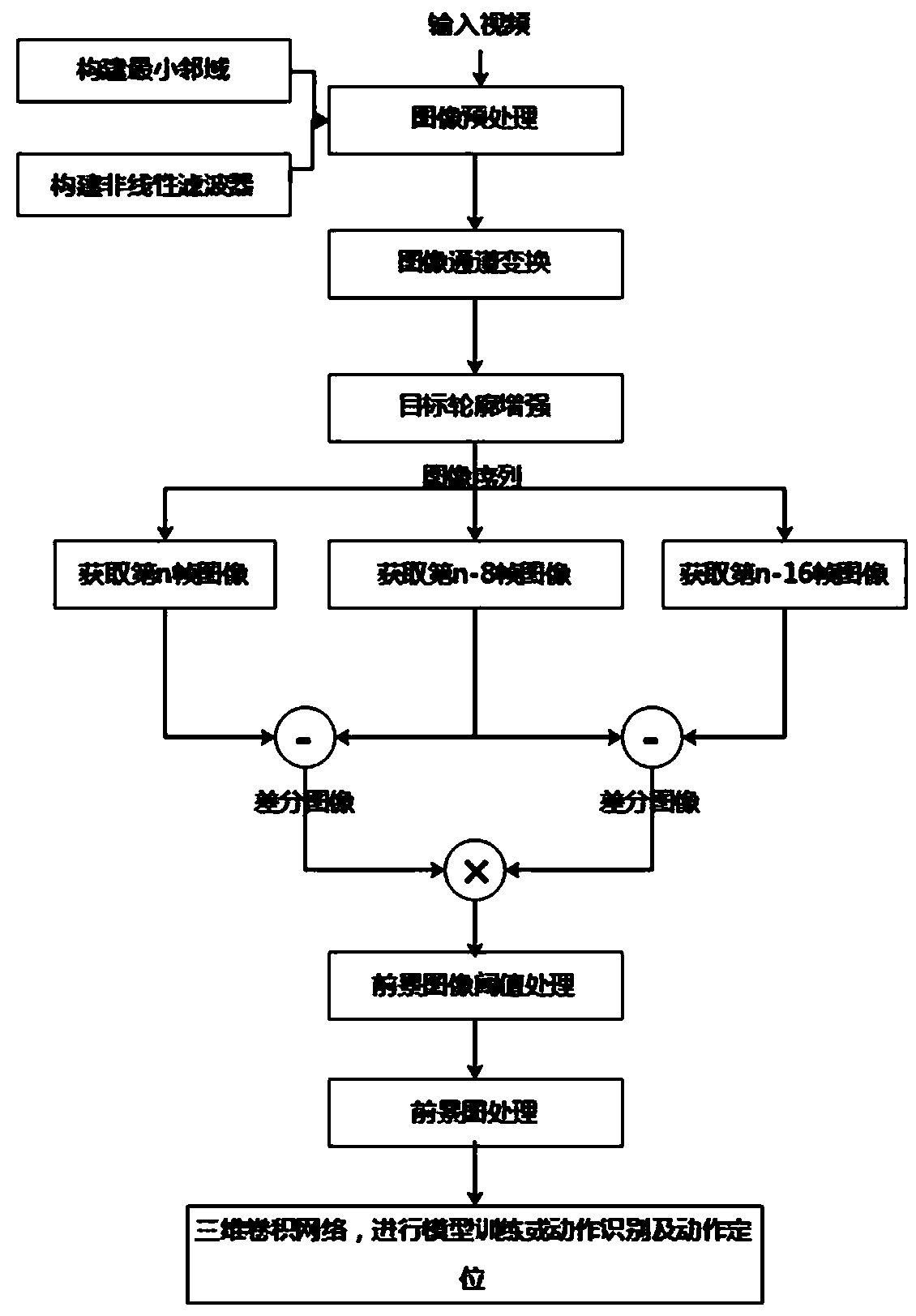

[0070] This embodiment mainly targets large scenes containing small targets. Preprocessing the training and test data reduces the impact of complex backgrounds on the model's detection accuracy and improves its action recognition accuracy. In addition, a single three-dimensional convolutional deep learning model is used to detect and accurately localize actions in continuous videos of any length, which reduces the amount of computation.

[0071] As shown in Figure 1, this embodiment includes the following steps:

[0072] Step 1: Image preprocessing.

[0073] Decode the video and preprocess each frame. The preprocessing includes the following steps:

[0074] 1) Minimum Neighborhood Selection

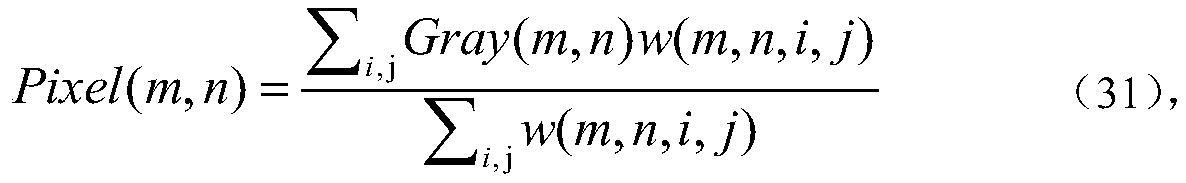

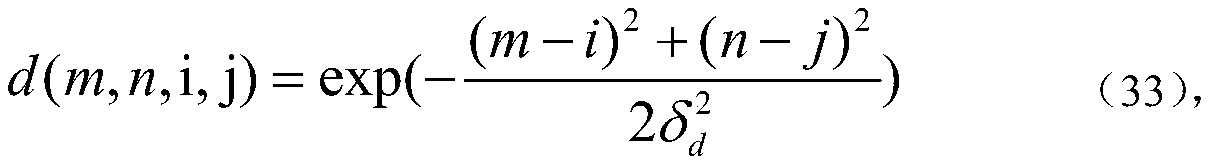

[0075] For a two-dimensional image, the minimum neighborhood width is 9; that is, a pixel and its 8 surrounding pixels are taken as the minimum filtering neighborhood. In the neighborhood window of length (i, j) around the pixel, the selection of i ...
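The minimum neighborhood described in [0075] (a pixel together with its 8 surrounding pixels) can be illustrated with a short sketch. This is not the patent's implementation: the function name `min_neighborhood`, the row-major list-of-lists image layout, and the border handling (clamping coordinates to the image edge) are all assumptions made for illustration. The excerpt does not state how borders are treated or which filter is applied to the window, so the sketch only collects the 9 values.

```python
from typing import List, Sequence


def min_neighborhood(image: Sequence[Sequence[float]], row: int, col: int) -> List[float]:
    """Return the 9 values of the 3x3 window centered on (row, col).

    Assumption (not from the source): coordinates that fall outside the
    image are clamped to the nearest edge pixel.
    """
    height = len(image)
    width = len(image[0])
    values = []
    for di in (-1, 0, 1):          # row offsets: above, same row, below
        for dj in (-1, 0, 1):      # column offsets: left, same column, right
            r = min(max(row + di, 0), height - 1)
            c = min(max(col + dj, 0), width - 1)
            values.append(image[r][c])
    return values


# Hypothetical 3x4 grayscale image used only to exercise the function.
image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
]

window = min_neighborhood(image, 1, 1)
print(window)  # [1, 2, 3, 5, 6, 7, 9, 10, 11]
```

Any filter applied over this window (for example a mean or median) would consume the returned list. The choice of filter is left open here because the excerpt is truncated before it is specified.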
