Video feature learning method based on video and text pair discriminant analysis

A video feature learning technology based on discriminant analysis, applied in neural learning methods, biometric recognition, and character and pattern recognition. It addresses the high labor cost, poor practicability and scalability, and inability to extract video information of existing approaches, with the effect of reducing labor costs.


Embodiment Construction

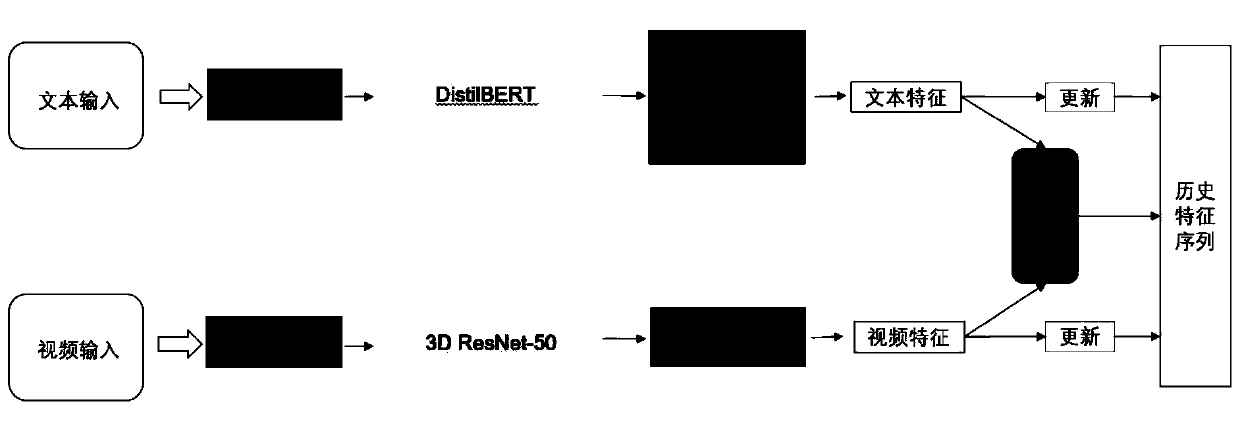

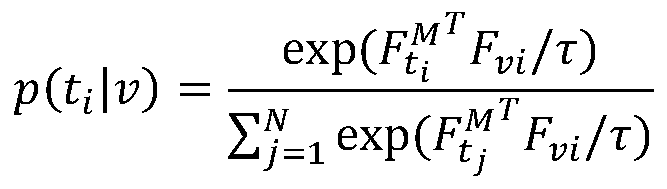

[0027] The present invention proposes a video feature learning method based on discrimination of video and text description pairs. Each video and the text description matched with it form a video-text pair; a three-dimensional convolutional network extracts the video features, and a DistilBERT network extracts the text description features. Through training, each video and its corresponding text description acquire similar semantic features, so that the text description automatically becomes the label of the corresponding video. Training thereby builds a deep learning network for learning video features.
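The paragraph above requires only that a video and its own description end up with similar features; it does not state the training loss. A minimal sketch, assuming an InfoNCE-style in-batch pair discrimination objective over L2-normalized features with a hypothetical temperature of 0.07 (neither is given in the source): video i must pick out text i among all texts in the batch. The function names are hypothetical, and plain Python lists stand in for the outputs of the 3D convolutional network and DistilBERT.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a feature vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def pair_discrimination_loss(videos: List[Vector], texts: List[Vector],
                             temperature: float = 0.07) -> float:
    """Hypothetical video-to-text pair discrimination loss.

    videos[i] and texts[i] form a matched pair; every other text in the batch
    acts as a negative. Returns the mean cross-entropy of picking the right text.
    """
    if len(videos) != len(texts):
        raise ValueError("videos and texts must be paired one-to-one")
    v = [l2_normalize(x) for x in videos]
    t = [l2_normalize(x) for x in texts]
    total = 0.0
    for i, vi in enumerate(v):
        # Cosine similarity to every text, sharpened by the temperature.
        logits = [dot(vi, tj) / temperature for tj in t]
        # Numerically stable log-sum-exp.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[i]  # -log softmax of the matched text
    return total / len(v)


# Toy 2-D "features": matched pairs point the same way, swapped pairs do not.
aligned = pair_discrimination_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = pair_discrimination_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < swapped)  # True
```

Minimizing such a loss pulls each video toward its own description and away from the others, which is what lets the description act as the video's label. A symmetric text-to-video term is a common addition but would likewise be an assumption here.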

[0028] Specifically, the method includes the following steps:

[0029] 1) Preparatory stage: construct two historical feature sequences of size N×256 to store the features of the videos and of the text descriptions in the database, respectively, where N is the number of videos in the database and each feature is 256-dimensional. The historical feature sequen...
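The two N×256 historical feature sequences of step 1 can be pictured as one table per modality. A minimal sketch, assuming the rows start as random unit vectors and are refreshed with a momentum update (momentum 0.5 here) whenever a new feature is computed for that item; the source text is truncated before it describes initialization or updating, so both rules, the class name `HistoricalFeatureBank`, the negative-sampling helper, and the value of `N` are hypothetical.

```python
import math
import random
from typing import List

FEATURE_DIM = 256  # feature dimension given in step 1


class HistoricalFeatureBank:
    """An N x 256 table holding one unit-norm feature per video in the database.

    Hypothetical helper: random initialization and the momentum update are
    illustrative assumptions, not rules taken from the source.
    """

    def __init__(self, num_items: int, dim: int = FEATURE_DIM,
                 momentum: float = 0.5, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.dim = dim
        self.momentum = momentum
        # Row i stores the latest feature of database item i.
        self.rows: List[List[float]] = [
            self._normalize([rng.gauss(0.0, 1.0) for _ in range(dim)])
            for _ in range(num_items)
        ]

    @staticmethod
    def _normalize(v: List[float]) -> List[float]:
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v] if norm > 0 else list(v)

    def update(self, index: int, feature: List[float]) -> None:
        """Blend a freshly computed feature into row `index`, then re-normalize."""
        if len(feature) != self.dim:
            raise ValueError(f"expected a {self.dim}-d feature, got {len(feature)}")
        old = self.rows[index]
        mixed = [self.momentum * o + (1.0 - self.momentum) * f
                 for o, f in zip(old, feature)]
        self.rows[index] = self._normalize(mixed)

    def sample_negative_indices(self, exclude: int, k: int,
                                rng: random.Random) -> List[int]:
        """Draw k distinct row indices other than `exclude`, e.g. as negatives."""
        candidates = [i for i in range(len(self.rows)) if i != exclude]
        return rng.sample(candidates, k)


N = 1000  # hypothetical database size
video_bank = HistoricalFeatureBank(N, seed=0)  # video features
text_bank = HistoricalFeatureBank(N, seed=1)   # text-description features
```

Keeping one bank per modality mirrors the two sequences described in step 1; storing past features lets many negatives be compared against without re-encoding every video and description at each step.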
