A Violent Behavior Recognition Method Based on Temporal Guided Spatial Attention

A violent behavior recognition method based on temporally guided spatial attention. It addresses the large parameter count of 3D convolutional networks and their difficulty in meeting real-time requirements. The method uses a small number of parameters, reduces background interference, reduces missed detections, and improves accuracy.

Embodiment Construction

[0033] The present invention is described in detail below in conjunction with the accompanying drawings:

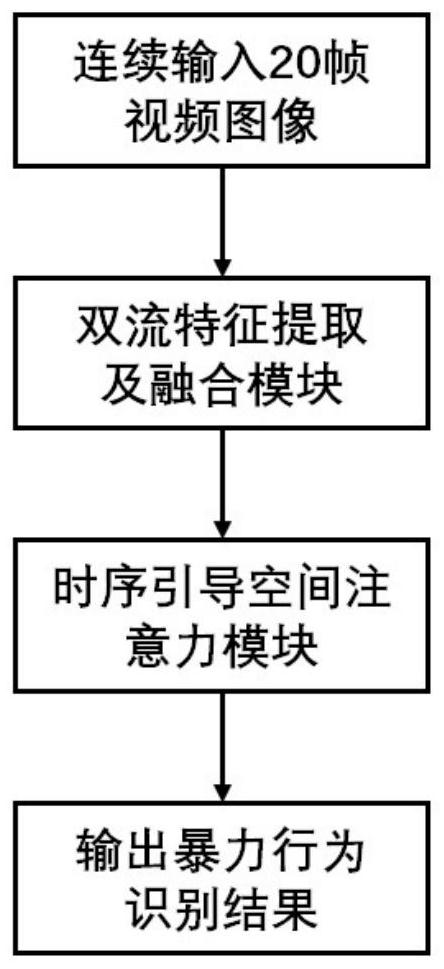

[0034] As shown in Figure 1, the violent behavior recognition method based on temporally guided spatial attention provided by the present invention comprises the following steps:

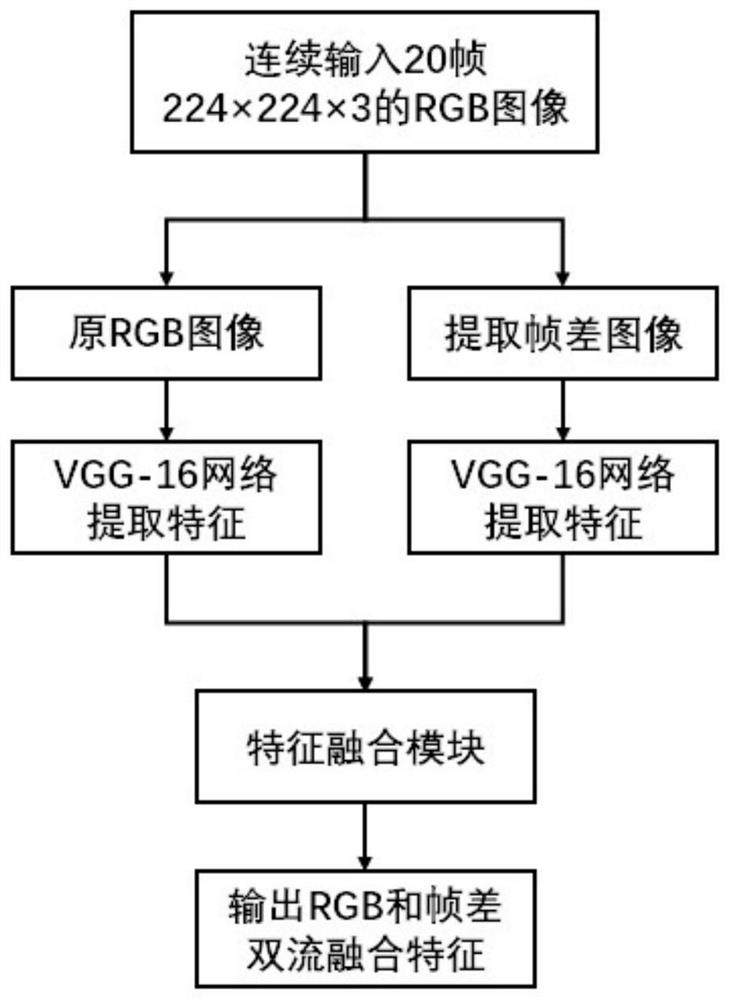

[0035] 1) Two-stream feature extraction and fusion. For the input continuous video sequence, a deep convolutional neural network extracts features from the RGB images and from the frame-difference images respectively, and the features of the two streams are fused for use by the temporally guided spatial attention module.
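The two-stream step can be sketched as follows. This is a minimal illustration, not the patented network: `toy_extractor` is a hypothetical stand-in (block average pooling) for the deep convolutional neural network, and fusing by channel concatenation is an assumption, since the text above does not state how the two streams are combined.

```python
import numpy as np

def frame_differences(frames: np.ndarray) -> np.ndarray:
    """Absolute difference between consecutive frames.

    frames: (T, H, W, C) array. Returns a (T-1, H, W, C) float32 array.
    """
    frames = frames.astype(np.float32)  # avoid uint8 wrap-around on subtraction
    return np.abs(frames[1:] - frames[:-1])

def toy_extractor(images: np.ndarray, pool: int = 4) -> np.ndarray:
    """Hypothetical stand-in for the deep CNN: average over pool x pool blocks."""
    t, h, w, c = images.shape
    h2, w2 = h // pool, w // pool
    cropped = images[:, :h2 * pool, :w2 * pool, :]
    blocks = cropped.reshape(t, h2, pool, w2, pool, c)
    return blocks.mean(axis=(2, 4))  # shape (T, h2, w2, C)

def fuse_two_stream(frames: np.ndarray, pool: int = 4) -> np.ndarray:
    """Extract RGB and frame-difference features and fuse them.

    Fusion by channel concatenation is an assumption made for this sketch.
    """
    diffs = frame_differences(frames)
    # Drop the first RGB frame so both streams have T-1 time steps.
    rgb_feat = toy_extractor(frames[1:].astype(np.float32), pool)
    diff_feat = toy_extractor(diffs, pool)
    return np.concatenate([rgb_feat, diff_feat], axis=-1)
```

For a clip of shape `(T, H, W, 3)`, `fuse_two_stream` returns a `(T-1, H // pool, W // pool, 6)` array whose last three channels respond only where consecutive frames differ, which is the motion cue the frame-difference stream is meant to provide.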

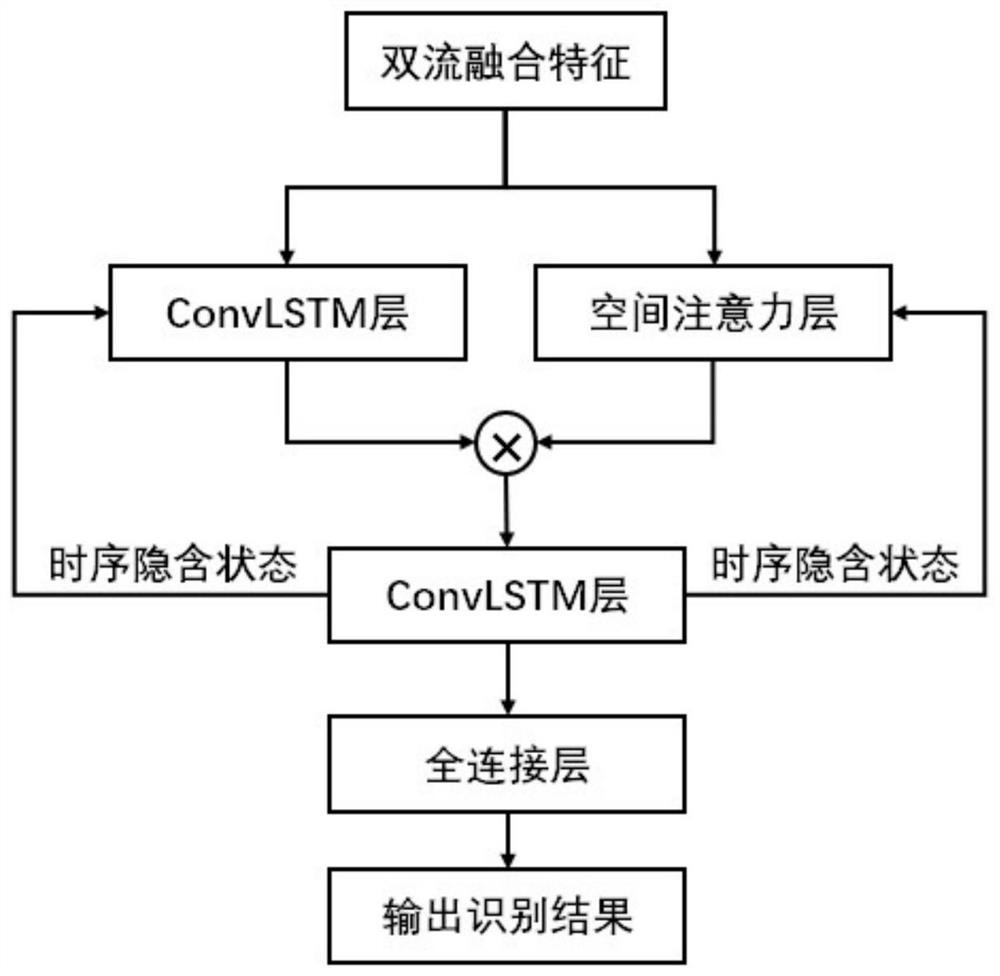

[0036] 2) Temporally guided spatial attention. This module uses the temporal features output by ConvLSTM to guide the spatial attention module in assigning different weights to different spatial regions of the feature map, steering the network toward the moving regions. Finally, the recognized category and its score are output from the weighted features.
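The weighting idea in this step can be sketched as follows. The ConvLSTM itself is not implemented here: `temporal_feat` is assumed to be its output for one time step, and the scoring rule (a per-position linear projection followed by a softmax over all spatial positions) is an assumption chosen for illustration, since the exact formulation is not given in the text above. The names `spatial_attention`, `apply_attention`, `w`, and `b` are hypothetical.

```python
import numpy as np

def spatial_attention(temporal_feat: np.ndarray, w: np.ndarray, b: float = 0.0) -> np.ndarray:
    """Turn temporal features into one weight per spatial position.

    temporal_feat: (H, W, C) map, assumed to come from a ConvLSTM.
    w: (C,) projection vector, equivalent to a 1x1 convolution with one output channel.
    Returns an (H, W) map of non-negative weights that sum to 1.
    """
    scores = temporal_feat @ w + b       # (H, W) per-position score
    scores = scores - scores.max()       # subtract the max for numerical stability
    exp = np.exp(scores)
    return exp / exp.sum()               # softmax over all spatial positions

def apply_attention(features: np.ndarray, attn: np.ndarray):
    """Weight an (H, W, D) feature map by an (H, W) attention map.

    Returns the weighted map and its spatial sum, a (D,) vector that a
    classifier could consume to produce category scores.
    """
    weighted = features * attn[..., None]
    return weighted, weighted.sum(axis=(0, 1))
```

Positions where the temporal features respond strongly receive larger weights, so the pooled vector is dominated by the moving regions and static background contributes little.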

[0037] Specifica...
