Remote Sensing Image Classification Method Based on Deep Fusion Convolutional Neural Network

The invention applies convolutional neural network and remote sensing image technology to the field of image classification. It addresses low classification accuracy and remote sensing image feature extraction that is either too narrow or redundant, with the effect of improving feature expression ability, avoiding over-fitting, and ensuring robustness.

Examples

Embodiment 1

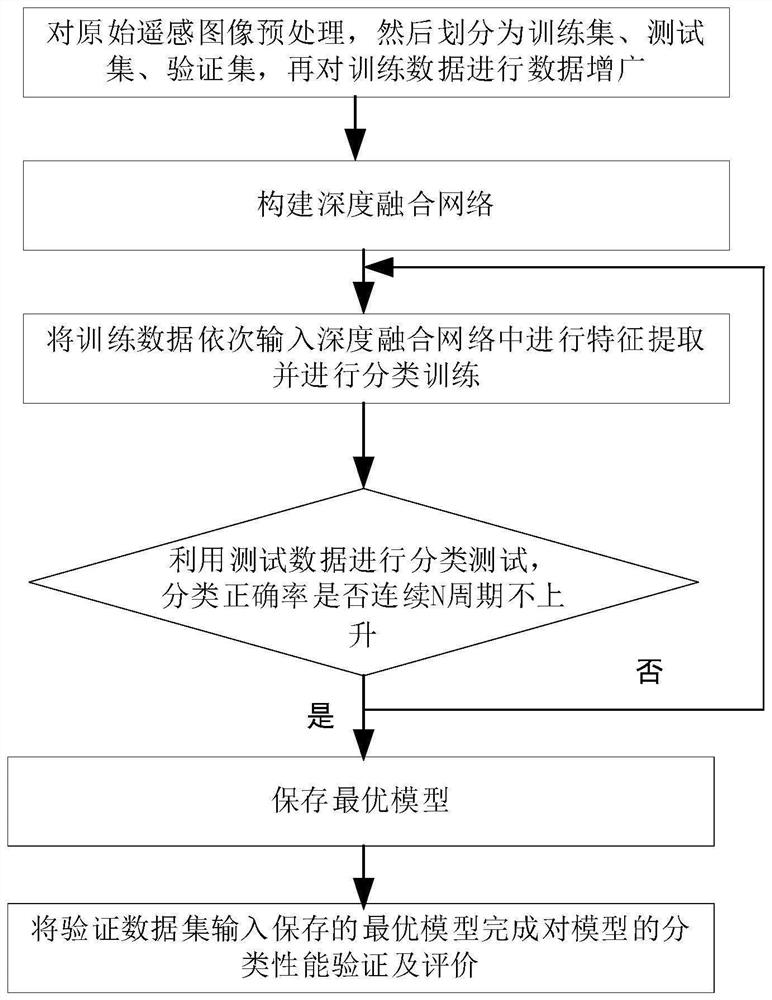

[0046] Embodiment 1: Referring to Figures 1 and 2, a remote sensing image classification method based on a deep fusion convolutional neural network comprises the following steps:

[0047] (1) Build a data set from the original remote sensing images and preprocess them. Divide the preprocessed images into a training set, a test set and a validation set, add category labels to the images of each category in the training set, and then perform data augmentation on the training set to obtain the training data;

[0048] (2) Construct a deep fusion convolutional neural network;

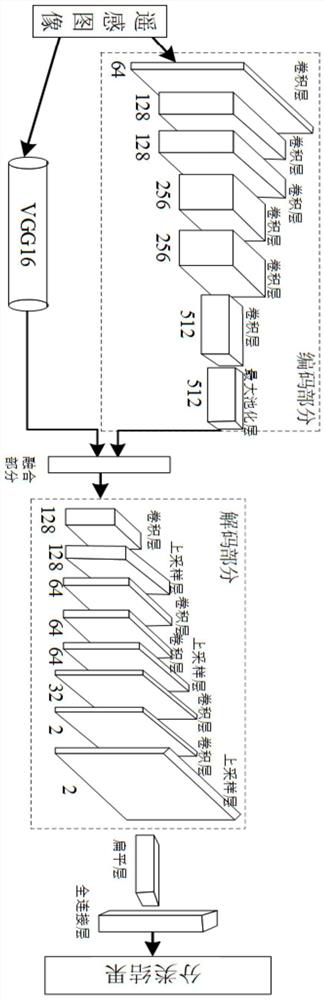

[0049] The deep fusion convolutional neural network includes an encoder-decoder model, a VGG16 model, a fusion part, a flatten layer and a fully connected layer. The encoder-decoder model includes an encoding part and a decoding part;

[0050] The VGG16 model is used to extract the deep features of the image;

[0051] The encoding part includes a multi-layer convolutional la...
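The data set split described in step (1) can be sketched as follows. This is only a minimal illustration: the helper name `split_dataset`, the 60/20/20 fractions, the fixed seed and the file names are all assumptions, since the text does not state how the split is performed.

```python
import random

def split_dataset(items, train_frac=0.6, test_frac=0.2, seed=0):
    """Shuffle items and split them into training, test and validation subsets.

    The fractions are illustrative assumptions; the source does not state them.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_test = int(len(shuffled) * test_frac)
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    val = shuffled[n_train + n_test:]  # remainder becomes the validation set
    return train, test, val

# Hypothetical file names paired with integer category labels.
samples = [(f"img_{i}.png", i % 3) for i in range(10)]
train, test, val = split_dataset(samples)
```

Shuffling before slicing keeps each subset from inheriting the ordering of the source directory; a stratified split per category would be a reasonable alternative.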

Embodiment 2

[0060] Embodiment 2: Referring to Figures 1 and 2, this embodiment further refines and limits Embodiment 1. Specifically:

[0061] The preprocessing in step (1) normalizes the original remote sensing image by dividing each pixel value by 255. The data augmentation applies horizontal mirroring, rotation and scaling to the images in the training set.
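A minimal NumPy sketch of the normalization and the horizontal mirroring is given below. The function names and the tiny test image are assumptions made for illustration, and rotation and scaling are omitted; the text does not say which library the method uses.

```python
import numpy as np

def normalize(image):
    """Scale 8-bit pixel values into [0, 1] by dividing by 255."""
    return image.astype(np.float32) / 255.0

def horizontal_mirror(image):
    """Flip an (H, W, C) image left to right."""
    return image[:, ::-1, :]

# Hypothetical 2x3 RGB image with one saturated pixel value.
image = np.arange(2 * 3 * 3, dtype=np.uint8).reshape(2, 3, 3)
image[0, 0, 0] = 255
normalized = normalize(image)
mirrored = horizontal_mirror(normalized)
```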

[0062] The upsampling layer uses the nearest-neighbor method to increase the image size.
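Nearest-neighbor upsampling can be illustrated with plain NumPy, as in the sketch below. The function name and the scale factor of 2 are assumptions; the text does not state the factor used in the upsampling layer.

```python
import numpy as np

def upsample_nearest(feature_map, factor=2):
    """Enlarge an (H, W, C) array by repeating each pixel `factor` times
    along the height and width axes."""
    return feature_map.repeat(factor, axis=0).repeat(factor, axis=1)

# Hypothetical 2x2 single-channel feature map.
fm = np.array([[1, 2], [3, 4]], dtype=np.float32).reshape(2, 2, 1)
up = upsample_nearest(fm)
```

Each input value is copied into a `factor` by `factor` block, so the method adds no learnable parameters and introduces no new pixel values.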

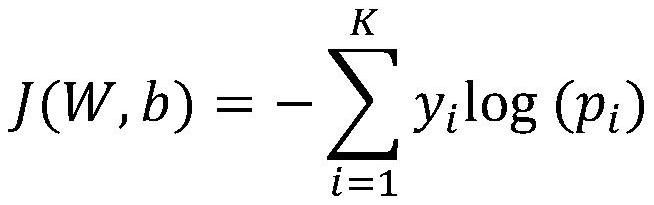

[0063] In step (3), the cross-entropy loss function J(W,b) is:

[0064]

[0065]

[0066] where p_i is the normalized probability output by the softmax function for the i-th sample in the fully connected layer, K is the number of categories, i is the i-th sample, j is the j-th sample, e is the base of the exponential function, x_i is the output value of the fully connected layer for the i-th sample, x_j is the output value of the fully connected layer ...
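The formula bodies of [0064] and [0065] are not reproduced here. For orientation only, the standard softmax and cross-entropy definitions that are consistent with the symbols p_i, x_i, x_j, K and e are commonly written as below. The one-hot label $y_i$ is an assumed symbol that does not appear in the visible text, the indices i and j run over the K outputs of the fully connected layer in this standard form, and the patent's exact notation may differ.

```latex
p_i = \frac{e^{x_i}}{\sum_{j=1}^{K} e^{x_j}},
\qquad
J(W,b) = -\sum_{i=1}^{K} y_i \log p_i
```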

Embodiment 3

[0067] Embodiment 3: Referring to Figures 1 and 2, this embodiment further refines and limits Embodiment 2.

[0068] The preprocessing in step (1) normalizes the original remote sensing image by dividing each pixel value by 255. This preprocessing makes data storage and processing more efficient and improves the convergence rate of model training.

[0069] The data augmentation performs horizontal mirroring, rotation and scaling on the images in the training set. Specifically: (1) Horizontal mirroring flips the training images horizontally; (2) Rotation lets the network learn rotation-invariant features during training. Because targets may appear in different poses, rotation compensates for the limited range of object poses in the training samples. Here the rotation angle is set to 10 degrees; (3) scal...
