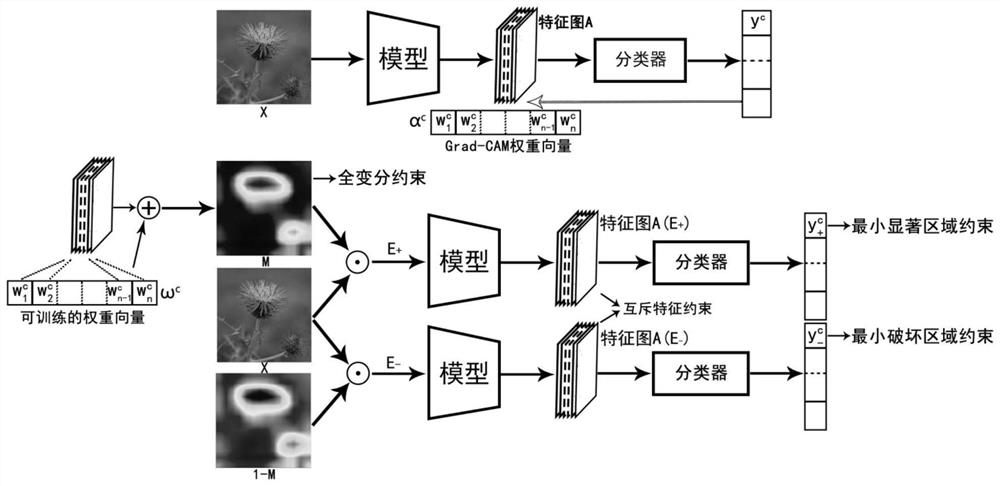

Feature Visualization Method for Deep Neural Networks Based on Constrained-Optimization Class Activation Mapping

The method applies constrained optimization to class activation mapping for feature visualization of deep neural networks. It addresses problems such as weak class discrimination and high noise, and achieves strong class discrimination, less noise, and good visual quality.



Embodiment Construction

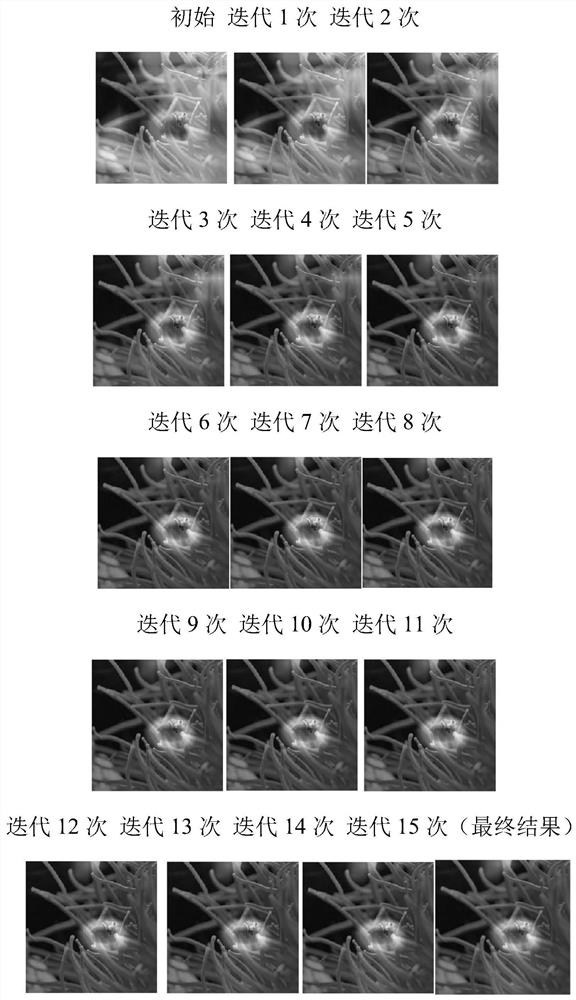

[0041] The present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments.

[0042] A complete example implemented according to the method of the present invention, together with its implementation details, is as follows:

[0043] This embodiment uses the deep neural network VGG19, trained on the ImageNet dataset, as the target model, and is described in detail as follows:

[0044] 1) Obtain a pre-trained model by training or downloading. Torchvision provides a pre-trained VGG19 model on the ImageNet dataset, which can be directly loaded and used.

[0045] 2) Select the feature map to be used, that is, the output of a chosen layer of the VGG19 model that serves as the feature map for subsequent visualization. For example, select the output of "features.34", the last convolutional layer of VGG19.
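One common way to read out the output of a named layer such as "features.34" in PyTorch is a forward hook. The sketch below is illustrative only, and the helper name `capture_feature_map` is hypothetical. It assumes PyTorch 1.9 or newer for `get_submodule`. The stored tensor is cloned because torchvision's VGG applies an in-place ReLU directly after each convolution, which would otherwise overwrite the captured values.

```python
import torch
from torch import nn


def capture_feature_map(model: nn.Module, layer_name: str, x: torch.Tensor):
    """Run one forward pass; return (model output, output of `layer_name`).

    Hypothetical helper. `layer_name` is a dotted module path such as
    "features.34".
    """
    captured = {}

    def hook(_module, _inputs, output):
        # Clone so a following in-place op (e.g. ReLU(inplace=True))
        # cannot modify the stored feature map.
        captured["feature_map"] = output.clone()

    handle = model.get_submodule(layer_name).register_forward_hook(hook)
    try:
        output = model(x)
    finally:
        # Always detach the hook so repeated calls do not stack hooks.
        handle.remove()
    return output, captured["feature_map"]
```

With torchvision's VGG19 and a 1×3×224×224 input, `capture_feature_map(model, "features.34", x)` would return a feature map of shape 1×512×14×14, since four max-pooling layers precede that convolution.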

[0046] 3) An image X to be tested, as shown in Figure 2, is input into the pre-trained model and propagated forward to obt...
