Compression method of convolutional neural network and implementation circuit thereof

A compression method for convolutional neural networks, applied in the field of deep learning accelerator design. It addresses reduced processing speed, model irregularity, and the low efficiency of parallel neural network computation.

Detailed Description of the Embodiments

[0038] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

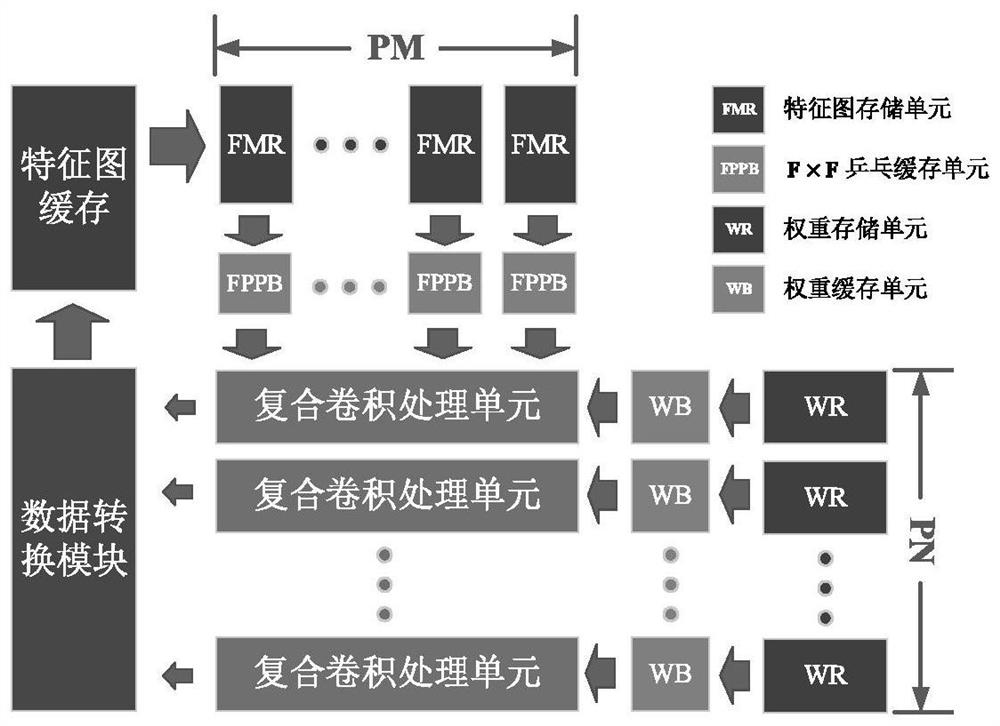

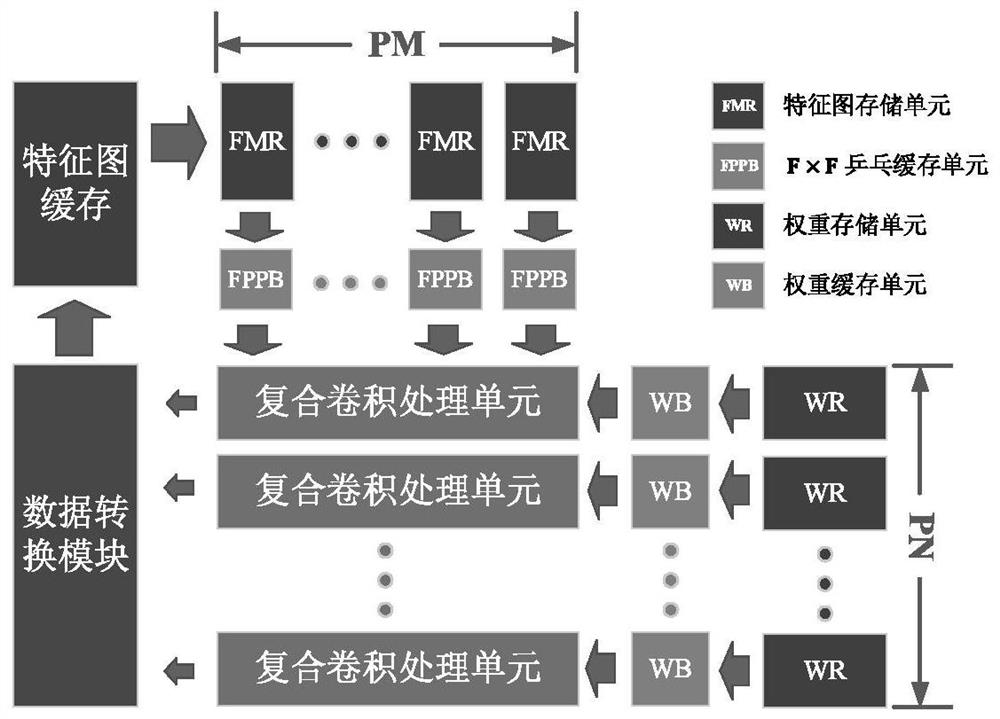

[0039] In a convolutional neural network, different layers have different characteristics. The front layers process large feature maps, which requires a large amount of computation but few weights. The later layers process feature maps whose size has been reduced by the pooling layers, which requires little computation but a large number of weights.
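The contrast between front and later layers can be made concrete with a small calculation. The following is a minimal sketch, not taken from the patent: the helper `conv_layer_cost` and the two layer shapes are hypothetical and chosen only for illustration, and biases are ignored.

```python
def conv_layer_cost(in_ch, out_ch, kernel, out_h, out_w):
    """Return (weight_count, mac_count) for one convolution layer, ignoring biases."""
    weights = in_ch * out_ch * kernel * kernel
    # Every output position uses each weight once, so the number of
    # multiply-accumulates scales with the output feature-map area.
    macs = weights * out_h * out_w
    return weights, macs


# Hypothetical shapes: a front layer on a large feature map and a
# later layer on a feature map already reduced by pooling.
front_weights, front_macs = conv_layer_cost(64, 64, 3, 224, 224)
back_weights, back_macs = conv_layer_cost(512, 512, 3, 14, 14)

print(f"front: {front_weights:,} weights, {front_macs:,} MACs")
print(f"back:  {back_weights:,} weights, {back_macs:,} MACs")
```

With these assumed shapes, the front layer has far fewer weights than the later layer but performs several times more multiply-accumulates, which matches the pattern described in [0039].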

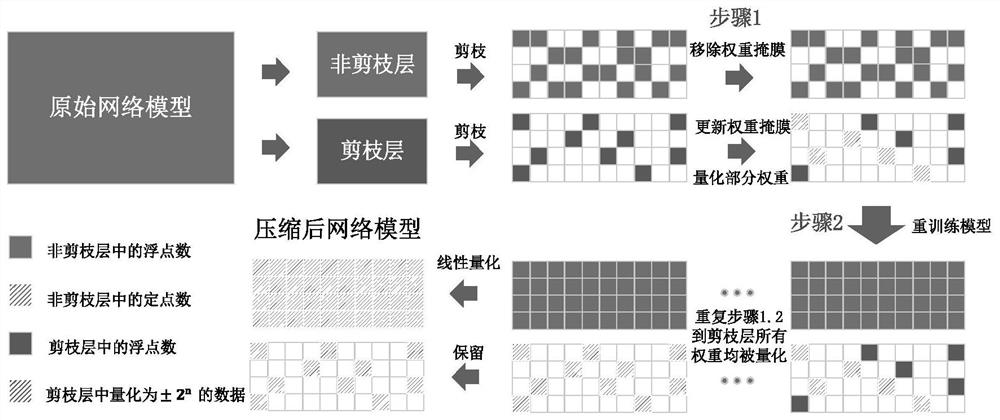

[0040] This embodiment proposes a compression method based on these characteristics of convolutional neural networks. The specific steps are:

[0041] (1) Divide the convolutional neural network into non-pruning layers and pruning layers;

[0042] (2) Set a pruning threshold, prune the weights in the convolutional neural network that are below the pruning threshold, then retrain the convolutional neural network and update the weights that were not pruned, ...
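Steps (1) and (2), as far as they are stated above, can be sketched in NumPy. This is an illustrative sketch under several assumptions rather than the patented procedure: the function names are hypothetical, "less than the pruning threshold" is interpreted as a comparison on the absolute value of each weight, retraining is reduced to a single plain gradient step, and the choice of which layers to prune is passed in by the caller because the visible text does not specify it.

```python
import numpy as np


def prune_by_threshold(weights, threshold):
    """Zero every weight whose magnitude is below threshold; return (pruned, mask)."""
    mask = np.abs(weights) >= threshold  # True where the weight survives
    return np.where(mask, weights, 0.0), mask


def masked_sgd_step(weights, grad, mask, lr=0.01):
    """One retraining step that updates surviving weights and keeps pruned ones at zero."""
    return np.where(mask, weights - lr * grad, 0.0)


def compress(layers, pruning_layer_names, threshold):
    """Prune only the layers named in pruning_layer_names; copy the rest unchanged."""
    pruned, masks = {}, {}
    for name, w in layers.items():
        if name in pruning_layer_names:
            pruned[name], masks[name] = prune_by_threshold(w, threshold)
        else:
            pruned[name] = w.copy()
            masks[name] = np.ones_like(w, dtype=bool)
    return pruned, masks


# Hypothetical two-layer model with random weights, for illustration only.
rng = np.random.default_rng(0)
layers = {"conv1": rng.normal(size=(4, 4)), "fc1": rng.normal(size=(8, 8))}
pruned, masks = compress(layers, {"fc1"}, threshold=0.5)

for name, mask in masks.items():
    print(f"{name}: kept {int(mask.sum())} of {mask.size} weights")
```

Keeping the mask alongside the pruned weights is what lets the retraining step leave pruned positions at zero while still updating the weights that remain.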
