Method for completing visual SLAM closed-loop detection by fusing semantic information

A closed-loop detection technique that fuses semantic information, applied in the field of map creation. It addresses problems such as the error that a visual sensor accumulates over time, and it requires little computation, runs in real time, and is robust.
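One way to fuse semantic information into closed-loop detection is to use it as a filter on loop candidates. If the object labels detected in a keyframe differ strongly from those in the current frame, the keyframe is unlikely to be a revisit of the same place. The sketch below is a hypothetical illustration, not the method claimed here. It assumes each keyframe stores the list of object labels returned by the detector. It compares label-count vectors with cosine similarity and skips temporally adjacent frames. The names `semantic_similarity` and `loop_candidates` and the `threshold` and `min_gap` values are assumptions.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Keyframe = Tuple[int, Sequence[str]]  # (frame_id, detected object labels); hypothetical layout


def semantic_similarity(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Cosine similarity between the object-label count vectors of two frames."""
    ca, cb = Counter(labels_a), Counter(labels_b)
    dot = sum(ca[k] * cb[k] for k in ca.keys() & cb.keys())
    norm_a = math.sqrt(sum(v * v for v in ca.values()))
    norm_b = math.sqrt(sum(v * v for v in cb.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a frame with no detections carries no semantic evidence
    return dot / (norm_a * norm_b)


def loop_candidates(current: Keyframe, keyframes: List[Keyframe],
                    threshold: float = 0.8, min_gap: int = 30) -> List[int]:
    """Return ids of earlier keyframes that are semantically similar to the current frame.

    threshold and min_gap are illustrative values, not taken from the source.
    """
    cur_id, cur_labels = current
    return [
        fid for fid, labels in keyframes
        if cur_id - fid >= min_gap  # ignore temporally adjacent frames
        and semantic_similarity(cur_labels, labels) >= threshold
    ]
```

For example, `loop_candidates((100, ["chair", "table"]), [(10, ["chair", "table"]), (90, ["chair", "table"]), (20, ["car"])])` returns `[10]`. Frame 90 is too recent and frame 20 shares no labels. A candidate that passes this check would still need geometric verification, because the same set of objects can appear in different places.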


Embodiment Construction

[0021] The present invention is further described below with reference to the accompanying drawings and embodiments.

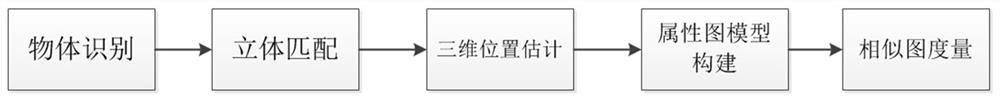

[0022] As shown in image 3, the method for completing visual SLAM closed-loop detection by fusing semantic information includes the following steps:

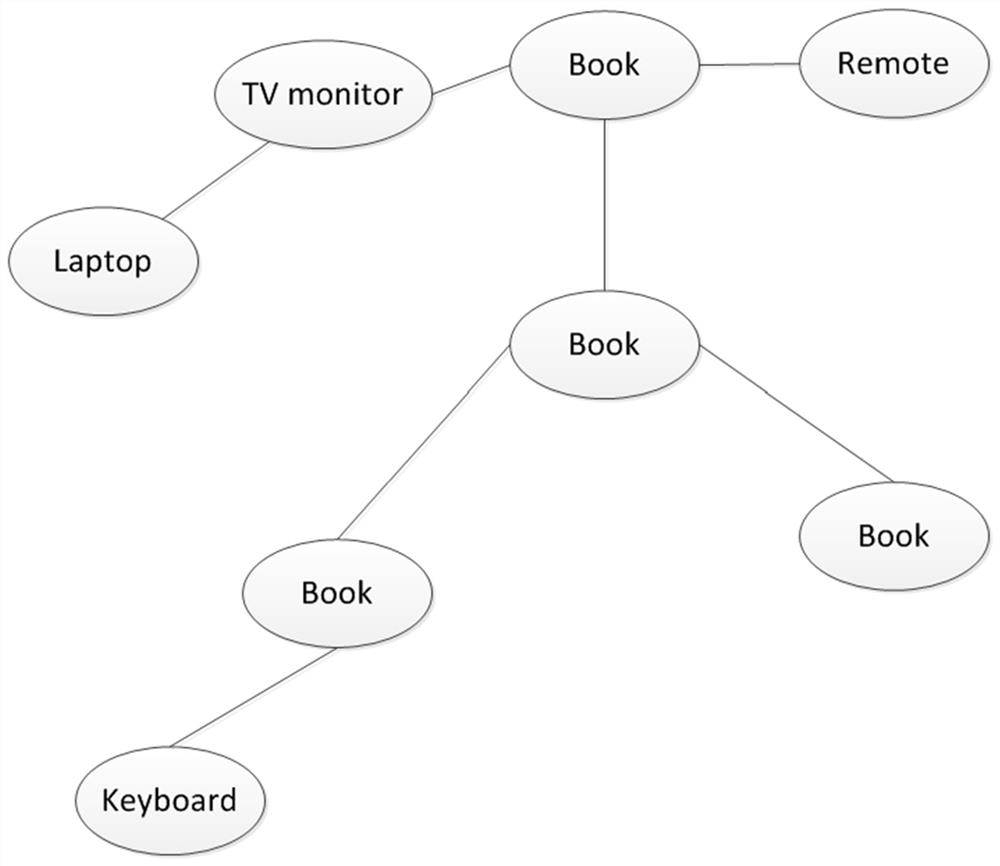

[0023] Object recognition step: an object detector recognizes the objects in the image. The joint training method of YOLO9000 can be used to train the detector on a detection dataset and a classification dataset at the same time. The object detection dataset teaches the detector to localize objects accurately. The classification dataset increases the number of object categories the detector can recognize and makes it more robust. In this step, YOLO9000 is trained on the COCO object detection dataset and the ImageNet image classification dataset, which allows it to detect more than 9000 object categories in real time. This improves the accuracy of object detection.
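The joint-training rule described above can be sketched as a per-sample loss selector. This is a simplified, assumed illustration with several departures from YOLO9000:

- It uses flat class probabilities instead of the WordTree hierarchy that YOLO9000 actually uses.
- It uses a squared-error box loss.
- It omits the objectness term.
- The names `Prediction` and `joint_loss` are hypothetical.

A detection sample, which has a ground-truth box, trains both localization and classification on the prediction that best overlaps the box. A classification sample, which has only a label, trains only the classification term, on the prediction that assigns the highest probability to that label.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


@dataclass
class Prediction:
    box: Box
    class_probs: Dict[str, float]  # flat per-class probabilities (simplification)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def joint_loss(preds: List[Prediction], gt_class: str,
               gt_box: Optional[Box] = None, eps: float = 1e-9) -> float:
    """Loss for one labelled object drawn from either dataset type."""
    if gt_box is not None:
        # Detection-dataset sample: localization + classification terms.
        best = max(preds, key=lambda p: iou(p.box, gt_box))
        loc = sum((pb - gb) ** 2 for pb, gb in zip(best.box, gt_box))
        cls = -math.log(best.class_probs.get(gt_class, 0.0) + eps)
        return loc + cls
    # Classification-dataset sample: there is no box, so only the
    # classification term is used, on the most confident prediction.
    best = max(preds, key=lambda p: p.class_probs.get(gt_class, 0.0))
    return -math.log(best.class_probs.get(gt_class, 0.0) + eps)
```

Because a classification image never contributes a localization error, the large ImageNet label set can expand the category vocabulary without corrupting the box regression learned from COCO.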

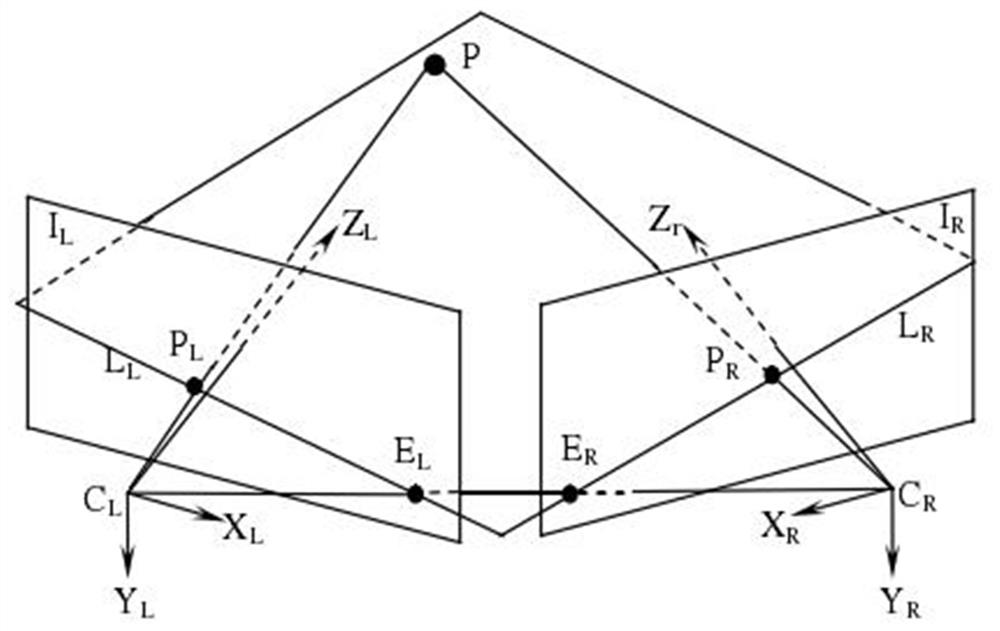

[0024] Stereo matching step: select ...
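For a rectified stereo pair, stereo matching generally produces a disparity d for each matched pixel. The pixel's depth then follows from the pinhole model as Z = f·B/d, where f is the focal length in pixels and B is the baseline. The sketch below shows only this standard relation as background. It does not reproduce the selection rule of this step. The function name `triangulate`, the intrinsics (`fx`, `fy`, `cx`, `cy`) and the baseline are placeholder assumptions.

```python
from typing import Tuple


def triangulate(u: float, v: float, disparity_px: float,
                fx: float, fy: float, cx: float, cy: float,
                baseline_m: float) -> Tuple[float, float, float]:
    """Back-project a matched left-image pixel to a 3-D point in the left camera frame.

    Assumes a rectified pair, so matches lie on the same image row and
    disparity = u_left - u_right.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    z = fx * baseline_m / disparity_px  # Z = f * B / d
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return x, y, z
```

With fx = fy = 500, cx = 320, cy = 240 and a 0.1 m baseline, a match at the principal point with a 50-pixel disparity lies 1 m in front of the camera. `triangulate(320, 240, 50, 500, 500, 320, 240, 0.1)` returns `(0.0, 0.0, 1.0)`.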
