Cross-modal image multi-style subtitle generation method and system

A multi-style, cross-modal technology applied in neural learning methods, character and pattern recognition, biological neural network models, and related fields.

Examples

Embodiment 1

[0035] This embodiment provides a cross-modal image multi-style subtitle generation method.

[0036] A cross-modal image multi-style subtitle generation method, including:

[0037] S101: Acquire an image for which subtitles are to be generated;

[0038] S102: Input the image into a pre-trained multi-style subtitle generation model, and output the multi-style subtitles of the image. The pre-trained multi-style subtitle generation model is obtained by training based on a generative adversarial network. The training steps include: first training the ability of the multi-style subtitle generation model to express objective image information, and then training the ability of the model to generate stylized subtitles.

[0039] In this application, "cross-modal" refers to going from the image modality to the text modality.
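The two-stage training order described in S102 can be illustrated with a minimal scheduling sketch. This is not the patent's implementation: the class `TwoStageSchedule`, the function `run_training`, the epoch counts, and the placeholder step functions are all hypothetical names introduced here, and the real adversarial training steps are not reproduced. The sketch only shows that every "factual" (objective image information) epoch runs before any "stylized" epoch.

```python
from dataclasses import dataclass
from typing import Callable, Iterator, List, Tuple


@dataclass
class TwoStageSchedule:
    """Hypothetical schedule: factual training first, stylized training second."""
    factual_epochs: int  # assumed hyperparameter, not given in the source
    style_epochs: int    # assumed hyperparameter, not given in the source

    def phases(self) -> Iterator[Tuple[str, int]]:
        # Stage 1: train the model to express objective image information.
        for epoch in range(self.factual_epochs):
            yield ("factual", epoch)
        # Stage 2: train the model to generate stylized subtitles.
        for epoch in range(self.style_epochs):
            yield ("stylized", epoch)


def run_training(
    schedule: TwoStageSchedule,
    factual_step: Callable[[int], float],
    style_step: Callable[[int], float],
) -> List[Tuple[str, int, float]]:
    """Run one step function per epoch and record (phase, epoch, loss)."""
    history = []
    for phase, epoch in schedule.phases():
        step = factual_step if phase == "factual" else style_step
        history.append((phase, epoch, step(epoch)))
    return history


# Placeholder steps that return a dummy loss; a real step would update
# the generator and discriminator of the adversarial network.
history = run_training(TwoStageSchedule(2, 3), lambda e: 0.0, lambda e: 0.0)
```

Keeping the phase order in one place makes it easy to check that stylized training never starts before the factual stage has finished.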

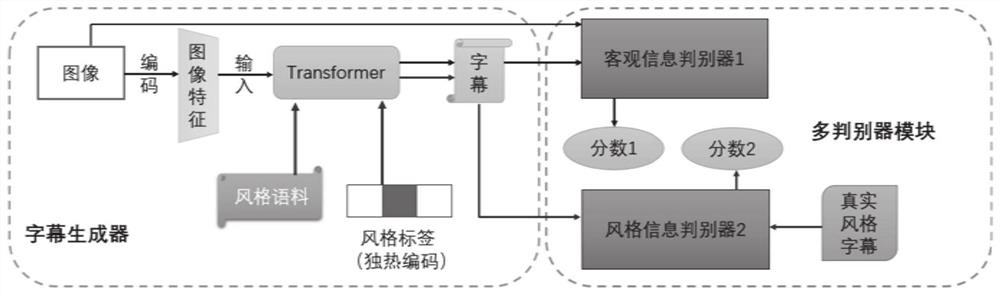

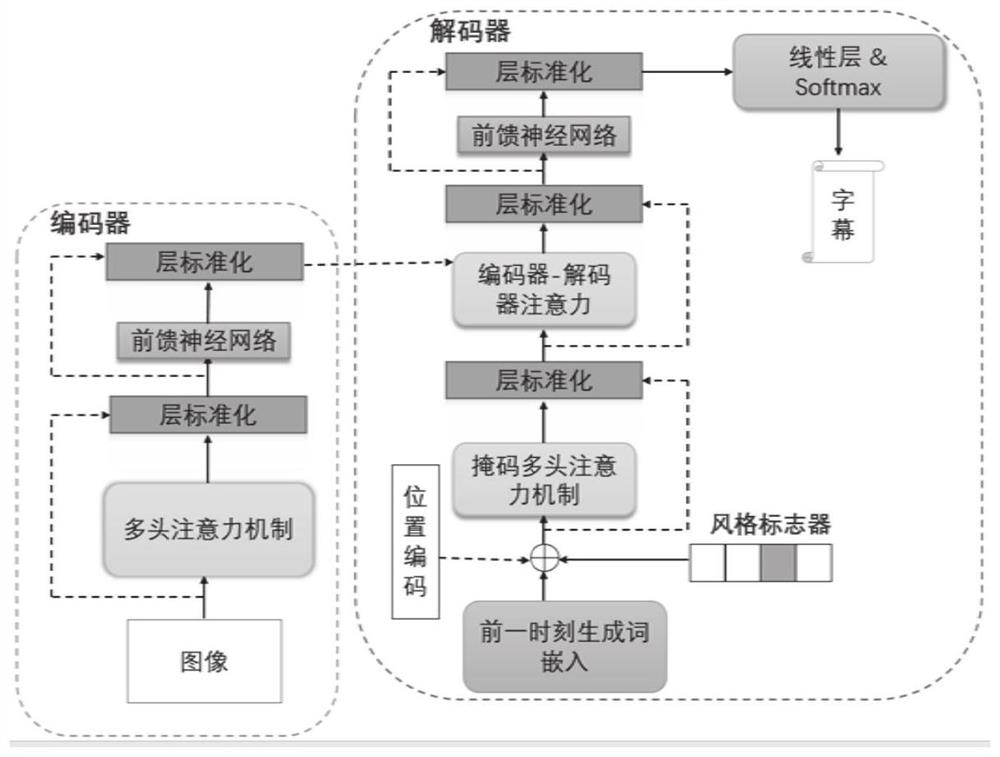

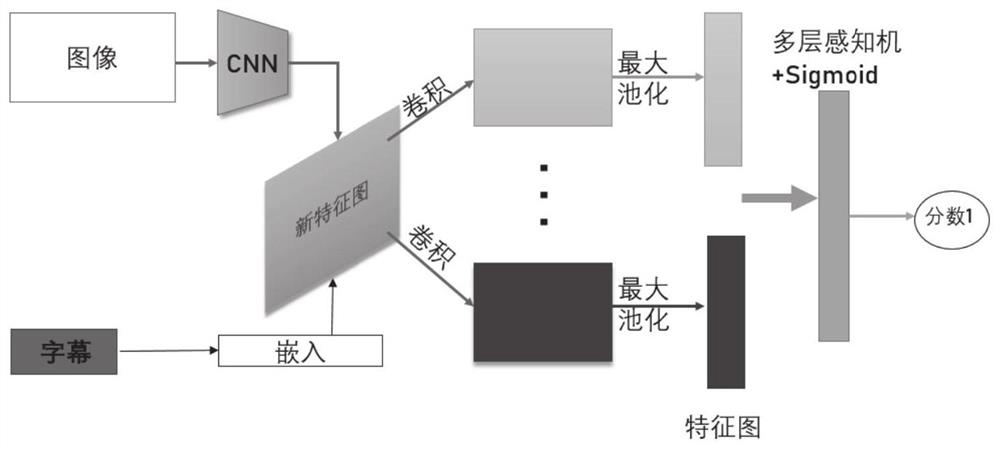

[0040] As one or more embodiments, as shown in Figure 1, the generative adversarial network includes:

[0041] S...

Embodiment 2

[0107] This embodiment provides a cross-modal image multi-style subtitle generation system.

[0108] A cross-modal image multi-style subtitle generation system, including:

[0109] An acquisition module, configured to: acquire an image for which subtitles are to be generated;

[0110] A generation module, configured to: input the image into a pre-trained multi-style subtitle generation model, and output the multi-style subtitles of the image. The pre-trained multi-style subtitle generation model is obtained by training based on a generative adversarial network. The training steps include: first training the ability of the multi-style subtitle generation model to express objective image information, and then training the ability of the model to generate stylized subtitles.
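The split into an acquisition module and a generation module can be sketched as two small classes. This is an illustrative sketch under stated assumptions, not the patented system: `AcquisitionModule`, `GenerationModule`, `stub_model`, the `CaptionModel` signature, and the style names `"humorous"` and `"romantic"` are all hypothetical, and the stub stands in for the pre-trained multi-style subtitle generation model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical interface: a model maps (image bytes, style name) to a subtitle.
CaptionModel = Callable[[bytes, str], str]


class AcquisitionModule:
    """Mirrors S101: acquire the image for which subtitles are to be generated."""

    def acquire(self, source: Callable[[], bytes]) -> bytes:
        image = source()
        if not image:
            raise ValueError("no image data acquired")
        return image


@dataclass
class GenerationModule:
    """Mirrors S102: feed the image to a pre-trained model, one call per style."""
    model: CaptionModel
    styles: List[str]

    def generate(self, image: bytes) -> Dict[str, str]:
        return {style: self.model(image, style) for style in self.styles}


def stub_model(image: bytes, style: str) -> str:
    # Stand-in for the pre-trained model; returns a fixed-format string only.
    return f"[{style}] subtitle for {len(image)}-byte image"


acquisition = AcquisitionModule()
generation = GenerationModule(model=stub_model, styles=["humorous", "romantic"])
subtitles = generation.generate(acquisition.acquire(lambda: b"\x89PNG"))
```

Passing the model in as a callable keeps the module boundary the same whether the generator is a stub, as here, or a trained network.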

[0111] It should be noted here that the above acquisition module and generation module correspond to steps S101 to S102 in Embodiment 1, and the...

Embodiment 3

[0115] This embodiment also provides an electronic device, including: one or more processors, one or more memories, and one or more computer programs. The processor is connected to the memory, and the one or more computer programs are stored in the memory. When the electronic device is running, the processor executes the one or more computer programs stored in the memory, so that the electronic device executes the method described in Embodiment 1 above.

[0116] It should be understood that, in this embodiment, the processor may be a central processing unit (CPU), or another general-purpose processor, a digital signal processor (DSP), an application-specific integrated circuit (ASIC), a field-programmable gate array (FPGA) or other programmable logic device, a discrete gate or transistor logic device, a discrete hardware component, etc. A general-purpose processor may be a microprocessor, or the processor may be any conventional processor, o...
