Point cloud labeling method and labeling equipment

A technology relating to point clouds and point cloud density, applied in the field of unmanned and automatic driving. It addresses problems such as labeling being difficult when the point cloud information of the target object is insufficient, and achieves the effect of extending the labeling area and reducing the difficulty of labeling.

Examples

Embodiment 1

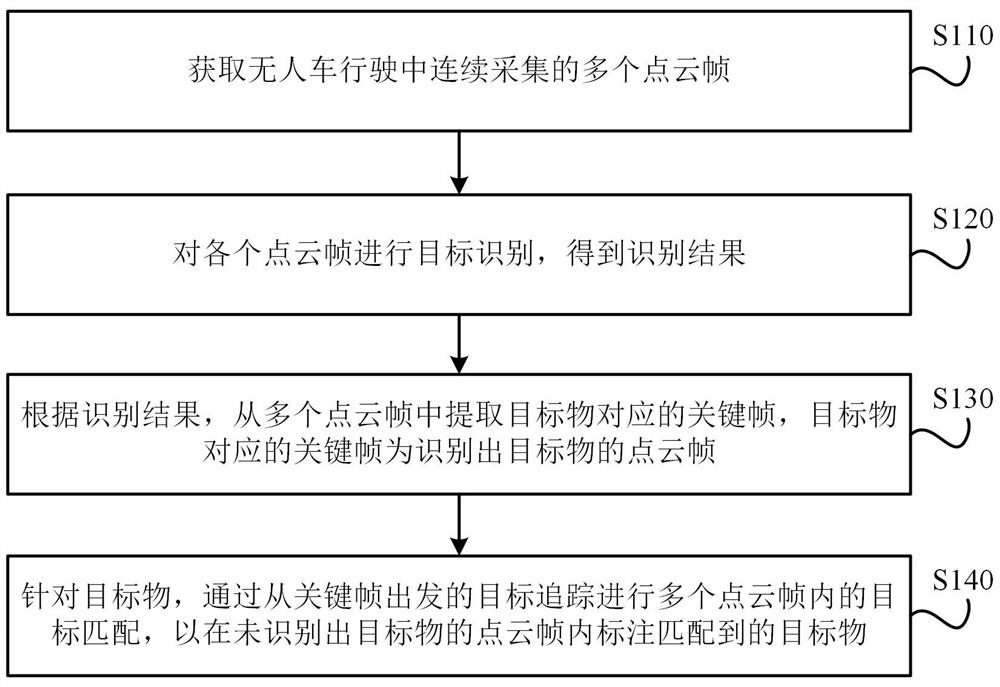

[0057] Figure 3 shows the flow chart of the point cloud labeling method. Referring to Figure 3, the labeling method includes:

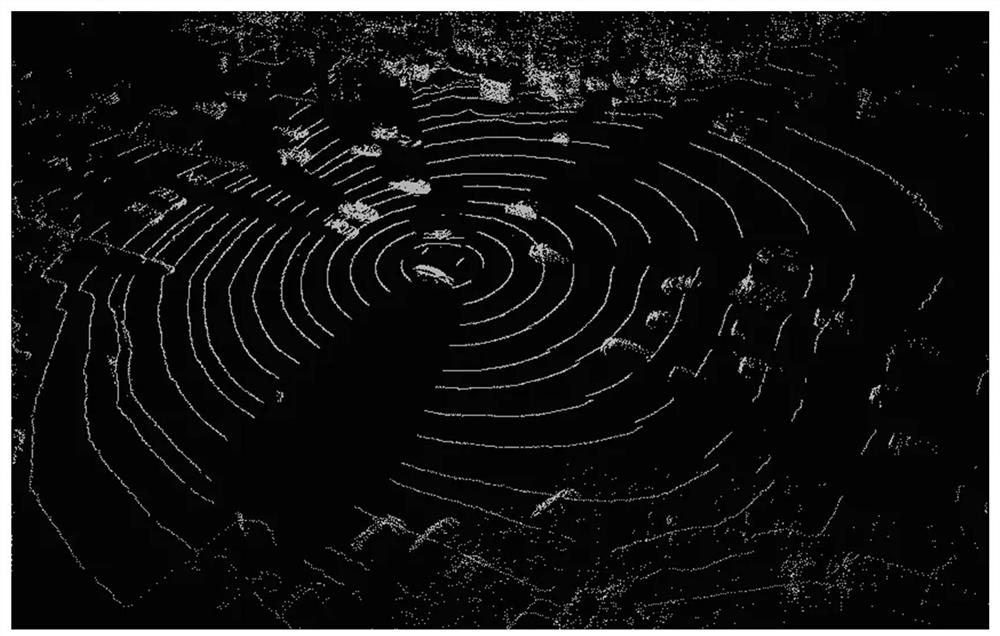

[0058] Step S110, acquiring a plurality of point cloud frames continuously collected during driving of the unmanned vehicle.

[0059] It should be noted that the multiple point cloud frames are collected continuously by the lidar installed on the unmanned vehicle. Assuming the frames are sorted by acquisition time as $P_1, P_2, \ldots, P_n$, none of the frames $P_1, P_2, \ldots, P_n$ shows a jump in the position of the target object. That is, point cloud frame $P_i$ is the transition between point cloud frame $P_{i-1}$ and point cloud frame $P_{i+1}$: the target object moves from the position shown in $P_{i-1}$, through the position shown in $P_i$, to the position shown in $P_{i+1}$.
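The no-jump property described above can be illustrated with a minimal sketch. It assumes that a target position is already available for each frame in acquisition order, and that a jump means a displacement between consecutive frames larger than some threshold. The function name `has_position_jump` and the parameter `max_step_m` are hypothetical and do not come from the patent text.

```python
import math
from typing import Sequence, Tuple

# Hypothetical representation: one (x, y, z) target position per frame,
# listed in acquisition order P_1, P_2, ..., P_n.
Position = Tuple[float, float, float]


def has_position_jump(positions: Sequence[Position], max_step_m: float) -> bool:
    """Return True if the target moves more than max_step_m between
    any two consecutive frames (an assumed definition of a "jump")."""
    for prev, curr in zip(positions, positions[1:]):
        # Euclidean distance between the positions in P_{i-1} and P_i.
        if math.dist(prev, curr) > max_step_m:
            return True
    return False
```

A sequence such as `[(10.0, 0.0, 0.0), (9.0, 0.0, 0.0), (8.0, 0.0, 0.0)]` with `max_step_m=1.5` contains no jump under this definition, whereas moving directly from `(10.0, 0.0, 0.0)` to `(2.0, 0.0, 0.0)` would.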

[...

Embodiment 2

[0073] The point cloud labeling method provided in this embodiment basically adopts the same process as that in the first embodiment above, so it will not be repeated here.

[0074] The difference is as follows. Referring to Figure 4, step S130, extracting the key frame corresponding to the target object from the multiple point cloud frames according to the recognition result, includes:

[0075] Step S131a, according to the recognition result, determine each point cloud frame in which the target is recognized among the multiple point cloud frames as a candidate frame, and obtain multiple candidate frames;

[0076] Step S132a, obtain the separation distance between the target object and the unmanned vehicle in each candidate frame, and obtain multiple separation distances;

[0077] Step S133a, determining the candidate frame corresponding to the minimum value among the plurality of separation distances as the key frame.
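Steps S131a to S133a can be sketched as follows. This is a minimal illustration, assuming the recognition result for each frame is available as a flag plus a separation distance. The `FrameResult` type, its field names, and `select_key_frame_by_distance` are hypothetical names introduced here, not part of the patent.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class FrameResult:
    """Hypothetical per-frame recognition result."""
    frame_id: int                       # index of the frame in acquisition order
    recognized: bool                    # was the target object recognized?
    distance_m: Optional[float] = None  # target-to-vehicle separation, if known


def select_key_frame_by_distance(
    results: Sequence[FrameResult],
) -> Optional[FrameResult]:
    # S131a: every frame in which the target is recognized is a candidate.
    candidates = [r for r in results if r.recognized and r.distance_m is not None]
    if not candidates:
        return None
    # S132a + S133a: compare the separation distances and keep the
    # candidate with the minimum value as the key frame.
    return min(candidates, key=lambda r: r.distance_m)
```

For example, given candidates at 25.0 m, 8.5 m and 12.0 m, the function returns the frame at 8.5 m. If several candidates share the minimum distance, `min` returns the first one in sequence order, which is a choice of this sketch rather than something the text specifies.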

[0078] It should be emphasized that there is a one-to-one correspondence be...

Embodiment 3

[0082] The point cloud labeling method provided in this embodiment basically adopts the same process as that in the first embodiment above, so it will not be repeated here.

[0083] The difference is as follows. Referring to Figure 5, step S130, extracting the key frame corresponding to the target object from the multiple point cloud frames according to the recognition result, includes:

[0084] Step S131a, according to the recognition result, determine each point cloud frame in which the target is recognized among the multiple point cloud frames as a candidate frame, and obtain multiple candidate frames;

[0085] Step S132b, obtaining, for each candidate frame, the interval duration in acquisition time between that candidate frame and the point cloud frame in which the target object is not recognized, to obtain multiple interval durations;

[0086] In step S133b, the candidate frame corresponding to the minimum value among the plurality of interval durations is determined as a key frame.
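The interval-based selection in steps S132b and S133b can be sketched in the same way. The text available here does not say which unrecognized frame serves as the reference, so this sketch assumes a single reference frame whose acquisition time is passed in explicitly. `TimedFrame`, its fields, and `select_key_frame_by_interval` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class TimedFrame:
    """Hypothetical per-frame record with an acquisition timestamp."""
    frame_id: int       # index of the frame in acquisition order
    timestamp_s: float  # acquisition time in seconds
    recognized: bool    # was the target object recognized?


def select_key_frame_by_interval(
    frames: Sequence[TimedFrame],
    unrecognized_time_s: float,
) -> Optional[TimedFrame]:
    # Candidate frames: those in which the target is recognized.
    candidates = [f for f in frames if f.recognized]
    if not candidates:
        return None
    # S132b + S133b: measure each candidate's interval in acquisition time
    # to the (assumed single) unrecognized frame and keep the minimum.
    return min(candidates, key=lambda f: abs(f.timestamp_s - unrecognized_time_s))
```

With an unrecognized frame at 0.0 s and candidates at 0.1 s and 0.2 s, the candidate at 0.1 s is selected as the key frame.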

[0087] It should be emphasized that there is ...
