Classification model training method, system and device and storage medium

A classification model and training method technology, applied in the field of data processing, can solve the problems of insufficient consideration of the effectiveness and diversity of enhanced text, achieve the effects of reducing manual labeling costs, improving F1 parameters, and improving training effects

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

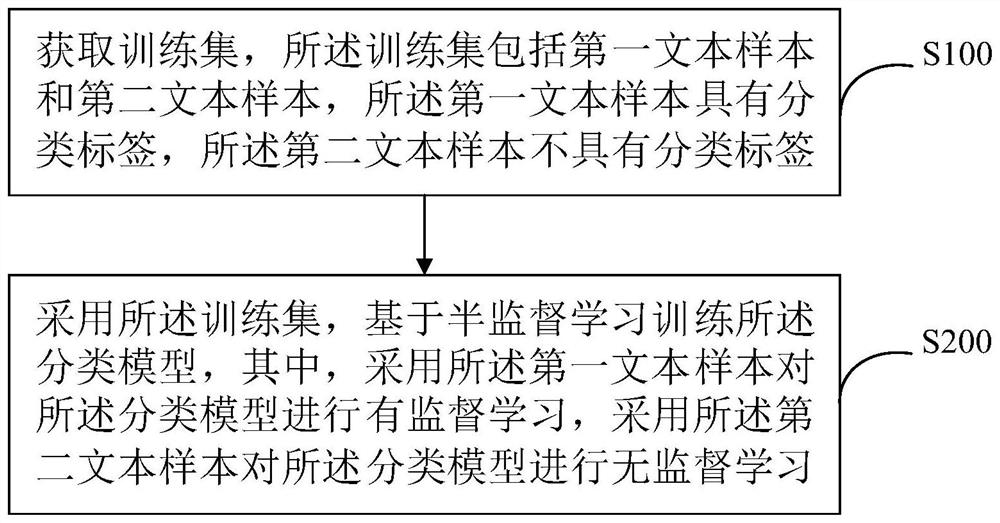

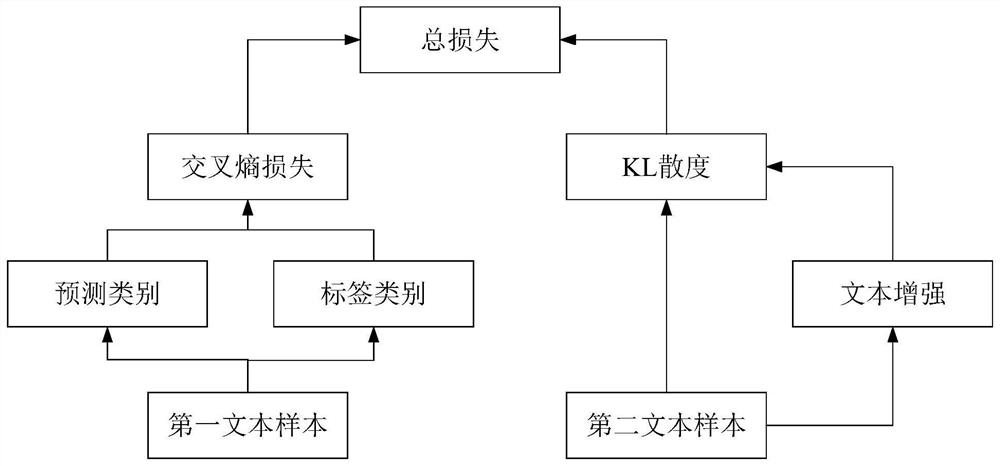

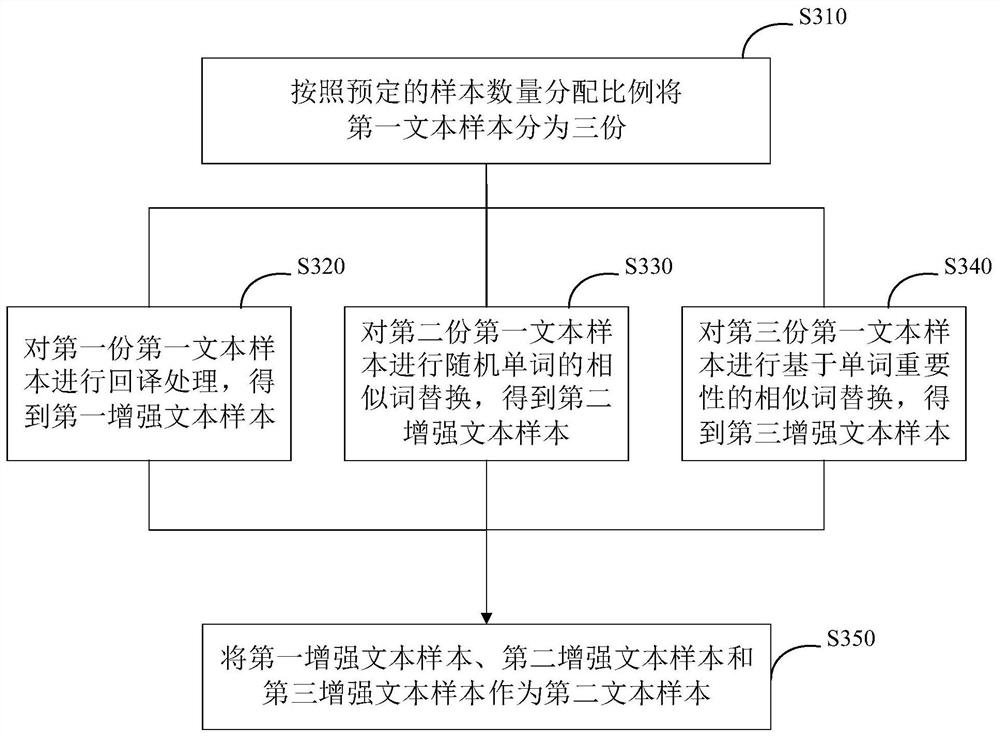

Method used

Image

Examples

Embodiment Construction

[0050] Example embodiments will now be described more fully with reference to the accompanying drawings. Example embodiments may, however, be embodied in many forms and should not be construed as limited to the examples set forth herein; rather, these embodiments are provided so that this disclosure will be thorough and complete and will fully convey the concept of example embodiments to those skilled in the art. The described features, structures, or characteristics may be combined in any suitable manner in one or more embodiments.

[0051] Furthermore, the drawings are merely schematic illustrations of the present disclosure and are not necessarily drawn to scale. The same reference numerals in the drawings denote the same or similar parts, and thus repeated descriptions thereof will be omitted. Some of the block diagrams shown in the drawings are functional entities and do not necessarily correspond to physically or logically separate entities. These functional entities ...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com