Memory prefetch system and method requiring latency and data value correlation

A memory and data-value technology, applied in the field of computing systems, that addresses problems such as limits on operating efficiency

Embodiment Construction

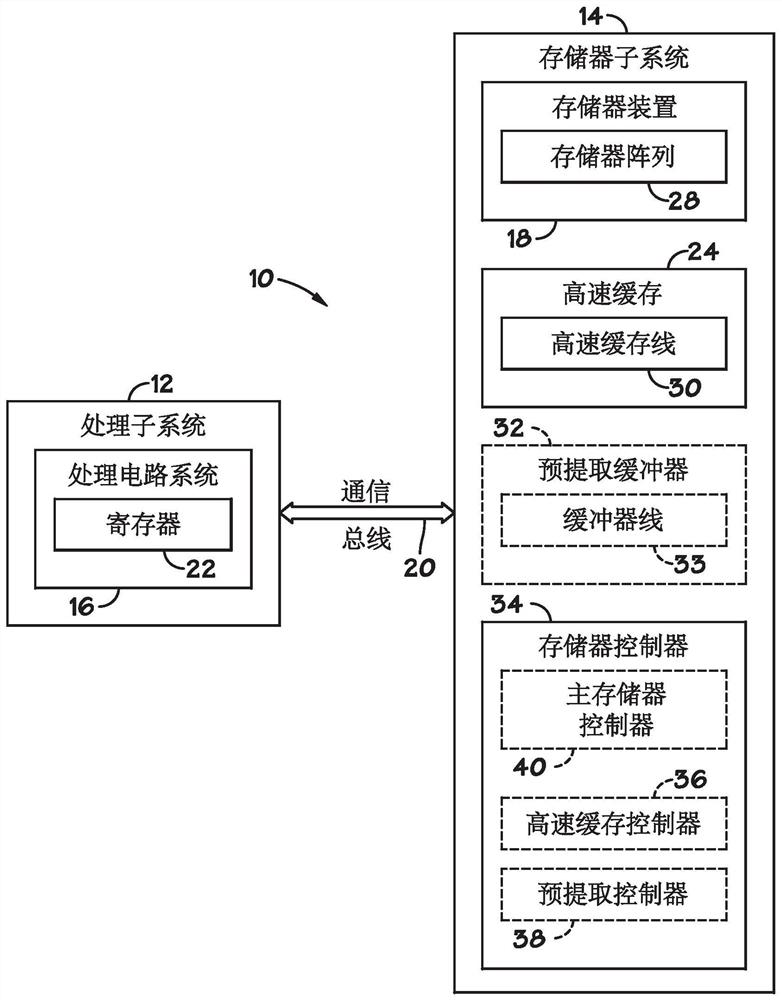

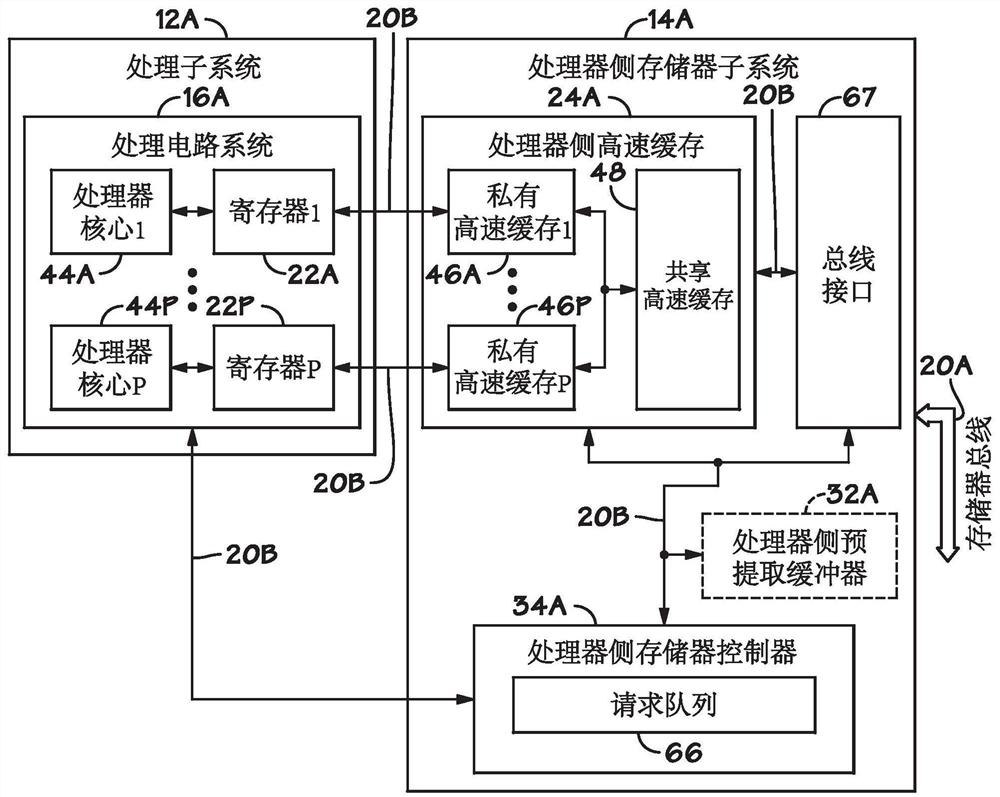

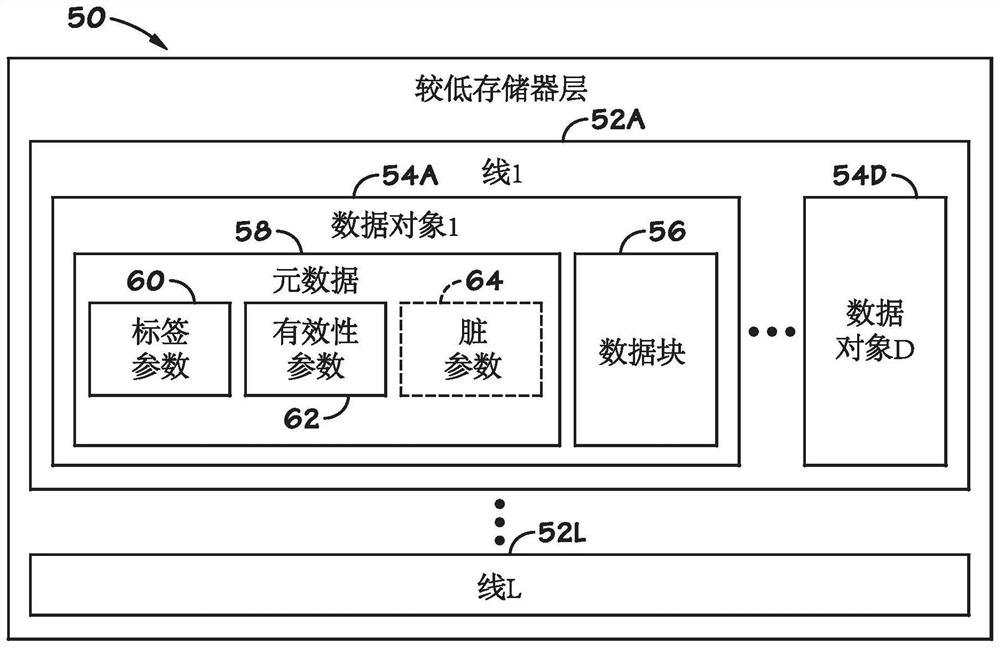

[0021] The present invention provides techniques that facilitate improving the operational efficiency of computing systems, for example, by mitigating architectural features that may otherwise limit operational efficiency. In general, a computing system may include various subsystems, such as a processing subsystem and/or a memory subsystem. In particular, a processing subsystem may include processing circuitry, e.g., implemented in one or more processors and/or one or more processor cores. A memory subsystem may include one or more memory devices (e.g., chips or integrated circuits), e.g., implemented on a memory module, such as a dual in-line memory module (DIMM), and/or organized to implement one or more memory arrays (e.g., arrays of memory cells).

[0022] Generally, during operation of a computing system, processing circuitry implemented in its processing subsystem may perform various operations by executing corresponding instructions, e.g., to determine output data by perform...
