Hierarchical sparse coding method of pruned deep neural network with extremely high compression ratio

A deep neural network and sparse coding technology, applied in the field of layered sparse coding. It addresses several problems at once: procedural methods are difficult to implement in parallel, a high compression ratio and efficient computation are hard to achieve at the same time, and there are consumption and other related problems. Its effects include considerable benefits and a novel, concise layered sparse coding structure.

Embodiment Construction

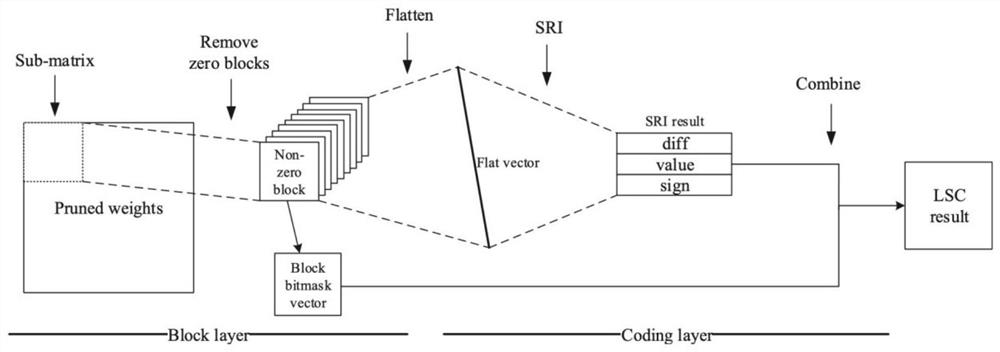

[0032] As shown in Figure 1, this modified embodiment provides a hierarchical sparse coding method for pruned deep neural networks with an extremely high compression ratio. The method maximizes the compression ratio by greatly reducing the amount of metadata, and it uses a novel and effective hierarchical sparse coding framework to optimize the design of the encoding and inference processes for sparse matrices. The proposed sparse coding method, the LSC method, achieves a very high compression ratio and comprises two key layers: the block layer and the coding layer. In the block layer, the sparse matrix is divided into multiple small blocks, and the zero blocks (blocks containing only zeros) are then removed. In the coding layer, a novel SRI method is proposed to further encode the remaining non-zero blocks. In addition, the present invention designs an efficient decoding mechanism for the LSC method to speed up multiplication with the encoded matrix during the inference stage.
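The paragraph above describes the block layer and the coding layer only at a high level, and this excerpt does not specify how the SRI method encodes a block. The following is a minimal Python/NumPy sketch under stated assumptions: square `bs` x `bs` blocks, a matrix whose dimensions are multiples of `bs`, and, in place of SRI, a generic relative-index (gap) encoding with zero-valued fillers. The function names `block_layer`, `encode_tile`, and `encoded_matvec` and the parameters `bs` and `max_gap` are hypothetical. The sketch only illustrates the two ideas in the paragraph: zero blocks cost no storage at all, and multiplication can run directly on the encoded blocks while skipping the removed ones.

```python
import numpy as np


def block_layer(w: np.ndarray, bs: int) -> dict:
    """Split w into bs x bs tiles and keep only tiles with at least one nonzero."""
    rows, cols = w.shape
    if rows % bs or cols % bs:
        raise ValueError("matrix dimensions must be multiples of the block size")
    kept = {}  # (block_row, block_col) -> copy of the non-zero tile
    for bi in range(rows // bs):
        for bj in range(cols // bs):
            tile = w[bi * bs:(bi + 1) * bs, bj * bs:(bj + 1) * bs]
            if np.any(tile):  # all-zero tiles are dropped entirely
                kept[(bi, bj)] = tile.copy()
    return kept


def encode_tile(tile: np.ndarray, max_gap: int):
    """Generic relative-index encoding (an assumption, NOT the patent's SRI method).

    Nonzeros are visited in row-major order and stored as (gap, value),
    where gap is the distance from the previously stored position. A gap
    larger than max_gap is bridged with filler entries of value 0.
    """
    if not 1 <= max_gap <= 255:
        raise ValueError("max_gap must fit in a uint8")
    flat = tile.ravel()
    gaps, vals = [], []
    prev = -1
    for pos in np.flatnonzero(flat):
        gap = int(pos) - prev
        while gap > max_gap:  # insert zero-valued fillers for long jumps
            gaps.append(max_gap)
            vals.append(0.0)
            gap -= max_gap
        gaps.append(gap)
        vals.append(flat[pos])
        prev = int(pos)
    return np.array(gaps, dtype=np.uint8), np.array(vals, dtype=np.float64)


def encoded_matvec(encoded: dict, shape, bs: int, x: np.ndarray) -> np.ndarray:
    """Compute y = W @ x directly from the encoded tiles.

    Tiles removed by the block layer are never visited, and each kept tile
    is decoded on the fly by accumulating its gaps into positions.
    """
    y = np.zeros(shape[0], dtype=np.float64)
    for (bi, bj), (gaps, vals) in encoded.items():
        pos = -1
        for g, v in zip(gaps, vals):
            pos += int(g)
            r, c = divmod(pos, bs)  # row/column inside the bs x bs tile
            y[bi * bs + r] += v * x[bj * bs + c]
    return y


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.standard_normal((8, 8))
    w[np.abs(w) < 1.2] = 0.0  # crude magnitude pruning, for the demo only
    w[:4, 4:] = 0.0           # force at least one all-zero 4x4 tile
    tiles = block_layer(w, bs=4)
    encoded = {k: encode_tile(t, max_gap=3) for k, t in tiles.items()}
    x = rng.standard_normal(8)
    assert np.allclose(encoded_matvec(encoded, w.shape, 4, x), w @ x)
    print("kept tiles:", sorted(tiles))
```

In this sketch the only metadata per kept block is its block coordinate plus one small gap per stored value; a smaller `max_gap` shrinks each index but may require more fillers. How the actual SRI method handles this trade-off is not stated in this excerpt.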

[0033] Specifically, the method includes the followi...
