Rope skipping posture and number recognition method based on computer vision

A computer vision recognition technology, applied in computer components, computing, neural network learning methods and related fields, that addresses problems such as heavy dependence on human counters and the limited concentration of manual counting.

Examples

Embodiment 1

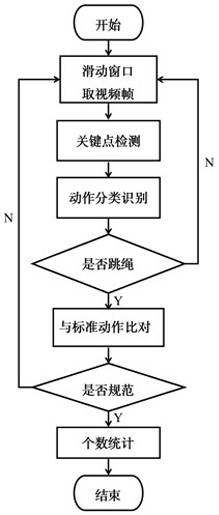

[0051] A computer vision-based rope skipping posture and number recognition method, as shown in Figure 3, comprising the following steps performed in order:

[0052] Step S1: Collect video of a person skipping rope;

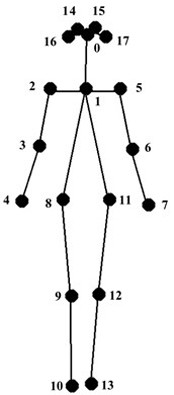

[0053] Step S2: Perform human key point detection on each frame of the video to obtain the coordinates and coordinate confidence scores of the 18 human key points in each frame, numbered sequentially 0, 1, ..., 17;
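
The detector used in step S2 is not named. The 18-point numbering given later in [0074] matches the COCO 18-keypoint layout produced by OpenPose, so a minimal sketch, assuming OpenPose-style JSON output in which each detected person carries a flat `pose_keypoints_2d` list of 18 × (x, y, confidence) values, could stack the per-frame results into a `(T, 18, 3)` array. The function name `load_keypoint_sequence` is hypothetical, and keeping only the first detected person per frame is an assumption.

```python
import json

import numpy as np

NUM_KEYPOINTS = 18  # key points numbered 0, 1, ..., 17


def load_keypoint_sequence(frame_jsons):
    """Stack per-frame OpenPose-style JSON strings into a (T, 18, 3) array.

    Each row holds (x, y, confidence) for one key point. Frames in which
    no person was detected are filled with zeros (confidence 0).
    """
    frames = []
    for text in frame_jsons:
        people = json.loads(text).get("people", [])
        if people:
            # Assumption: the first detected person is the rope skipper.
            flat = people[0]["pose_keypoints_2d"]
            frames.append(np.asarray(flat, dtype=float).reshape(NUM_KEYPOINTS, 3))
        else:
            frames.append(np.zeros((NUM_KEYPOINTS, 3)))
    return np.stack(frames)
```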

[0054] Step S3: Process the key point coordinate data, normalizing the key point coordinates to the range [-0.5, 0.5];
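
Step S3 does not say how the [-0.5, 0.5] range is reached. One common choice in skeleton-based action recognition pipelines such as ST-GCN divides x by the frame width and y by the frame height, then subtracts 0.5 so the image centre maps to (0, 0). A minimal sketch under that assumption, operating on a `(T, 18, 3)` array of (x, y, confidence) rows; the function name `normalize_keypoints` is hypothetical, as is resetting undetected points to (0, 0).

```python
import numpy as np


def normalize_keypoints(seq, width, height):
    """Map pixel coordinates in a (T, n, 3) array to the range [-0.5, 0.5].

    Assumption: x is divided by the frame width and y by the frame height,
    then 0.5 is subtracted. Confidence values are left unchanged.
    Key points with confidence 0 (not detected) are set to (0, 0).
    """
    out = np.array(seq, dtype=float)  # copy so the caller's array is untouched
    out[..., 0] = out[..., 0] / width - 0.5
    out[..., 1] = out[..., 1] / height - 0.5
    missing = out[..., 2] == 0  # boolean mask over (frame, key point)
    out[missing, 0] = 0.0
    out[missing, 1] = 0.0
    return out
```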

[0055] Step S4: Using the selected T keyframes, the key point coordinates in each keyframe and their confidence scores, construct a skeleton-sequence graph. Denote the spatio-temporal graph of the skeleton sequence as $G=(V, E)$, with node set $V=\{v_{ti} \mid t=1,\dots,T;\ i=0,\dots,n-1\}$ and feature vector $F(v_{ti})=\{x_{ti}, y_{ti}, \mathrm{score}_{ti}\}$ for the $i$-th node of frame $t$, where $(x_{ti}, y_{ti})$ is the processed coo...
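
Only the node set and node features of $G$ survive in [0055]; the edge set $E$ is cut off. A minimal sketch, assuming the usual spatio-temporal graph convention (as in ST-GCN) in which spatial edges follow the skeleton bones within a frame and temporal edges link the same key point across consecutive keyframes, could build the pieces as follows. The bone list is the OpenPose 18-point layout used by the ST-GCN reference code, not taken from the patent, and all function names are hypothetical.

```python
import numpy as np

NUM_NODES = 18

# Assumption: spatial edges of the OpenPose 18-point skeleton (ST-GCN layout);
# the patent's own edge set E is not shown in the text.
SKELETON_EDGES = [
    (4, 3), (3, 2), (7, 6), (6, 5), (13, 12), (12, 11), (10, 9), (9, 8),
    (11, 5), (8, 2), (5, 1), (2, 1), (0, 1), (15, 0), (14, 0), (17, 15), (16, 14),
]


def build_spatial_adjacency(num_nodes=NUM_NODES, edges=SKELETON_EDGES):
    """Symmetric adjacency matrix (with self-loops) for one frame's skeleton."""
    adj = np.eye(num_nodes)
    for i, j in edges:
        adj[i, j] = adj[j, i] = 1.0
    return adj


def build_node_features(seq):
    """Rearrange a (T, n, 3) key point array into a (3, T, n) tensor.

    Entry [:, t, i] is the feature vector F(v_ti) = (x_ti, y_ti, score_ti).
    """
    return np.transpose(np.asarray(seq, dtype=float), (2, 0, 1))


def temporal_edges(num_frames, num_nodes=NUM_NODES):
    """Edges linking node i in frame t to the same node i in frame t + 1."""
    return [((t, i), (t + 1, i)) for t in range(num_frames - 1) for i in range(num_nodes)]
```

Storing features channel-first as `(3, T, n)` follows the layout graph convolution libraries commonly expect; a `(T, n, 3)` layout would carry the same information.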

Embodiment 2

[0074] In step S9, the specific set of included angles between feature vectors is: S_A = {An(0)(1)-(1)(5), An(0)(1)-(1)(2), An(1)(2)-(1)(5), An(1)(2)-(2)(3), An(2)(3)-(3)(4), An(1)(5)-(5)(6), An(5)(6)-(6)(7), An(1)(2)-(1)(8), An(1)(5)-(1)(11), An(1)(8)-(8)(9), An(8)(9)-(9)(10), An(1)(11)-(11)(12), An(11)(12)-(12)(13), An(2)(8)-(2)(3), An(5)(11)-(5)(6)}, where the 18 key points are numbered as follows: nose 0, neck 1, right shoulder 2, right elbow 3, right wrist 4, left shoulder 5, left elbow 6, left wrist 7, right hip 8, right knee 9, right ankle 10, left hip 11, left knee 12, left ankle 13, right eye 14, left eye 15, right ear 16, left ear 17.
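
[0074] lists the angle pairs in S_A but not how each angle is computed. A minimal sketch, assuming An(a)(b)-(c)(d) denotes the included angle between the vector from key point a to key point b and the vector from key point c to key point d, computes the 15 angles for one frame as the arccos of the normalized dot product. The direction convention and the NaN returned for zero-length vectors (for example, coincident undetected points) are assumptions; `included_angles` is a hypothetical name.

```python
import numpy as np

# Included-angle set S_A: ((a, b), (c, d)) stands for An(a)(b)-(c)(d).
ANGLE_SET = [
    ((0, 1), (1, 5)), ((0, 1), (1, 2)), ((1, 2), (1, 5)), ((1, 2), (2, 3)),
    ((2, 3), (3, 4)), ((1, 5), (5, 6)), ((5, 6), (6, 7)), ((1, 2), (1, 8)),
    ((1, 5), (1, 11)), ((1, 8), (8, 9)), ((8, 9), (9, 10)), ((1, 11), (11, 12)),
    ((11, 12), (12, 13)), ((2, 8), (2, 3)), ((5, 11), (5, 6)),
]


def included_angles(points):
    """Return the 15 included angles of S_A (radians) for one frame.

    points: (18, 2) array of key point (x, y) coordinates.
    Assumption: (a)(b) is the vector from key point a to key point b.
    """
    pts = np.asarray(points, dtype=float)
    angles = []
    for (a, b), (c, d) in ANGLE_SET:
        u = pts[b] - pts[a]
        v = pts[d] - pts[c]
        denom = np.linalg.norm(u) * np.linalg.norm(v)
        if denom == 0:
            angles.append(np.nan)  # angle undefined for a zero-length vector
            continue
        # Clip guards against rounding pushing the cosine slightly past +/-1.
        angles.append(np.arccos(np.clip(np.dot(u, v) / denom, -1.0, 1.0)))
    return np.array(angles)
```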

[0075] Further, the cosine similarity in step S10 is calculated by computing the cosine of each included angle between the feature vectors, then computing the variance or standard deviation between these cosines and the cosines of the corresponding included angles of the preset feature vectors; the preset threshold is generally taken as 0.68. ...
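
The wording of [0075] leaves the exact comparison open. A minimal sketch, assuming the "variance" is the mean squared difference between the cosines of the current frame's included angles and those of the preset posture (and the "standard deviation" its square root), and assuming a frame matches the preset posture when that deviation does not exceed the threshold 0.68. The function names and the decision direction are hypothetical.

```python
import numpy as np

PRESET_THRESHOLD = 0.68  # threshold value given in the text


def posture_deviation(angles, preset_angles, use_std=True):
    """Compare the cosines of a frame's included angles with a preset posture.

    Assumption: 'variance' = mean squared difference of the two cosine vectors;
    use_std=True returns its square root. NaN angles are ignored.
    """
    cur = np.cos(np.asarray(angles, dtype=float))
    ref = np.cos(np.asarray(preset_angles, dtype=float))
    diff = cur - ref
    diff = diff[~np.isnan(diff)]  # skip angles that could not be computed
    if diff.size == 0:
        return np.inf  # nothing to compare
    msd = float(np.mean(diff ** 2))
    return float(np.sqrt(msd)) if use_std else msd


def matches_preset(angles, preset_angles, threshold=PRESET_THRESHOLD):
    """Assumption: a match means the deviation is at most the threshold."""
    return posture_deviation(angles, preset_angles) <= threshold
```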
