Non-blocking L1 Cache in multi-core SOC

A non-blocking L1 cache for multi-core SOCs, used in computer architecture to address problems such as the mismatch in speed and bandwidth between processors and DRAM.

Embodiment Construction

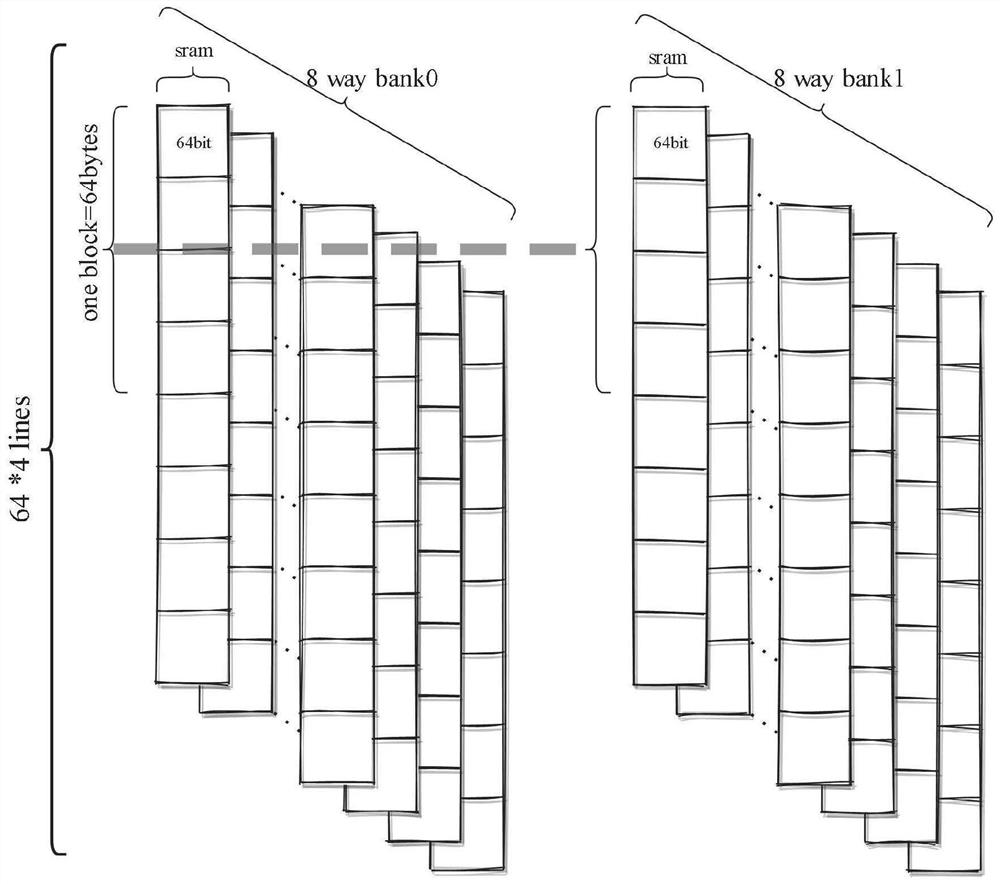

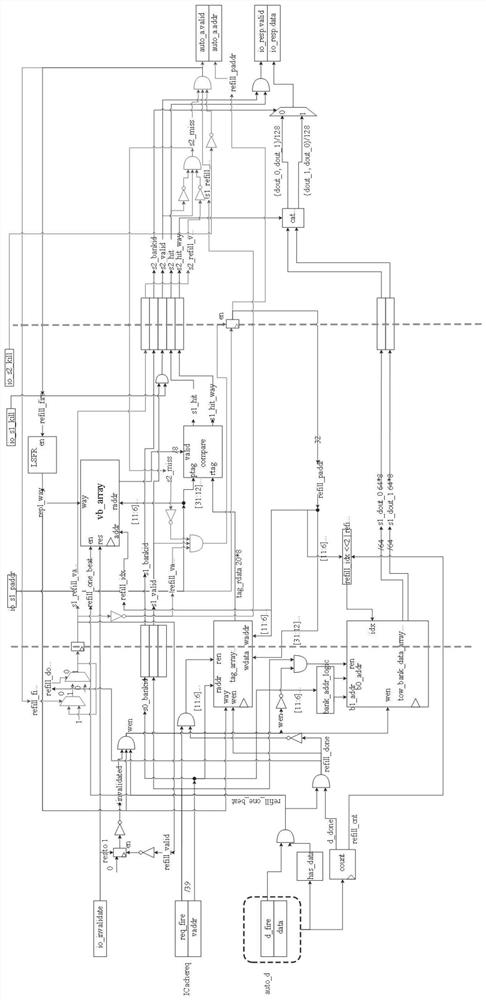

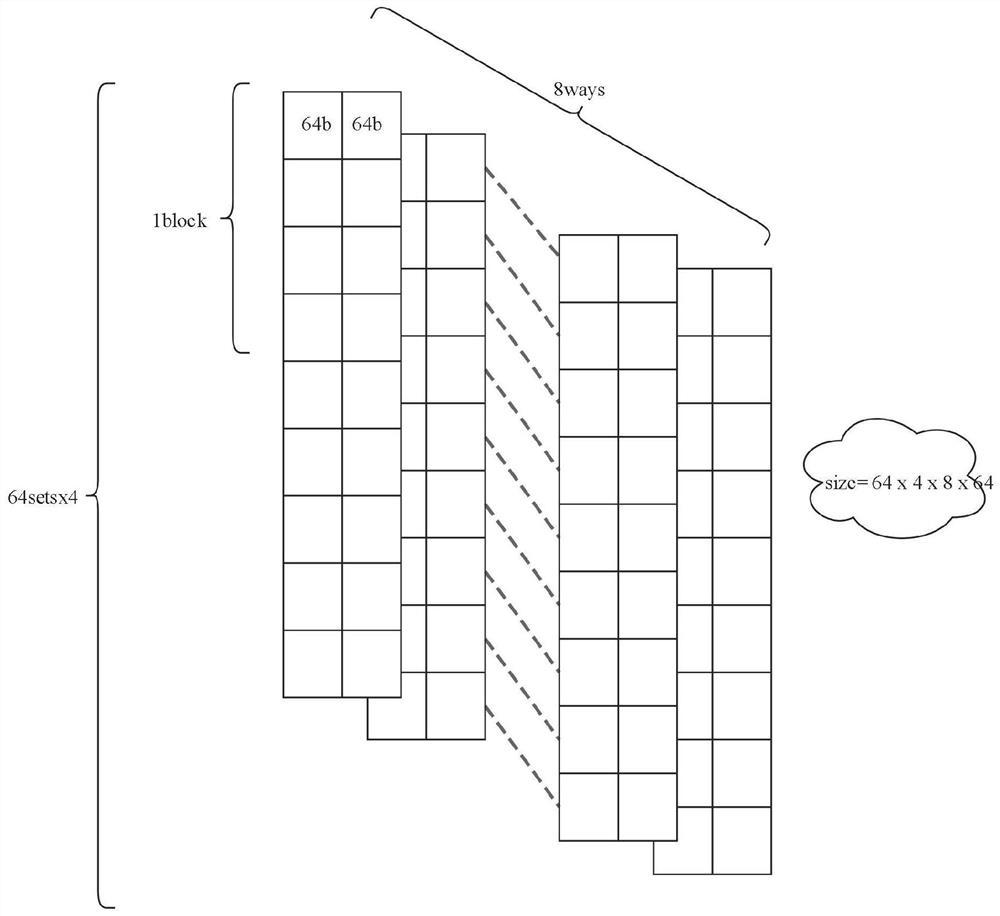

[0032] This embodiment provides a non-blocking L1 Cache in a multi-core SOC. The L1 Cache includes two circuit modules, ICache and DCache. The ICache circuit module is designed as an 8-way set-associative, dual-bank SRAM that serves data accesses from the CPU instruction fetch stage. Specifically, it performs the following steps:

[0033] Accept the request from the instruction fetch stage of the CPU pipeline;

[0034] Split the requested address into two fields: bits 11-6 form idx and bits 31-12 form tag. idx indexes the corresponding set, and tag is compared to determine whether there is a match;

[0035] Use idx to read out the corresponding valid bits and tags. For each of the eight ways whose valid bit is set, compare its tag with the tag requested by the CPU. If one matches, declare a hit and return the corresponding data; if none match, report a miss;

[0036] On a miss, request the data the CPU asked for from the bus;

[0037] ...
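
The lookup in paragraphs [0034] to [0036] can be illustrated with a small software model. This is only a sketch under stated assumptions, not the patented circuit: the names `split_address`, `Way`, and `ICacheModel` are hypothetical, the 64-byte line size is inferred from address bits 5-0 being left over, and the dual-bank organization, the non-blocking behavior, and the replacement policy are not modeled because the text above does not describe them.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

NUM_WAYS = 8                  # 8-way set-associative, per [0032]
INDEX_LO, INDEX_HI = 6, 11    # idx = address bits 11-6, per [0034]
TAG_LO, TAG_HI = 12, 31       # tag = address bits 31-12, per [0034]
NUM_SETS = 1 << (INDEX_HI - INDEX_LO + 1)   # 6 index bits -> 64 sets
TAG_MASK = (1 << (TAG_HI - TAG_LO + 1)) - 1  # 20 tag bits


def split_address(addr: int) -> Tuple[int, int]:
    """Return (tag, idx) for a 32-bit request address."""
    idx = (addr >> INDEX_LO) & (NUM_SETS - 1)
    tag = (addr >> TAG_LO) & TAG_MASK
    return tag, idx


@dataclass
class Way:
    valid: bool = False
    tag: int = 0
    data: bytes = b""


def _empty_sets() -> List[List[Way]]:
    return [[Way() for _ in range(NUM_WAYS)] for _ in range(NUM_SETS)]


@dataclass
class ICacheModel:
    sets: List[List[Way]] = field(default_factory=_empty_sets)

    def lookup(self, addr: int) -> Optional[bytes]:
        """Return the cached line on a hit, or None on a miss ([0035])."""
        tag, idx = split_address(addr)
        for way in self.sets[idx]:
            # Only ways whose valid bit is set take part in the tag compare.
            if way.valid and way.tag == tag:
                return way.data
        return None

    def fill(self, addr: int, line: bytes, way_no: int) -> None:
        """Install a line returned by the bus after a miss ([0036]).

        Assumption: the caller chooses way_no, since the source text
        does not say how a victim way is selected.
        """
        tag, idx = split_address(addr)
        self.sets[idx][way_no] = Way(valid=True, tag=tag, data=line)


if __name__ == "__main__":
    cache = ICacheModel()
    addr = 0x12345678
    print(split_address(addr))
    print(cache.lookup(addr) is None)   # first access misses
    cache.fill(addr, bytes(64), way_no=0)
    print(cache.lookup(addr) is not None)  # same line now hits
```

With these field widths, two addresses map to the same set whenever bits 11-6 agree, and they hit the same line only if bits 31-12 agree as well, which is why the valid bit and the tag are checked together for each of the eight ways.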
