Text generation model training method and device, storage medium and computer equipment

A training method for a text generation model, applied in the fields of computer components, biological neural network models, and computing. It addresses the problem that existing training fails to consider deep-level correlations, so that generated reply texts deviate from the overall logic of a multi-round dialogue and their generation accuracy cannot be guaranteed. The method achieves the effect of improving generation accuracy.

Detailed Description of the Embodiments

[0036] The present invention is described in detail below with reference to the drawings and examples. It should be noted that, provided they do not conflict, the embodiments of the present application and the features of those embodiments may be combined with each other.

[0037] At present, training a deep learning model does not take into account whether the generated reply text is deeply related to the contextual dialogue topics of the multi-round dialogue. As a result, the reply text generated by the deep learning model deviates from the overall logic of the multi-round dialogue, and the generation accuracy of the reply text cannot be guaranteed.

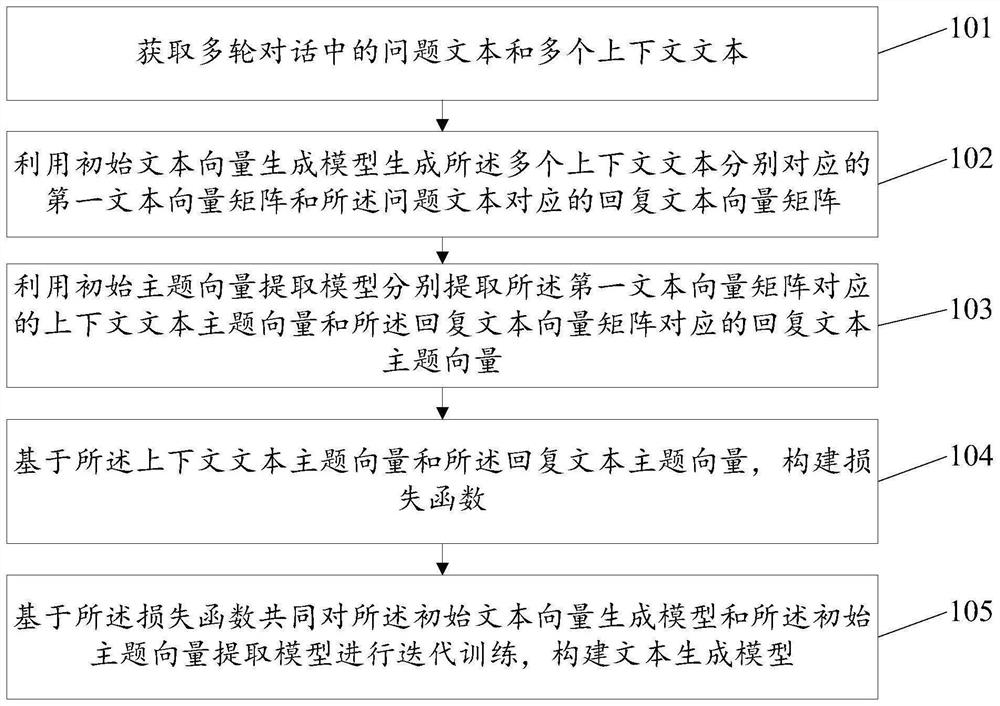

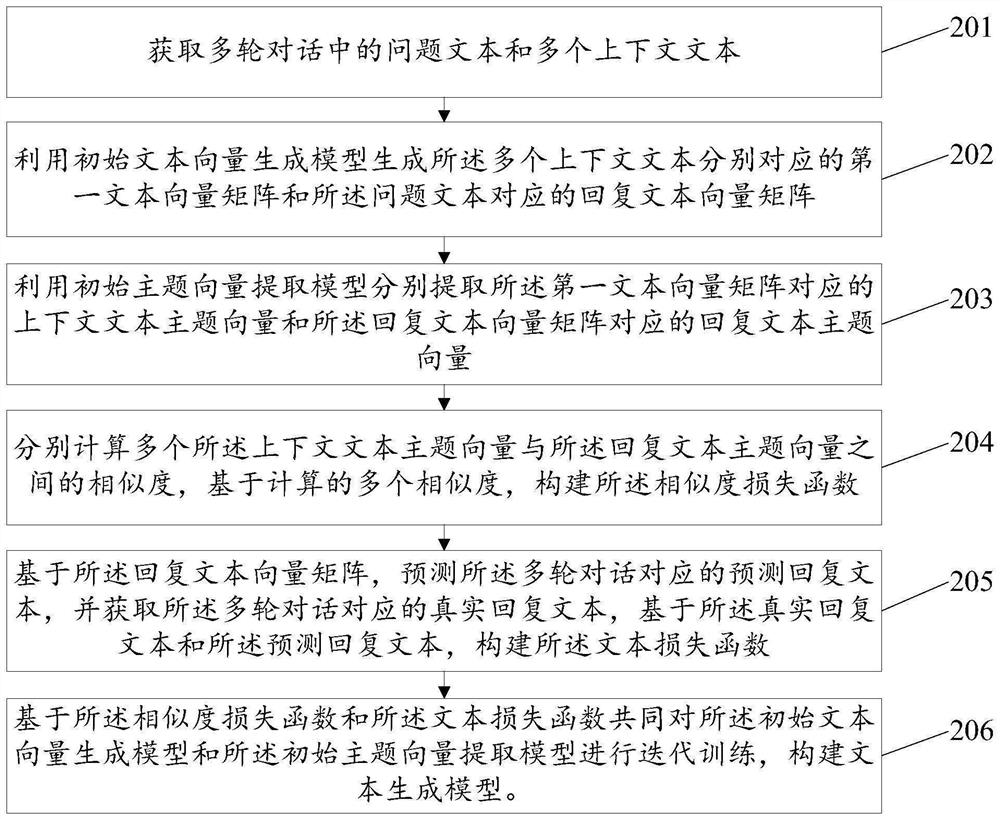

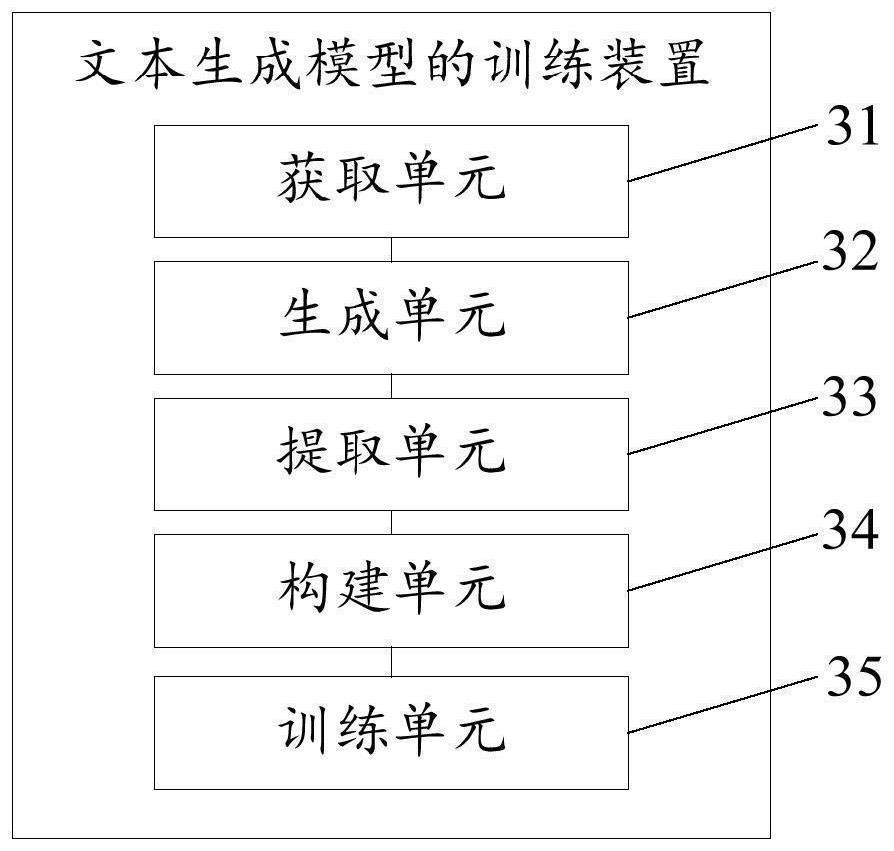

[0038] To solve the above problems, an embodiment of the present invention provides a training method for a text generation model. As shown in Figure 1, the method includes:

[0039] 101. Obtain a question text and multiple context texts from a multi-round dialogue.

[0040] Wherein, at le...
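Step 101 can be illustrated with a minimal sketch of how one training sample might be assembled. The text above does not specify how the question text and the context texts are separated, so the sketch assumes, purely for illustration, that the final turn of the dialogue is the question text and every earlier turn is a context text. The names `DialogueSample` and `split_dialogue` are hypothetical and do not come from the patent.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DialogueSample:
    """One training sample built from a multi-round dialogue (hypothetical structure)."""
    question_text: str        # the turn the model must reply to
    context_texts: List[str]  # the preceding turns, oldest first


def split_dialogue(turns: List[str]) -> DialogueSample:
    """Split a dialogue into a question text and its context texts.

    Assumption (not stated in the source): the last turn is the question,
    and all earlier turns are treated as context.
    """
    if not turns:
        raise ValueError("a dialogue needs at least one turn")
    return DialogueSample(question_text=turns[-1], context_texts=list(turns[:-1]))
```

For example, `split_dialogue(["Hi", "Hello, how can I help?", "What time do you open?"])` yields the question text "What time do you open?" with the two earlier turns as context texts. Keeping the context turns in order preserves the topic flow that the method aims to relate the reply to.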
