Three-dimensional object recognition method combining view importance network and self-attention mechanism

A three-dimensional object recognition technology in the field of computer vision. It addresses the loss of view information for three-dimensional objects, avoiding a decline in recognition accuracy and enhancing feature expression.


Detailed Description of the Embodiments

[0026] The invention is implemented with the open-source deep learning framework PyTorch, and the network model is trained on an NVIDIA RTX 3090 GPU.

[0027] The composition of each module in the method of the present invention is described below with reference to the accompanying drawings and specific embodiments. Equivalent modifications of the present invention made by those skilled in the art all fall within the scope defined by the appended claims of the present application.

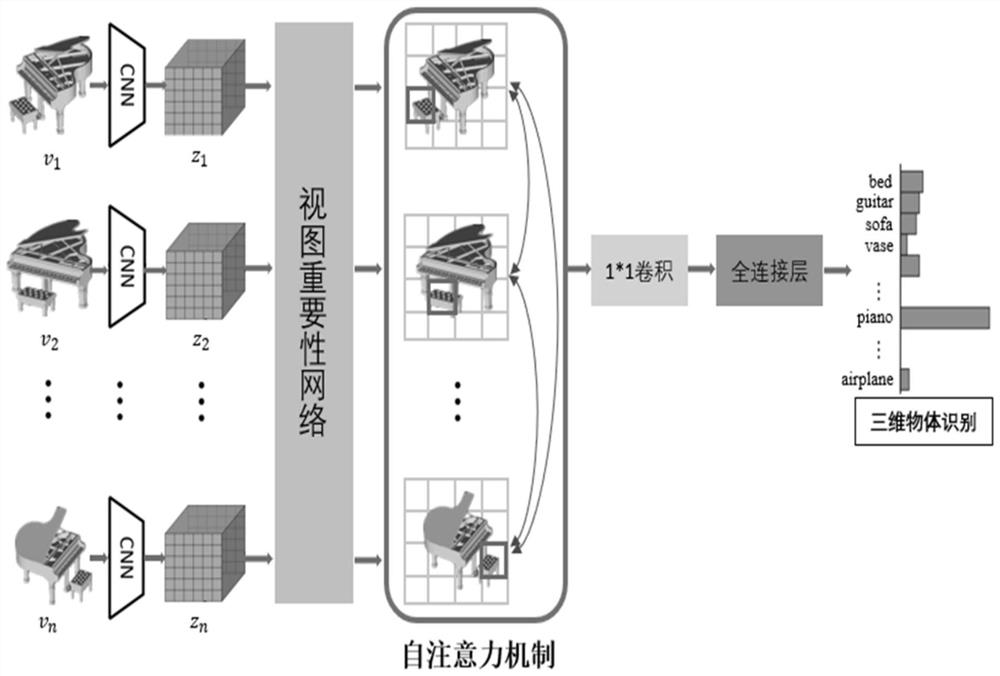

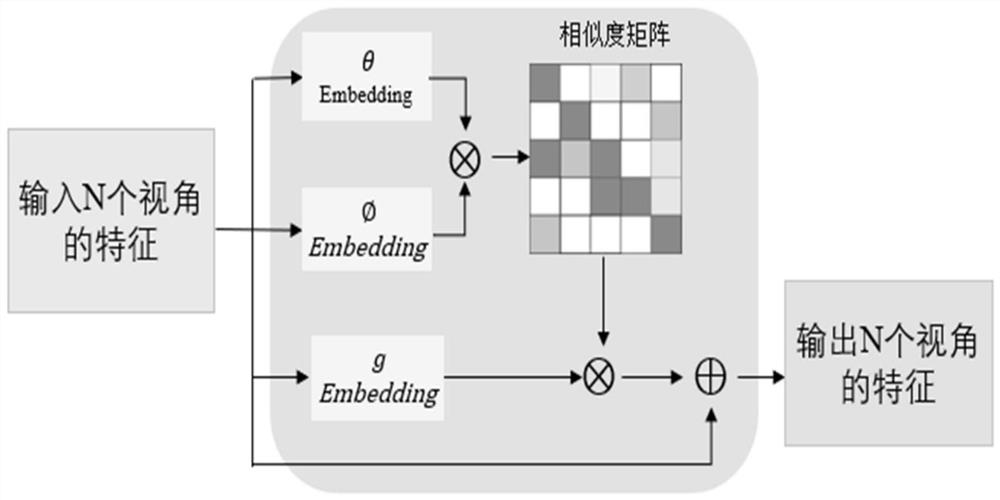

[0028] The composition and flow of the network framework of the present invention are shown in Figure 1 and comprise the following steps:

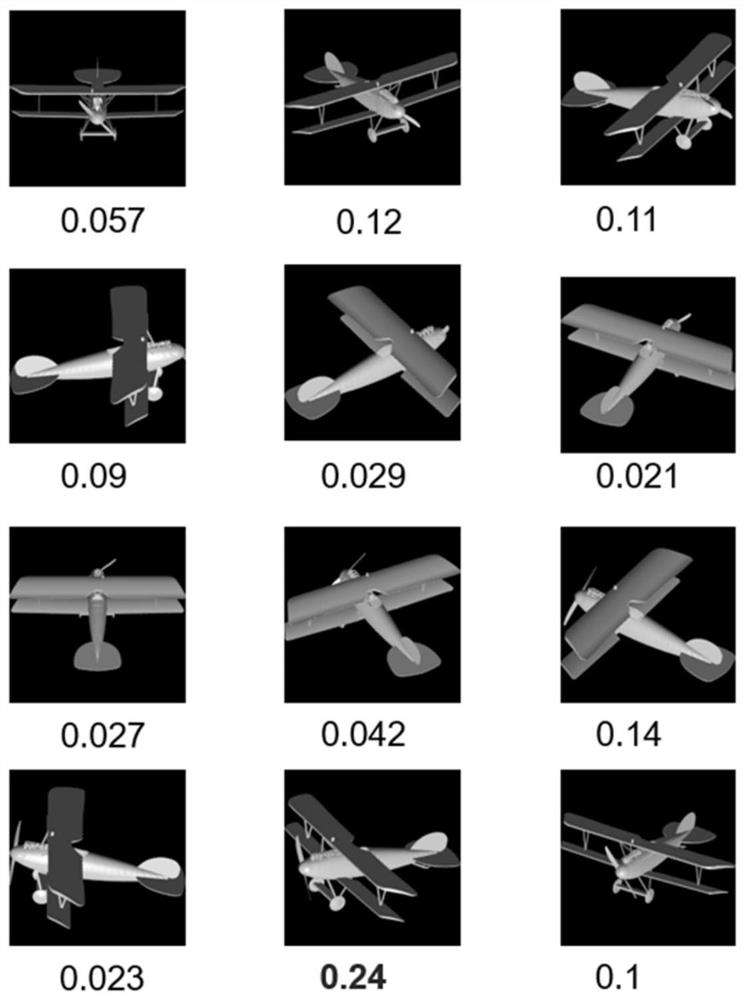

[0029] Step 1: Project the three-dimensional object model from $n$ viewpoints to obtain $n$ rendered views of the object, $V=\{v_1, v_2, \ldots, v_n\}$, where $v_i$ is the $i$-th view of the object. In this experiment $n$ is set to 12; that is, 12 viewpoints are used for 3D object recognition.
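The text above does not state how the $n$ viewpoints are arranged. A common convention in multi-view recognition is the 12-view setup popularized by MVCNN, which places cameras every 30° around the object's vertical axis at a 30° elevation. The minimal sketch below assumes that arrangement. The object's centroid is taken to be at the origin, and the camera distance of 2 units is an illustrative choice. The function name `camera_viewpoints` is hypothetical and is not taken from the patent.

```python
import math
from typing import List, Tuple


def camera_viewpoints(n: int = 12, elevation_deg: float = 30.0,
                      radius: float = 2.0) -> List[Tuple[float, float, float]]:
    """Return n camera positions evenly spaced in azimuth around the object.

    Hypothetical helper. Assumes (not stated in the source) that the object
    is centred at the origin and that all cameras share one fixed elevation
    and distance, looking toward the origin.
    """
    elev = math.radians(elevation_deg)
    positions = []
    for i in range(n):
        # 360/n degrees between neighbouring views (30 degrees when n = 12)
        azim = 2.0 * math.pi * i / n
        x = radius * math.cos(elev) * math.cos(azim)
        y = radius * math.cos(elev) * math.sin(azim)
        z = radius * math.sin(elev)  # height above the ground plane
        positions.append((x, y, z))
    return positions


if __name__ == "__main__":
    for i, pos in enumerate(camera_viewpoints(12), start=1):
        print(f"v_{i}: camera at ({pos[0]:.3f}, {pos[1]:.3f}, {pos[2]:.3f})")
```

A renderer of choice would then receive each camera position together with the 3D model to produce the corresponding view $v_i$. The patent does not specify which renderer is used.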

[0030]...
