Video generation model training method, video generation method and device

The technology concerns video generation models and video generation in the field of artificial intelligence. It addresses problems such as poor-quality fitting videos and temporal and spatial discontinuity and inconsistency in fitting videos, and achieves stable, jitter-free images with improved image quality.


Embodiment Construction

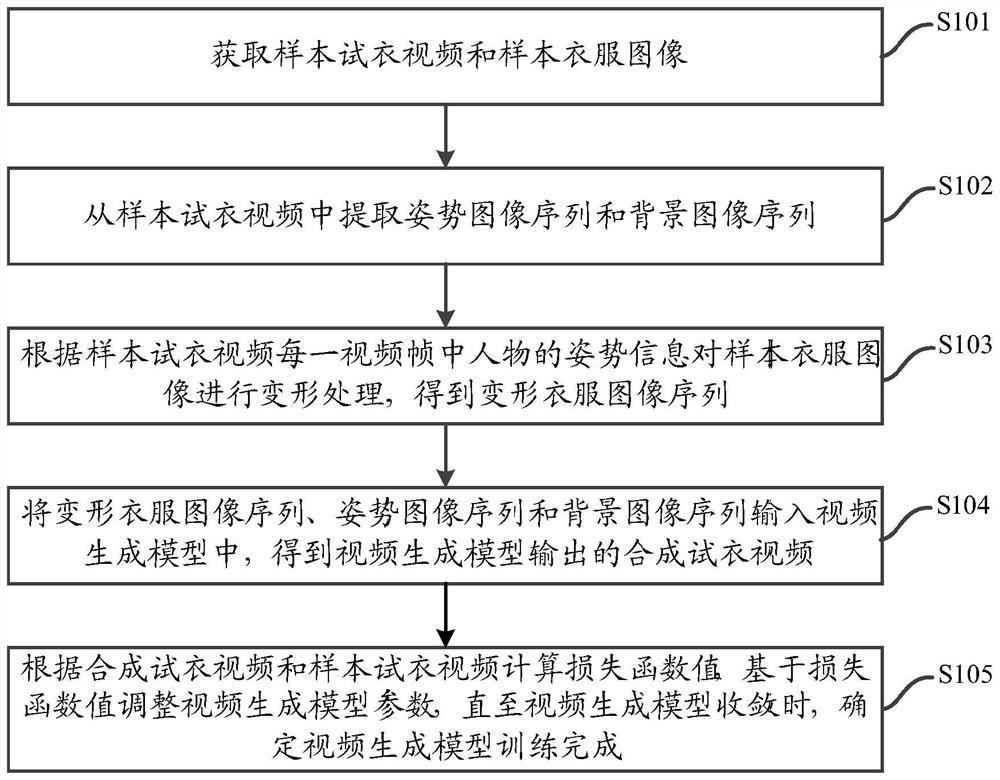

[0072] The technical solutions in the embodiments of the present application will be described below with reference to the accompanying drawings in the embodiments of the present application.

[0073] As shown in Figure 1, an embodiment of the present application provides a video generation model training method. The method can be applied to an electronic device, such as a smartphone, a tablet computer, a desktop computer, or a server, and includes:

[0074] S101. Obtain a sample fitting video and a sample clothes image.

[0075] The sample fitting video is a video of a person wearing the sample clothes.

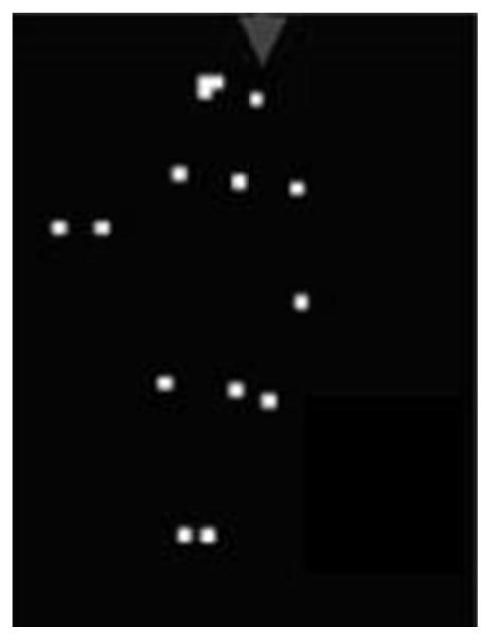

[0076] S102. Extract a pose image sequence and a background image sequence from the sample fitting video.

[0077] The pose image sequence includes the pose information of the person in each video frame of the sample fitting video, and the background image sequence includes the imag...
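The frame-by-frame structure of step S102 can be sketched as follows. This is a minimal illustration under stated assumptions, not the method of the application: `extract_sequences`, `pose_fn`, and `background_fn` are hypothetical names, and because the text above does not specify how pose or background information is computed, both extractors are passed in as placeholder callables.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")
Pose = TypeVar("Pose")
Background = TypeVar("Background")


def extract_sequences(
    frames: Sequence[Frame],
    pose_fn: Callable[[Frame], Pose],
    background_fn: Callable[[Frame], Background],
) -> Tuple[List[Pose], List[Background]]:
    """Build a pose sequence and a background sequence, one entry per frame.

    pose_fn and background_fn are hypothetical stand-ins for whatever pose
    estimator and background extractor an implementation would use.
    """
    pose_sequence = [pose_fn(frame) for frame in frames]
    background_sequence = [background_fn(frame) for frame in frames]
    return pose_sequence, background_sequence


# Toy usage: frames are plain strings and the extractors are dummies,
# chosen only to show that both sequences stay aligned with the frames.
toy_frames = ["frame0", "frame1", "frame2"]
poses, backgrounds = extract_sequences(
    toy_frames,
    pose_fn=lambda f: f + ":pose",
    background_fn=lambda f: f + ":background",
)
```

Keeping both sequences index-aligned with the video frames means the entry at position `i` in each sequence refers to the same frame `i` of the sample fitting video.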
