High-speed memory pre-read method and device

A memory read-ahead technology, applied in the storage field, that addresses the inflexibility of fixed read-ahead and its limited ability to read data in advance of host read requests, thereby improving system read performance.

Embodiment Construction

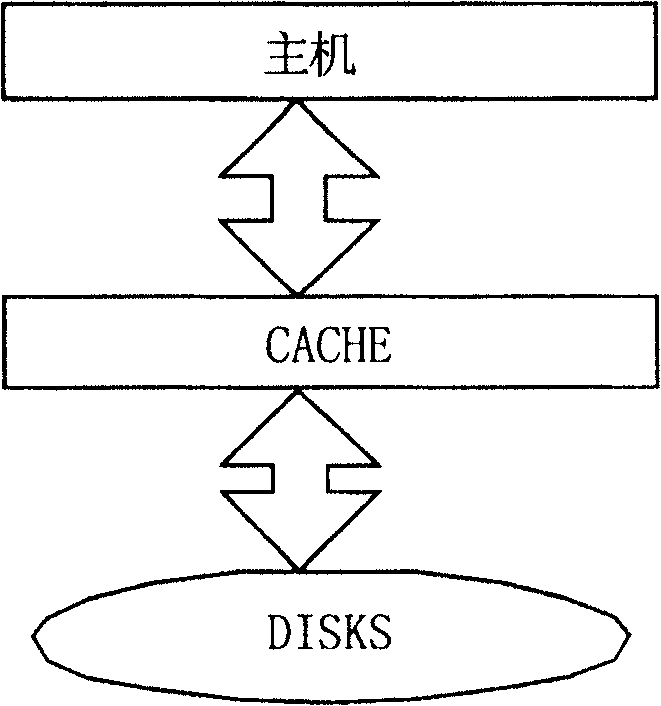

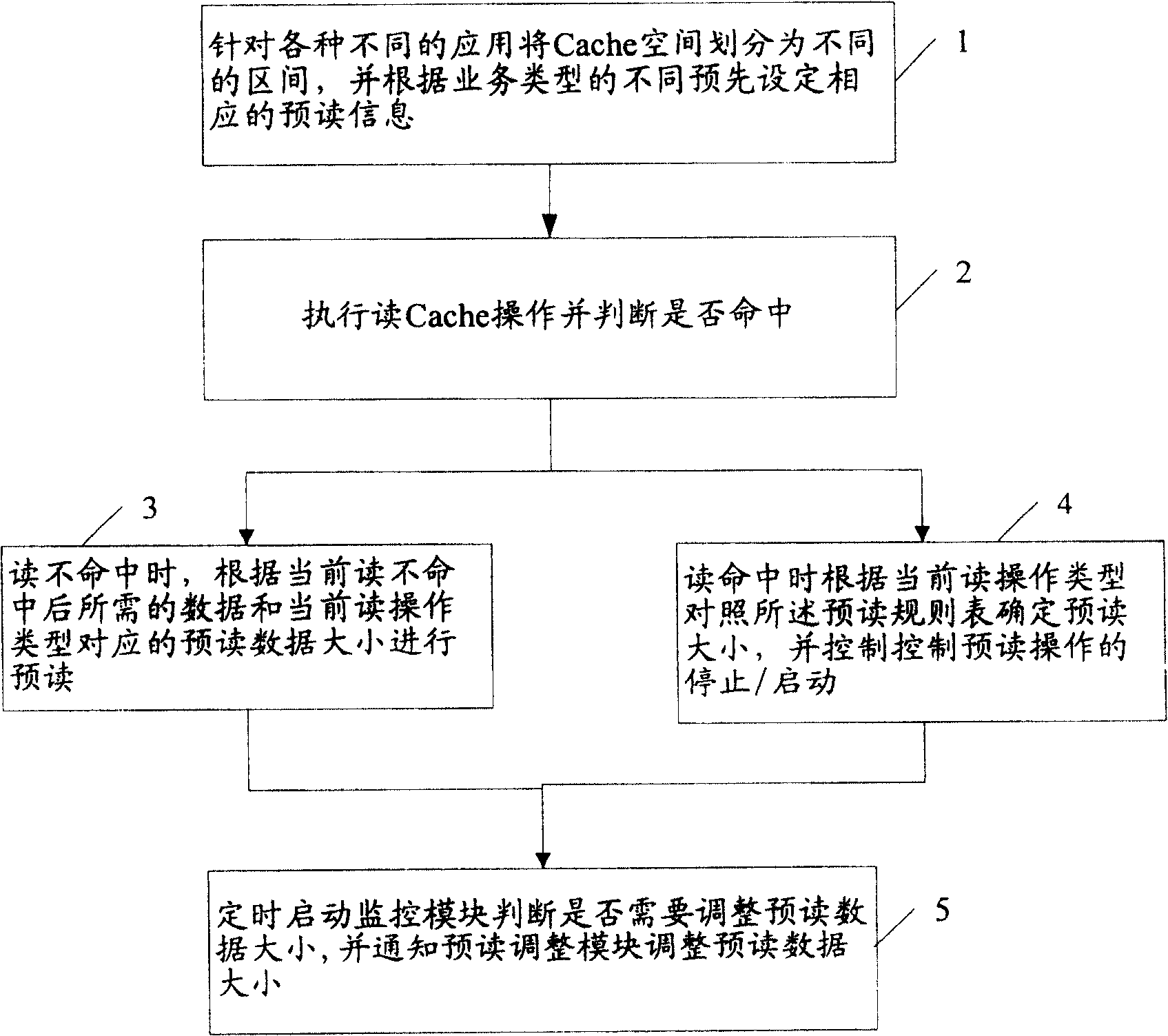

[0042] The core idea of the present invention is to provide a high-speed memory pre-reading method and device. Pre-reading information is preset for each data access mode, the pre-reading operation is performed according to that preset information, and the read-ahead data size is adjusted dynamically, improving the cache read hit rate and the efficiency of cache read operations.
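The idea of preset per-mode pre-reading information with a dynamically adjusted size can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the mode names, the `ReadAheadInfo` fields, the preset values, and the grow-on-hit, shrink-on-miss policy are hypothetical and are not taken from the patent text.

```python
from dataclasses import dataclass


@dataclass
class ReadAheadInfo:
    """Preset read-ahead parameters for one access mode (all values hypothetical)."""
    size: int       # current read-ahead size, in blocks
    min_size: int   # lower bound for the dynamic adjustment
    max_size: int   # upper bound for the dynamic adjustment


# Hypothetical preset table: one entry per data access mode.
PRESETS = {
    "sequential": ReadAheadInfo(size=4, min_size=1, max_size=32),
    "random": ReadAheadInfo(size=0, min_size=0, max_size=4),
}


def adjust(info: ReadAheadInfo, hit: bool) -> int:
    """Assumed policy: double the size on a cache hit, halve it on a miss."""
    if hit:
        info.size = min(info.max_size, max(1, info.size * 2))
    else:
        info.size = max(info.min_size, info.size // 2)
    return info.size


info = PRESETS["sequential"]
# Feed a short, made-up sequence of hit/miss outcomes through the policy.
sizes = [adjust(info, hit) for hit in (True, True, False, True, True, True)]
```

The bounds keep the read-ahead size from growing without limit on a long run of hits or collapsing below the preset minimum on a run of misses; a real device could use any other adjustment rule.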

[0043] A cache read-ahead operation works as follows: when the cache is read, the data that the next read command is expected to need is pre-read into the cache based on the current read request, which improves the read performance and read efficiency of the cache.
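The read-ahead operation described above can be sketched as a toy block cache in Python. This is a simplified illustration under stated assumptions, not the patented implementation: the `ReadAheadCache` class, the list-backed store, and the fixed `readahead_blocks` count are all hypothetical.

```python
class ReadAheadCache:
    """Toy block cache that pre-reads the blocks following each read."""

    def __init__(self, backing, readahead_blocks=2):
        self.backing = backing                  # hypothetical backing store: a list of blocks
        self.readahead_blocks = readahead_blocks
        self.cache = {}                         # block number -> data
        self.hits = 0
        self.misses = 0

    def _load(self, block):
        # Copy a block into the cache if it exists and is not cached yet.
        if 0 <= block < len(self.backing) and block not in self.cache:
            self.cache[block] = self.backing[block]

    def read(self, block):
        if block in self.cache:
            self.hits += 1
        else:
            self.misses += 1
            self._load(block)
        # Pre-read the blocks that the next read command is expected to need.
        for nxt in range(block + 1, block + 1 + self.readahead_blocks):
            self._load(nxt)
        return self.cache[block]


disk = [f"block-{i}" for i in range(8)]
cache = ReadAheadCache(disk, readahead_blocks=2)
data = [cache.read(i) for i in range(4)]
```

With a sequential access pattern, only the first read misses; each later read finds its block already pre-read into the cache.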

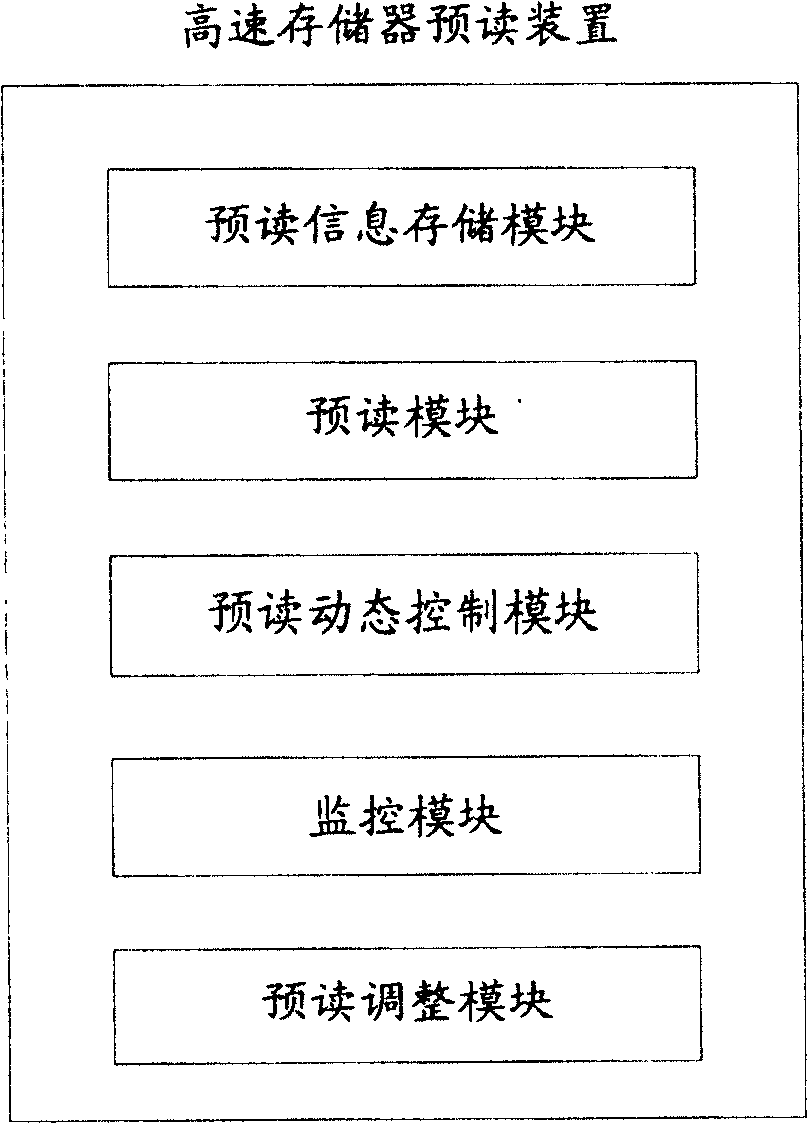

[0044] The present invention provides a high-speed memory pre-reading device. A module schematic diagram of an embodiment of the device is shown in Figure 2; the device includes a pre-reading information storage module and a pre-reading module.

[0045] The pre-reading information ...
