Depth map processing method and device

A depth map processing method and device, in the field of depth map processing, that address the problems of inaccurate edges, unstable depth values, and depth maps that fail to realistically reflect the distances between objects in a scene, and that achieve precise object outlines.



Examples

Embodiment 1

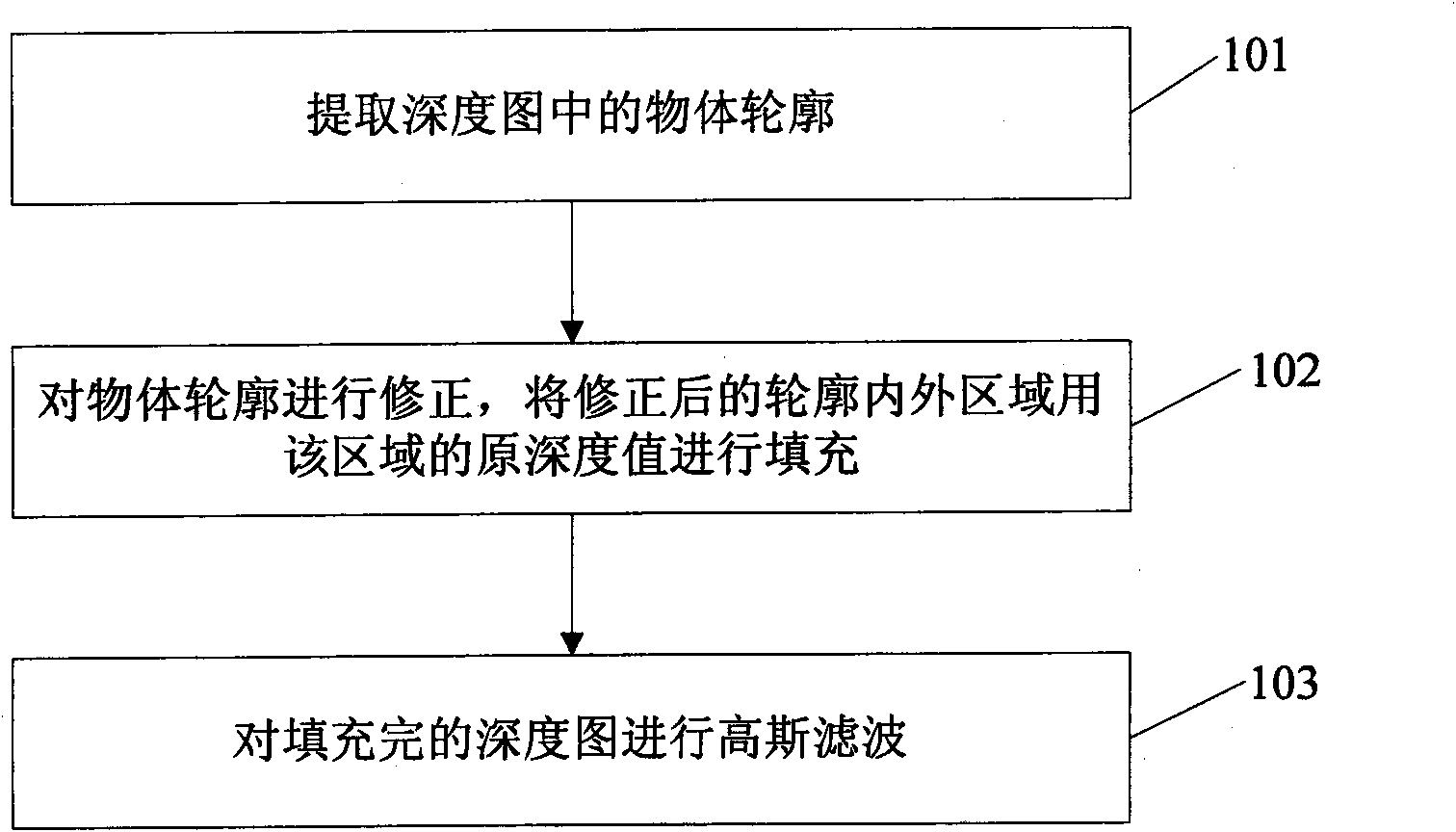

[0053] Referring to Figure 1, Embodiment 1 of the present invention provides a method for processing a depth map, the method comprising:

[0054] 101: Extract the object outline in the original depth map;

[0055] Specifically, the extracted object contour can be expressed as a sequence of contour points. That is, discrete point sampling is performed on the extracted object contour to obtain N contour points $v_i$, $i = 1, 2, \ldots, N$. The intervals between these contour points are not uniform. For example, for a contour segment running in a horizontal, vertical, or diagonal direction, only the contour points at its two ends are retained.
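The sampling rule in [0055] can be illustrated with a minimal sketch. It assumes the extracted contour is already available as an ordered, closed list of integer pixel coordinates; the text does not say how the contour is traced, so that step is omitted. The names `sample_contour` and `_direction` are hypothetical and do not come from the patent, and the sketch implements only the example rule stated above (keeping the end points of horizontal, vertical, and diagonal runs), not necessarily the complete sampling procedure.

```python
from typing import List, Optional, Tuple

Point = Tuple[int, int]


def _sign(x: int) -> int:
    """Return -1, 0 or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def _direction(a: Point, b: Point) -> Optional[Tuple[int, int]]:
    """Unit step from a to b if the move is horizontal, vertical or
    45-degree diagonal; None for any other move (or a repeated point)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    if (dx, dy) == (0, 0):
        return None
    if dx == 0 or dy == 0 or abs(dx) == abs(dy):
        return (_sign(dx), _sign(dy))
    return None


def sample_contour(points: List[Point]) -> List[Point]:
    """Hypothetical helper: drop the interior points of straight
    horizontal, vertical and diagonal runs on a CLOSED contour,
    keeping only the points where the direction changes."""
    n = len(points)
    if n < 3:
        return list(points)
    kept = []
    for i in range(n):
        prev_pt = points[i - 1]          # wraps around for i == 0
        cur = points[i]
        next_pt = points[(i + 1) % n]    # wraps around at the end
        d_in = _direction(prev_pt, cur)
        d_out = _direction(cur, next_pt)
        # Keep the point unless it lies strictly inside a straight run.
        if d_in is None or d_in != d_out:
            kept.append(cur)
    return kept


# Assumed toy input: a 4-by-3 pixel rectangle traced point by point.
rectangle = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1),
             (3, 2), (2, 2), (1, 2), (0, 2), (0, 1)]
contour_points = sample_contour(rectangle)  # only the four corners remain
```

In this toy case the retained points are spaced 3 pixels apart along the horizontal sides and 2 pixels apart along the vertical sides, which matches the remark that the intervals between contour points are not uniform.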

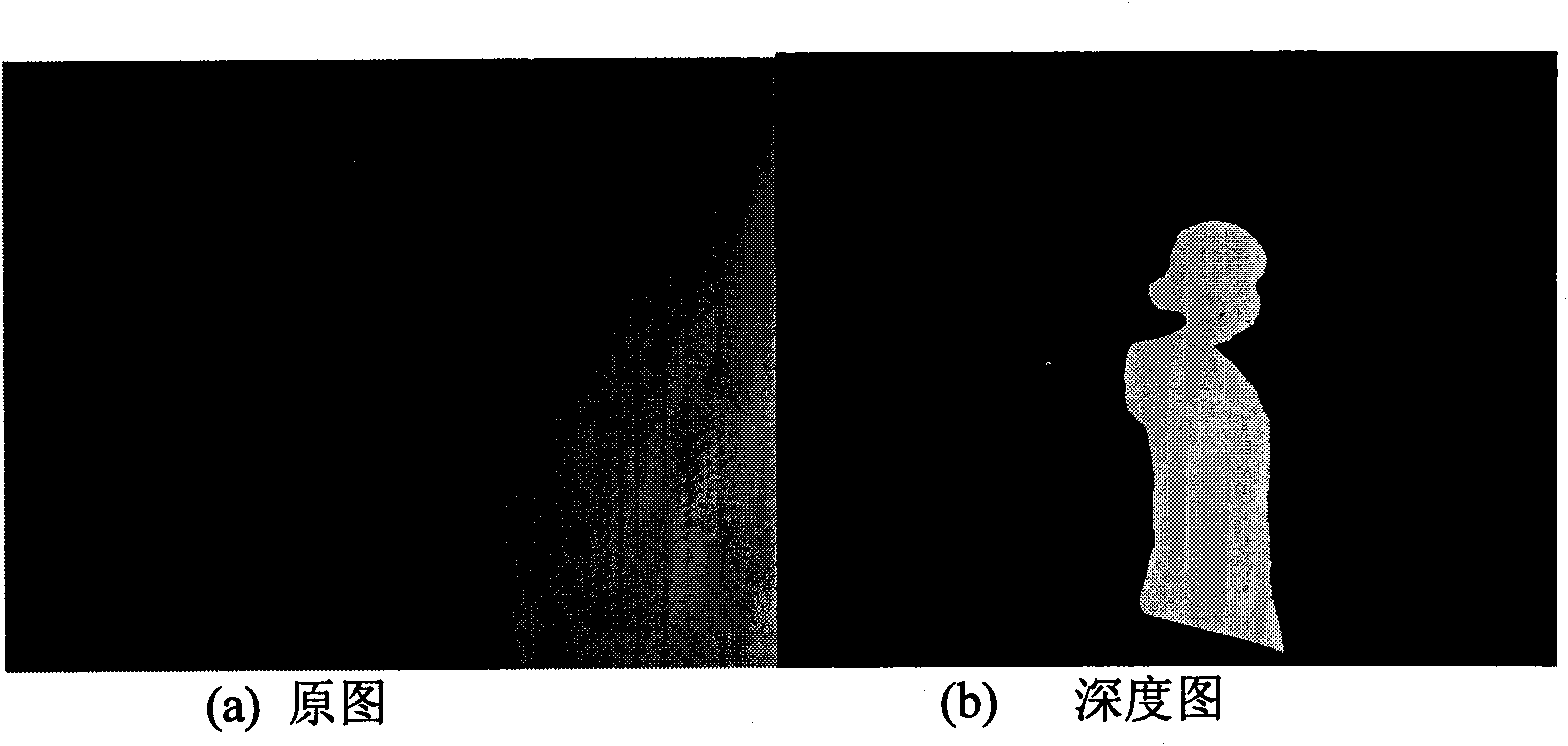

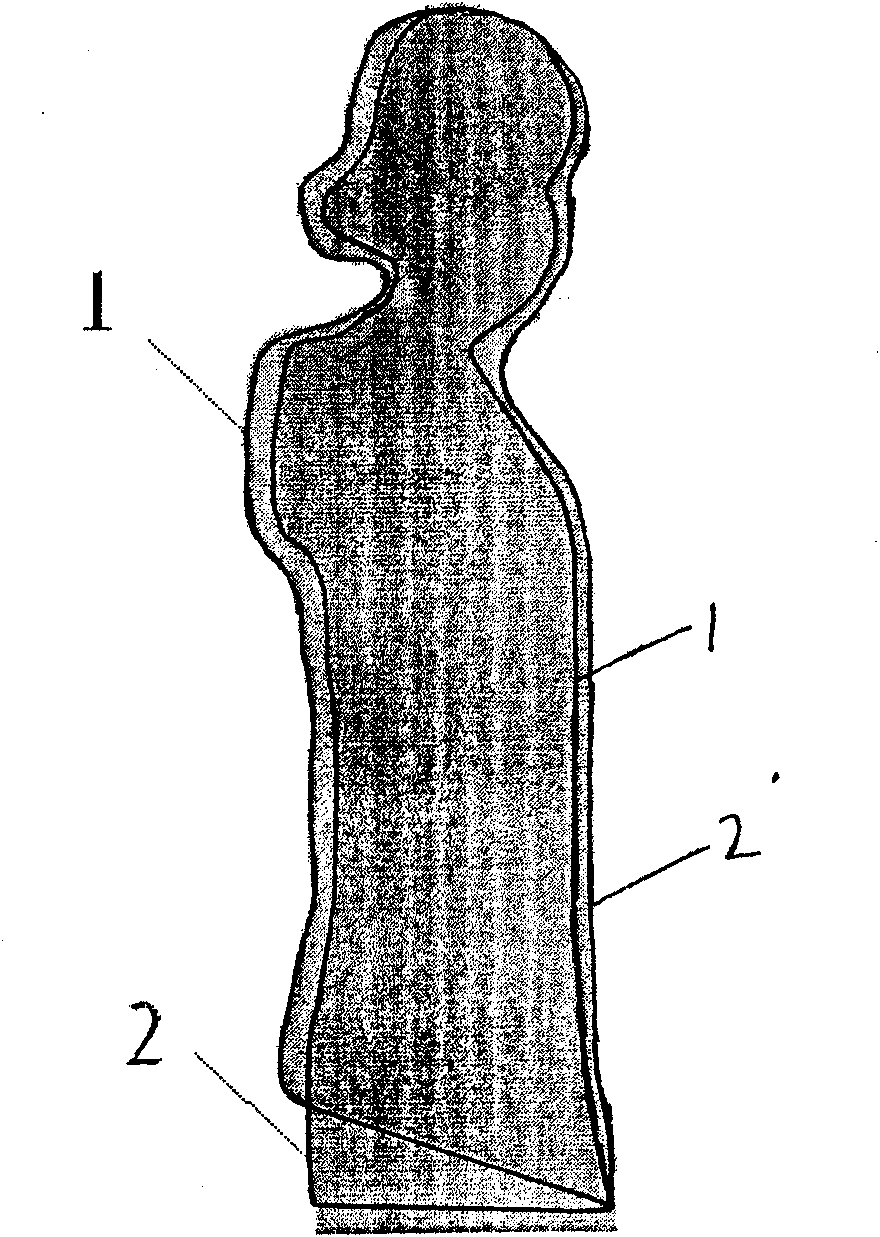

[0056] Referring to Figure 2, Figure 2(a) is the original image of one frame in a planar video sequence, and Figure 2(b) is the original depth map corresponding to the original image in Figure 2(a). Contour 1 in Figure 3 is the contour of the person extracted directly from the original depth map. Obviously, the directly extracted contour 1 is the...

Embodiment 2

[0120] Referring to Figure 7, Embodiment 2 of the present invention provides a depth map processing device, the device comprising: an extraction module 201, a correction module 202, a filling module 203, and a first filtering module 204;

[0121] The extraction module 201 is used to extract the object outline in the original depth map;

[0122] Specifically, the extraction module 201 may include: an extraction unit and a sampling unit;

[0123] The extraction unit is used to extract the object outline in the original depth map;

[0124] The sampling unit is used to perform discrete point sampling on the object contour extracted by the extraction unit to obtain N contour points $v_i$, $i = 1, 2, \ldots, N$. The intervals between these contour points are not uniform. For a contour segment running in a horizontal, vertical, or diagonal direction, only the contour points at its two ends are retained.

[0125] Referring to Figure 2, Figure 2(a) is the original image of one frame in the planar video sequence, ...
