Mobile terminal user interface regulation system and method

A mobile terminal user interface technology, applied in fields such as instruments, computing, and electrical and digital data processing, that addresses problems such as poor user experience and achieves more convenient operation and an improved user experience.

Embodiment Construction

[0020] The mobile terminal user interface adjustment system and its adjustment method disclosed in the present application will be described in detail below with reference to the accompanying drawings. For the sake of brevity, in the descriptions of the embodiments of the present application, the same or similar devices use the same or similar reference numerals.

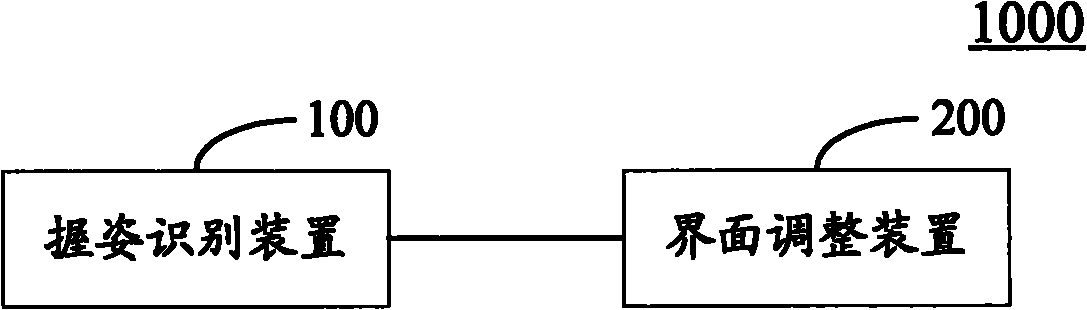

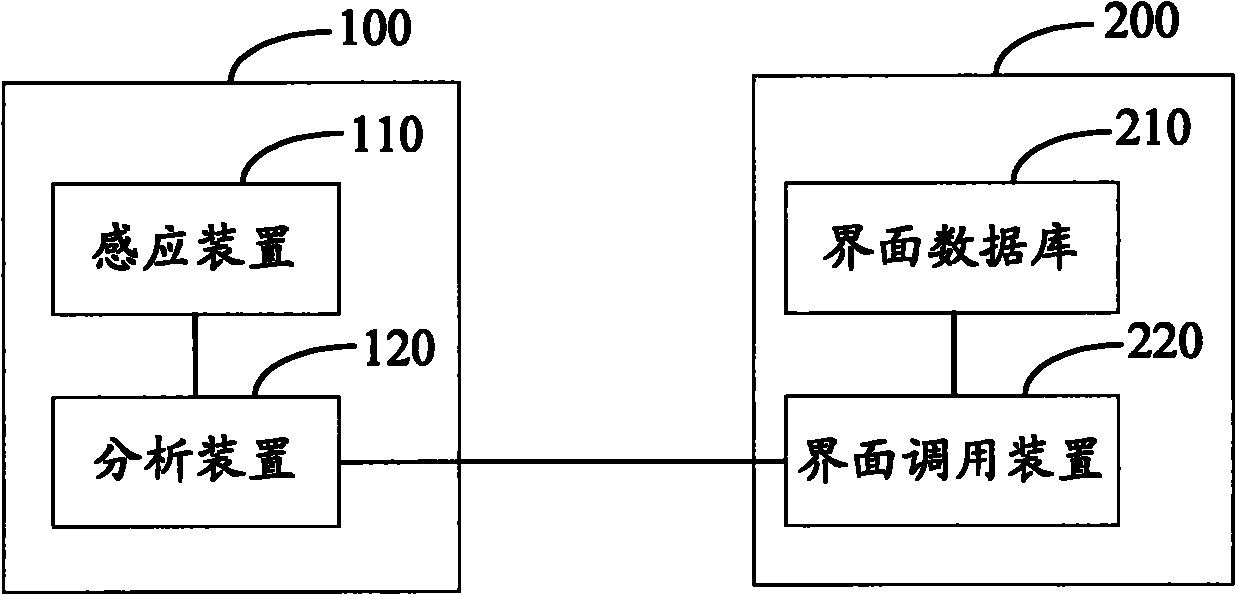

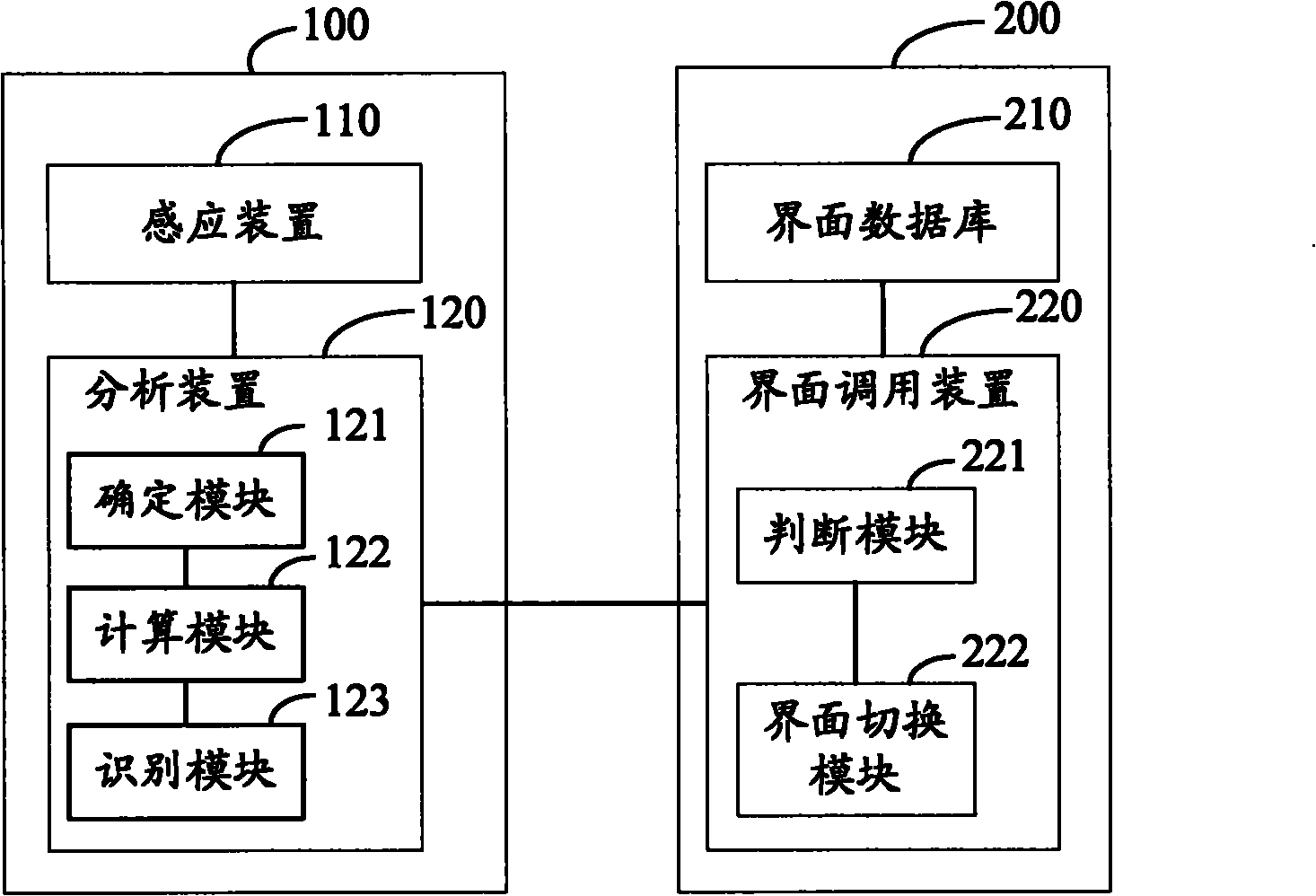

[0021] Figure 1 shows a schematic diagram of a mobile terminal user interface adjustment system 1000 according to an embodiment of the present application. As shown in Figure 1, the user interface adjustment system 1000 includes a grip recognition unit 100 and an interface adjustment unit 200.

[0022] The grip recognition unit 100 can recognize how the user is gripping the mobile terminal. While the user operates the mobile terminal, the interface adjustment unit 200 can adjust the currently displayed user interface according to the recognized grip. In this way, the user int...
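The division of labor between the grip recognition unit 100 and the interface adjustment unit 200 can be illustrated with a minimal sketch. Everything below is an assumption made for illustration only: the class names, the `Layout` type, and especially the edge-contact heuristic are hypothetical, since the text above does not state how the grip is detected or which interface properties are adjusted.

```python
from dataclasses import dataclass
from enum import Enum


class Grip(Enum):
    LEFT_HAND = "left"
    RIGHT_HAND = "right"
    UNKNOWN = "unknown"


@dataclass(frozen=True)
class Layout:
    # Hypothetical UI property: which side the main controls sit on.
    control_alignment: str  # "left", "right", or "center"


class GripRecognitionUnit:
    """Stands in for unit 100. The detection rule is an illustrative assumption."""

    def recognize(self, left_edge_contacts: int, right_edge_contacts: int) -> Grip:
        # Assumed heuristic: the fingers wrap around the edge opposite the
        # holding hand, so that edge reports more contact points.
        if left_edge_contacts > right_edge_contacts:
            return Grip.RIGHT_HAND
        if right_edge_contacts > left_edge_contacts:
            return Grip.LEFT_HAND
        return Grip.UNKNOWN


class InterfaceAdjustmentUnit:
    """Stands in for unit 200. Moves controls toward the holding hand."""

    def adjust(self, current: Layout, grip: Grip) -> Layout:
        if grip is Grip.LEFT_HAND:
            return Layout(control_alignment="left")
        if grip is Grip.RIGHT_HAND:
            return Layout(control_alignment="right")
        # Grip not recognized: leave the displayed interface unchanged.
        return current


class UserInterfaceAdjustmentSystem:
    """Stands in for system 1000: wires unit 100 to unit 200."""

    def __init__(self) -> None:
        self.grip_unit = GripRecognitionUnit()
        self.adjustment_unit = InterfaceAdjustmentUnit()

    def update(self, current: Layout, left_edge_contacts: int,
               right_edge_contacts: int) -> Layout:
        grip = self.grip_unit.recognize(left_edge_contacts, right_edge_contacts)
        return self.adjustment_unit.adjust(current, grip)
```

Keeping recognition and adjustment in separate classes mirrors the two-unit structure described above, so a different detection method could be swapped in without touching the adjustment logic.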
