Method for selecting reference field and acquiring time-domain motion vector

A motion vector and motion vector prediction technology, applied in television, electrical components, digital video signal modification and related fields, that addresses the problem of large motion vector prediction errors. Its effects include eliminating jumping, a significant overall improvement, and higher subjective evaluation scores.

Embodiment Construction

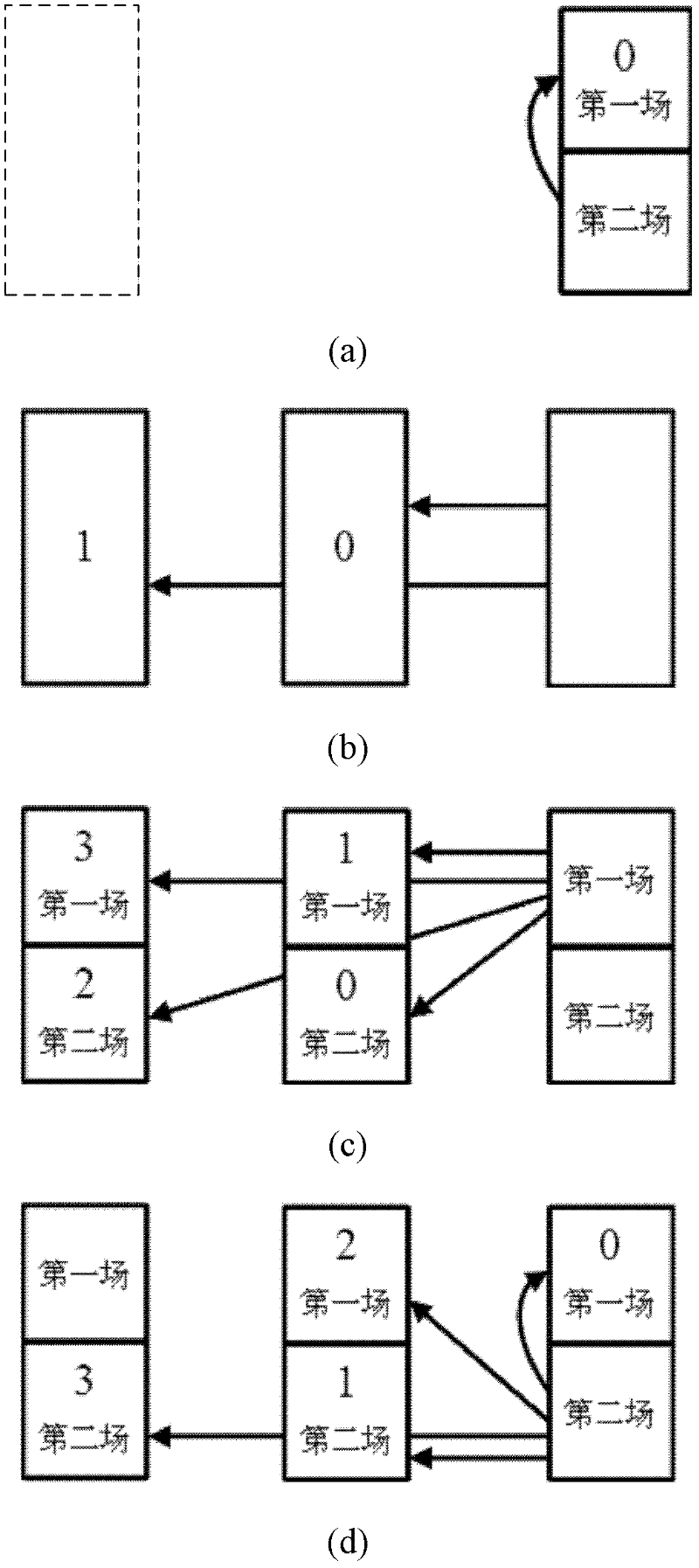

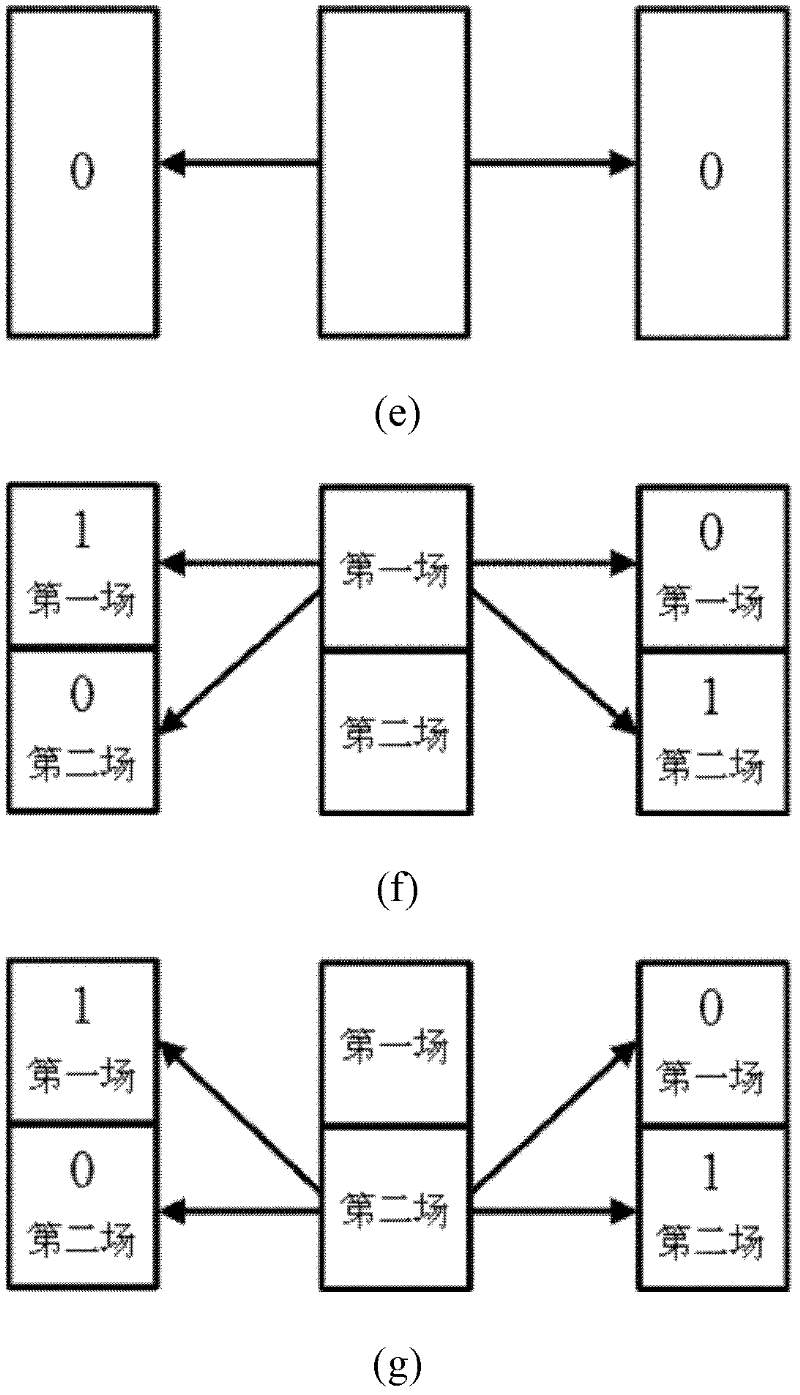

[0047] The purpose of the present invention is to provide a method that effectively avoids large motion vector prediction errors in the direct and skip modes when an AVS video encoder codes the bottom field of a B frame. With objective quality kept unchanged, the coding bit rate is effectively reduced and the subjective quality is noticeably improved.

[0048] To achieve this purpose, the present invention provides a method for acquiring time-domain (temporal) motion vectors.
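For orientation, temporal direct prediction in codecs of this family (AVS, H.264/AVC) generally derives a block's forward and backward motion vectors by scaling the motion vector of the co-located block in the backward reference picture by the ratio of temporal distances. The sketch below shows only that generic distance-proportional scaling under stated assumptions; it is not the patent's method and does not reproduce the exact AVS integer arithmetic or rounding. The names `MotionVector`, `scale_component` and `temporal_direct`, and the three distance parameters, are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class MotionVector:
    x: int  # horizontal component, in the codec's sub-pel units
    y: int  # vertical component, in the codec's sub-pel units


def scale_component(v: int, num: int, den: int) -> int:
    """Scale v by num/den, rounding half away from zero (sign-symmetric)."""
    if den <= 0:
        raise ValueError("reference distance must be positive")
    sign = -1 if v < 0 else 1
    # Integer round-half-up of |v| * num / den, then restore the sign.
    return sign * ((abs(v) * num * 2 + den) // (2 * den))


def temporal_direct(
    mv_col: MotionVector, dist_fw: int, dist_bw: int, dist_ref: int
) -> Tuple[MotionVector, MotionVector]:
    """Generic temporal direct derivation (hypothetical, not the AVS formula).

    mv_col:   MV of the co-located block in the backward reference picture.
    dist_fw:  temporal distance from the current picture to its forward reference.
    dist_bw:  temporal distance from the current picture to its backward reference.
    dist_ref: distance spanned by mv_col (backward reference to the picture it used).
    """
    fw = MotionVector(
        scale_component(mv_col.x, dist_fw, dist_ref),
        scale_component(mv_col.y, dist_fw, dist_ref),
    )
    # The backward MV points the opposite way in time, hence the negation.
    bw = MotionVector(
        -scale_component(mv_col.x, dist_bw, dist_ref),
        -scale_component(mv_col.y, dist_bw, dist_ref),
    )
    return fw, bw
```

With `mv_col = MotionVector(8, -4)`, `dist_fw = dist_bw = 1` and `dist_ref = 2`, this returns a forward vector of `(4, -2)` and a backward vector of `(-4, 2)`. Poor choices of co-located vector or distances in such a scheme are one way large prediction errors can arise, which is the problem [0047] targets for bottom-field B coding.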

[0049] The specific implementation steps are as follows:

[0050] If the current macroblock type is B_Skip or B_Direct_16*16, or the current subblock type is SB_Direct_8*8, perform the following operations for each 8*8 block:
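The trigger condition in [0050] can be expressed as a small predicate that selects which 8*8 blocks of a macroblock receive temporal motion vectors. This is a sketch under assumptions: the four 8*8 blocks are indexed in raster order (0 top-left to 3 bottom-right), positions are luma sample coordinates within 16x16 macroblocks, and for a macroblock that is neither B_Skip nor B_Direct_16*16 only the sub-blocks typed SB_Direct_8*8 are processed. The function name `direct_8x8_blocks` and its parameters are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

# Macroblock types for which every 8*8 block takes temporal MVs, per [0050].
DIRECT_MB_TYPES = ("B_Skip", "B_Direct_16*16")
# Sub-block type that selects temporal MVs for a single 8*8 block, per [0050].
DIRECT_SUB_TYPE = "SB_Direct_8*8"
MB_SIZE = 16  # luma macroblock size in samples
SUB_SIZE = 8  # luma sub-block size in samples


def direct_8x8_blocks(
    mb_x: int,
    mb_y: int,
    mb_type: str,
    sub_types: Optional[Sequence[str]] = None,
) -> List[Tuple[int, int]]:
    """Return upper-left luma positions of the 8*8 blocks needing temporal MVs.

    mb_x, mb_y: macroblock column and row in the picture.
    sub_types:  four sub-block type names in raster order (assumed layout);
                only consulted when mb_type is not a whole-macroblock direct type.
    """
    whole_mb_direct = mb_type in DIRECT_MB_TYPES
    positions = []
    for i in range(4):
        is_direct = whole_mb_direct or (
            sub_types is not None and sub_types[i] == DIRECT_SUB_TYPE
        )
        if is_direct:
            dx, dy = (i % 2) * SUB_SIZE, (i // 2) * SUB_SIZE
            positions.append((mb_x * MB_SIZE + dx, mb_y * MB_SIZE + dy))
    return positions
```

For example, `direct_8x8_blocks(1, 2, "B_Skip")` returns `[(16, 32), (24, 32), (16, 40), (24, 40)]`, the upper-left samples of all four 8*8 blocks, each of which then goes through the steps below.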

[0051] Step 1:

[0052] (1) If, in the backward reference picture, the macroblock containing the sample at the same position as the upper-left sample of the current 8*8 block was coded with type "I_8*8", then the forward and backward r...
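The test in step (1) can be sketched as follows: the upper-left luma sample of the current 8*8 block is mapped to the macroblock containing the same sample position in the backward reference picture, and that macroblock's coded type is compared with "I_8*8". The sketch assumes both pictures share the same sample grid and macroblock size, so no frame/field coordinate conversion is shown, and it stops at the test itself because the action taken in the "I_8*8" case is truncated in the text. The names `colocated_mb_type` and `colocated_is_i8x8`, and the row-major grid of type names, are hypothetical.

```python
from typing import Sequence

MB_SIZE = 16  # luma macroblock size in samples (assumed)


def colocated_mb_type(
    backward_mb_types: Sequence[Sequence[str]], x: int, y: int
) -> str:
    """Coded type of the backward-reference macroblock containing sample (x, y).

    backward_mb_types: hypothetical row-major grid indexed [mb_row][mb_col].
    (x, y): upper-left luma sample of the current 8*8 block.
    """
    return backward_mb_types[y // MB_SIZE][x // MB_SIZE]


def colocated_is_i8x8(
    backward_mb_types: Sequence[Sequence[str]], x: int, y: int
) -> bool:
    """True when the co-located macroblock was coded as "I_8*8"."""
    return colocated_mb_type(backward_mb_types, x, y) == "I_8*8"
```

Because the lookup uses only the block's upper-left sample, all four 8*8 blocks of a macroblock whose co-located region lies in a single backward-reference macroblock see the same coded type.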
