Kinect-based action training method

A Kinect-based action training method, applied in the field of virtual reality, that addresses problems that hinder automatic training systems and achieves reduced time complexity, a simple installation process, and simple equipment.


Embodiment Construction

[0033] In order to better understand the technical solution of the present invention, a further detailed description will be made below in conjunction with the accompanying drawings and implementation examples.

[0034] 1. The steps of the online training method are as follows:

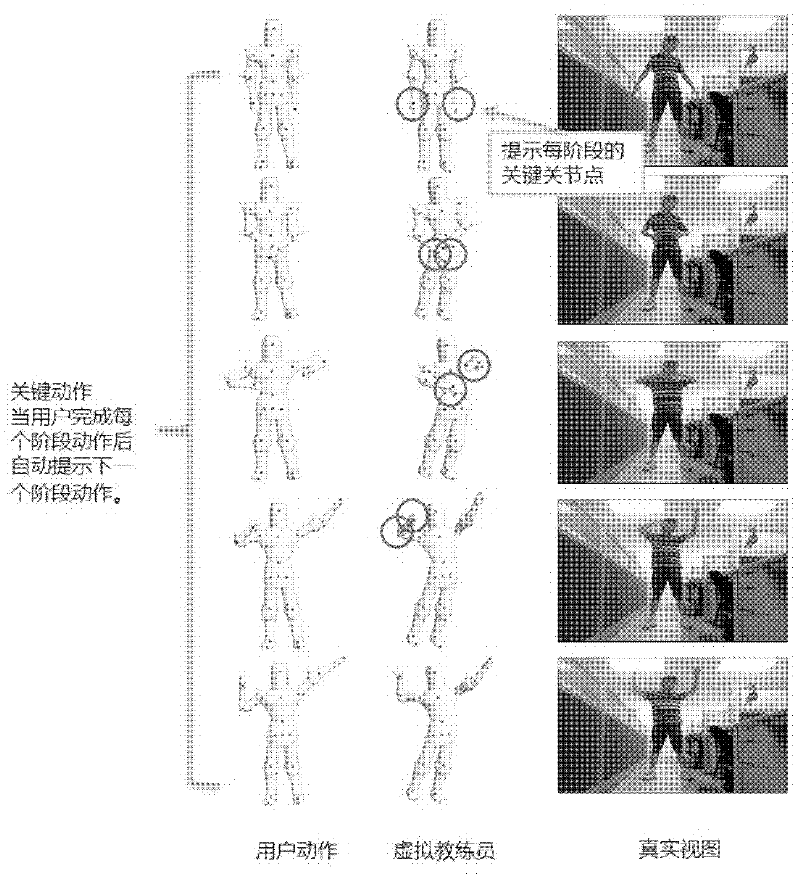

[0035] Online training first automatically divides the action into several key stages. When the user completes the action of one stage, the action of the next stage is automatically prompted. When the action of each stage is prompted, the key joint points of that stage are also prompted. Take a hand lifting and stretching action as an example: Figure 1 is a schematic diagram of online training, in which each row corresponds to a key action. The left column shows the result of driving the 3D model in real time with the motion data collected by Kinect, the middle column shows the result of driving the 3D model with standard motion data, and the right column shows the color image collected by Kinect. When the us...
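The stage-by-stage prompting described in [0035] can be illustrated with a minimal Python sketch. It is not the patented implementation: the `Stage` and `StagedTrainer` names, the joint names, and the completion test (every key joint within a Euclidean distance `tolerance` of its standard position) are all assumptions introduced for illustration, since the visible text does not state how stage completion is decided. Joint positions are plain 3D tuples and no Kinect SDK calls are made.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Stage:
    """One key stage of an action (hypothetical structure)."""
    name: str
    # Key joint name -> target position taken from the standard motion data.
    key_joints: Dict[str, Vec3]


def stage_completed(stage: Stage, user_pose: Dict[str, Vec3], tolerance: float) -> bool:
    """Assumed criterion: every key joint lies within `tolerance` of its target."""
    for joint, target in stage.key_joints.items():
        position = user_pose.get(joint)
        if position is None or math.dist(position, target) > tolerance:
            return False
    return True


class StagedTrainer:
    """Prompts one stage at a time and advances when the stage is completed."""

    def __init__(self, stages: List[Stage], tolerance: float = 0.1) -> None:
        self.stages = stages
        self.tolerance = tolerance  # assumed threshold, in the same units as the poses
        self.index = 0

    @property
    def finished(self) -> bool:
        return self.index >= len(self.stages)

    def prompt(self) -> Optional[Tuple[str, List[str]]]:
        """Return the current stage name and its key joints, or None when done."""
        if self.finished:
            return None
        stage = self.stages[self.index]
        return stage.name, sorted(stage.key_joints)

    def update(self, user_pose: Dict[str, Vec3]) -> Optional[Tuple[str, List[str]]]:
        """Feed one captured frame; move to the next stage if the current one is met."""
        if not self.finished and stage_completed(
            self.stages[self.index], user_pose, self.tolerance
        ):
            self.index += 1
        return self.prompt()
```

In use, each captured frame would be passed to `update`, and the returned stage name and key joint list would drive the on-screen prompt. A per-joint distance threshold is only one possible completion test; angle-based or time-window criteria would fit the same structure.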
