Deep convex network with joint use of nonlinear random projection, restricted Boltzmann machine and batch-based parallelizable optimization

A nonlinear network technology, applied in biological neural network models, computer components, speech analysis, etc., that addresses problems such as long learning times and difficult parallelization.


Detailed Description of Embodiments

[0020] Various technologies pertaining to the deep convex network (DCN) will now be described with reference to the drawings, in which like reference numerals refer to like elements throughout. In addition, several functional block diagrams of example systems are shown and described herein for purposes of explanation; however, it is to be understood that functionality described as being carried out by a particular system component may be performed by multiple components. Similarly, a single component may be configured to perform functionality described as being carried out by multiple components, and some steps of the methods described herein may be omitted, reordered, or combined.

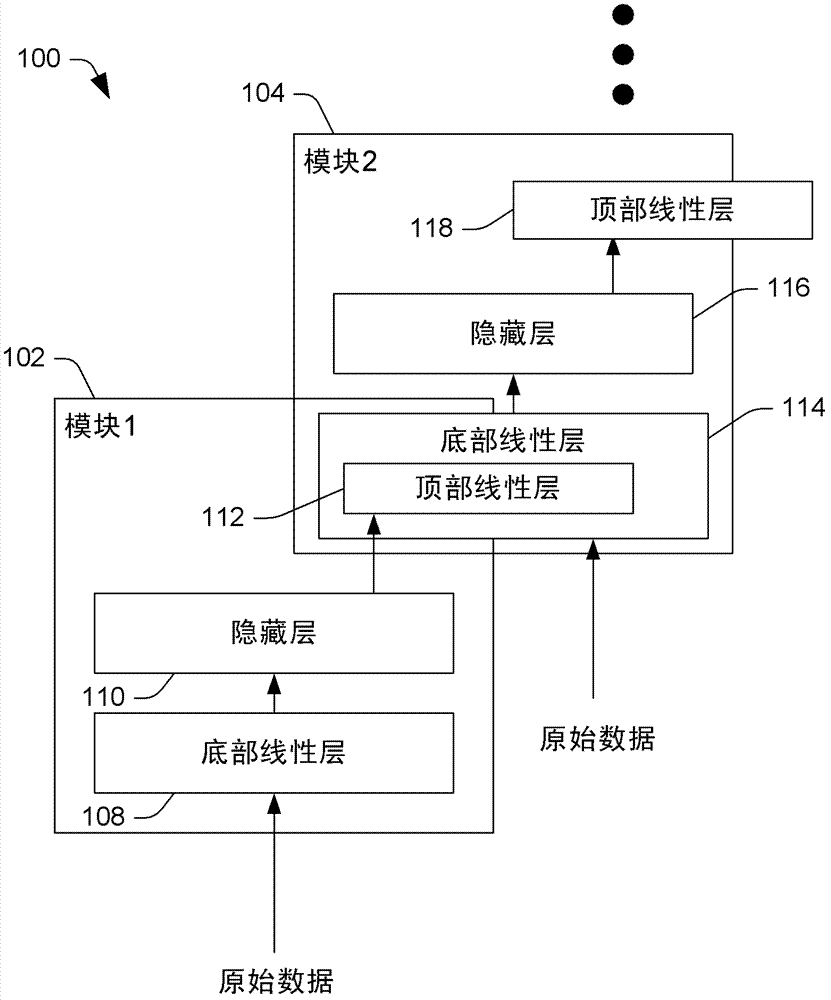

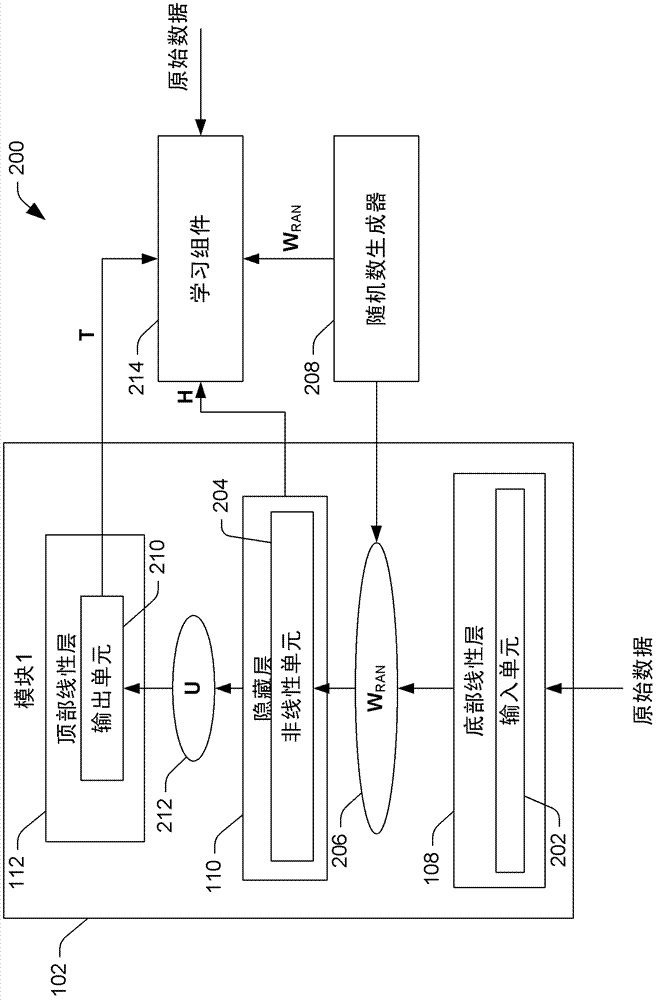

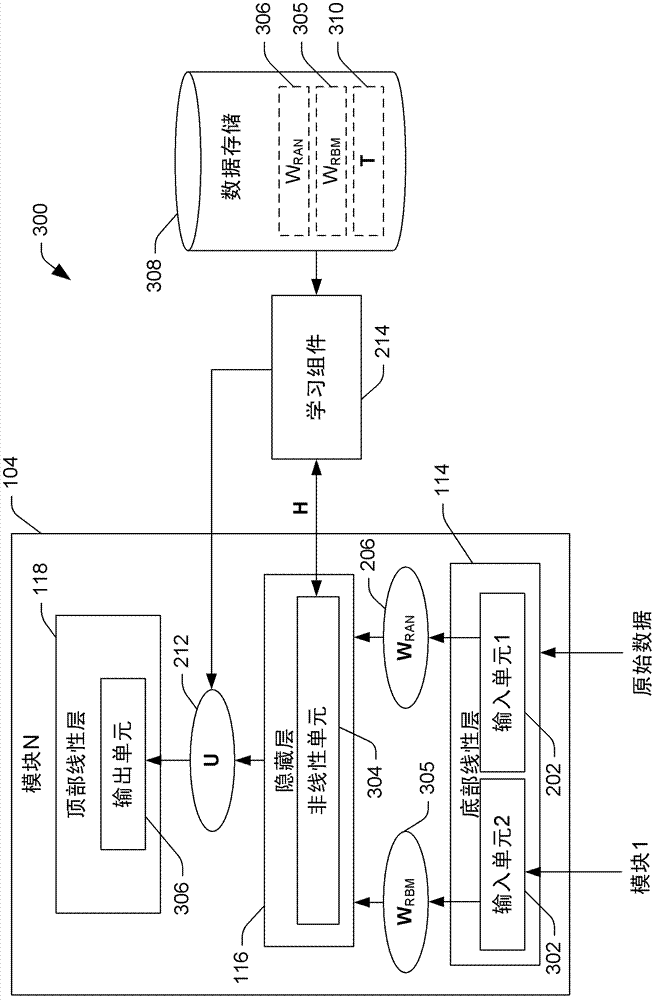

[0021] Referring to Figure 1, an exemplary DCN 100 is shown, where the DCN (after training) can be used in conjunction with performing automatic classification/recognition. According to an example, the DCN 100 may be used to perform automatic speech recognition (ASR). In another example, DCN 100 may be us...
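The paragraph above states only that the trained DCN 100 performs classification/recognition such as ASR; the rest of the description is truncated here. As a rough illustration of what a DCN-style stack computes, the following is a minimal NumPy sketch under stated assumptions, not the patent's method. It assumes sigmoid hidden units, lower-layer weights fixed as a nonlinear random projection (one of the options named in the title; the RBM-based alternative and the batch-based optimization of the lower weights are not shown), upper-layer weights obtained in closed form by ridge-regularized least squares (a convex problem once the lower weights are fixed), and modules stacked by concatenating the raw input with the outputs of all lower modules. The function names, the `ridge` term, the weight scaling and the hidden-layer size are hypothetical choices for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def with_bias(X):
    # Append a row of ones so each hidden unit gets a bias term.
    return np.vstack([X, np.ones((1, X.shape[1]))])


def train_module(X, T, n_hidden, rng, ridge=1e-3):
    """Train one DCN-style module (hypothetical helper).

    X: (d, N) module input, one column per sample.
    T: (c, N) targets, e.g. one-hot class labels.
    """
    Xb = with_bias(X)
    # Lower layer: fixed nonlinear random projection (assumption; the RBM
    # option and batch-based tuning of W are not shown here).
    W = rng.standard_normal((Xb.shape[0], n_hidden)) / np.sqrt(Xb.shape[0])
    H = sigmoid(W.T @ Xb)  # (n_hidden, N) hidden activations
    # Upper layer: with W fixed, minimizing ||U^T H - T||^2 + ridge*||U||^2
    # is convex, with closed form U = (H H^T + ridge*I)^{-1} H T^T.
    A = H @ H.T + ridge * np.eye(n_hidden)
    U = np.linalg.solve(A, H @ T.T)  # (n_hidden, c)
    return W, U


def module_output(W, U, X):
    # Linear output layer on top of the sigmoid hidden units: (c, N).
    return U.T @ sigmoid(W.T @ with_bias(X))


def train_dcn(X, T, n_modules=3, n_hidden=50, seed=0):
    rng = np.random.default_rng(seed)
    modules, inp = [], X
    for _ in range(n_modules):
        W, U = train_module(inp, T, n_hidden, rng)
        modules.append((W, U))
        # Stacking: the next module sees the raw input plus the outputs
        # of every module below it.
        inp = np.vstack([inp, module_output(W, U, inp)])
    return modules


def predict_dcn(modules, X):
    inp, Y = X, None
    for W, U in modules:
        Y = module_output(W, U, inp)
        inp = np.vstack([inp, Y])
    return Y  # output of the top module
```

For a toy problem, one would call `train_dcn(X, T)` with `X` of shape `(d, N)` and one-hot `T` of shape `(c, N)`, then take `predict_dcn(modules, X).argmax(axis=0)` as the predicted labels. Because `H @ H.T` and `H @ T.T` are sums of per-sample outer products, they can be accumulated over separate data batches and added together, which is one reason this kind of closed-form upper-layer solve lends itself to parallel training; the patent's own batch-based optimization procedure is not reproduced here.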
