Human-computer interaction method and device

A human-computer interaction technology involving images to be tested, applied in the field of image analysis. It addresses problems such as difficult embedding, low flexibility, and large product volume, and achieves good results, enhanced stability, and good flexibility.


Embodiment Construction

[0044] To address the low flexibility that conventional techniques offer users, a human-computer interaction method and device in which the user defines the target image are proposed.

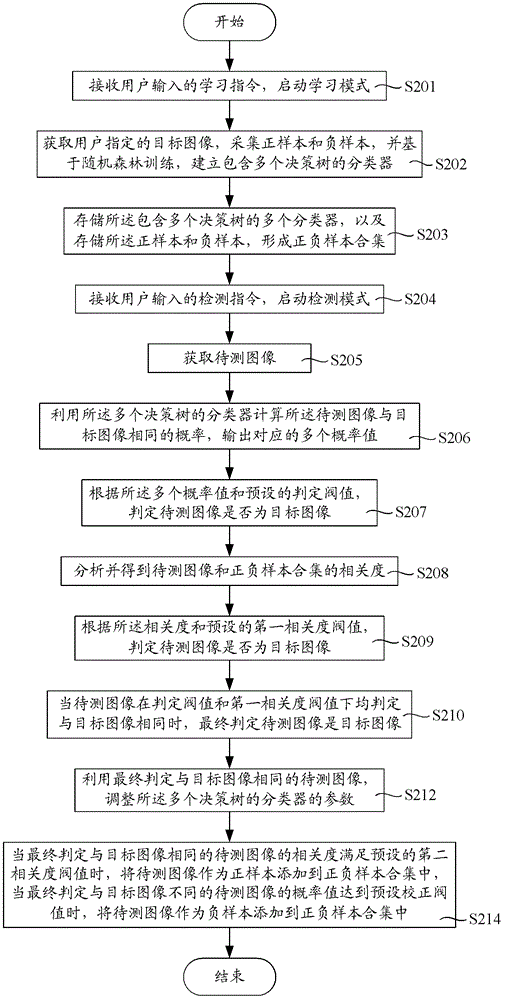

[0045] As shown in Figure 1, which is a flow chart of the steps of the human-computer interaction method according to an embodiment, the method includes the following steps:

[0046] Step S201: receive a learning instruction input by the user and start the learning mode.
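Step S201 amounts to a mode switch triggered by user input. The sketch below assumes a hypothetical `InteractionController` that stays in an idle state until it receives a learning instruction; the instruction string `"learn"` and the `IDLE` mode are illustrative assumptions, since the text names only the learning mode and does not say how the instruction is delivered.

```python
from enum import Enum


class Mode(Enum):
    """Hypothetical operating modes; only the learning mode is named in the text."""
    IDLE = "idle"
    LEARNING = "learning"


class InteractionController:
    """Sketch of step S201: a learning instruction starts the learning mode."""

    # Hypothetical encoding of the learning instruction (not specified in the source).
    LEARN_INSTRUCTION = "learn"

    def __init__(self) -> None:
        self.mode = Mode.IDLE

    def receive_instruction(self, instruction: str) -> Mode:
        # Enter learning mode only on the learning instruction;
        # any other input leaves the current mode unchanged.
        if instruction.strip().lower() == self.LEARN_INSTRUCTION:
            self.mode = Mode.LEARNING
        return self.mode


controller = InteractionController()
controller.receive_instruction("hello")  # ignored, stays idle
controller.receive_instruction("Learn")  # switches to learning mode
print(controller.mode)
```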

[0047] Step S202: acquire the target image specified by the user, collect positive and negative samples, and train a random forest to build a classifier comprising multiple decision trees.
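Step S202 trains a random forest on the positive and negative samples. As a minimal sketch, assuming each sample has already been converted into a numeric feature vector labeled 1 (positive, target) or 0 (negative, background), the pure-Python forest below grows each decision tree on a bootstrap resample, tries a random subset of about √d features at each split, chooses splits by Gini impurity, and classifies by majority vote. The feature representation, number of trees, and tree depth are assumptions, since the text does not specify them; a real system would more likely use a library implementation such as scikit-learn's `RandomForestClassifier`.

```python
import math
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def build_tree(X, y, depth, max_depth, n_sub, rng):
    """Grow one decision tree. A leaf is a class label; an inner node is a
    tuple (feature, threshold, left_subtree, right_subtree)."""
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(set(y)) == 1:
        return majority
    best = None  # (weighted impurity, feature, threshold)
    # Random-forest step: only a random subset of features is tried per split.
    for f in rng.sample(range(len(X[0])), n_sub):
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:  # no sampled feature can split this node
        return majority
    _, f, t = best

    def grow(idx):
        return build_tree([X[i] for i in idx], [y[i] for i in idx],
                          depth + 1, max_depth, n_sub, rng)

    li = [i for i, row in enumerate(X) if row[f] <= t]
    ri = [i for i, row in enumerate(X) if row[f] > t]
    return (f, t, grow(li), grow(ri))


def predict_tree(node, x):
    """Walk from the root to a leaf and return the leaf's class label."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


class RandomForest:
    def __init__(self, n_trees=10, max_depth=5, seed=0):
        self.n_trees = n_trees
        self.max_depth = max_depth
        self.rng = random.Random(seed)
        self.trees = []

    def fit(self, X, y):
        n = len(X)
        n_sub = max(1, int(math.sqrt(len(X[0]))))  # features tried per split
        self.trees = []
        for _ in range(self.n_trees):
            idx = [self.rng.randrange(n) for _ in range(n)]  # bootstrap resample
            self.trees.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                         0, self.max_depth, n_sub, self.rng))
        return self

    def predict_proba(self, x):
        """Fraction of trees voting for the positive class (label 1)."""
        return sum(predict_tree(t, x) for t in self.trees) / len(self.trees)

    def predict(self, x):
        return 1 if self.predict_proba(x) >= 0.5 else 0


# Toy 2-D feature vectors: label 1 = positive (target), 0 = negative (background).
X = [[0.9, 1.0], [1.0, 0.8], [0.8, 0.9], [1.1, 1.0],
     [0.1, 0.0], [0.0, 0.2], [0.2, 0.1], [0.1, 0.1]]
y = [1, 1, 1, 1, 0, 0, 0, 0]
forest = RandomForest(n_trees=10, max_depth=3, seed=0).fit(X, y)
print(forest.predict([1.2, 1.2]), forest.predict([0.0, 0.0]))
```

The vote fraction from `predict_proba` can serve as a confidence score; thresholding it above 0.5 would trade missed detections for fewer false positives, a design choice the source does not address.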

[0048] The target image specified by the user is the user-defined target image. Suppose the user wants to use the palm for human-computer interaction: in the learning mode, the user can then present the palm to the camera so that the palm image serves as the target image. In order to make the collection of positive sampl...
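The passage describing how positive samples are collected is cut off, so the patent's own sampling procedure is not recoverable here. Purely as an illustration of one common approach, not the method of this patent, the sketch below assumes the user's target (e.g. the palm) occupies a known box in a grayscale camera frame: windows that overlap the box heavily (IoU ≥ `pos_iou`) become positives, and same-size windows elsewhere (IoU < `neg_iou`) become negatives. The box format `(top, left, height, width)`, the thresholds, and the raw-pixel features are all hypothetical.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (top, left, height, width)."""
    at, al, ah, aw = a
    bt, bl, bh, bw = b
    ih = max(0, min(at + ah, bt + bh) - max(at, bt))
    iw = max(0, min(al + aw, bl + bw) - max(al, bl))
    inter = ih * iw
    union = ah * aw + bh * bw - inter
    return inter / union if union else 0.0


def crop(image, box):
    """Flatten the pixels inside `box` into a feature vector (row-major)."""
    top, left, h, w = box
    return [float(v) for row in image[top:top + h] for v in row[left:left + w]]


def collect_samples(image, target_box, shift=1, stride=None,
                    pos_iou=0.5, neg_iou=0.3):
    """Hypothetical sampler returning (positives, negatives) as flattened patches.

    Positives: the target box and copies shifted by up to `shift` pixels whose
    IoU with the target is at least `pos_iou`.
    Negatives: same-size windows on a `stride` grid whose IoU with the target
    is below `neg_iou`. Keeping neg_iou < pos_iou means no window is both.
    """
    H, W = len(image), len(image[0])
    top, left, h, w = target_box
    stride = stride or max(1, min(h, w) // 2)

    def inside(b):
        return b[0] >= 0 and b[1] >= 0 and b[0] + b[2] <= H and b[1] + b[3] <= W

    positives = []
    for dy in range(-shift, shift + 1):
        for dx in range(-shift, shift + 1):
            box = (top + dy, left + dx, h, w)
            if inside(box) and iou(box, target_box) >= pos_iou:
                positives.append(crop(image, box))

    negatives = []
    for y in range(0, H - h + 1, stride):
        for x in range(0, W - w + 1, stride):
            box = (y, x, h, w)
            if iou(box, target_box) < neg_iou:
                negatives.append(crop(image, box))
    return positives, negatives


# Hypothetical 10x10 frame with a bright 4x4 "target" at rows/cols 2..5.
image = [[255 if 2 <= r < 6 and 2 <= c < 6 else 0 for c in range(10)]
         for r in range(10)]
positives, negatives = collect_samples(image, target_box=(2, 2, 4, 4))
print(len(positives), len(negatives))
```

Patches produced this way could be fed, as labeled feature vectors, to a random forest classifier of the kind described in step S202.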
