Method and device for distributing storage resources in GPU in big integer calculating process

A storage resource allocation technology, applied in the field of storage resource allocation, that addresses problems such as high computing overhead and achieves faster execution, reduced register usage, and improved parallel execution efficiency.


Embodiment Construction

[0122] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below with reference to the accompanying drawings and examples.

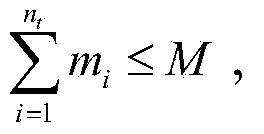

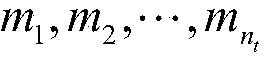

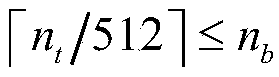

[0123] To increase the execution speed of large-integer calculations when a GPU is used to perform the large-integer calculations of the SM2 algorithm, the present invention allocates registers to each thread while GPU threads execute the various large-integer calculations. The operands and intermediate variables of the large-integer calculation currently being executed are stored in the allocated registers, while the modulus of that calculation and any other data unrelated to it are stored in memory. In this way, the operands and intermediate variables that the GPU thread calls during the large-integer calculation can be obtained directly by repeatedly ca...
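The register/memory split described above can be illustrated with a minimal sketch of a single operation, 256-bit modular addition modulo the SM2 prime p. This is not the patent's implementation: the 32-bit little-endian limb layout, the choice of modular addition as the example, and the names `mod_add`, `less_than_modulus`, and `kModulusP` are hypothetical. The sketch is written as host C++ so it can be compiled and checked; in a CUDA port, the fixed-size local arrays (operands and intermediates) would be candidates for register allocation when the loops are fully unrolled, and the modulus would typically be placed in `__constant__` or global memory. Whether the compiler actually keeps the arrays in registers depends on the compiler and on register pressure.

```cpp
#include <cassert>
#include <cstdint>

// Number of 32-bit limbs in a 256-bit SM2 field element (little-endian limb order).
constexpr int kLimbs = 8;

// SM2 prime p = FFFFFFFE FFFFFFFF FFFFFFFF FFFFFFFF FFFFFFFF 00000000 FFFFFFFF FFFFFFFF.
// The modulus is shared by every thread, so this sketch keeps it in read-only
// memory instead of per-thread registers (on a GPU, e.g. __constant__ memory).
static const uint32_t kModulusP[kLimbs] = {
    0xFFFFFFFFu, 0xFFFFFFFFu, 0x00000000u, 0xFFFFFFFFu,
    0xFFFFFFFFu, 0xFFFFFFFFu, 0xFFFFFFFFu, 0xFFFFFFFEu};

// Returns true if x < p, comparing from the most significant limb down.
bool less_than_modulus(const uint32_t x[kLimbs]) {
    for (int i = kLimbs - 1; i >= 0; --i) {
        if (x[i] != kModulusP[i]) return x[i] < kModulusP[i];
    }
    return false;  // x == p
}

// Hypothetical per-thread routine: r = (a + b) mod p, assuming a, b < p.
void mod_add(const uint32_t a_mem[kLimbs], const uint32_t b_mem[kLimbs],
             uint32_t r_mem[kLimbs]) {
    assert(less_than_modulus(a_mem) && less_than_modulus(b_mem));

    // Operands and intermediates: fixed-size local arrays indexed by loops with
    // compile-time bounds, which a GPU compiler can unroll and keep in registers.
    uint32_t a[kLimbs], b[kLimbs], sum[kLimbs], diff[kLimbs];
    for (int i = 0; i < kLimbs; ++i) { a[i] = a_mem[i]; b[i] = b_mem[i]; }

    // sum = a + b (mod 2^256); the carry out of the top limb is left in 'carry'.
    uint64_t carry = 0;
    for (int i = 0; i < kLimbs; ++i) {
        uint64_t t = (uint64_t)a[i] + b[i] + carry;
        sum[i] = (uint32_t)t;
        carry = t >> 32;
    }

    // diff = sum - p (mod 2^256); p is read from memory, borrow left in 'borrow'.
    uint64_t borrow = 0;
    for (int i = 0; i < kLimbs; ++i) {
        uint64_t t = (uint64_t)sum[i] - kModulusP[i] - borrow;
        diff[i] = (uint32_t)t;
        borrow = (t >> 32) & 1;  // 1 if the subtraction wrapped below zero
    }

    // a + b >= p exactly when the addition overflowed 2^256 or the subtraction
    // did not borrow; in that case the reduced result is diff, otherwise sum.
    const bool use_diff = (carry != 0) || (borrow == 0);
    for (int i = 0; i < kLimbs; ++i) r_mem[i] = use_diff ? diff[i] : sum[i];
}
```

In this sketch only the final result is written back to caller memory; every other value the calculation touches repeatedly, apart from the shared modulus, stays in the thread's local arrays, which is the access pattern the paragraph above describes.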

