A method and system for Hadoop program testing

A program testing technique, applied in the field of HADOOP program testing, that addresses problems such as increased test execution time and thereby speeds up and shortens test execution.

Examples

Embodiment 1

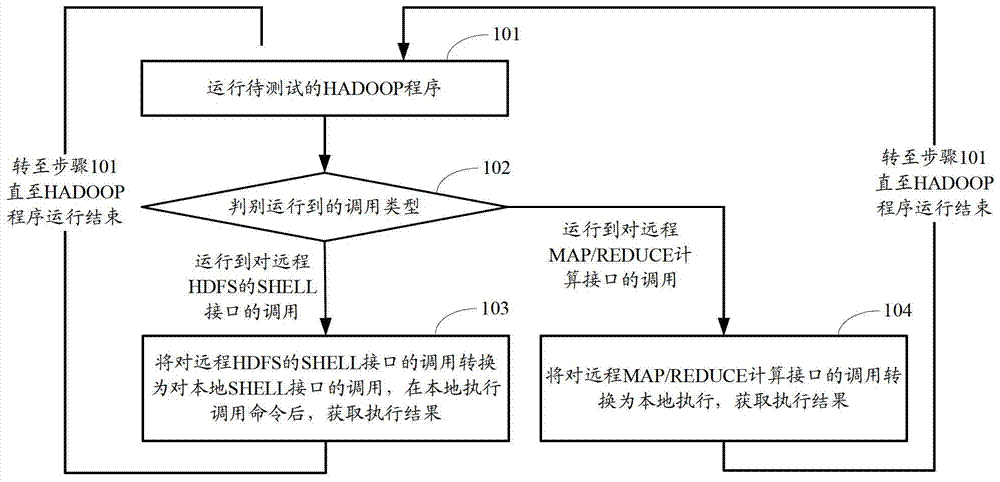

[0042] Figure 1 is a flowchart of the HADOOP program testing method provided in Embodiment 1 of the present invention. As shown in Figure 1, the method includes the following steps:

[0043] Step 101: Run the HADOOP program to be tested.

[0044] Step 102: Determine the type of the call to be executed. If it is a call to the SHELL interface of the remote HDFS, go to step 103; if it is a call to the remote MAP / REDUCE computing interface, go to step 104.

[0045] The HADOOP program mainly makes two kinds of calls: calls to the SHELL interface of the remote HDFS and calls to the remote MAP / REDUCE computing interface. Calls to the SHELL interface of the remote HDFS are mainly operations on remote files, such as read, write, upload, download, display, copy, move, and delete. Calls to the remote MAP / REDUCE computing interface mainly start MAP / REDUCE computing tasks in streaming mode.
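The branch in step 102 can be illustrated with a minimal sketch. The source does not say how a call is recognized, so the rules below are assumptions: file operations are taken to be `hadoop fs` or `hadoop dfs` commands, and streaming jobs are taken to be `hadoop jar` commands whose jar name contains `streaming`. The names `CallType` and `classify_call` are hypothetical.

```python
import shlex
from enum import Enum


class CallType(Enum):
    HDFS_SHELL = "hdfs_shell"  # would be handled by step 103
    MAP_REDUCE = "map_reduce"  # would be handled by step 104
    OTHER = "other"            # not a HADOOP call; left unchanged


def classify_call(command: str) -> CallType:
    """Decide which of the two HADOOP call kinds a command line represents."""
    tokens = shlex.split(command)
    if len(tokens) < 2 or tokens[0] != "hadoop":
        return CallType.OTHER
    # Assumption: HDFS file operations are issued as `hadoop fs` / `hadoop dfs`.
    if tokens[1] in ("fs", "dfs"):
        return CallType.HDFS_SHELL
    # Assumption: streaming jobs are launched through a jar named "...streaming...".
    if tokens[1] == "jar" and len(tokens) > 2 and "streaming" in tokens[2]:
        return CallType.MAP_REDUCE
    return CallType.OTHER
```

A test harness built this way would route `HDFS_SHELL` calls to the conversion of step 103 and `MAP_REDUCE` calls to the conversion of step 104.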

[0046] Step 103: Convert the...

Embodiment 2

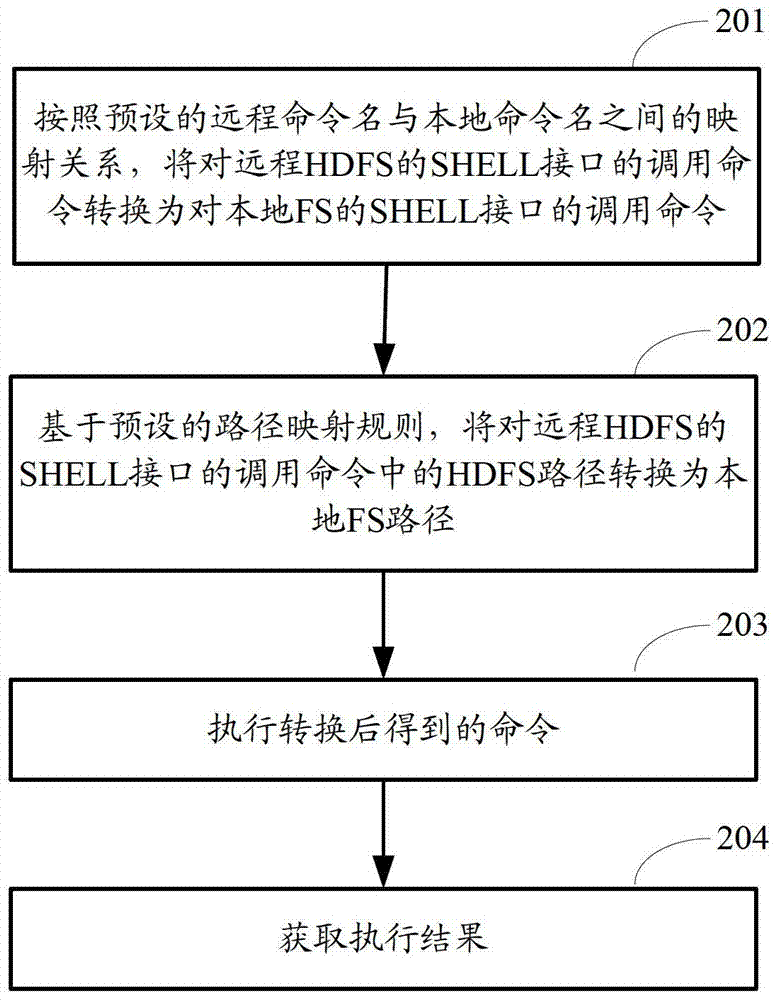

[0052] Figure 2 is a flowchart of the specific method of step 103 above, provided in Embodiment 2 of the present invention. As shown in Figure 2, it includes the following steps:

[0053] Step 201: According to the preset mapping relationship between remote command names and local command names, convert the command that invokes the SHELL interface of the remote HDFS into a command that invokes the SHELL interface of the local FS.

[0054] The mapping relationship between remote command names and local command names is pre-configured, so that a command invoking the SHELL interface of the remote HDFS can be converted into a command invoking the SHELL interface of the local FS. Table 1 shows an example of this mapping.

[0055] Table 1

[0056]
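The command-name conversion of step 201 might look like the following sketch. The contents of Table 1 are not available in this text, so the entries in `COMMAND_MAP` are illustrative assumptions rather than the patent's actual mapping, and `convert_command_name` is a hypothetical helper. Only the command name is rewritten here; the path arguments are passed through untouched.

```python
import shlex
from typing import List

# Hypothetical remote-to-local command-name mapping. These entries are
# illustrative only and are NOT the contents of Table 1.
COMMAND_MAP = {
    "-ls": ["ls"],
    "-cat": ["cat"],
    "-mkdir": ["mkdir", "-p"],
    "-rm": ["rm"],
    "-mv": ["mv"],
    "-cp": ["cp"],
    "-put": ["cp"],  # upload becomes a plain local copy
    "-get": ["cp"],  # download becomes a plain local copy
}


def convert_command_name(command: str) -> List[str]:
    """Replace `hadoop fs <remote-name>` with the mapped local command."""
    tokens = shlex.split(command)
    if len(tokens) < 3 or tokens[0] != "hadoop" or tokens[1] not in ("fs", "dfs"):
        raise ValueError(f"not an HDFS shell call: {command!r}")
    remote_name = tokens[2]
    if remote_name not in COMMAND_MAP:
        raise KeyError(f"no local mapping configured for {remote_name!r}")
    # Keep the remaining arguments; path conversion is a separate step.
    return COMMAND_MAP[remote_name] + tokens[3:]
```

Returning an argument list rather than a string lets the caller pass the result to `subprocess.run` without shell quoting concerns.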

[0057] Step 202: Based on a preset path mapping rule, convert the HDFS path in the command invoking the SHELL interface of the remote HDFS into a local FS path.
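A path mapping rule of the kind step 202 refers to could be sketched as below. The actual rule is not given in this text, so the sketch assumes one simple possibility: every HDFS path is re-rooted under a local sandbox directory. `LOCAL_ROOT` and `to_local_path` are hypothetical names, and treating relative HDFS paths as relative to the root is a simplification.

```python
import posixpath
from urllib.parse import urlparse

LOCAL_ROOT = "/tmp/hdfs_local"  # hypothetical local directory standing in for HDFS "/"


def to_local_path(hdfs_path: str, local_root: str = LOCAL_ROOT) -> str:
    """Map an HDFS path onto the local FS by re-rooting it under local_root."""
    parsed = urlparse(hdfs_path)
    # Drop an optional hdfs://host:port prefix and keep only the path part.
    path = parsed.path if parsed.scheme == "hdfs" else hdfs_path
    # normpath collapses "." and ".." so the result cannot escape local_root.
    normalized = posixpath.normpath("/" + path.lstrip("/"))
    return posixpath.join(local_root, normalized.lstrip("/"))
```

Re-rooting under a dedicated directory keeps test data isolated from the rest of the local file system and makes cleanup after a test run a single directory removal.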

[0058] Pre-configu...

Embodiment 3

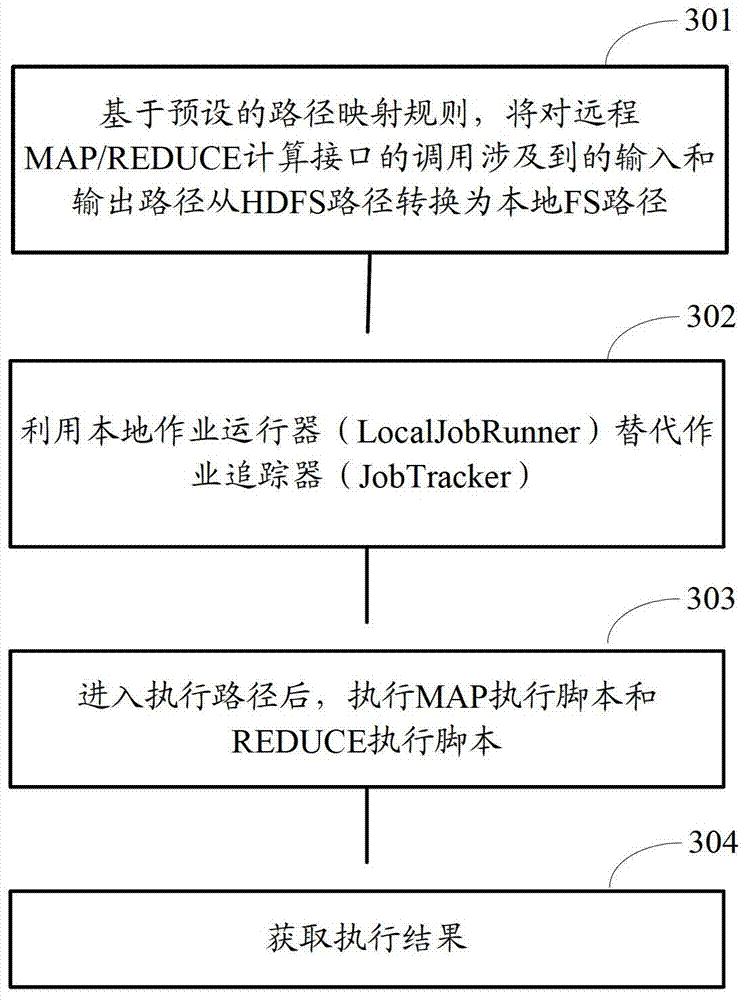

[0067] Figure 3 is a flowchart of the specific method of step 104 above, provided in Embodiment 3 of the present invention. As shown in Figure 3, it includes the following steps:

[0068] Step 301: Based on a preset path mapping rule, convert the input and output paths involved in the invocation of the remote MAP / REDUCE computing interface from the HDFS path to the local FS path.

[0069] The input and output paths involved in the invocation of the remote MAP / REDUCE computing interface are HDFS paths. Path localization is the basis for localizing job execution. The path mapping rules used here are the same as those in Embodiment 2 and are not repeated here.
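For a job started in streaming mode, step 301 amounts to rewriting the values of the job's input and output options. The sketch below assumes the Hadoop Streaming options `-input` and `-output` carry those paths and reuses the same assumed re-rooting rule as a stand-in for the preset path mapping rule; `localize_job_paths` and `LOCAL_ROOT` are hypothetical names.

```python
import posixpath
from typing import List

LOCAL_ROOT = "/tmp/hdfs_local"       # hypothetical local stand-in for HDFS "/"
PATH_OPTIONS = {"-input", "-output"}  # streaming options whose value is a path


def to_local_path(hdfs_path: str, local_root: str = LOCAL_ROOT) -> str:
    """Assumed mapping rule: re-root the HDFS path under local_root."""
    normalized = posixpath.normpath("/" + hdfs_path.lstrip("/"))
    return posixpath.join(local_root, normalized.lstrip("/"))


def localize_job_paths(args: List[str]) -> List[str]:
    """Rewrite the value following each path option; copy everything else."""
    result = []
    expect_path = False
    for arg in args:
        if expect_path:
            result.append(to_local_path(arg))
            expect_path = False
        else:
            result.append(arg)
            expect_path = arg in PATH_OPTIONS
    return result
```

Other options, such as `-mapper` and `-reducer`, name local executables and are deliberately left unchanged.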

[0070] Step 302: Use the local job runner (LocalJobRunner) in place of the job tracker (JobTracker).

[0071] The HADOOP system uses the job client to submit the job to the JobTracker, and then the JobTracker divides the job into computing tasks and assigns them to multiple TaskTrackers for para...
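How the replacement in step 302 is carried out is not visible in this text. One commonly used way in JobTracker-era Hadoop, shown here only as an assumption, is to override the configuration so that the job client picks the LocalJobRunner: setting `mapred.job.tracker` to `local` and `fs.default.name` to `file:///`. The sketch injects these as `-D` generic options, which must come before the streaming options; `force_local_execution` is a hypothetical helper.

```python
from typing import List

# Assumed overrides for JobTracker-era Hadoop: run the job in-process with
# LocalJobRunner and resolve paths against the local file system.
LOCAL_RUN_PROPERTIES = {
    "mapred.job.tracker": "local",
    "fs.default.name": "file:///",
}


def force_local_execution(tokens: List[str]) -> List[str]:
    """Insert -D overrides right after `hadoop jar <jar>`, before job options."""
    if len(tokens) < 3 or tokens[:2] != ["hadoop", "jar"]:
        raise ValueError("expected a command of the form: hadoop jar <jar> ...")
    overrides: List[str] = []
    for key, value in LOCAL_RUN_PROPERTIES.items():
        overrides += ["-D", f"{key}={value}"]
    # Generic options go between the jar argument and the job's own options.
    return tokens[:3] + overrides + tokens[3:]
```

With these overrides the job runs in a single local process, so no cluster scheduling or task distribution is involved during the test.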
