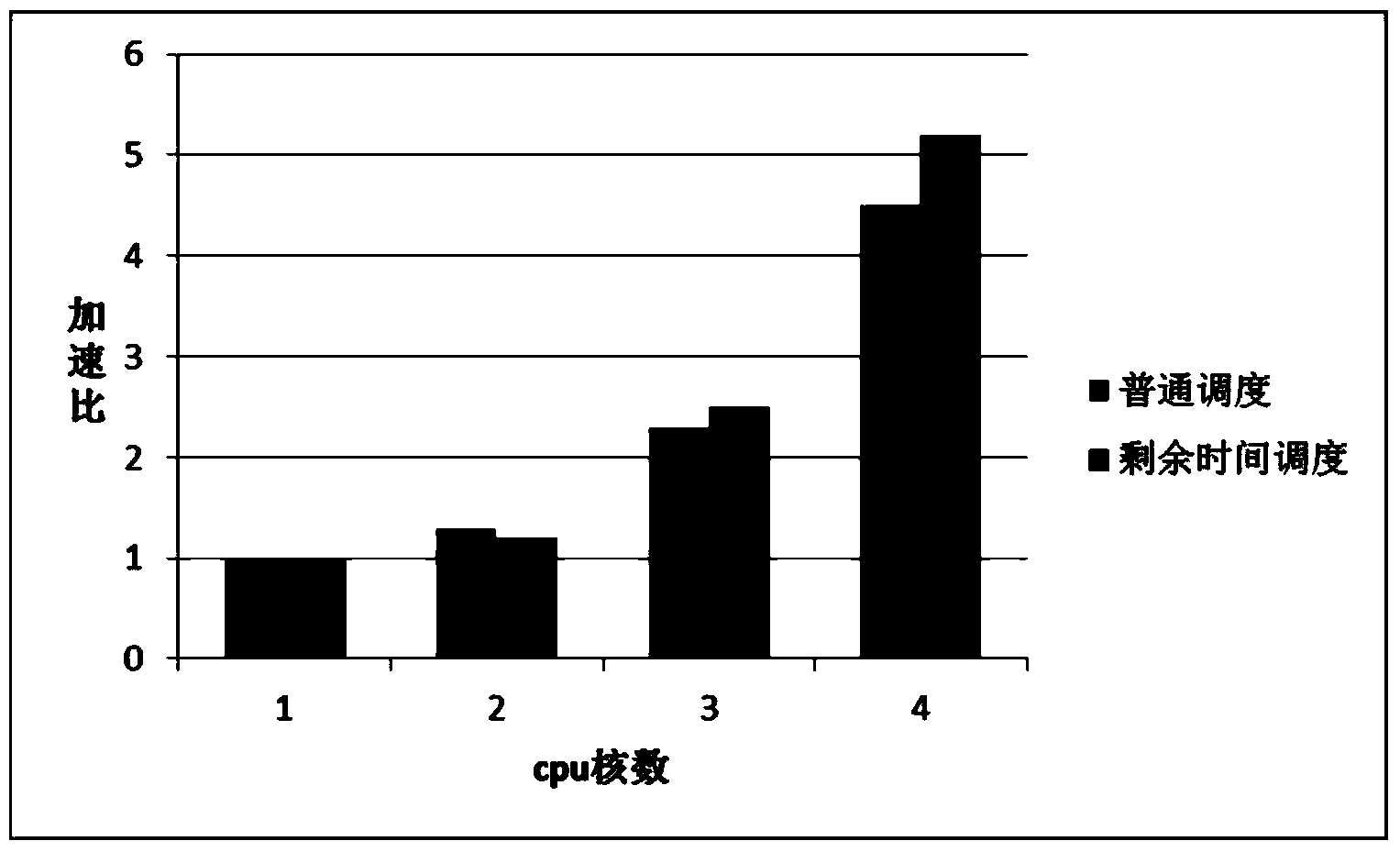

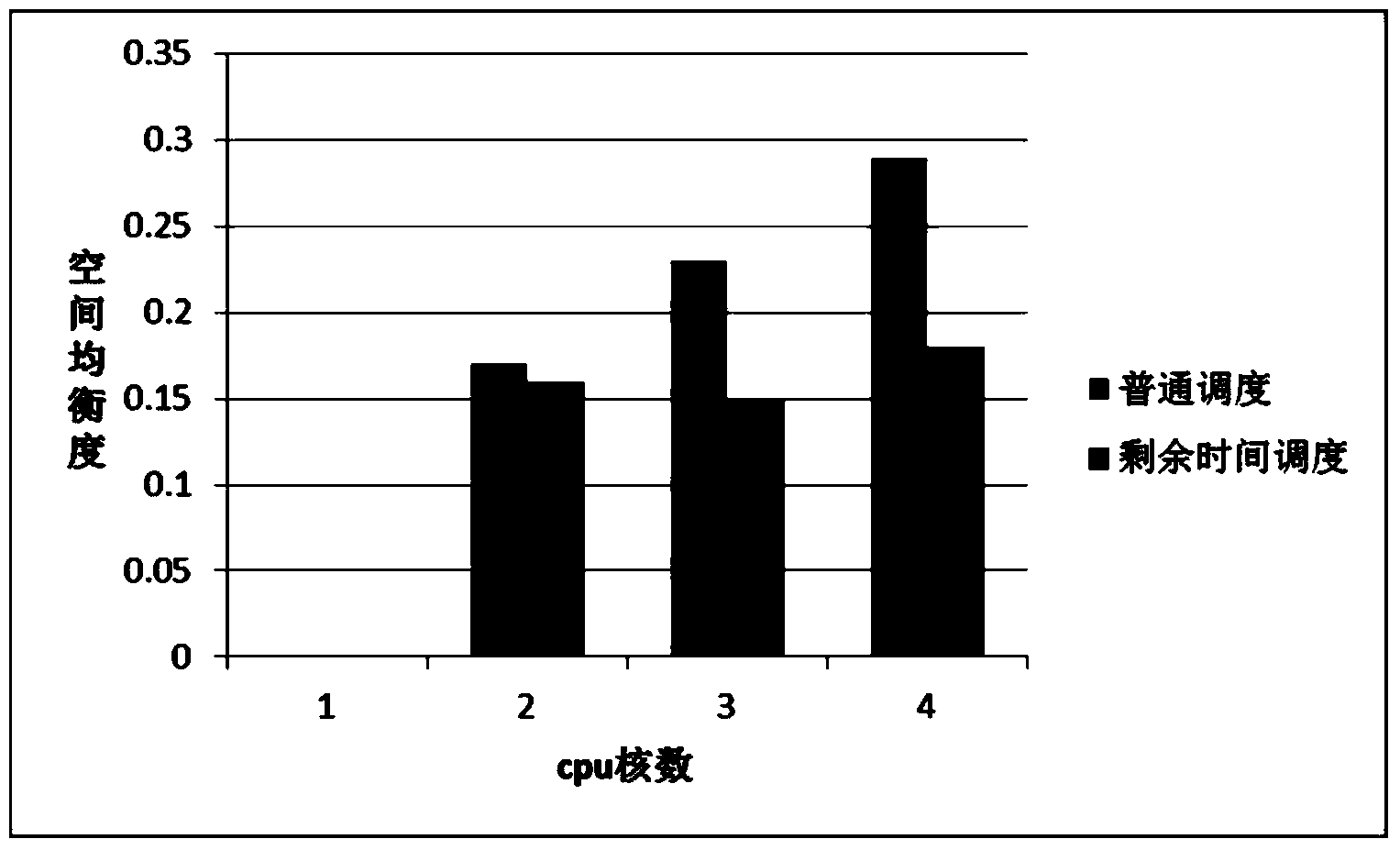

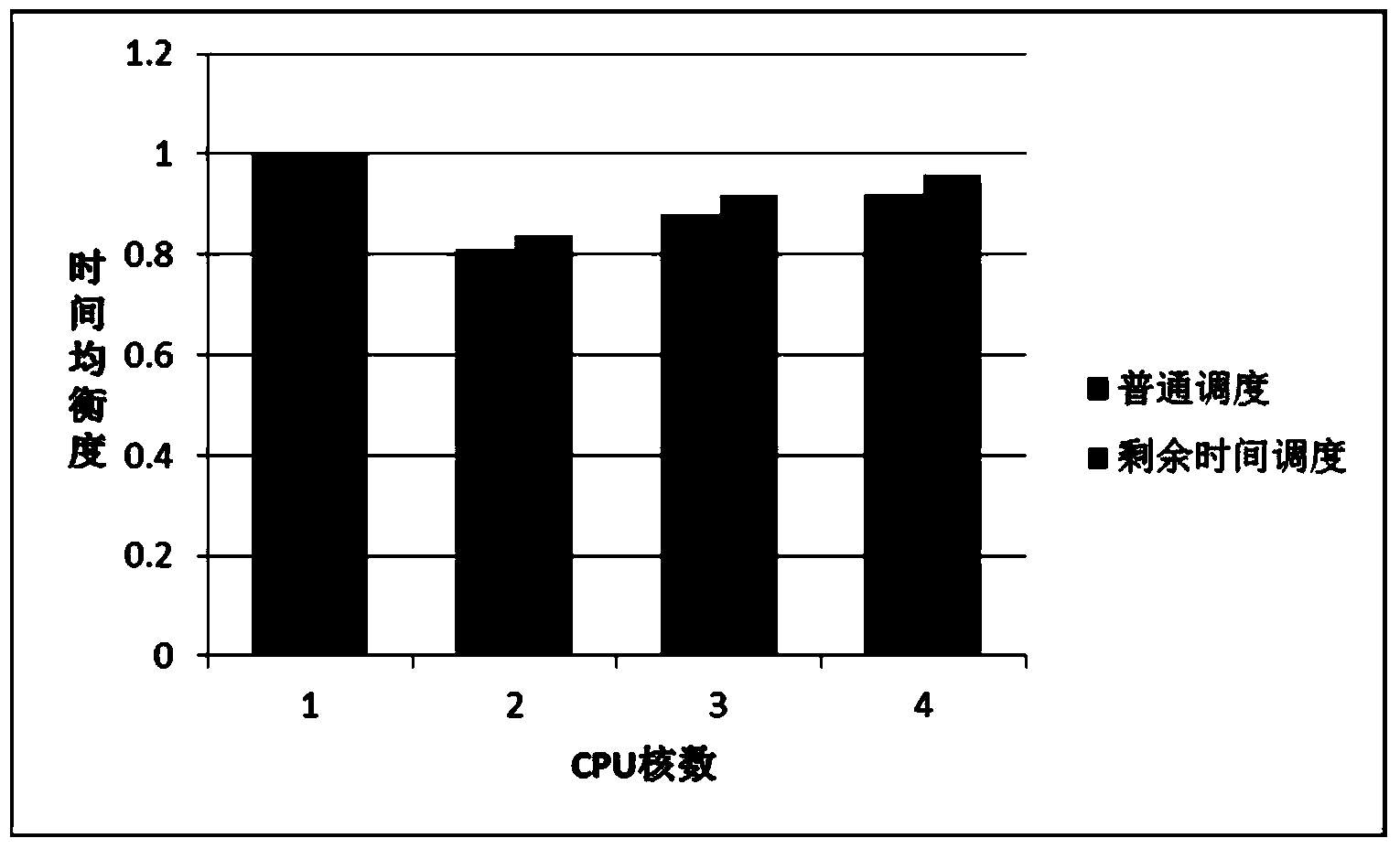

Network processor load balancing and scheduling method based on residual task processing time compensation

The invention relates to network processors and load balancing technology, applied in data exchange networks, digital transmission systems, electrical components, and similar fields. It addresses problems such as coarse data flow division, unbalanced data flow size distribution, and difficulty in achieving load balance.


Embodiment Construction

[0049] The present invention will be further described in detail below in conjunction with the accompanying drawings.

[0050] The method proposed by the invention addresses how a single data stream is distributed across the processing units of a multi-core processor running in parallel: the data stream is allocated preferentially to the processing unit found to have the smallest load.
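The allocation rule in [0050] can be illustrated with a minimal sketch. It assumes that each processing unit's load is summarized by a single number (here, an estimate of its remaining task processing time) and that the cost of the incoming work is known; the names `pick_least_loaded`, `assign`, `remaining_time`, and `cost` are hypothetical and do not come from the patent, whose actual compensation formula is not reproduced here.

```python
from typing import Dict


def pick_least_loaded(remaining_time: Dict[str, float]) -> str:
    """Return the id of the processing unit with the smallest load.

    Assumption: load is modeled as a single float per unit
    (estimated remaining task processing time).
    """
    if not remaining_time:
        raise ValueError("no processing units available")
    # min() over the dict keys, ordered by each unit's current load.
    return min(remaining_time, key=remaining_time.get)


def assign(remaining_time: Dict[str, float], cost: float) -> str:
    """Assign work of the given cost to the least-loaded unit.

    Updates the chosen unit's load in place and returns its id.
    """
    unit = pick_least_loaded(remaining_time)
    remaining_time[unit] += cost
    return unit


if __name__ == "__main__":
    loads = {"m1": 3.0, "m2": 1.0, "m3": 2.0}
    print(assign(loads, 1.5))
    print(loads)
```

Updating the load immediately after each assignment keeps later decisions consistent with earlier ones. A real scheduler would also need to reduce each unit's load as work completes, which this sketch leaves out.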

[0051] The network processor load balancing and scheduling method of the present invention, based on remaining task processing time compensation, includes the following steps:

[0052] Step A: Associated information of the data stream

[0053] (A) A multi-core processor includes multiple processing units, expressed as $M=\{m_1, m_2, \ldots, m_K\}$, where $m_1$ indicates the first processing unit, $m_2$ indicates the second processing unit, $m_K$ indicates the last processing unit, and $K$ indicates the total number of processing units; for the convenience of the following enumeration, $m_K$ also denotes an arbitrary processing uni...
