77 results about how to "Reduce scheduling overhead" patented technology

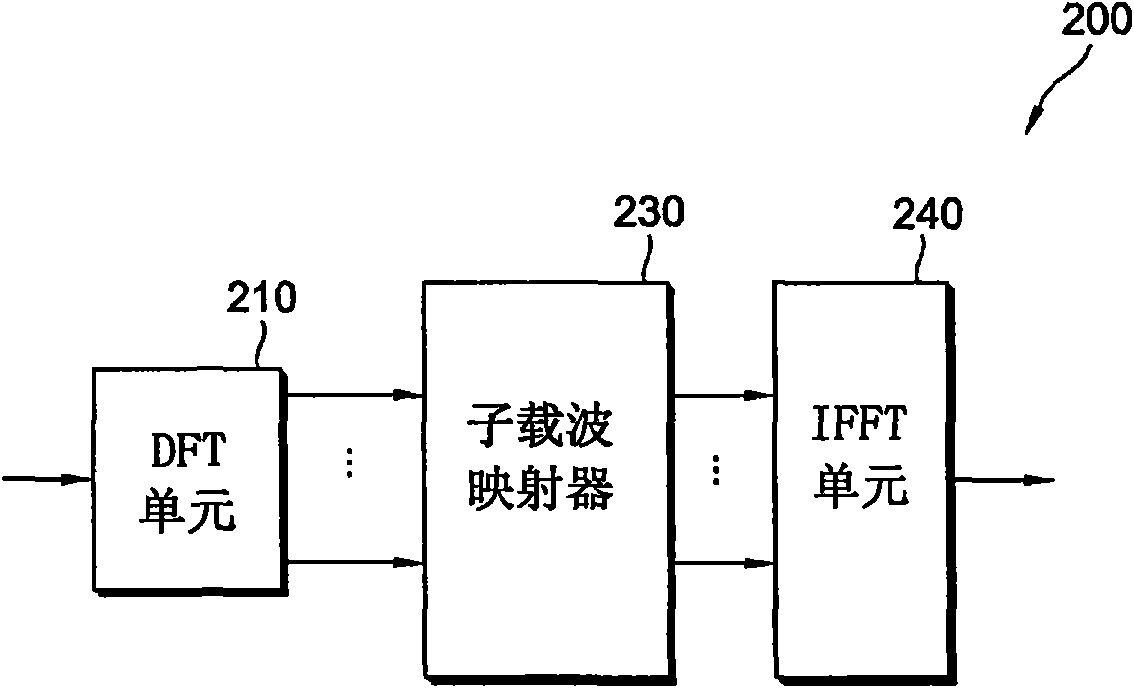

Method of transmitting sounding reference signal in wireless communication system

Active | CN101617489A | Reduce consumption; Reduce scheduling overhead; Orthogonal multiplex; Communications system; Sounding reference signal

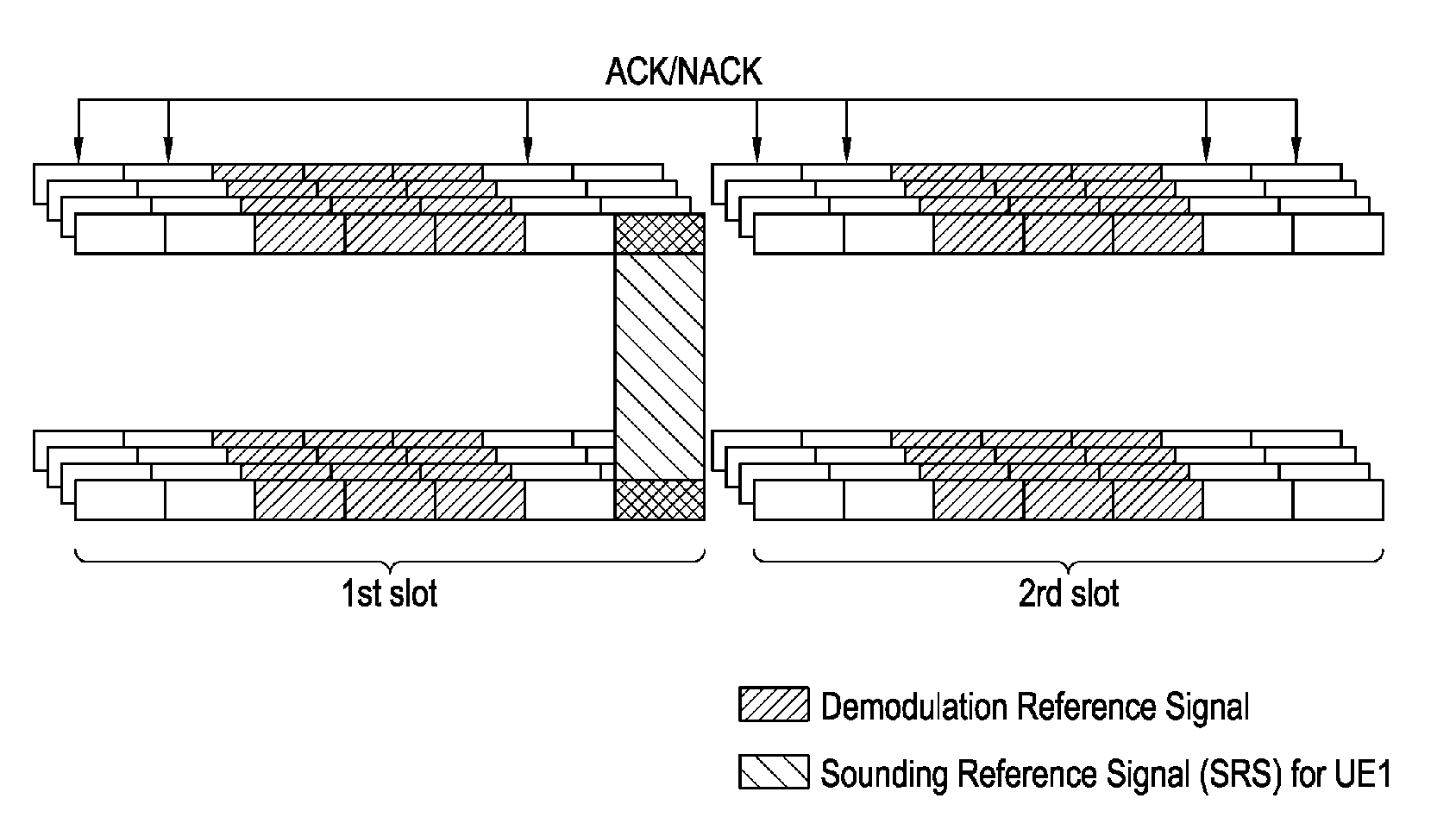

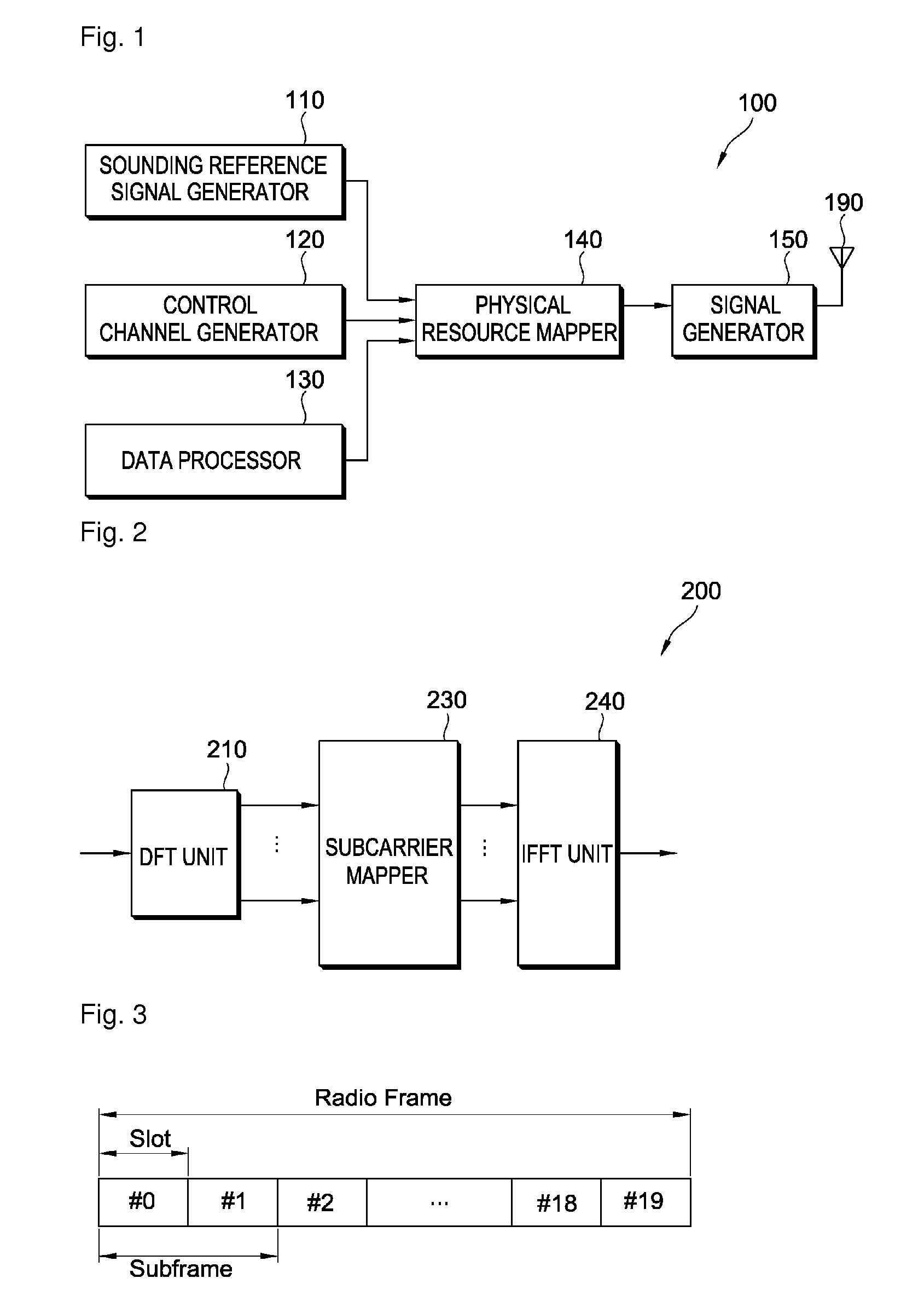

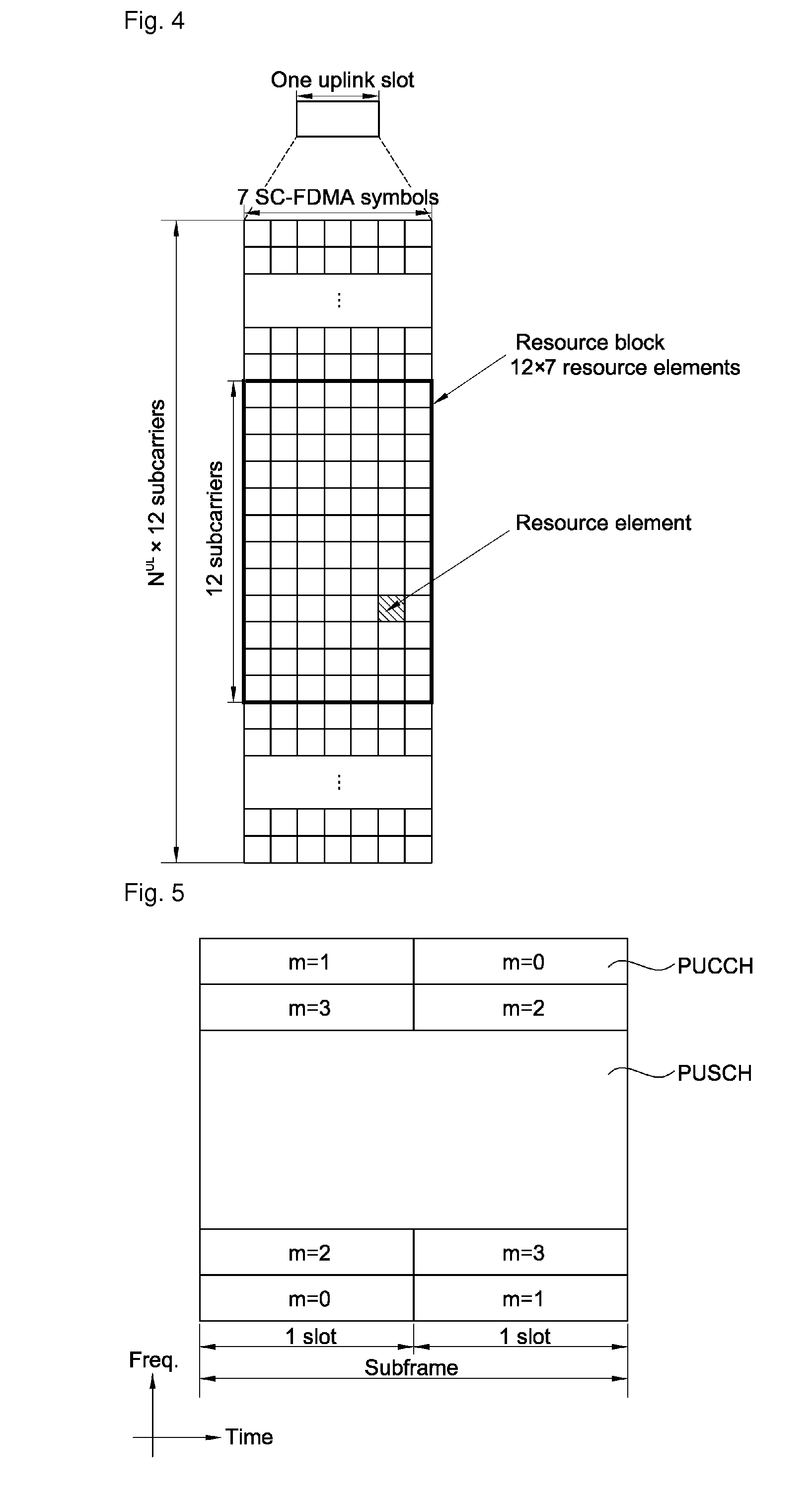

A method of transmitting a sounding reference signal includes generating a physical uplink control channel (PUCCH) carrying uplink control information on a subframe, the subframe comprising a plurality of SC-FDMA (single carrier-frequency division multiple access) symbols, wherein the uplink control information is punctured on one SC-FDMA symbol in the subframe, and simultaneously transmitting the uplink control information on the PUCCH and a sounding reference signal on the punctured SC-FDMA symbol. The uplink control information and the sounding reference signal can thus be transmitted simultaneously without affecting the single-carrier characteristic.

Owner:LG ELECTRONICS INC
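The puncturing step in the LG abstract above can be pictured as a per-symbol map of what the UE sends. This is a minimal sketch, assuming a normal cyclic prefix (14 SC-FDMA symbols per subframe) and that the punctured symbol is the last one, as in LTE's SRS placement; `SYMBOLS_PER_SUBFRAME`, `SRS_SYMBOL` and `build_uplink_subframe` are hypothetical names, not taken from the patent.

```python
SYMBOLS_PER_SUBFRAME = 14  # assumption: normal cyclic prefix, 2 slots x 7 symbols
SRS_SYMBOL = SYMBOLS_PER_SUBFRAME - 1  # assumption: the SRS uses the last symbol


def build_uplink_subframe(uci_symbols, send_srs):
    """Return one entry per SC-FDMA symbol: a UCI symbol or the string "SRS".

    The PUCCH is generated over the whole subframe first; if an SRS is due,
    the UCI on SRS_SYMBOL is punctured (dropped) and the SRS takes its place.
    """
    if len(uci_symbols) != SYMBOLS_PER_SUBFRAME:
        raise ValueError("expected one UCI symbol per SC-FDMA symbol")
    subframe = list(uci_symbols)
    if send_srs:
        subframe[SRS_SYMBOL] = "SRS"
    return subframe
```

Every SC-FDMA symbol still carries exactly one signal, so PUCCH and SRS are never combined within the same symbol; that is the intuition behind the claim that the single-carrier characteristic is preserved.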

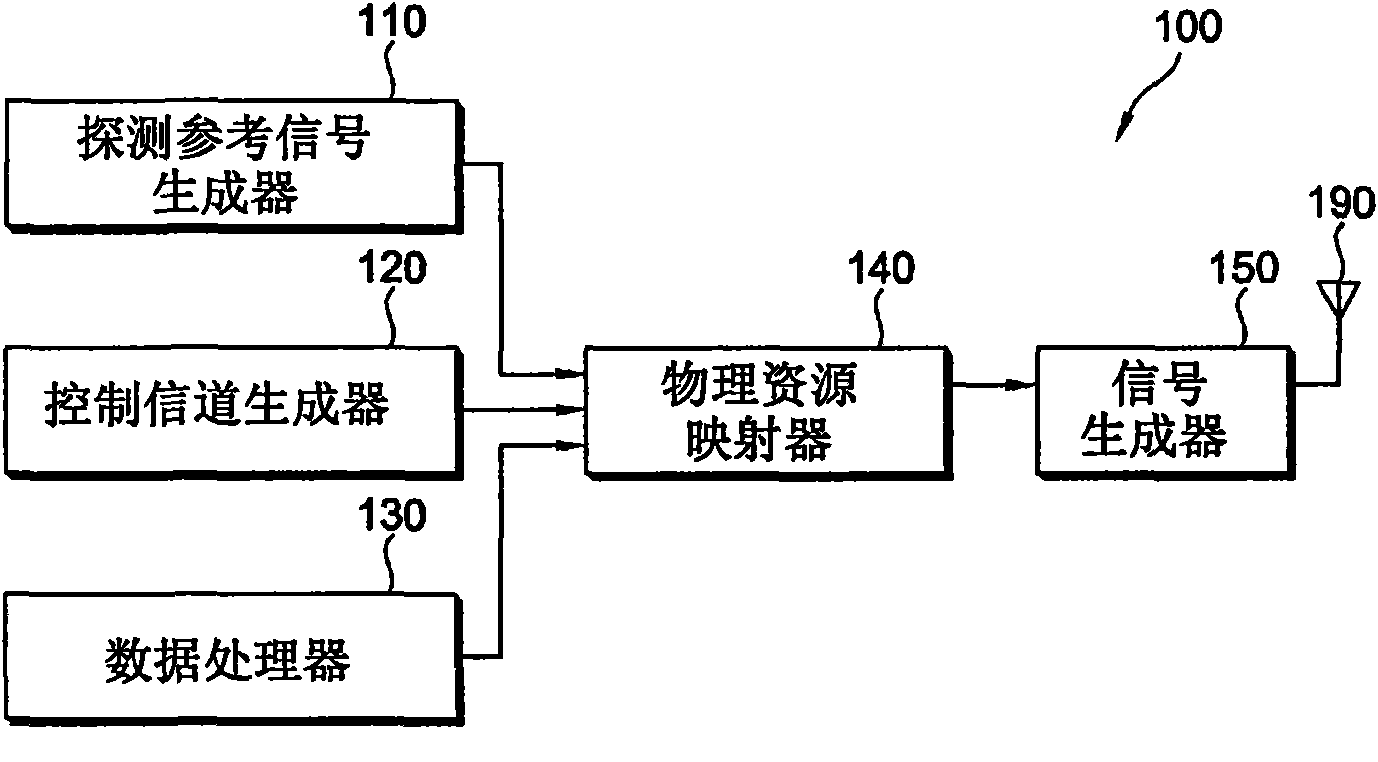

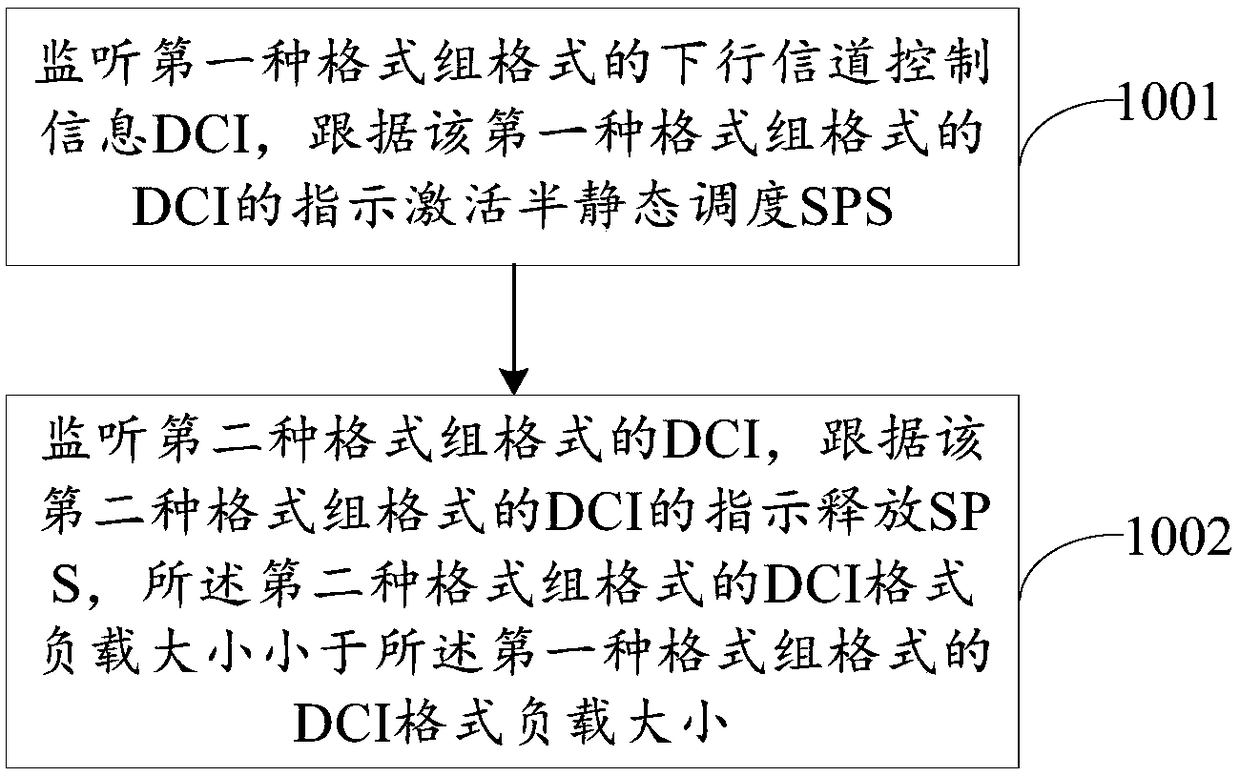

Semi-static resource scheduling method, power control method and corresponding user equipment

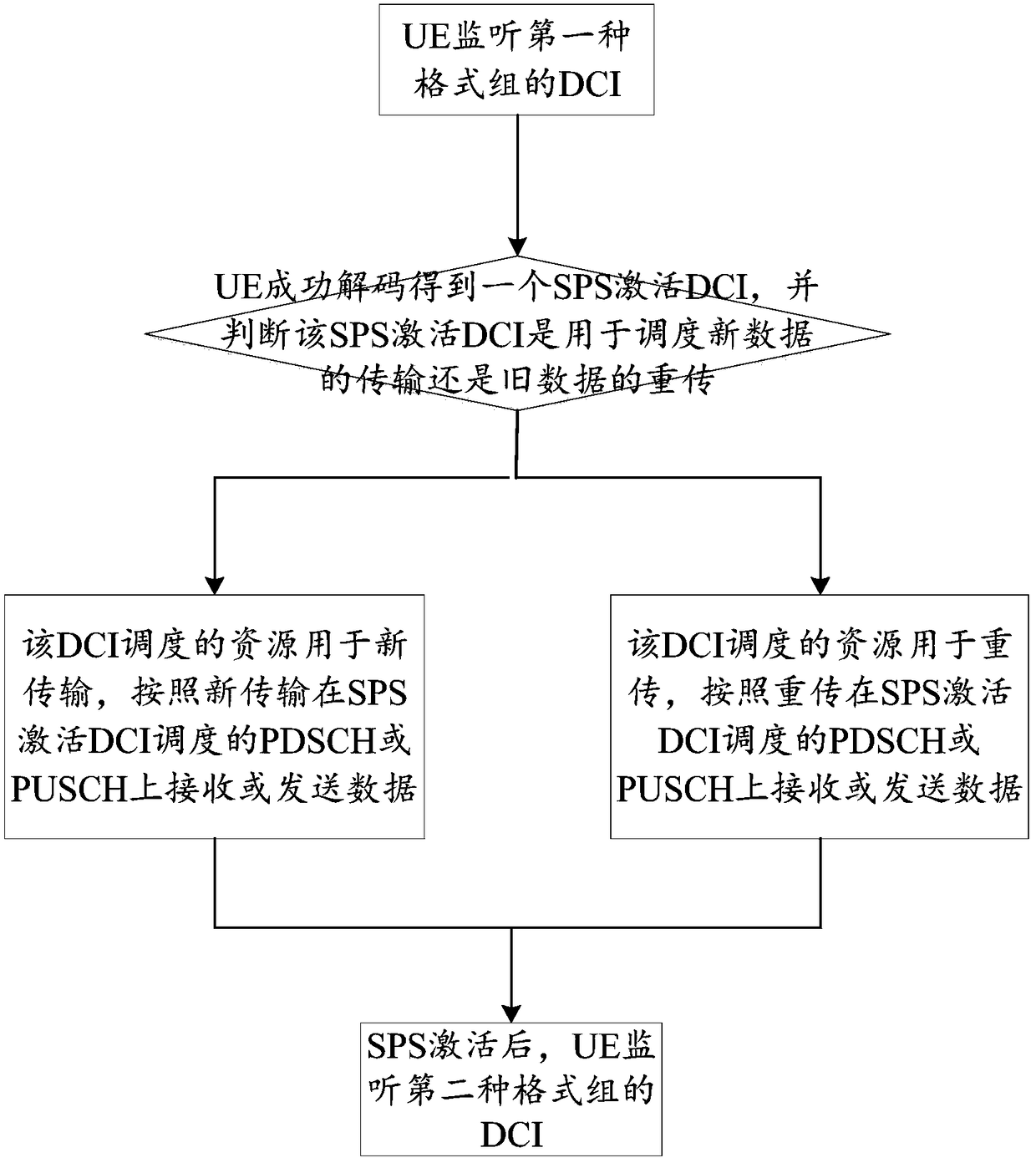

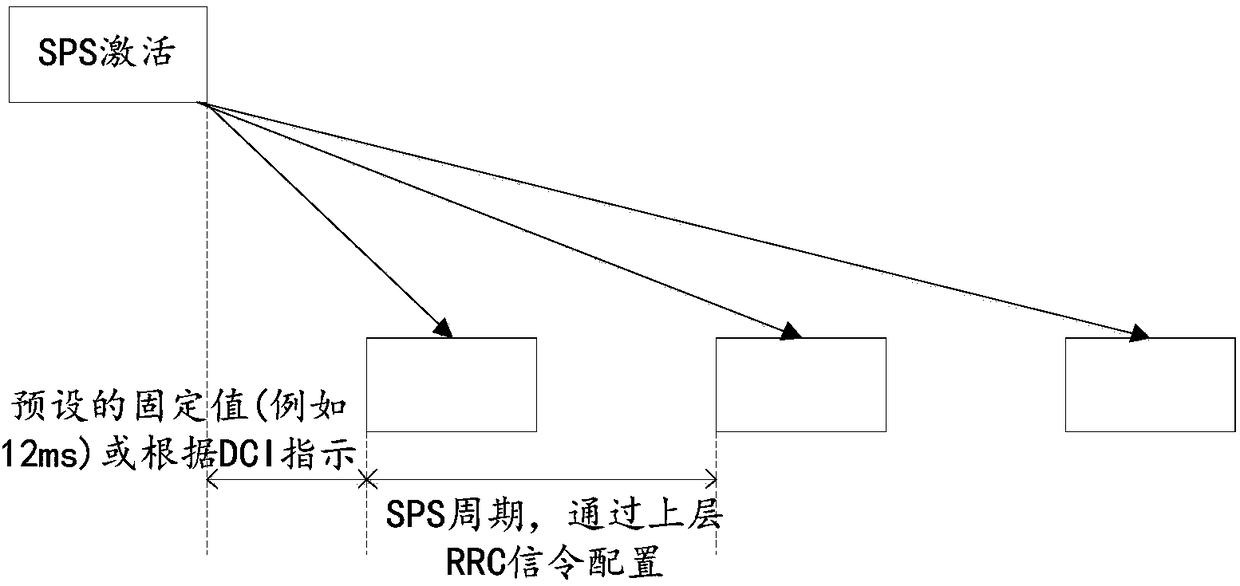

Pending | CN108633070A | Reduce scheduling overhead; Reduce power consumption; Error prevention/detection by using return channel; Power management; Resource utilization; Channel scheduling

The invention discloses a semi-static resource scheduling method comprising the steps of: monitoring downlink control information (DCI) of a first format group, and activating semi-persistent scheduling (SPS) according to the indication of that DCI; and monitoring DCI of a second format group, and releasing SPS according to the indication of that DCI, wherein the DCI payload size of the second format group is smaller than that of the first format group. Compared with the prior art, the DCI payload size monitored after SPS activation is smaller than the one used for SPS activation, so the power the UE spends monitoring and detecting DCI is reduced, the downlink channel scheduling overhead of SPS is decreased, service latency is reduced, and resource utilization efficiency is significantly improved. The invention further discloses user equipment for semi-static resource scheduling.

Owner:BEIJING SAMSUNG TELECOM R&D CENT +1
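The Samsung abstract above rests on one idea: once SPS is active, the UE only has to search for the smaller release DCI. The following minimal state machine illustrates this, assuming hypothetical payload sizes of 40 and 24 bits (the patent only says the second group is smaller); `Dci`, `SpsUe` and the size constants are illustrative names.

```python
from dataclasses import dataclass

# Hypothetical payload sizes: the patent only states that group 2 is smaller.
GROUP1_PAYLOAD_BITS = 40
GROUP2_PAYLOAD_BITS = 24


@dataclass
class Dci:
    format_group: int  # 1 = activation group, 2 = release group
    payload_bits: int


class SpsUe:
    """Toy UE that tracks SPS state from the DCI format group it detects."""

    def __init__(self):
        self.sps_active = False

    def monitored_payload_bits(self):
        # Before activation the UE looks for the larger group-1 DCI;
        # afterwards it only needs to look for the smaller group-2 DCI.
        return GROUP2_PAYLOAD_BITS if self.sps_active else GROUP1_PAYLOAD_BITS

    def on_dci(self, dci):
        if dci.payload_bits != self.monitored_payload_bits():
            return self.sps_active  # not a size the UE is currently decoding
        if dci.format_group == 1 and not self.sps_active:
            self.sps_active = True   # activate SPS
        elif dci.format_group == 2 and self.sps_active:
            self.sps_active = False  # release SPS
        return self.sps_active
```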

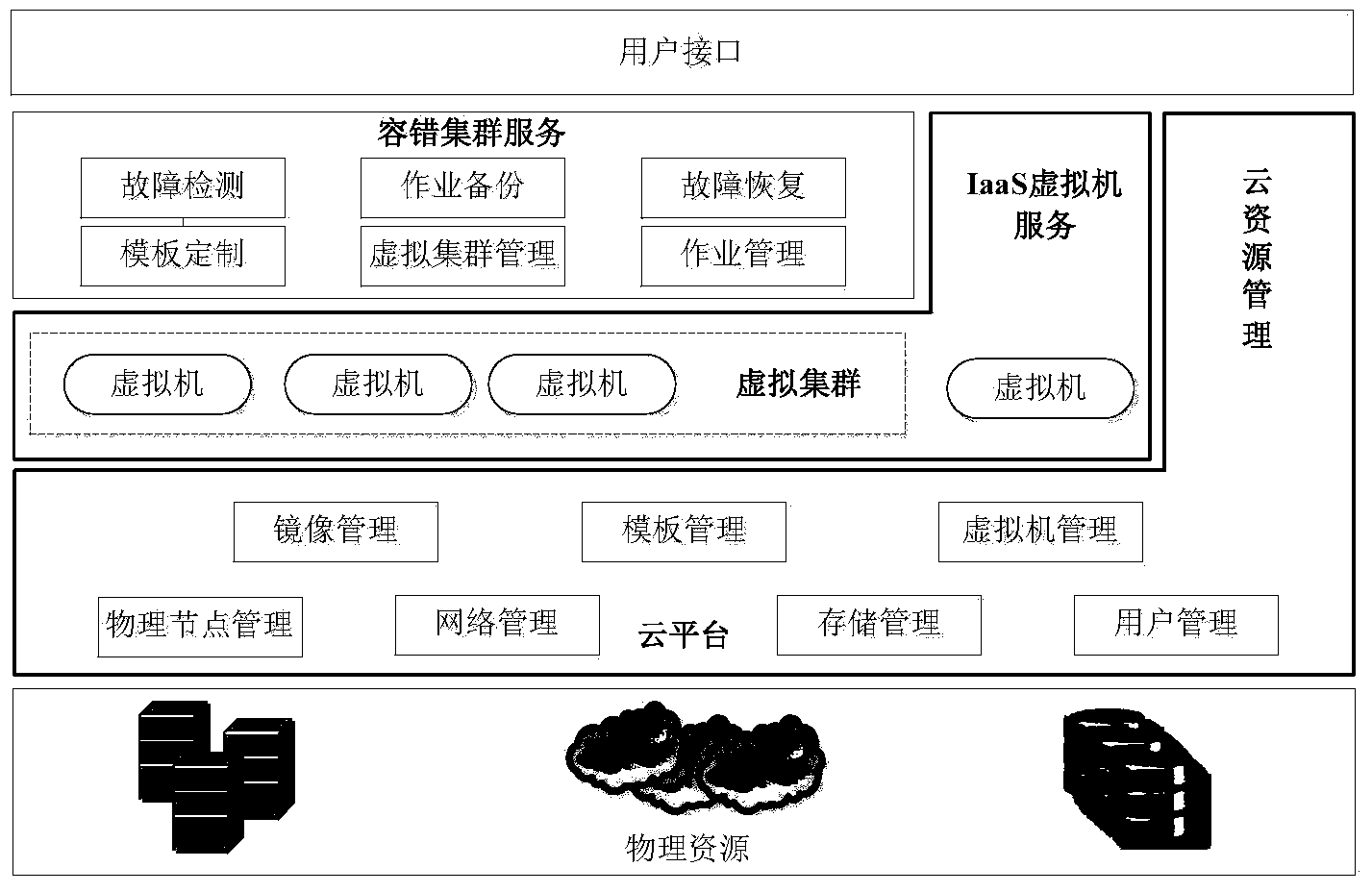

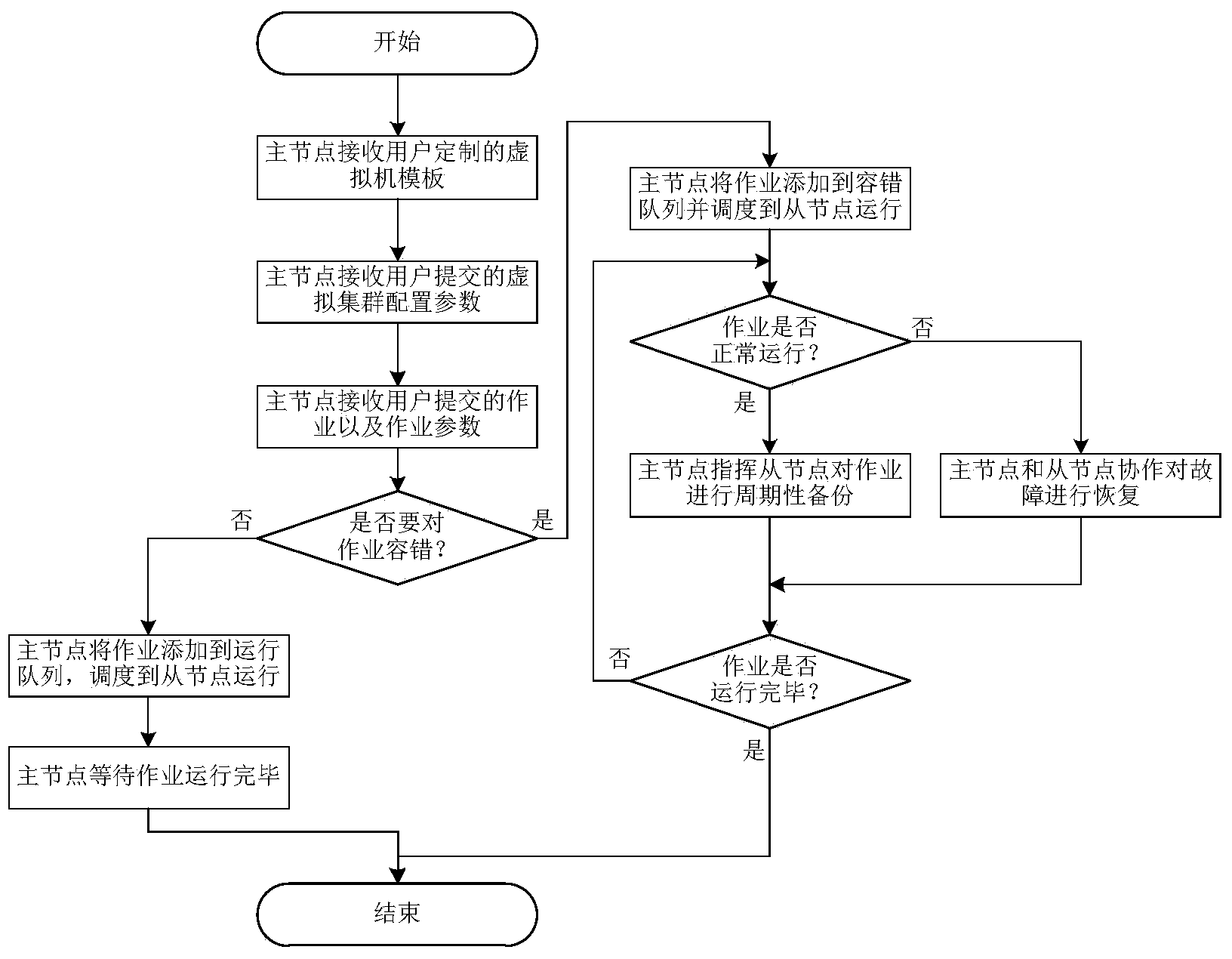

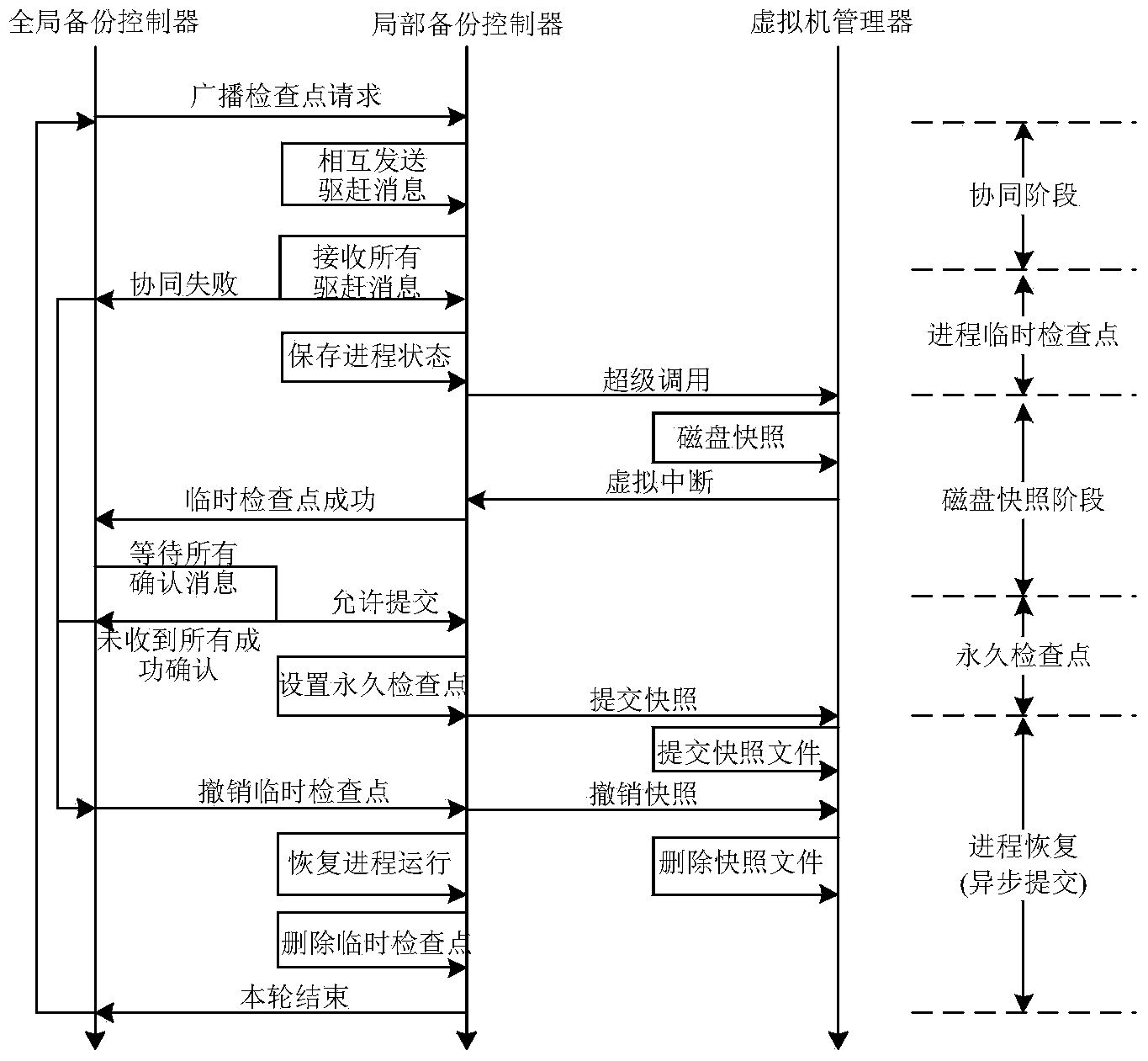

Distributed system multilevel fault tolerance method under cloud environment

Active | CN103778031A | Easy to use interface; Lower the threshold; Redundant operation error correction; Virtualization; Virtual machine

Owner:HUAZHONG UNIV OF SCI & TECH

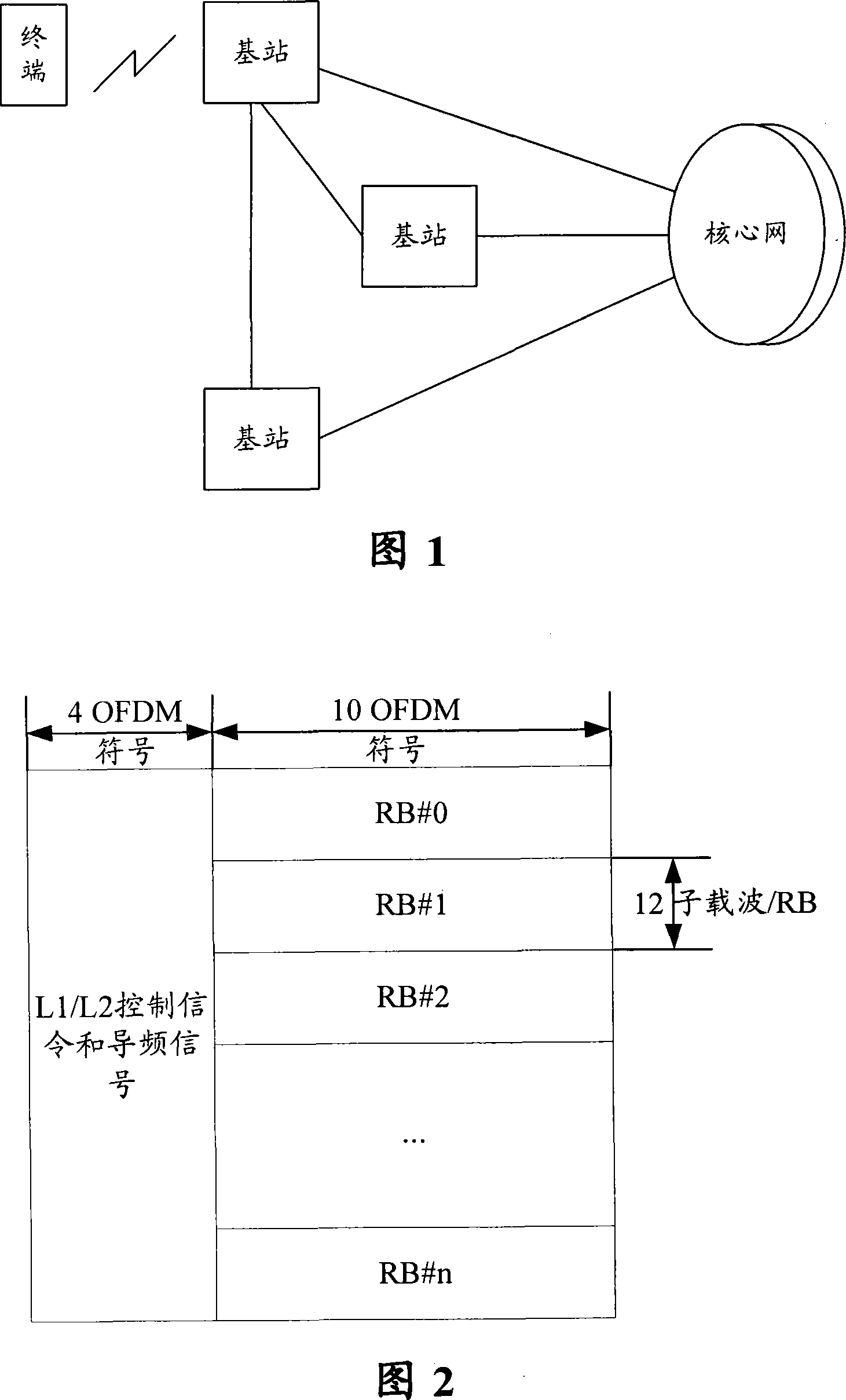

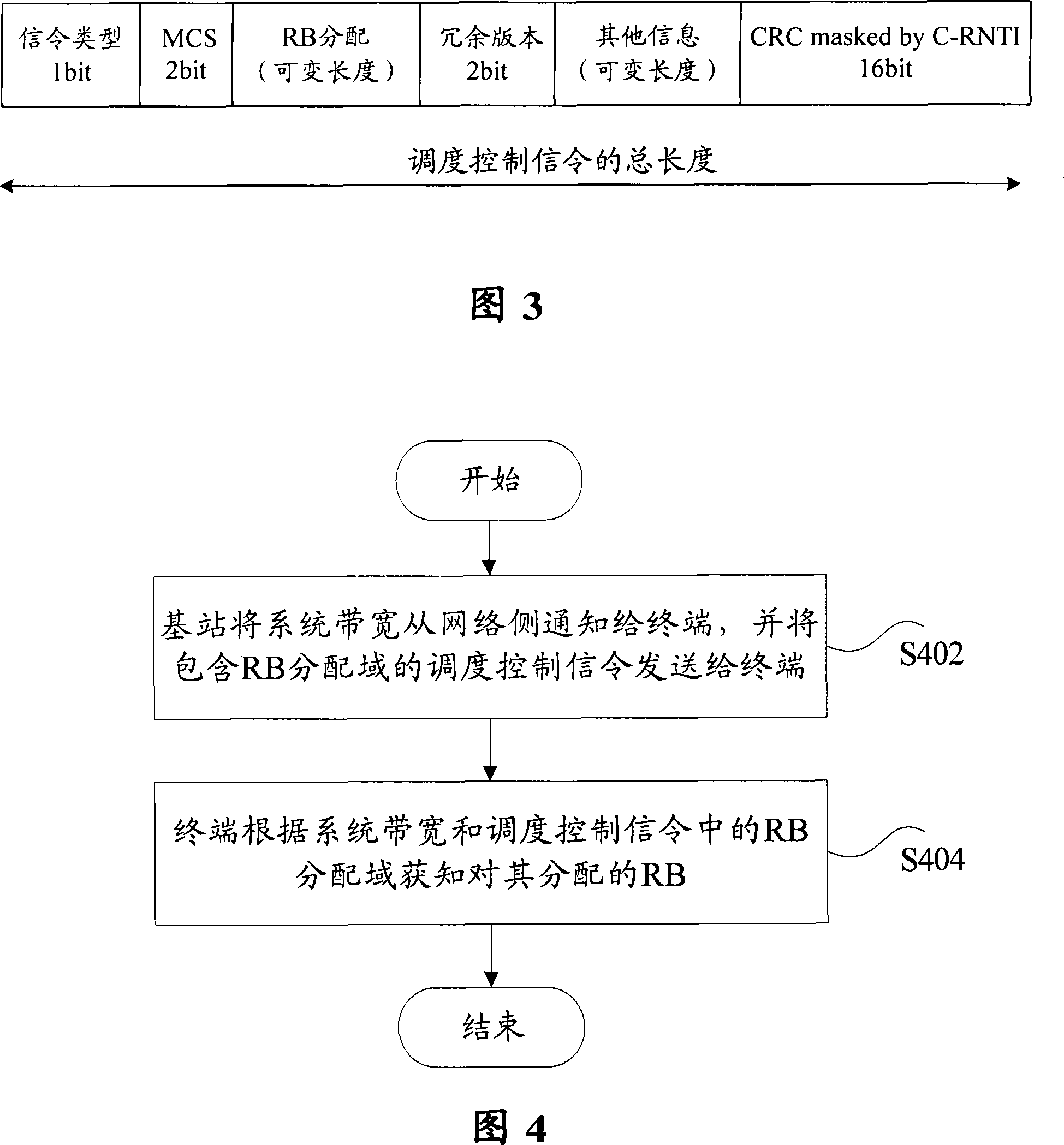

Indication method for wireless resource allocation of LTE system

Active | CN101127719A | Reduce scheduling overhead; Error prevention; Multi-frequency code systems; Telecommunications; Wireless resource allocation

The invention discloses an indication method for radio resource allocation in an LTE system: a base station informs a terminal of the system bandwidth and sends the terminal a scheduling/control instruction containing a resource block (RB) allocation field. The terminal determines its allocated resource blocks from the system bandwidth and the RB allocation field in the scheduling/control instruction. Because the terminal obtains both the system bandwidth and the RB allocation field from the network side, it can determine the RB resources allocated to it, which greatly reduces scheduling overhead.

Owner:ZTE CORP
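The ZTE abstract above does not say how the RB allocation field is encoded. For a familiar example of a field whose width and meaning both depend on system bandwidth, the following sketch implements LTE's resource allocation type 2, which encodes a contiguous RB range as a resource indication value (RIV). It shows why the terminal needs the bandwidth to interpret the field. It is not claimed to be the patent's own encoding, and the function names are illustrative.

```python
def riv_field_bits(n_rb):
    """Bits needed to signal any contiguous allocation in n_rb resource blocks."""
    combinations = n_rb * (n_rb + 1) // 2
    return (combinations - 1).bit_length()  # equals ceil(log2(combinations))


def riv_encode(n_rb, start, length):
    """Resource indication value for `length` contiguous RBs from `start`."""
    if not (0 <= start < n_rb and 1 <= length <= n_rb - start):
        raise ValueError("allocation does not fit in the bandwidth")
    if length - 1 <= n_rb // 2:
        return n_rb * (length - 1) + start
    return n_rb * (n_rb - length + 1) + (n_rb - 1 - start)


def riv_decode(n_rb, riv):
    """Recover (start, length) by searching all allocations for this bandwidth."""
    for start in range(n_rb):
        for length in range(1, n_rb - start + 1):
            if riv_encode(n_rb, start, length) == riv:
                return start, length
    raise ValueError("invalid RIV for this bandwidth")
```

For a 50-RB (10 MHz) carrier the field is 11 bits, and for 100 RBs (20 MHz) it is 13 bits. The same bit pattern therefore refers to different RBs at different bandwidths.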

Method of transmitting sounding reference signal in wireless communication system

Active | US8831042B2 | Reduce battery consumption; Improve spectral efficiency; Error prevention/detection by using return channel; Transmission control/equalisation; Communications system; Sounding reference signal

A method of transmitting a sounding reference signal includes generating a physical uplink control channel (PUCCH) carrying uplink control information on a subframe, the subframe comprising a plurality of SC-FDMA (single carrier-frequency division multiple access) symbols, wherein the uplink control information is punctured on one SC-FDMA symbol in the subframe, and transmitting simultaneously the uplink control information on the PUCCH and a sounding reference signal on the punctured SC-FDMA symbol. The uplink control information and the sounding reference signal can be simultaneously transmitted without affecting a single carrier characteristic.

Owner:LG ELECTRONICS INC

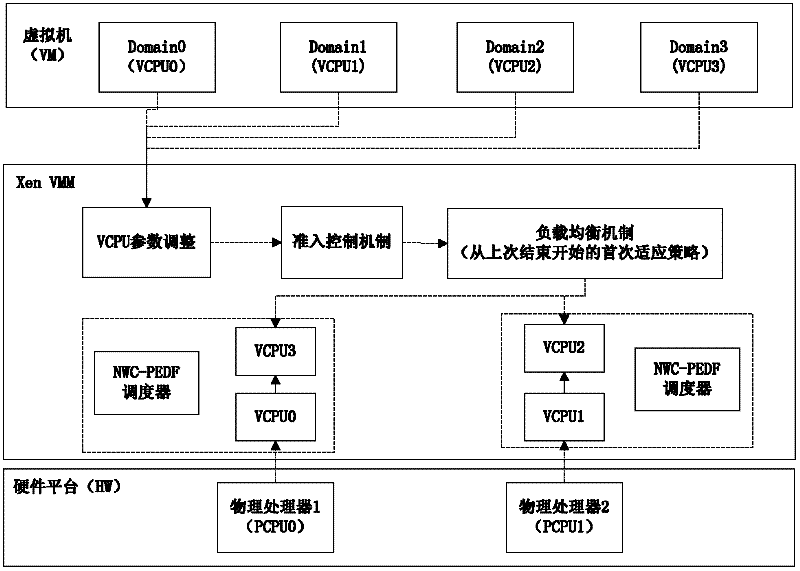

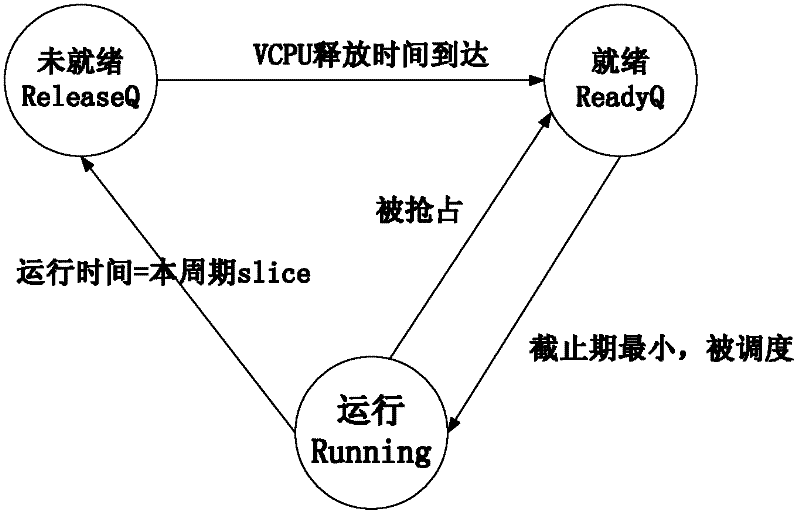

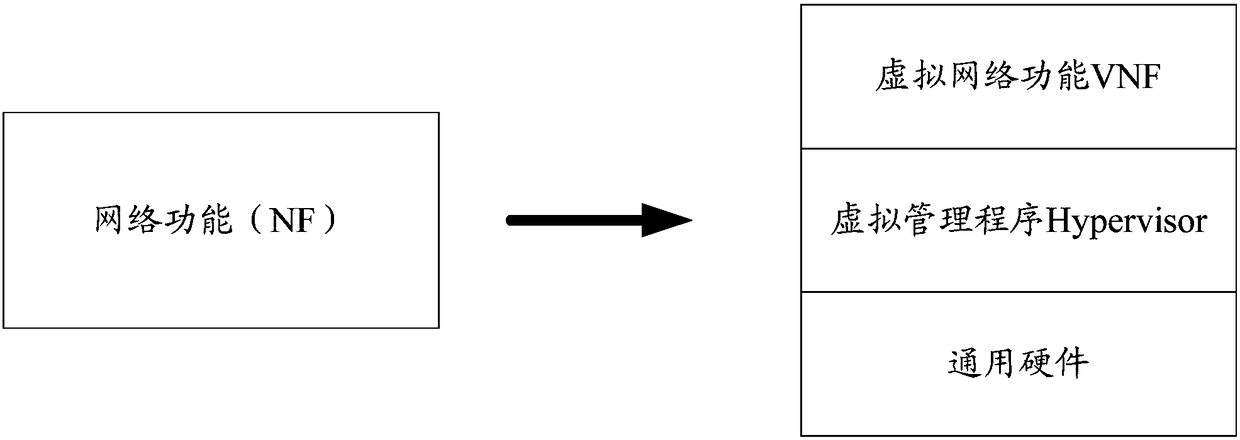

A method of admission control and load balancing in a virtualized environment

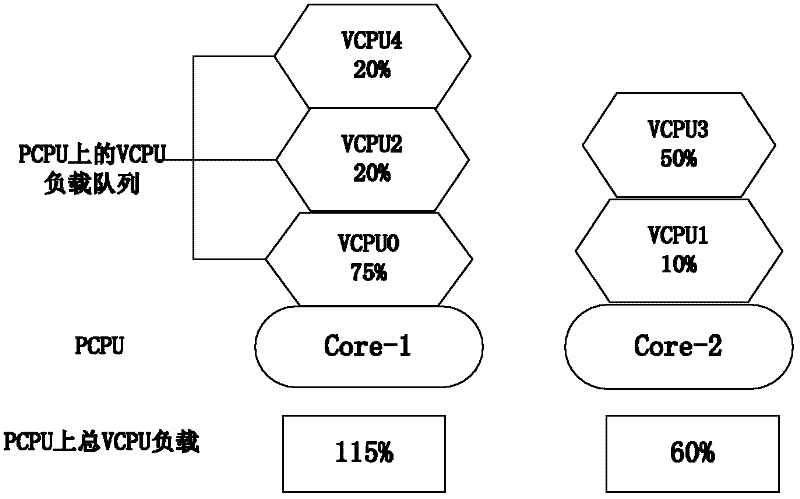

Active | CN102270159A | Avoid occupying; Reduce migration overhead; Resource allocation; Software simulation/interpretation/emulation; Physics processing unit; Processing

The invention relates to an admission control and load balancing method for a virtualized environment, comprising the following steps: 1) modifying the simple earliest deadline first (SEDF) scheduling algorithm in the Xen virtual machine to implement an NWC-PEDF (Non-Work-Conserving Partitioned Earliest Deadline First) scheduling algorithm; 2) introducing an admission control mechanism for each physical processing unit that controls the load of the Xen virtual processors allocated to it; 3) controlling the allocation and mapping of VCPUs (virtual CPUs) in the Xen virtual environment to PCPUs (physical CPUs) on a multi-core hardware platform with a first-fit strategy, so as to balance the load across PCPUs; and 4) providing a mechanism for adjusting VCPU scheduling parameters, allowing an administrator to tune them as the virtual machine's load changes at run time. By improving the scheduling algorithm in the Xen virtual machine and implementing admission control and load balancing, the invention lets a virtualized multi-core hardware platform meet the hard real-time requirements of embedded real-time systems.

Owner:ZHEJIANG UNIV
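Steps 2 and 3 of the Zhejiang University abstract above (per-PCPU admission control plus first-fit VCPU placement) can be sketched together. This sketch assumes each VCPU is described by a (budget, period) reservation and uses the classic EDF utilization test (total utilization at most 1 per PCPU) as the admission criterion. The patent does not spell out its test, and `first_fit_partition` is a hypothetical name.

```python
from fractions import Fraction


def first_fit_partition(vcpus, num_pcpus):
    """Map VCPUs to PCPUs first-fit, admitting each one only if EDF stays feasible.

    vcpus: iterable of (name, budget, period); each VCPU needs `budget` time
    units of CPU every `period`. Under partitioned EDF with implicit deadlines,
    a PCPU is schedulable while its total utilization stays at or below 1.
    """
    load = [Fraction(0)] * num_pcpus
    mapping = {}
    for name, budget, period in vcpus:
        utilization = Fraction(budget, period)
        for pcpu in range(num_pcpus):
            if load[pcpu] + utilization <= 1:  # per-PCPU admission test
                load[pcpu] += utilization
                mapping[name] = pcpu
                break
        else:  # no PCPU can accept it: reject admission
            raise ValueError(f"admission rejected for {name}")
    return mapping, load
```

Under this reading, step 4 (adjusting VCPU parameters at run time) would amount to re-running the same admission test with the new budget and period.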

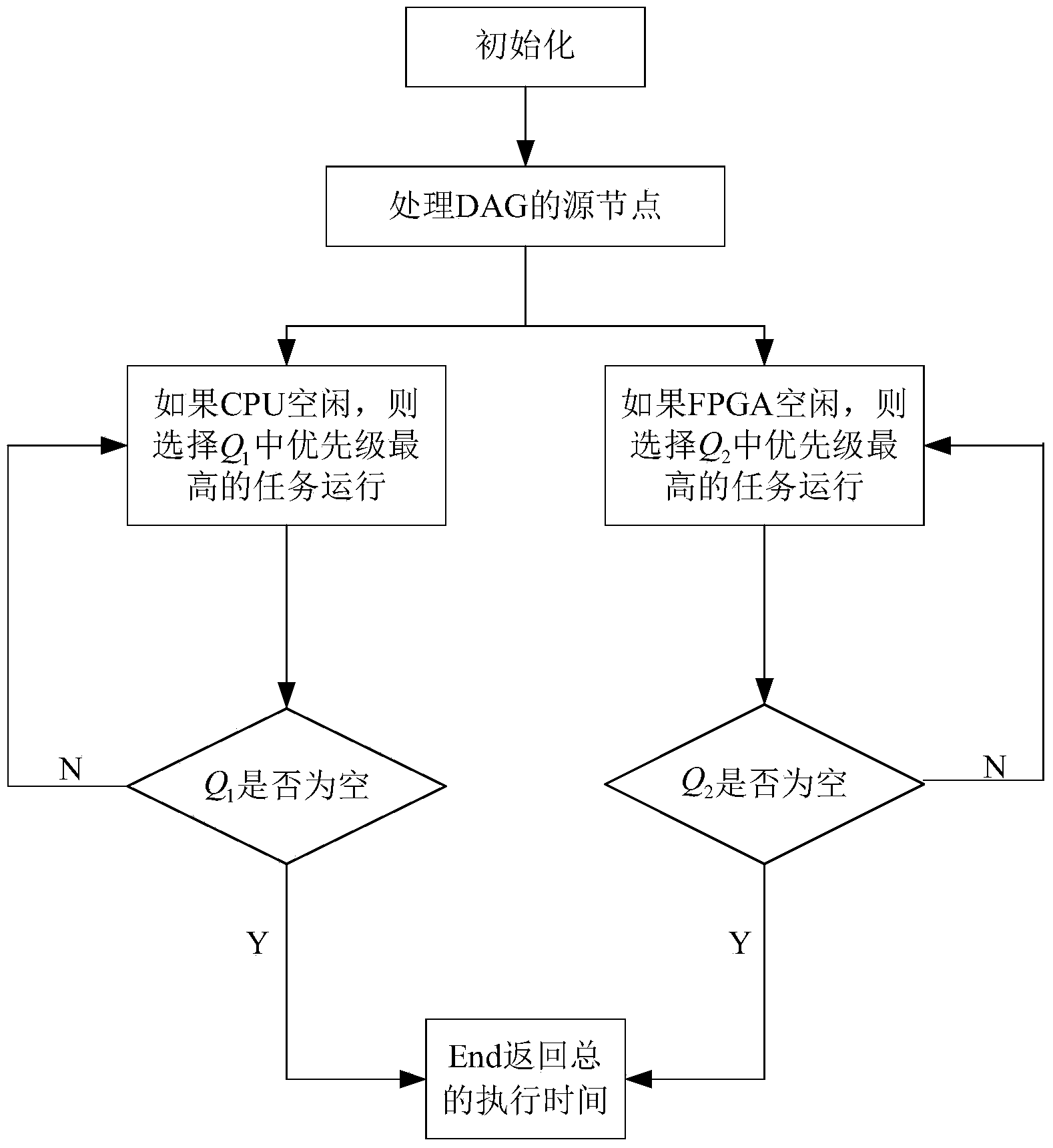

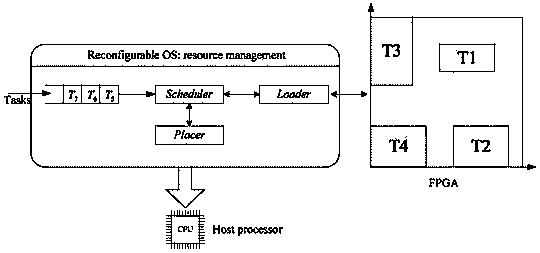

Hybrid task scheduling method of directed acyclic graph (DAG) based reconfigurable system

Inactive | CN104239135A | Reduce configuration times; Reduce scheduling overhead; Program initiation/switching; Running time; Directed acyclic graph

The invention discloses a hybrid task scheduling method for a directed acyclic graph (DAG) based reconfigurable system. An application is decomposed into multiple tasklets described by a DAG, and the tasklets are scheduled by a scheduler. Software tasks enter queue Q1 and, after being managed by the task manager, are executed according to the CPU idle state and scheduling priority. Hardware tasks enter queue Q2. If a hardware task in Q2 can reuse an existing reconfigurable resource, it moves to queue Q3; otherwise it waits in Q2 in priority order and is then configured and loaded by the loader. Tasks that have finished configuration and loading, together with the tasks in Q3, enter queue Q4; after being managed by the task manager they move to queue Q5 and run in priority order. The cycle repeats until all tasks have run, and the total running time is then reported. Q1 is the software task queue, Q2 the pre-configuration hardware task queue, Q3 the configuration reuse queue, Q4 the configuration completion queue, and Q5 the running task queue. The configuration reuse strategy reduces the number of reconfigurations and therefore the overall scheduling overhead.

Owner:JIANGSU UNIV OF SCI & TECH
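The benefit of the Q3 configuration reuse path in the abstract above comes down to how many times a bitstream must be loaded. This sketch counts reconfigurations with and without reuse. It assumes a fixed number of reconfigurable regions and oldest-first eviction, neither of which the abstract specifies, and `count_reconfigurations` is a hypothetical name.

```python
from collections import deque


def count_reconfigurations(hw_tasks, num_regions, reuse=True):
    """Count FPGA reconfigurations for hardware tasks run in priority order.

    hw_tasks: bitstream identifiers in the order the scheduler releases them.
    num_regions: how many reconfigurable regions can hold a configuration.
    With reuse=True a task whose bitstream is already loaded skips loading
    (the Q3 "configuration reuse" path); otherwise it is configured and
    loaded (the Q2 path). Eviction is oldest-first, an assumption.
    """
    loaded = deque()
    reconfigurations = 0
    for bitstream in hw_tasks:
        if reuse and bitstream in loaded:
            continue
        reconfigurations += 1
        loaded.append(bitstream)
        if len(loaded) > num_regions:
            loaded.popleft()
    return reconfigurations
```

With two regions, the sequence `fft, fir, fft, fir, aes` needs 3 loads with reuse and 5 without.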

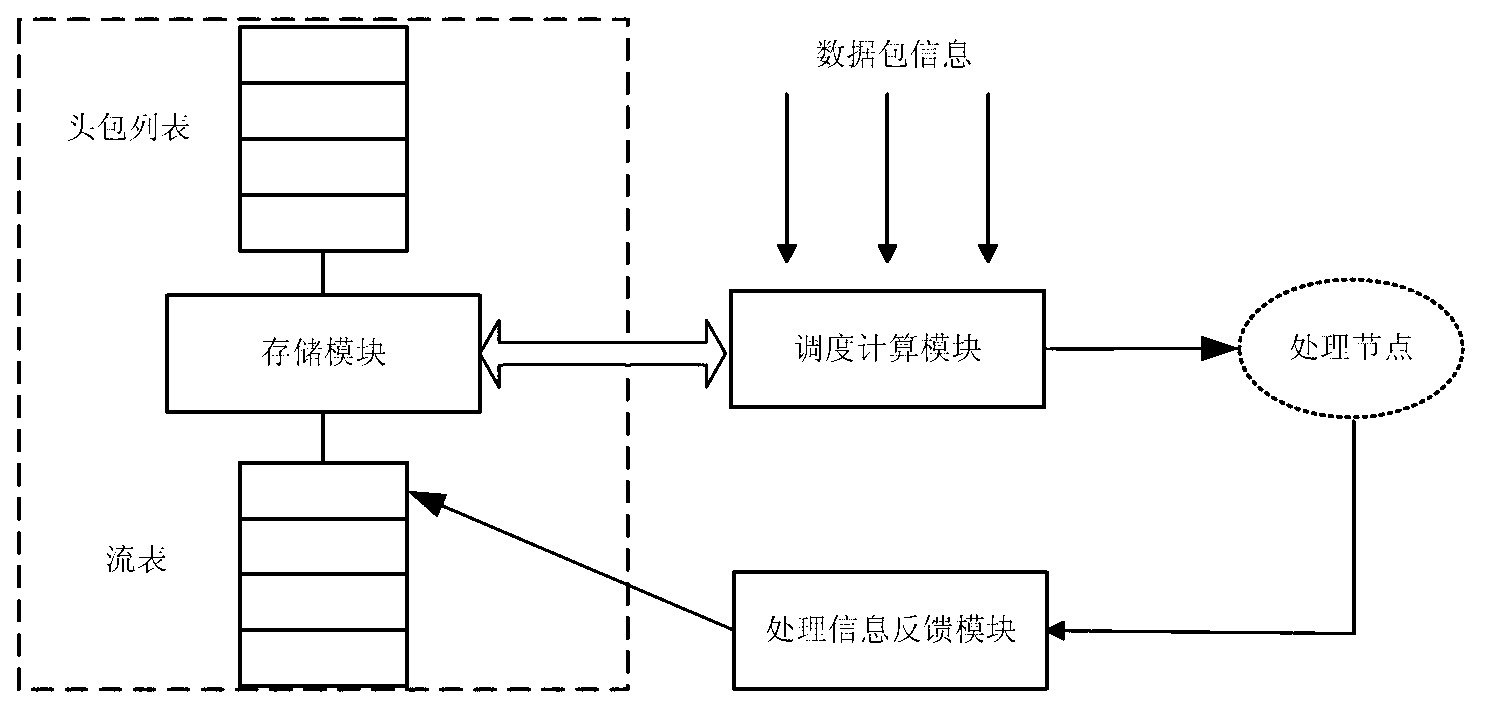

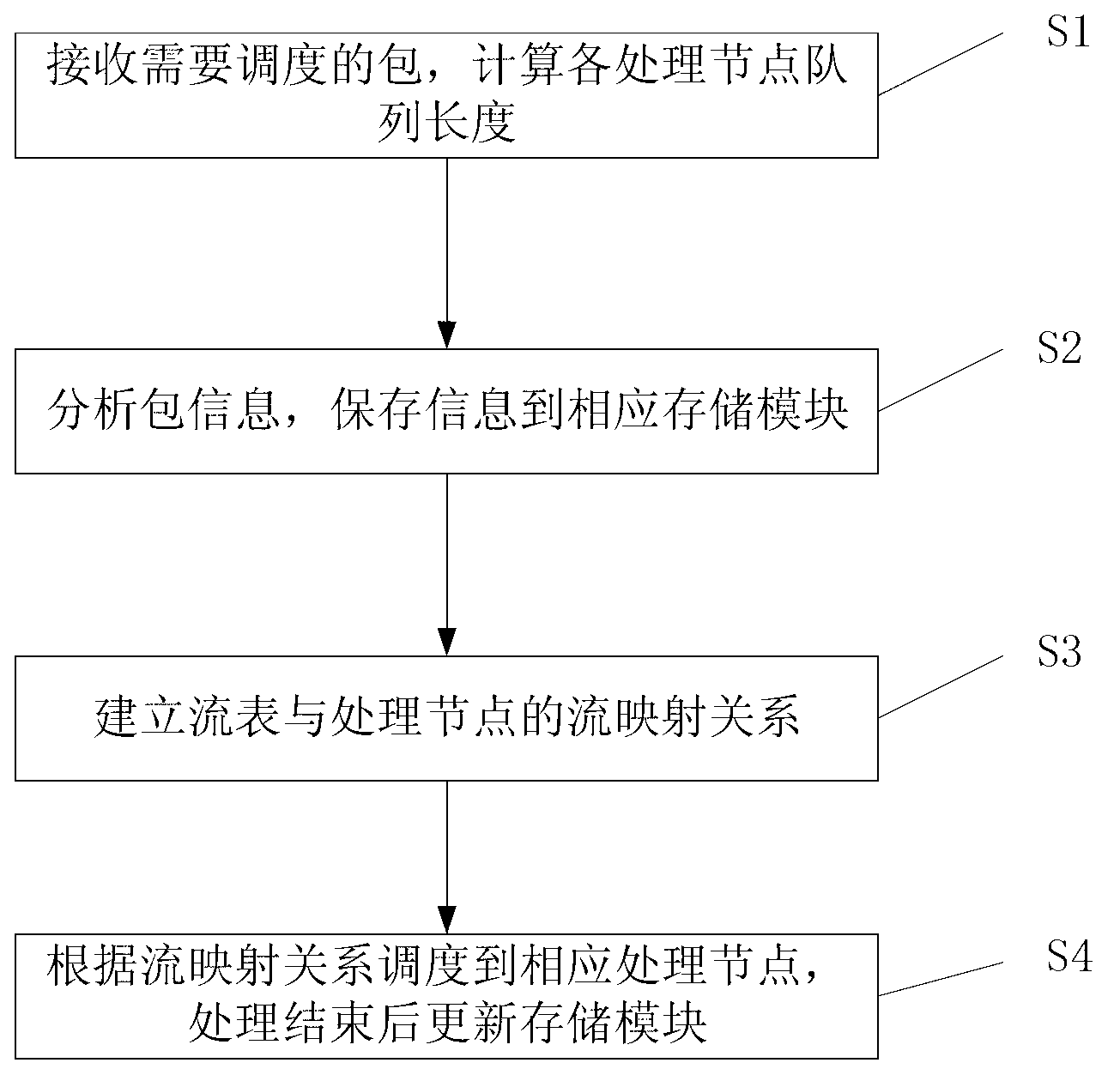

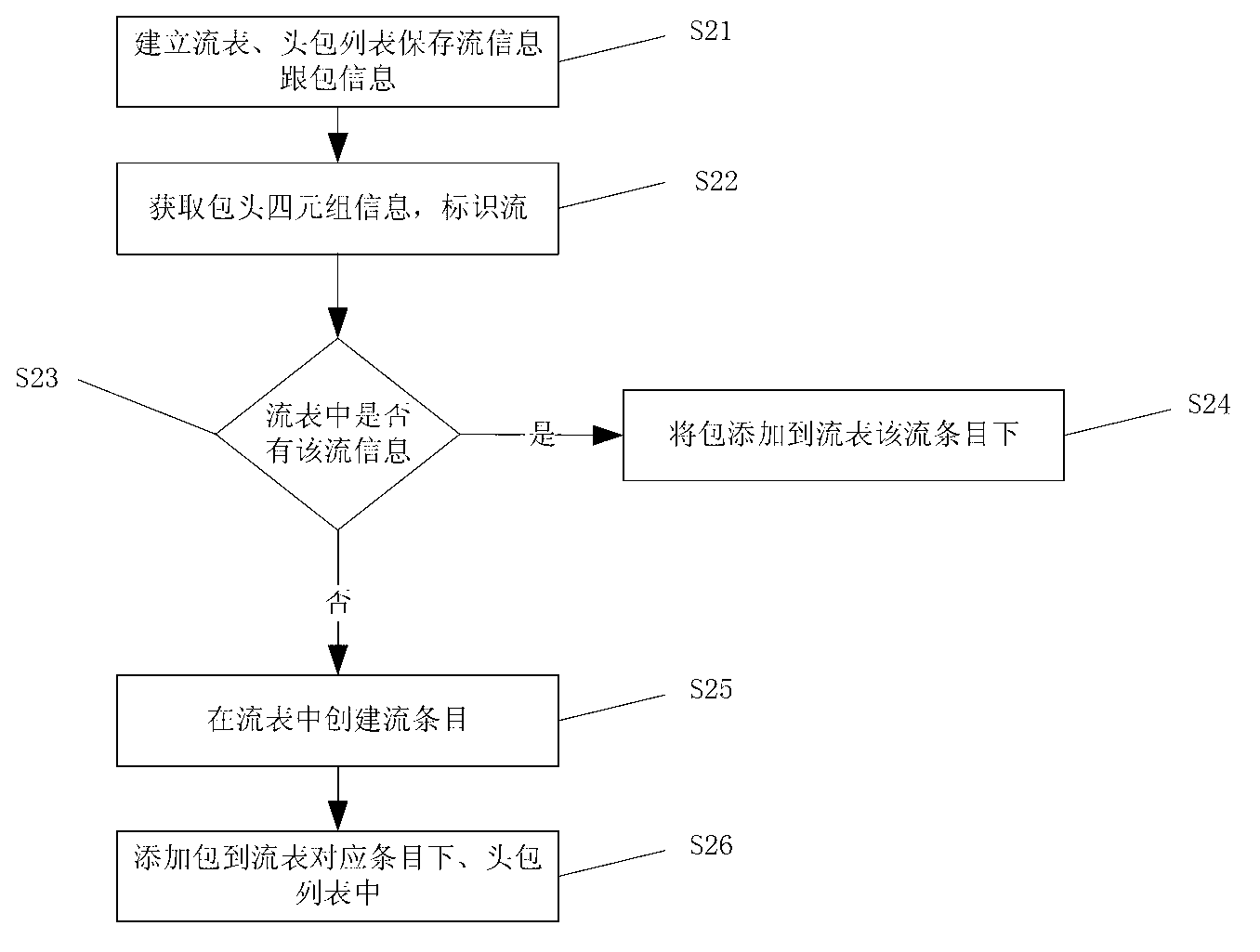

Method for scheduling traffic under multi-core network processor by traffic chart mapping scheduling strategy

Inactive | CN103023800A | Reduce scheduling overhead; Optimal Cache Utilization; Data switching networks; Moving average; Traffic capacity

The invention discloses a method for scheduling traffic on a multi-core network processor using a traffic chart mapping scheduling strategy. A head packet list memory structure and a traffic chart memory structure are used to allocate system cache memory to burst traffic, and a mapping between each traffic chart and a processing node is established. The task queue length of each processing node is estimated with a weighted moving average so that the mapping can be adjusted dynamically, and the packets of each flow are scheduled to the corresponding processing node through the mapping. The method schedules traffic effectively, achieves optimal cache utilization, and preserves packet order within each flow, while the average processing queue length keeps the load reasonably well balanced.

Owner:BEIHANG UNIV
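The mapping logic in the abstract above (smoothed queue lengths steering flows to nodes while keeping each flow on one node) can be sketched as follows. This sketch assumes an exponentially weighted moving average with a hypothetical smoothing factor of 0.25, and it re-evaluates the mapping only for new flows; the patent's exact weighting and remapping rules are not given, and `FlowMapper` is an illustrative name.

```python
class FlowMapper:
    """Map flows to processing nodes using smoothed queue lengths."""

    def __init__(self, num_nodes, alpha=0.25):
        self.alpha = alpha                  # smoothing factor (assumption)
        self.avg_qlen = [0.0] * num_nodes   # weighted moving average per node
        self.flow_to_node = {}

    def update_queue(self, node, qlen):
        """Fold a new queue-length sample into the node's moving average."""
        self.avg_qlen[node] = self.alpha * qlen + (1 - self.alpha) * self.avg_qlen[node]

    def node_for(self, flow_id):
        """Existing flows keep their node (packet order is preserved);
        new flows go to the node with the shortest smoothed queue."""
        if flow_id not in self.flow_to_node:
            nodes = range(len(self.avg_qlen))
            self.flow_to_node[flow_id] = min(nodes, key=self.avg_qlen.__getitem__)
        return self.flow_to_node[flow_id]
```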

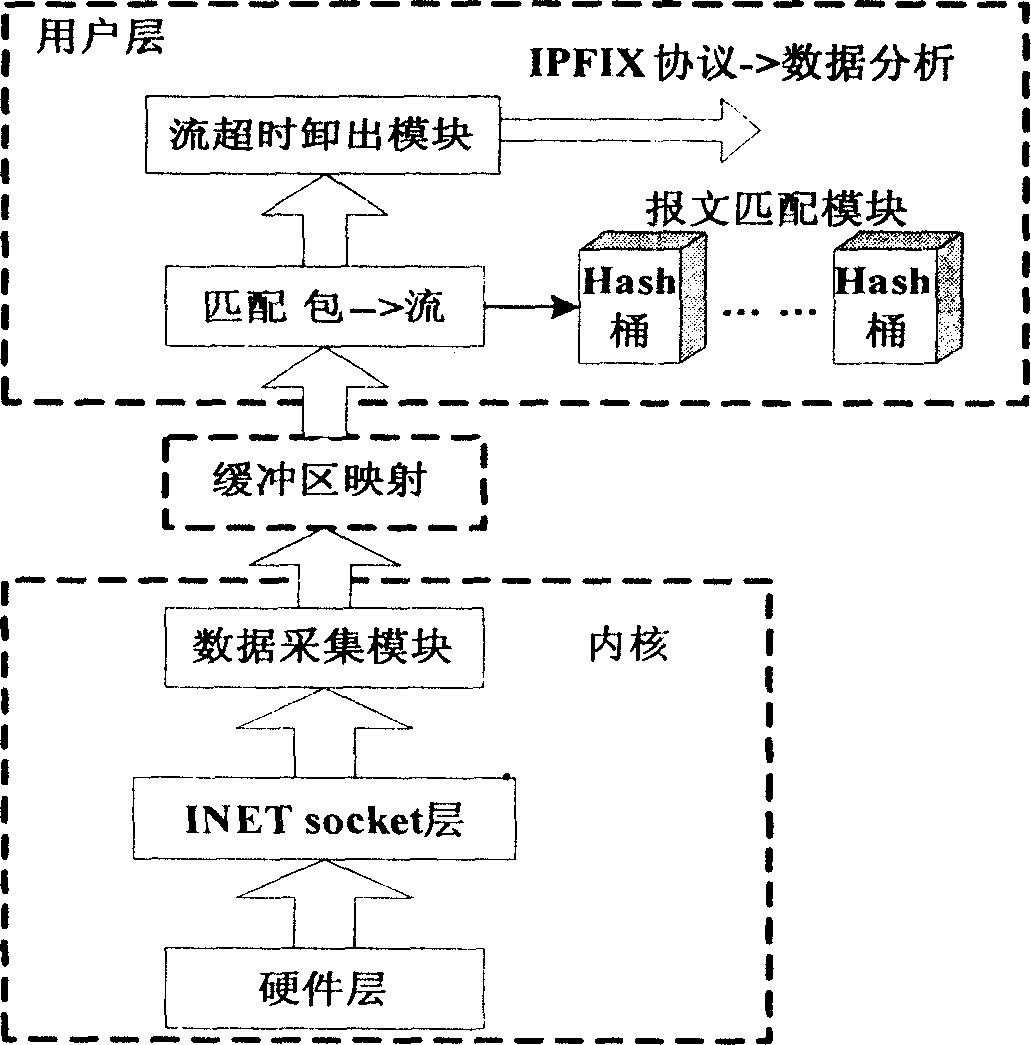

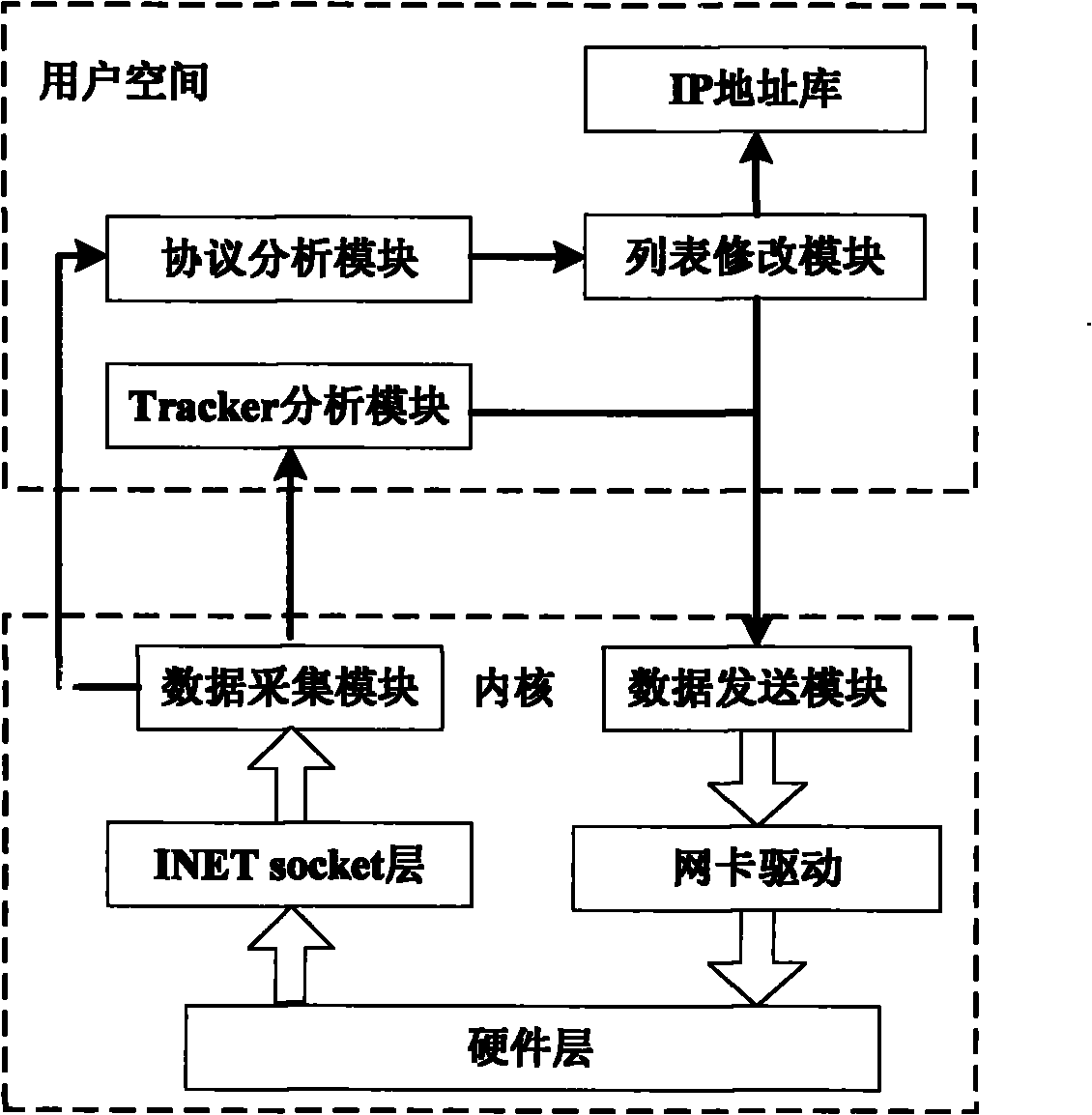

Linux kernel based high-speed network flow measuring unit and flow measuring method

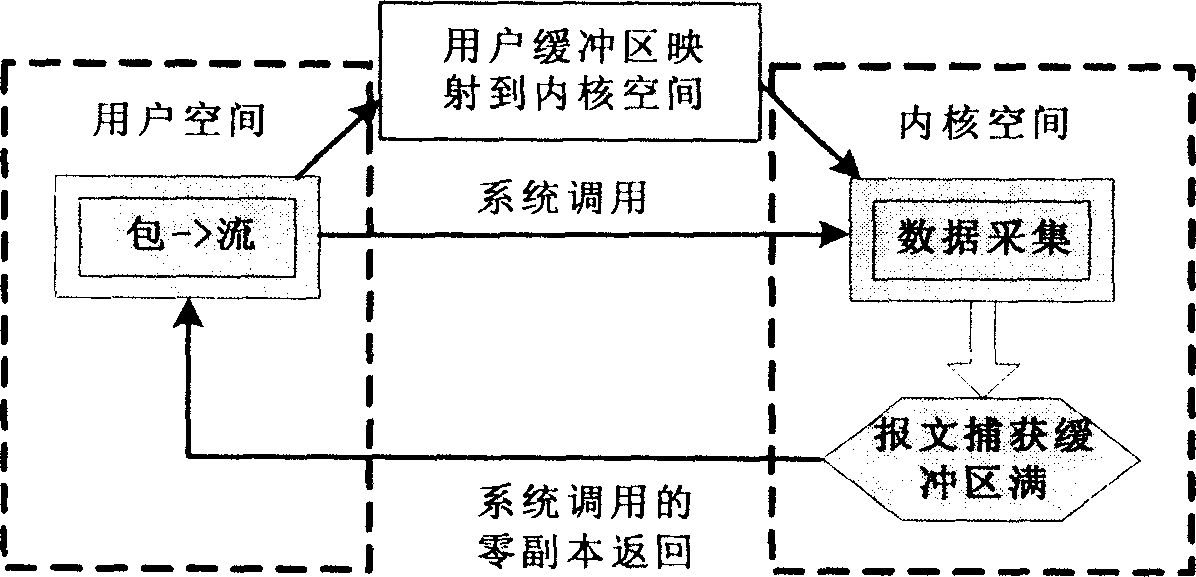

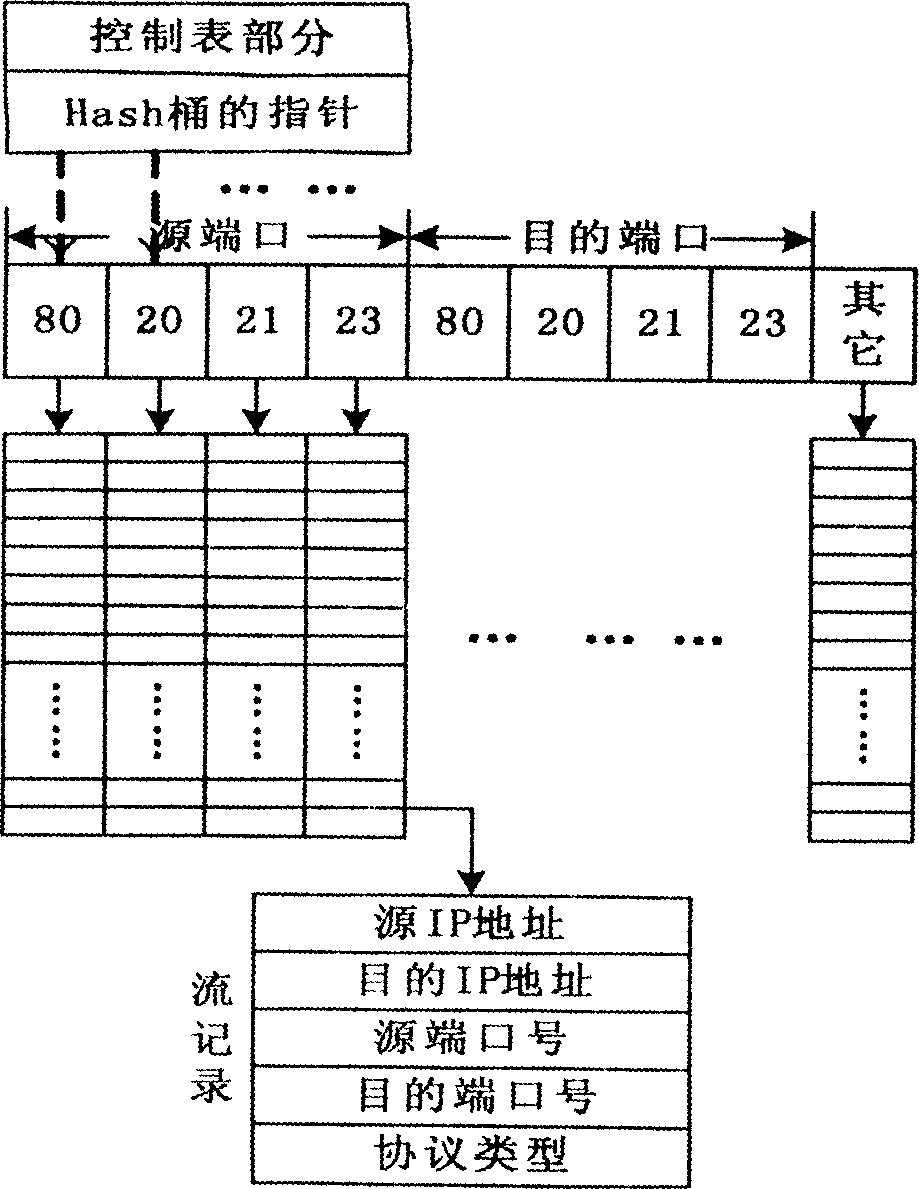

Inactive | CN1700664A | Reduce system scheduling overhead; Reduce packet loss; Data switching networks; Traffic volume; Web technology

This invention relates to a Linux-based high-speed network flow measuring unit and its measuring method. The unit comprises a data sampling module, a packet matching module, and an overflow disassembly module. The sampling module extracts flow characteristics through a mapped buffer for processing by the matching module, using a mapping between user space and the kernel buffer together with high-speed hash buckets.

Owner:SHENZHEN TINNO WIRELESS TECH
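The abstract above pairs a kernel-to-user-space buffer mapping with high-speed hash buckets. The sketch below covers only the hash-bucket flow table, not the memory mapping. It assumes flows are keyed by the usual 5-tuple and that collisions are chained within a bucket; `FlowKey` and `FlowTable` are hypothetical names.

```python
from typing import NamedTuple


class FlowKey(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str


class FlowTable:
    """Per-flow packet and byte counters stored in fixed hash buckets."""

    def __init__(self, num_buckets=1024):
        self.buckets = [[] for _ in range(num_buckets)]

    def _bucket(self, key):
        return self.buckets[hash(key) % len(self.buckets)]

    def add_packet(self, key, length):
        bucket = self._bucket(key)
        for entry in bucket:  # collisions are chained inside the bucket
            if entry[0] == key:
                entry[1] += 1
                entry[2] += length
                return
        bucket.append([key, 1, length])  # first packet of a new flow

    def stats(self, key):
        """Return (packets, bytes) seen for the flow, or (0, 0) if unknown."""
        for entry in self._bucket(key):
            if entry[0] == key:
                return entry[1], entry[2]
        return 0, 0
```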

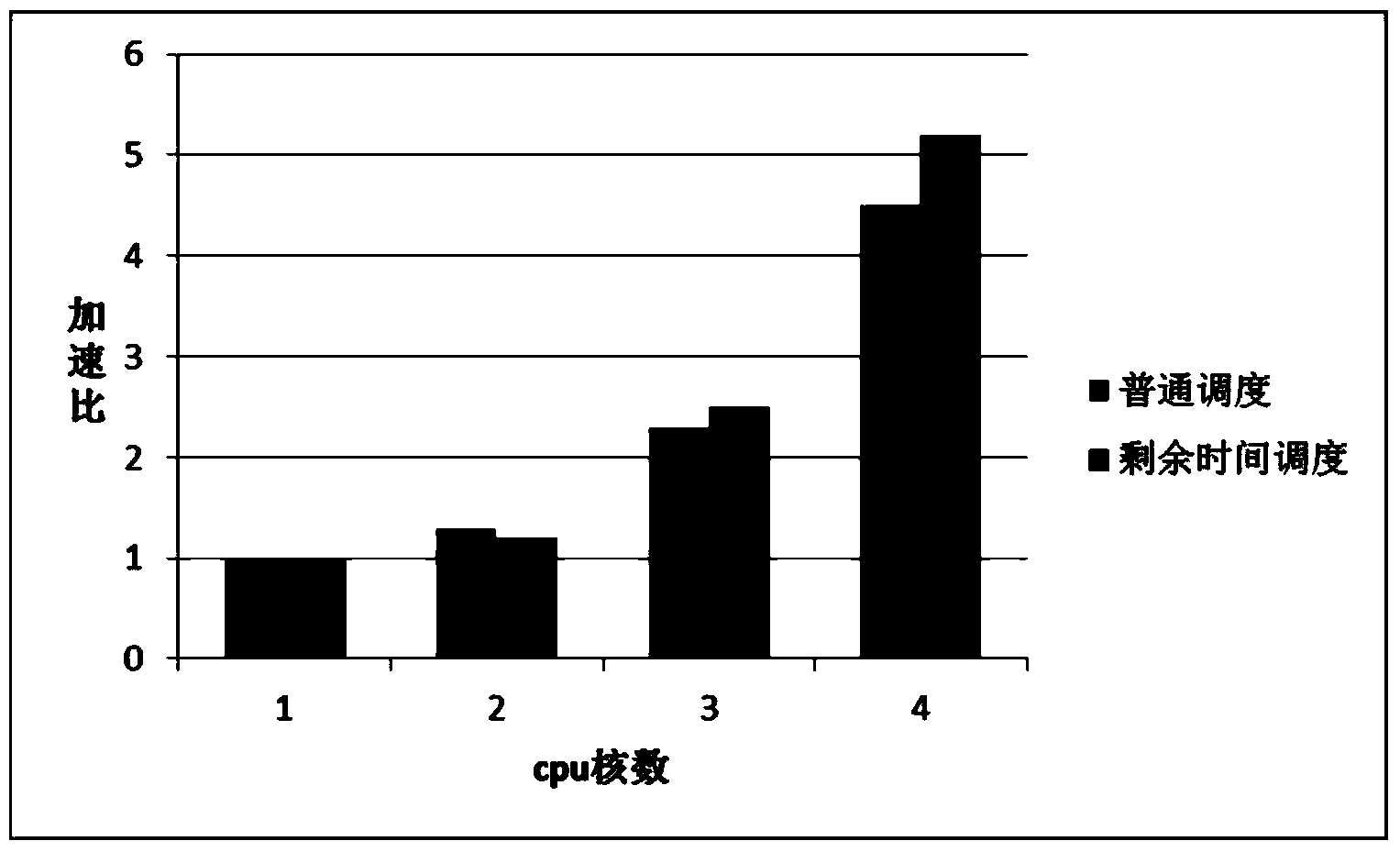

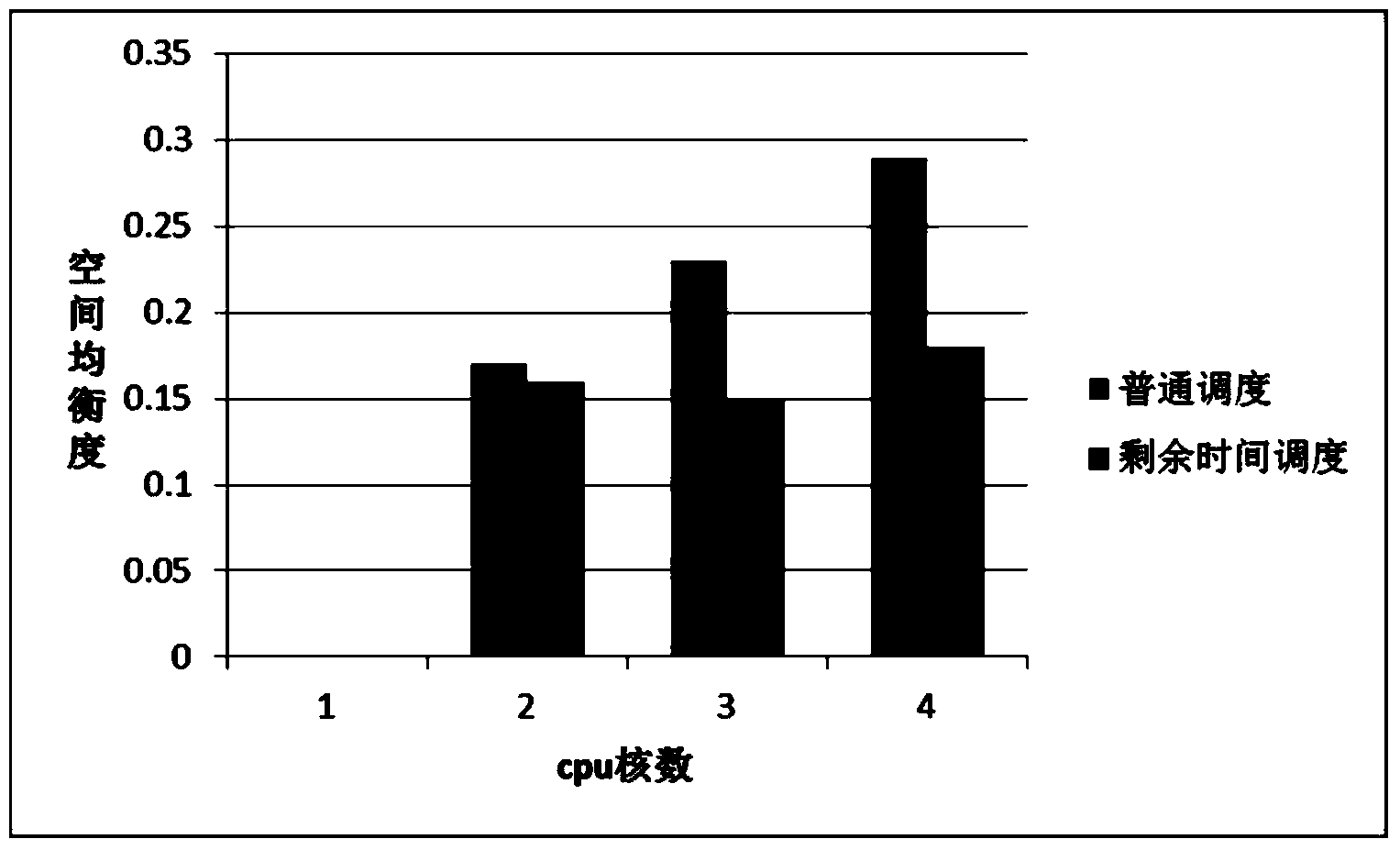

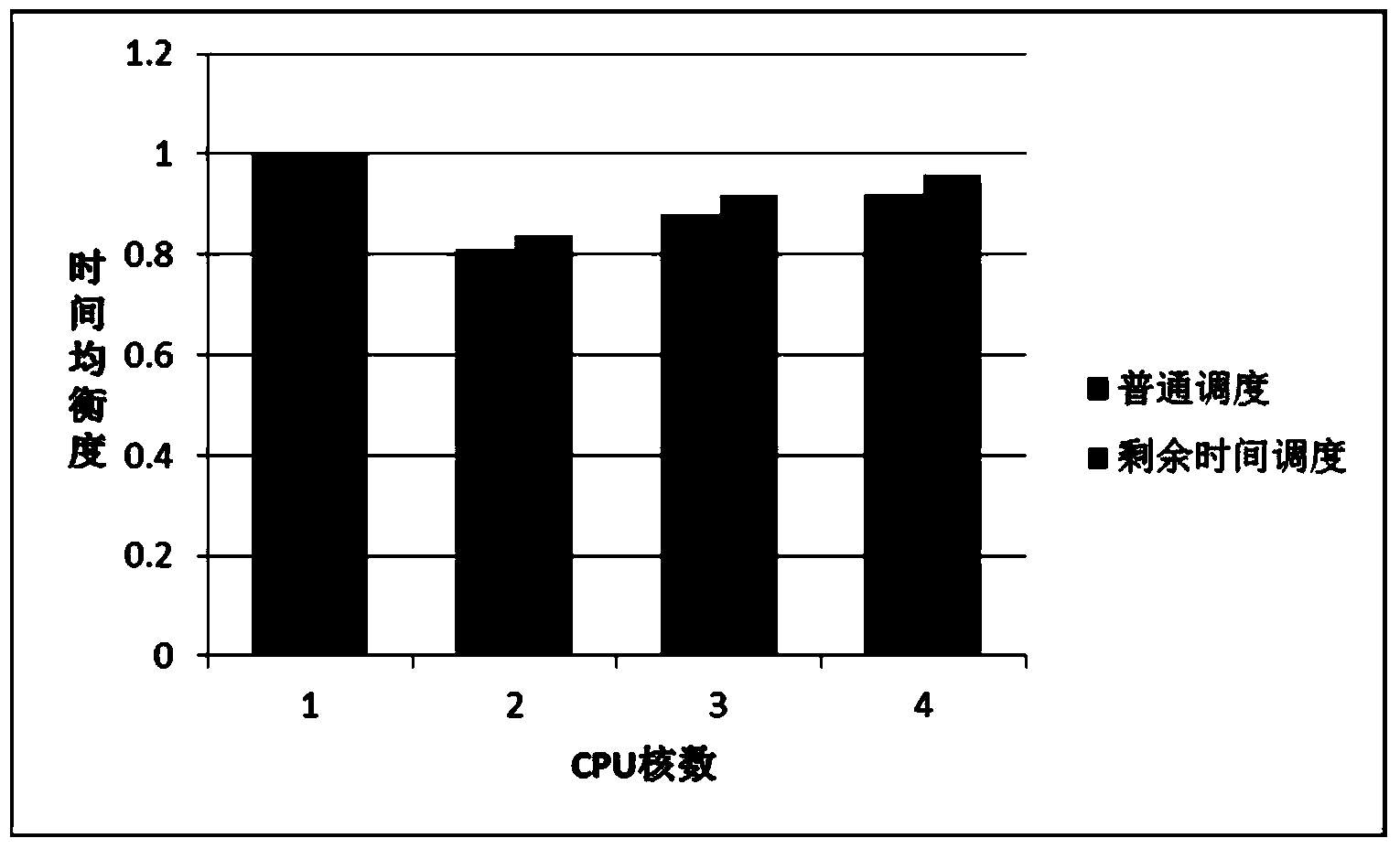

Network processor load balancing and scheduling method based on residual task processing time compensation

Active | CN103685053A | Evenly distributed; Reduce jitter; Data switching networks; Data stream; Network processor

The invention discloses a network processor load balancing and scheduling method based on residual task processing time compensation. The processing time of each data packet is used as the load scheduling weight: the residual task processing time of each processing unit is tracked, the load of each processing unit is calculated from it, and each data packet is dispatched to the processing unit with the minimum load. The method overcomes the shortcomings of traditional flow-based load balancing algorithms and achieves good load balance on multi-core network processors.

Owner:BEIHANG UNIV
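The selection rule in the abstract above (dispatch to the unit with the least residual processing time) can be sketched directly. This assumes each packet's processing time is known on arrival, as the abstract implies, and represents time as plain numbers; `dispatch` is a hypothetical name.

```python
def dispatch(packets, num_units):
    """Assign each packet to the processing unit with the least residual work.

    packets: (arrival_time, processing_time) pairs in arrival order.
    A unit's residual task processing time is how long it will stay busy
    with work already assigned to it, measured from the packet's arrival.
    """
    busy_until = [0.0] * num_units
    assignment = []
    for arrival, processing_time in packets:
        residual = [max(0.0, t - arrival) for t in busy_until]
        unit = min(range(num_units), key=residual.__getitem__)  # ties: lowest index
        busy_until[unit] = max(busy_until[unit], arrival) + processing_time
        assignment.append(unit)
    return assignment
```

For packets `(0, 5), (0, 1), (0, 1), (1, 1)` on two units this yields `[0, 1, 1, 1]`. Round-robin would instead send the third packet to unit 0, which is still busy with the long packet.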

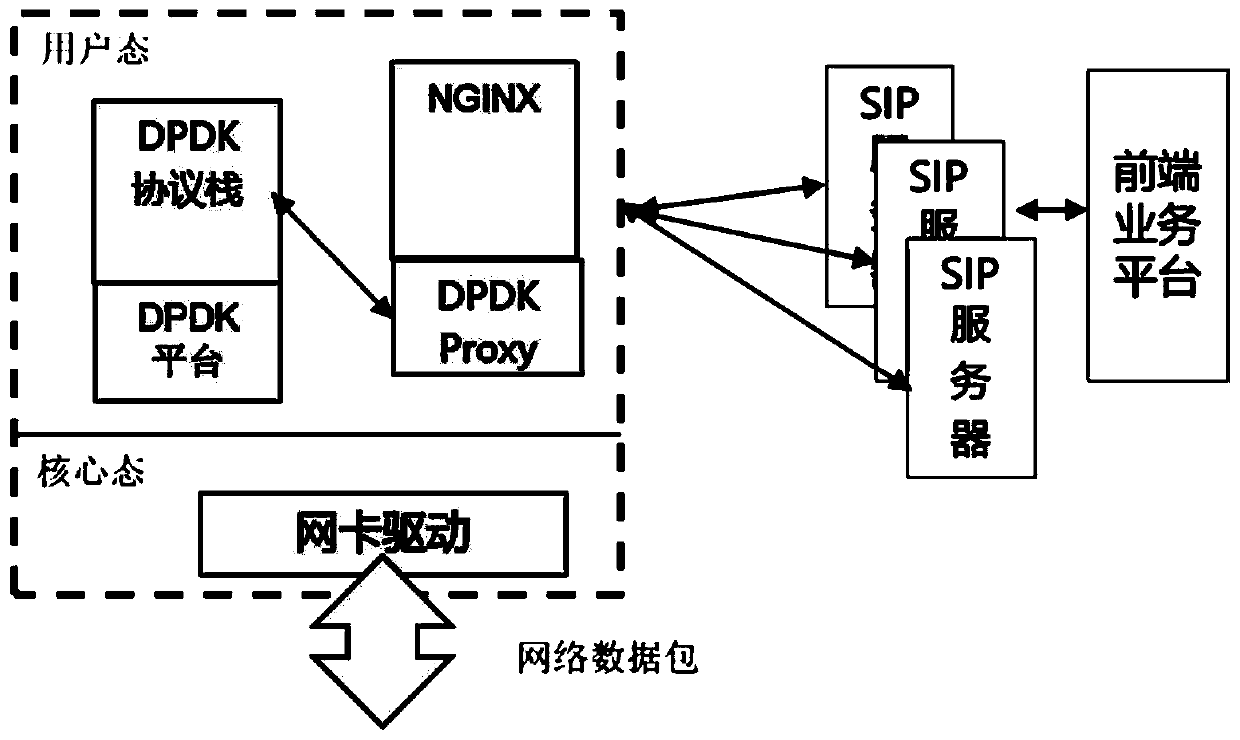

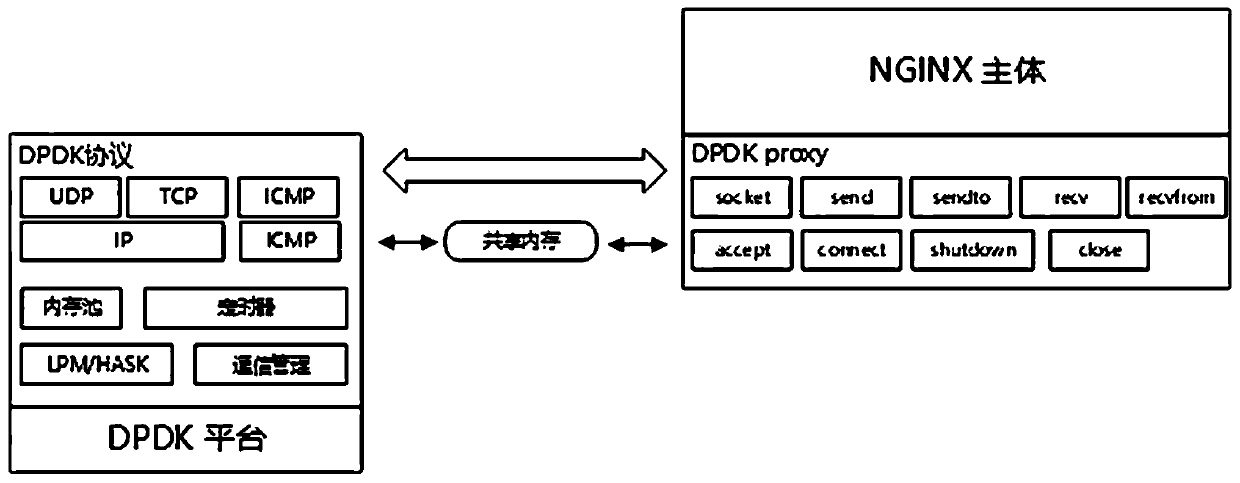

Method for improving performance of SIP gateway based on DPDK technology

Active | CN110768994A | Reduce load; Reduce the probability of packet loss; Data switching networks; Session Initiation Protocol; Data pack

The invention discloses a method for improving the performance of an SIP (Session Initiation Protocol) gateway based on DPDK (Data Plane Development Kit). Following the DPDK programming model, it implements a DPDK protocol stack that runs in interrupt-free polling mode, so that each traversed entity and its messages are processed quickly. At start-up, the protocol stack and NGINX are each bound to a CPU core, making full use of DPDK's CPU-core optimizations and reducing thread scheduling overhead. The protocol stack and the NGINX process communicate through the lock-free queue provided by DPDK, which keeps communication timely. Because the method bypasses the Linux kernel, capturing packets directly from the network card into the application layer and forwarding them to NGINX's DPDK protocol stack, it reduces the CPU load and packet loss rate of the SIP gateway. It is suitable for gateways that handle heavy telephone traffic and require timely communication and low packet loss.

Owner:中电福富信息科技有限公司 +1
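The lock-free queue between the protocol stack and NGINX in the abstract above is DPDK's ring, a C library. The Python sketch below shows only the single-producer/single-consumer index discipline such rings rely on: the producer alone advances `head`, the consumer alone advances `tail`, and a power-of-two size lets a bit mask replace the modulo. Python cannot express the memory-ordering guarantees a real lock-free ring needs, so this is a conceptual illustration only, and `SpscRing` is a hypothetical name.

```python
class SpscRing:
    """Fixed-size single-producer/single-consumer ring buffer (conceptual)."""

    def __init__(self, size):
        if size <= 0 or size & (size - 1):
            raise ValueError("size must be a positive power of two")
        self.buf = [None] * size
        self.mask = size - 1
        self.head = 0  # next slot to write; only the producer changes it
        self.tail = 0  # next slot to read; only the consumer changes it

    def enqueue(self, item):
        """Producer side: returns False instead of blocking when full."""
        if self.head - self.tail == len(self.buf):
            return False
        self.buf[self.head & self.mask] = item
        self.head += 1
        return True

    def dequeue(self):
        """Consumer side: returns None when the ring is empty."""
        if self.head == self.tail:
            return None
        item = self.buf[self.tail & self.mask]
        self.tail += 1
        return item
```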

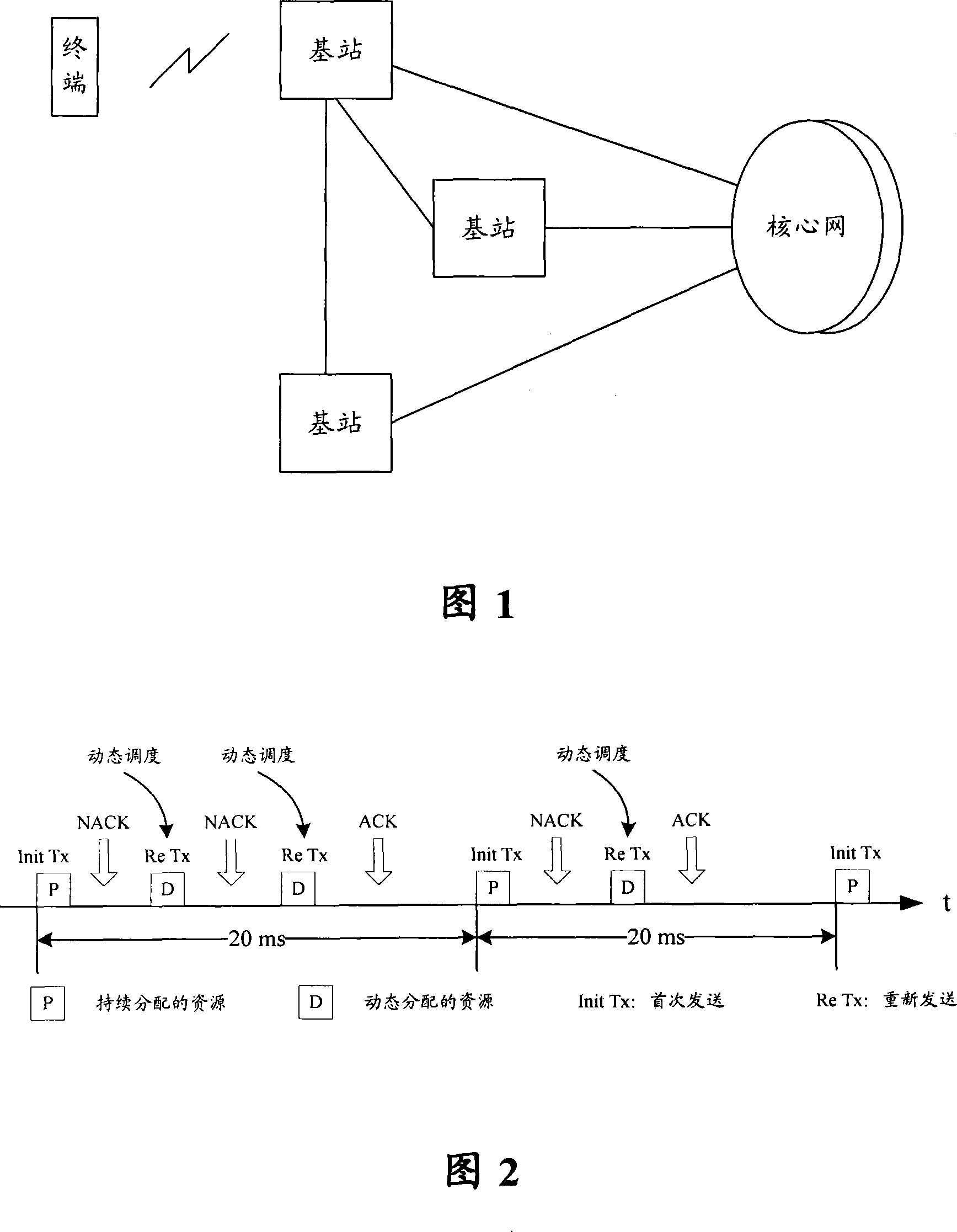

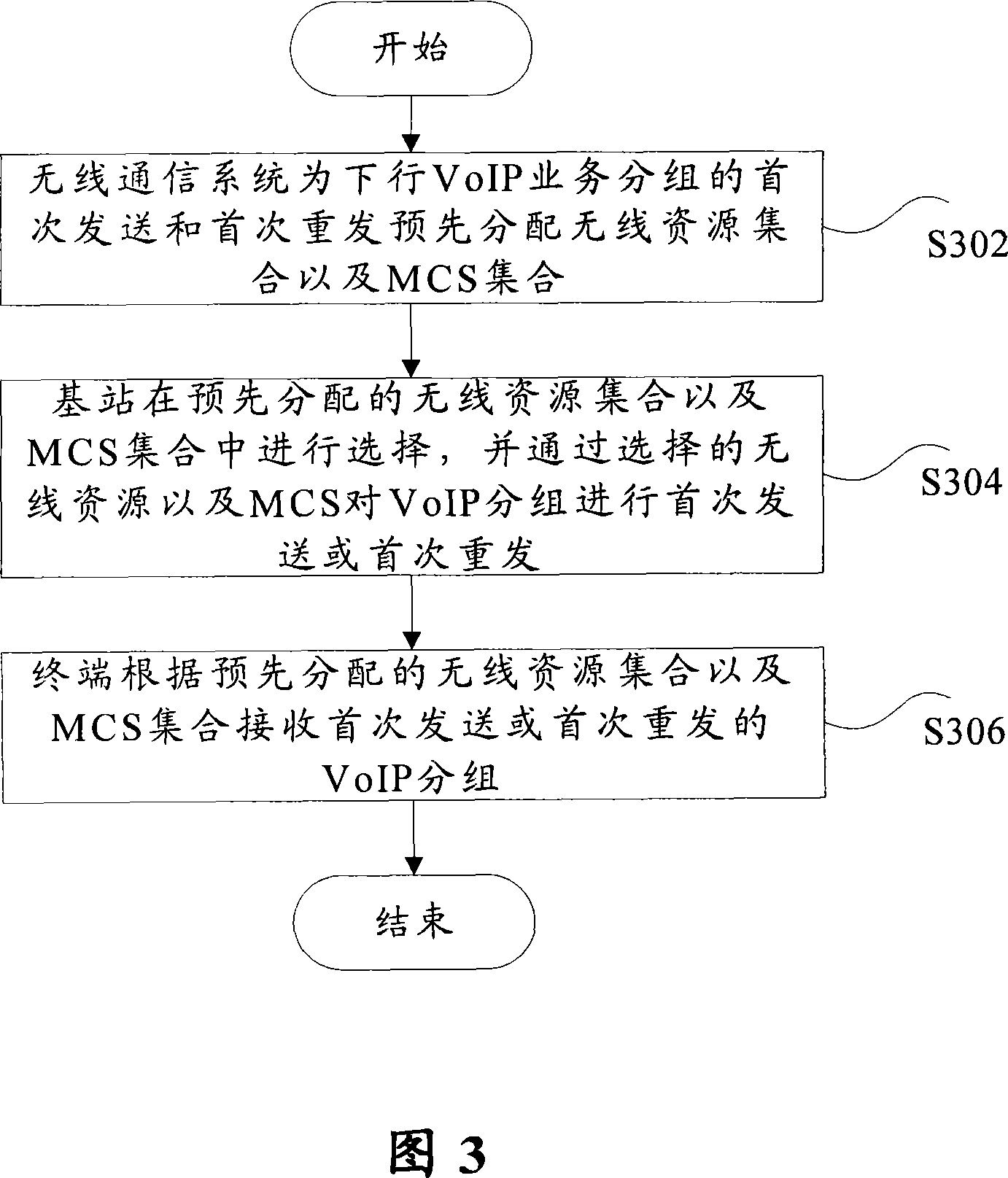

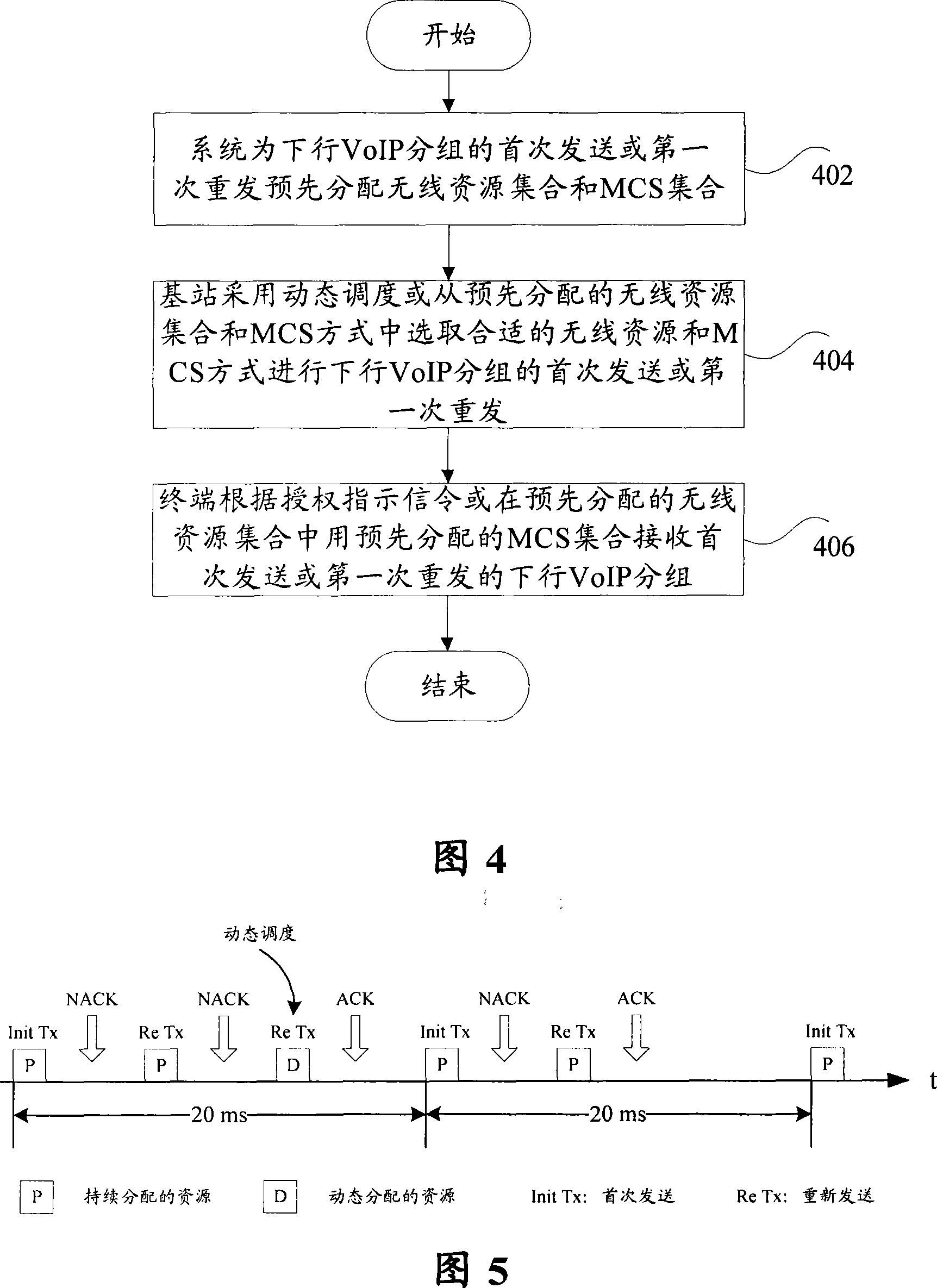

Scheduling method for downlink voice IP service

Inactive | CN101127806A | Reduce scheduling overhead; Large scheduling flexibility and self-adaptive ability; Interconnection arrangements; Radio/inductive link selection arrangements; Self adaptive; Wireless communication systems

The invention discloses a scheduling method for downlink VoIP service, comprising steps S302, S304 and S306. In step S302, the wireless communication system pre-assigns a set of radio resources and a set of MCSs (modulation and coding schemes) for the initial transmission and retransmission of downlink VoIP packets. In step S304, the base station selects from the pre-assigned radio resource set and MCS set and performs the initial transmission or retransmission of a VoIP packet using the selected radio resource and MCS. In step S306, the terminal receives the initially transmitted or retransmitted VoIP packet according to the pre-assigned radio resource set and MCS set. The method substantially reduces scheduling overhead while providing greater scheduling flexibility and adaptivity.

Owner:ZTE CORP
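Steps S304 and S306 of the VoIP abstract above can be pictured as two functions over the same pre-assigned sets. This sketch assumes the base station picks the first free resource and the highest MCS allowed by a hypothetical link-adaptation limit `max_mcs`, and that the terminal finds its packet by trying every pre-assigned (resource, MCS) pair until one decodes. The patent does not describe how the terminal searches, and all names are illustrative.

```python
from itertools import product


def base_station_select(resource_set, mcs_set, busy_resources, max_mcs):
    """S304: pick a free pre-assigned resource and the highest usable MCS."""
    usable = [m for m in mcs_set if m <= max_mcs]  # max_mcs: hypothetical limit
    if not usable:
        return None
    for resource in resource_set:
        if resource not in busy_resources:
            return resource, max(usable)
    return None


def terminal_receive(resource_set, mcs_set, try_decode):
    """S306: try every pre-assigned (resource, MCS) pair until one decodes.

    try_decode(resource, mcs) returns the payload, or None on failure.
    """
    for resource, mcs in product(resource_set, mcs_set):
        payload = try_decode(resource, mcs)
        if payload is not None:
            return resource, mcs, payload
    return None
```

No per-packet grant is sent. The terminal's search stays bounded because it only considers the pre-assigned sets.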

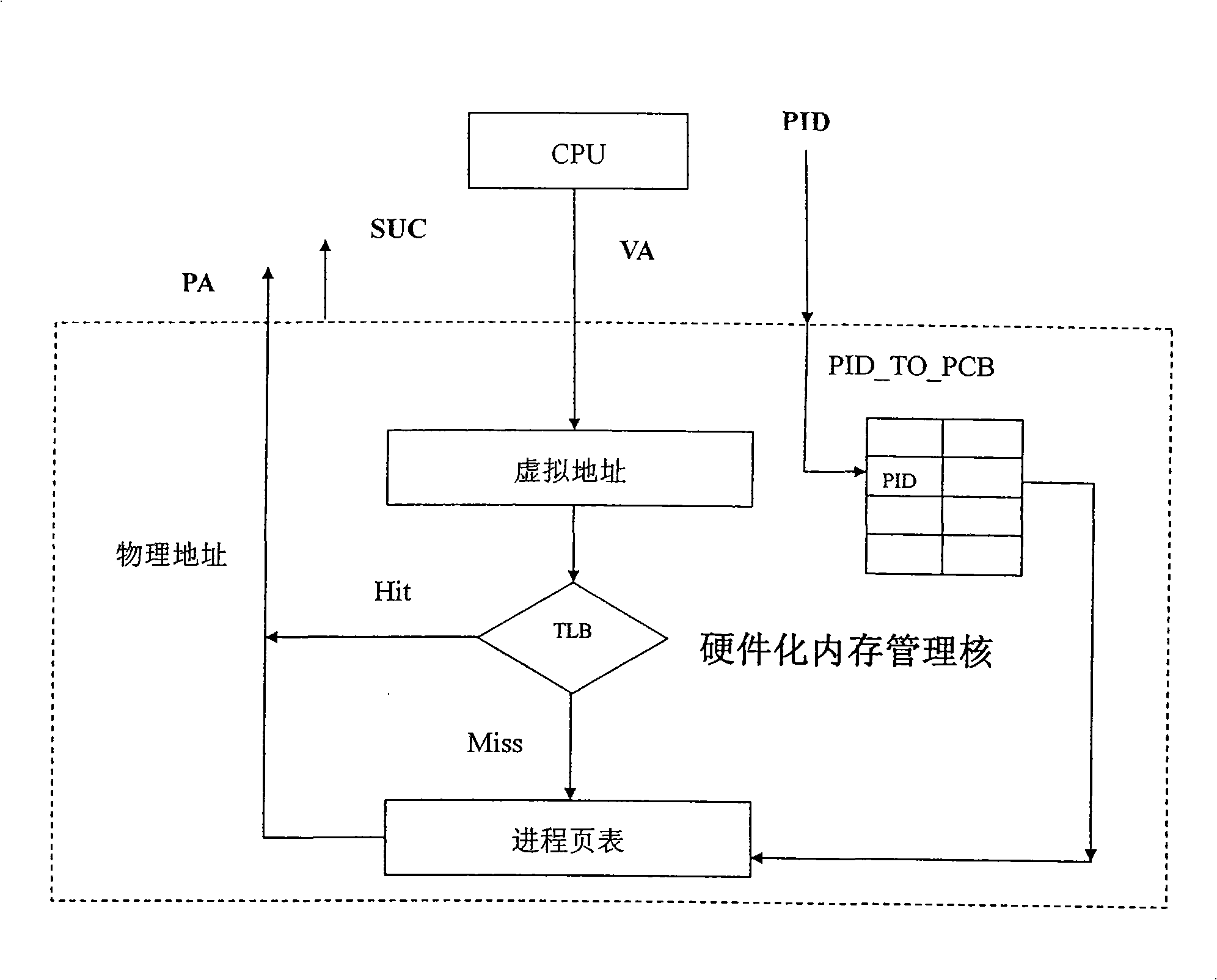

Hardware method for the process memory management core related to scheduling performance

Inactive | CN101539869A | Improve efficiency; Reduce overhead; Program initiation/switching; Process memory; Operational system

The invention discloses a hardware method for the process memory management core related to scheduling performance. The process memory management functions of an operating system that directly affect scheduling performance are handed over to hardware. These functions include creating, deleting and switching process page tables, virtual address mapping, and virtual-to-physical address translation. This reduces the overhead of process switching during scheduling and makes process switching more efficient. The hardware method can also be applied to other parts of the operating system. Moving functions originally implemented in software code into a hardware unit also prevents that part of the operating system from being maliciously modified, ensuring the correctness and reliability of processing.

Owner:ZHEJIANG UNIV

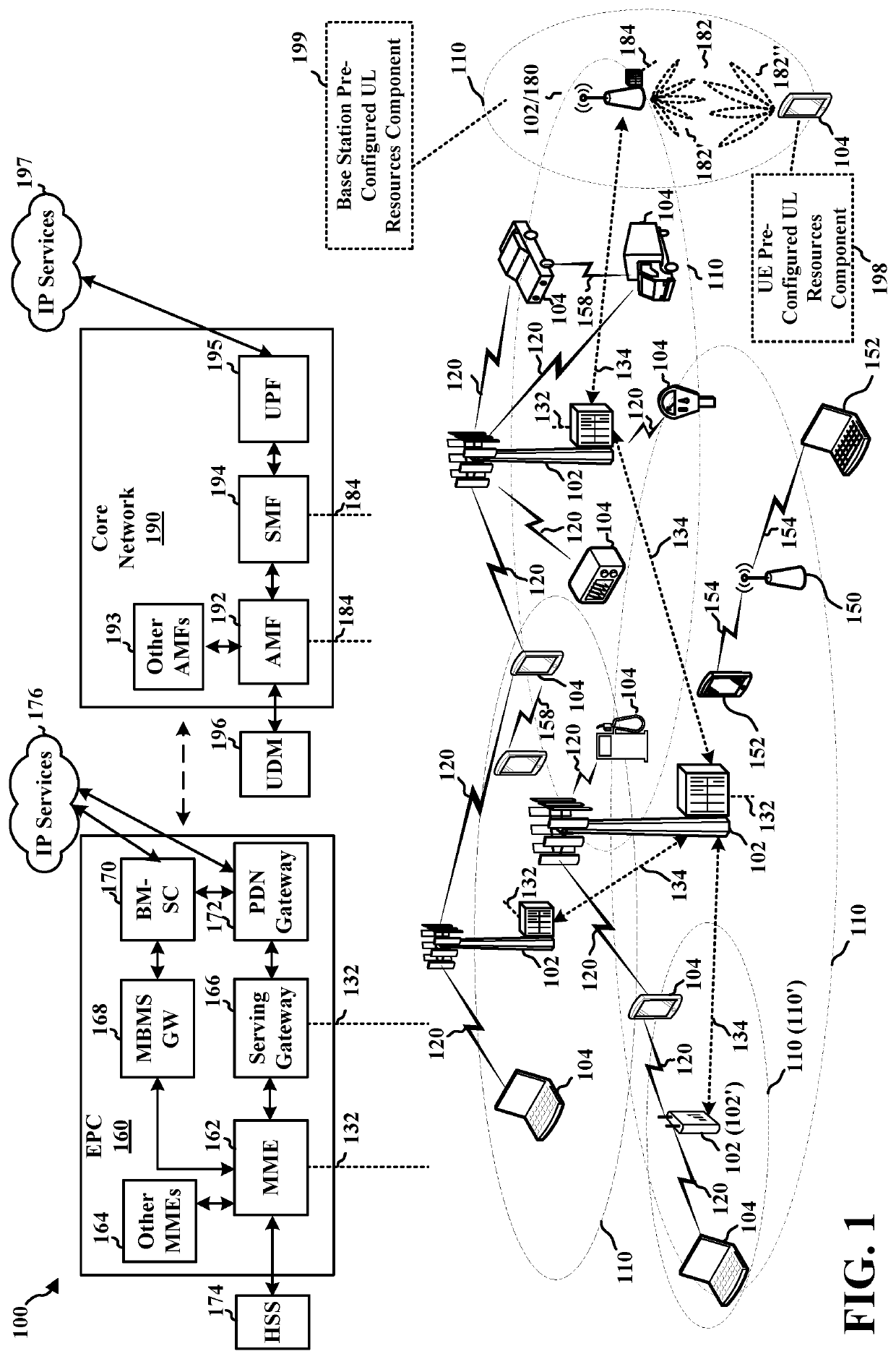

Resources and schemes for uplink transmissions in narrowband communication

Pending | US20210274568A1 | Increase power consumption; Improve transmission efficiency; Synchronisation arrangement; Signal allocation; Uplink transmission; Real-time computing

Apparatus, methods, and computer-readable media for facilitating the use of pre-configured UL resources to transmit grant-free data or reference signals are disclosed herein. An example method for wireless communication by a UE includes transmitting, to a base station, a capability of the UE to transmit data in a first message of a random access procedure; receiving pre-configured UL resources from the base station; and transmitting data in the first message of the random access procedure using the pre-configured UL resources.

Owner:QUALCOMM INC

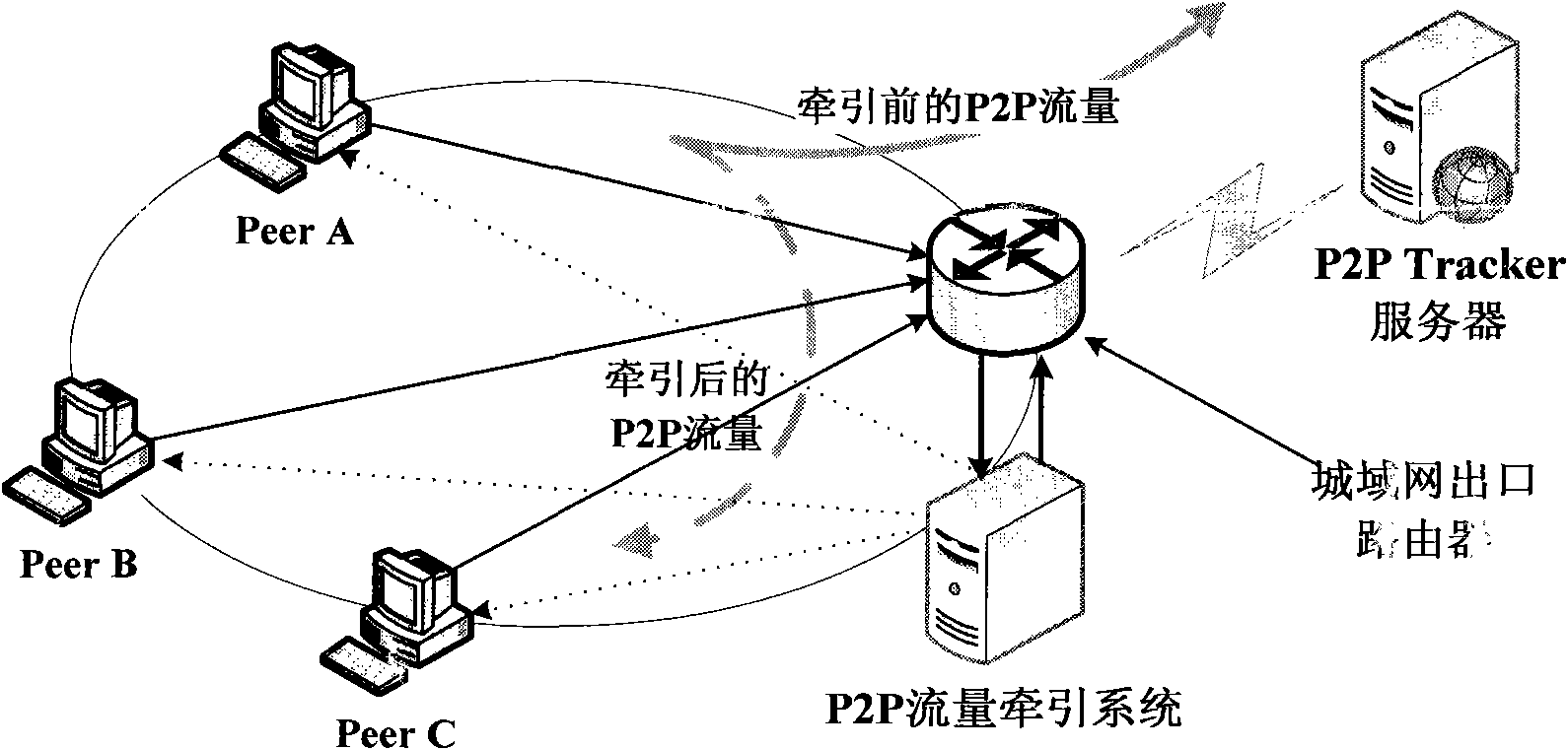

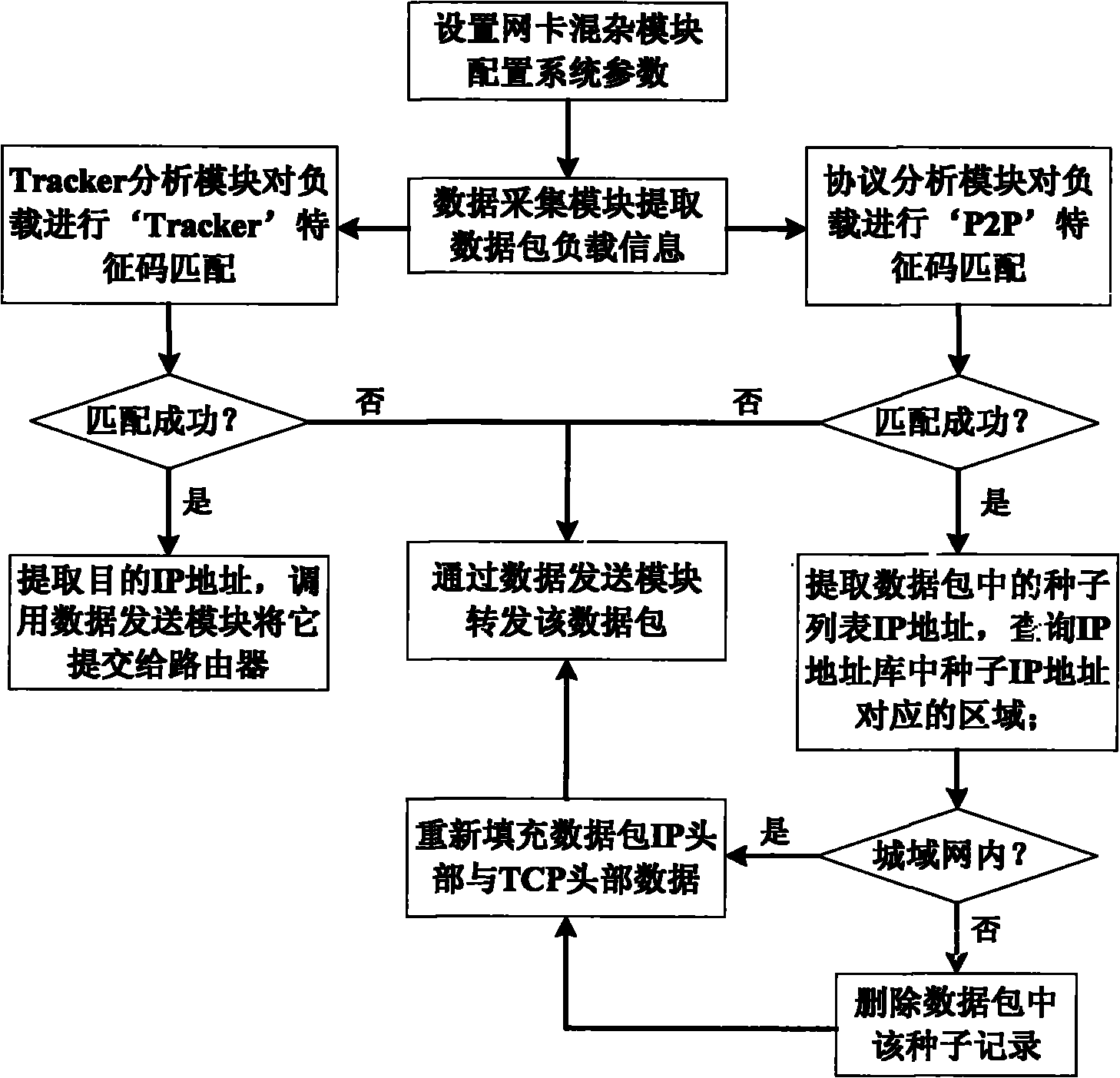

Peer-to-peer network flow traction system and method

Inactive | CN101895469A | Reduce egress flow; Reduce scheduling overhead; Data switching networks; Traffic capacity; Traction system

The invention relates to a P2P (peer-to-peer) traffic traction system for peer-to-peer networks. Tracker signature matching and P2P signature matching are performed on captured data packets, and successfully matched packets are sent to a Tracker server. The seed list packets exchanged between Peer and Tracker are parsed to extract seed information, which is compared with the addresses stored in an IP address database. If an address lies outside the metropolitan area network, the corresponding seed information is deleted from the packet; otherwise it is kept. This achieves traction of P2P traffic. The invention can effectively control the traffic generated by traditional P2P download software such as BT, EasyMule and Thunder, and can be widely applied to Internet traffic traction, Internet traffic control and related fields.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
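The seed-list filtering step in the abstract above (keep peers inside the metropolitan area network, drop the rest) maps naturally onto Python's standard `ipaddress` module. The metro prefixes below are hypothetical placeholders for the patent's IP address database, and `filter_seed_list` is an illustrative name.

```python
import ipaddress

# Hypothetical metropolitan-area-network prefixes; a real deployment would
# load these from its IP address database.
METRO_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
]


def filter_seed_list(peers, metro_networks=METRO_NETWORKS):
    """Keep only (ip, port) peers inside the metro networks; drop external ones."""
    kept = []
    for ip, port in peers:
        address = ipaddress.ip_address(ip)
        if any(address in network for network in metro_networks):
            kept.append((ip, port))
    return kept
```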

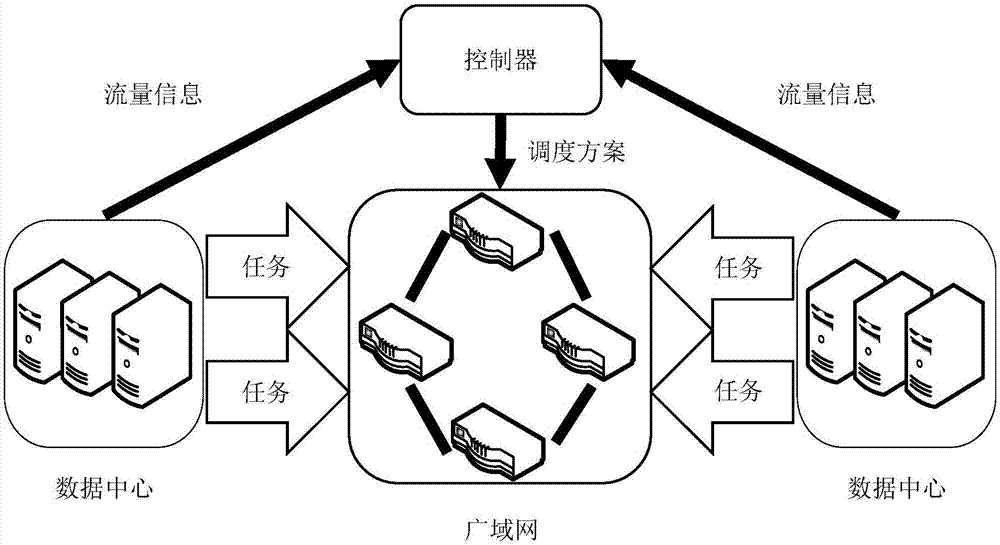

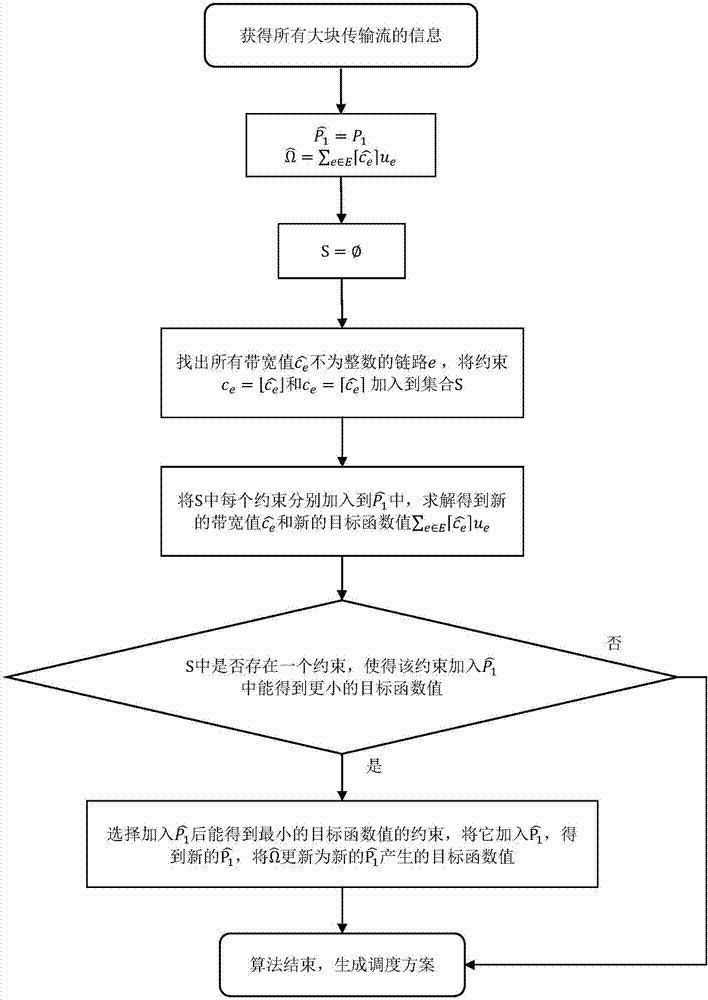

Data center-oriented offline scene low-bandwidth cost flow scheduling scheme

Active | CN107454009A | Minimize overhead; Practical; Data switching networks; Traffic capacity; Quality of service

The invention relates to Internet transmission control technology, specifically a low-bandwidth-cost flow scheduling scheme for offline scenarios in data centers. It aims to schedule every block transmission flow so that all of them complete on time while the data center owner's bandwidth rental cost is minimized. The execution flow is: 1) obtain the source node, destination node, data size, arrival time and deadline of every block transmission flow within a rental period; 2) divide the rental period into transmission time slots, and schedule the transmission path and rate of each block transmission flow in each slot so that bandwidth cost is minimized while all block transmission flows are guaranteed to complete. While guaranteeing quality of service, the scheme effectively reduces the cost the data center owner pays to rent bandwidth from an Internet service provider, and thus the data center's operating cost.

Owner:TSINGHUA UNIV

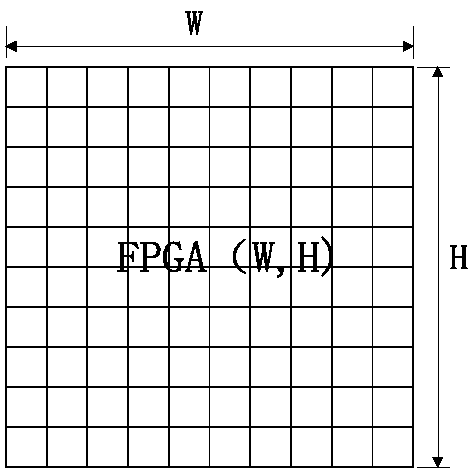

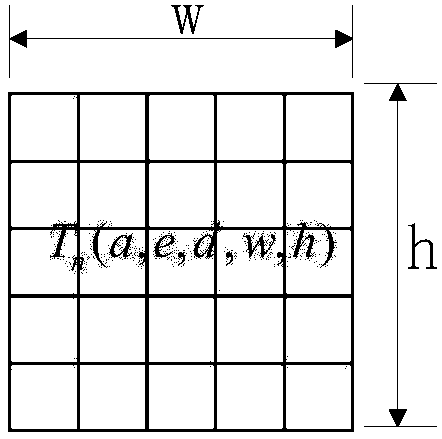

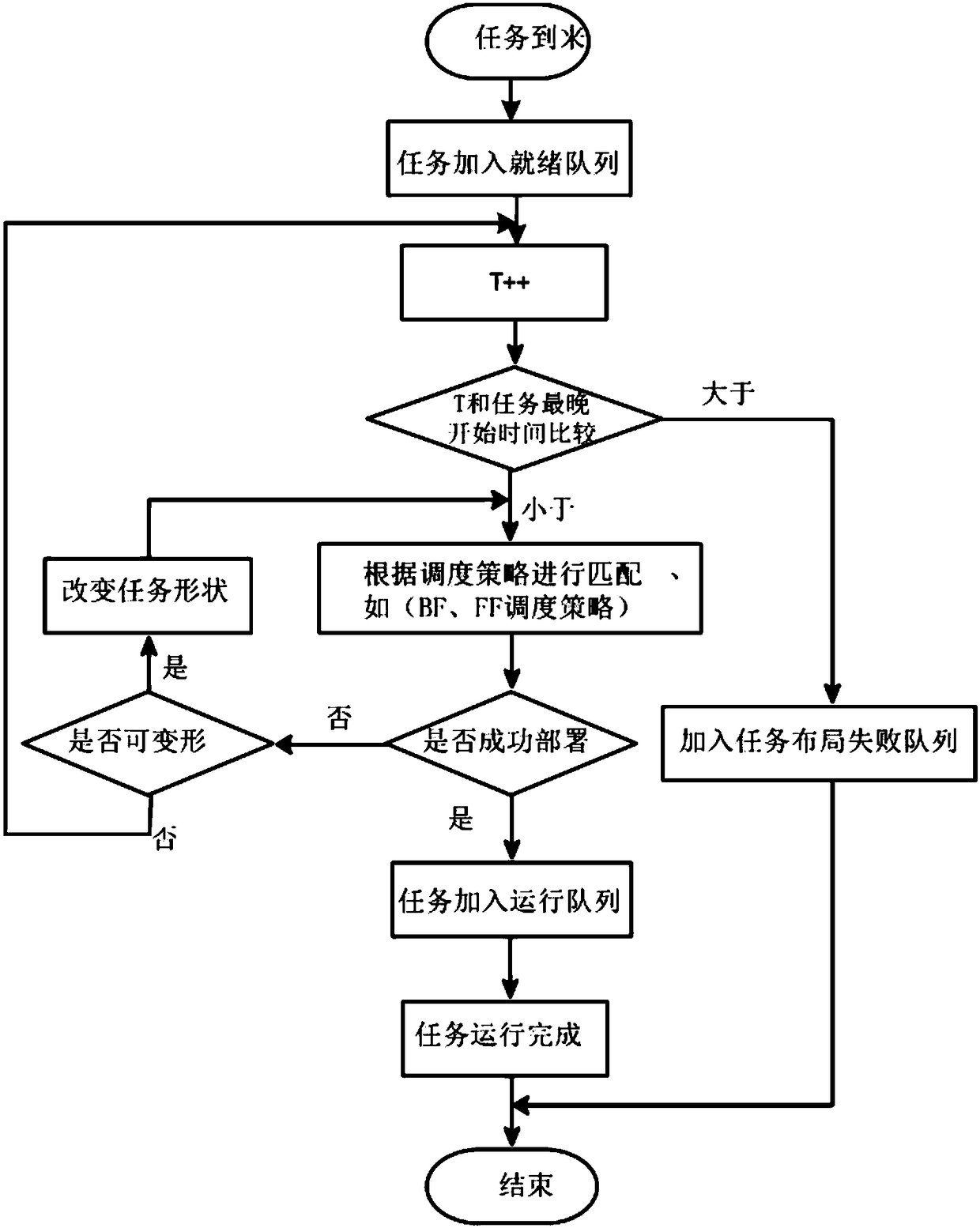

Layout method of dynamic reconfigurable FPGA

Active | CN108256182A | Improve resource utilization; Improve the success rate of layout; Computer aided design; Special data processing applications; Computer architecture; Utilization rate

The invention discloses a layout method for a dynamically reconfigurable FPGA. The computing resources of the FPGA dynamically reconfigurable system are modeled and managed with a bitmap, and a task model is established for each single hardware task Tn. During FPGA online layout, the task model is deformed with the FF Reshaped-Task-Model scheduling model to complete the layout. The method introduces a variable task-model strategy into FPGA online layout: when the first layout attempt fails, the shape of the online task is changed and the layout algorithm is scheduled again. Because task shapes can change, task flexibility and placement options increase, which raises the layout success rate and the utilization of FPGA resources.

Owner:XI AN JIAOTONG UNIV
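The "reshape and retry" idea in the abstract above can be sketched on a small occupancy grid. A list of Boolean rows stands in for the patent's bitmap, and placement is first-fit. The reshape rule used here (try the other rectangles with the same area) is a hypothetical stand-in, because the abstract does not define the FF Reshaped-Task-Model. `find_slot` and `place` are illustrative names.

```python
def find_slot(grid, width, height):
    """First-fit scan for a free width x height rectangle; returns (row, col)."""
    rows, cols = len(grid), len(grid[0])
    for r in range(rows - height + 1):
        for c in range(cols - width + 1):
            if not any(grid[r + i][c + j] for i in range(height) for j in range(width)):
                return r, c
    return None


def place(grid, width, height):
    """Place a task, trying equal-area reshaped variants if the first fit fails.

    Returns (row, col, width, height) of the placed shape, or None.
    """
    area = width * height
    shapes = [(width, height)] + [
        (w, area // w) for w in range(1, area + 1)
        if area % w == 0 and (w, area // w) != (width, height)
    ]
    for w, h in shapes:
        slot = find_slot(grid, w, h)
        if slot is not None:
            r, c = slot
            for i in range(h):
                for j in range(w):
                    grid[r + i][c + j] = True  # mark the cells as occupied
            return r, c, w, h
    return None
```

On a 2 x 6 grid with a 3 x 2 task already placed, a 2-wide, 3-high task cannot fit as given. It succeeds once reshaped to 3 x 2.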

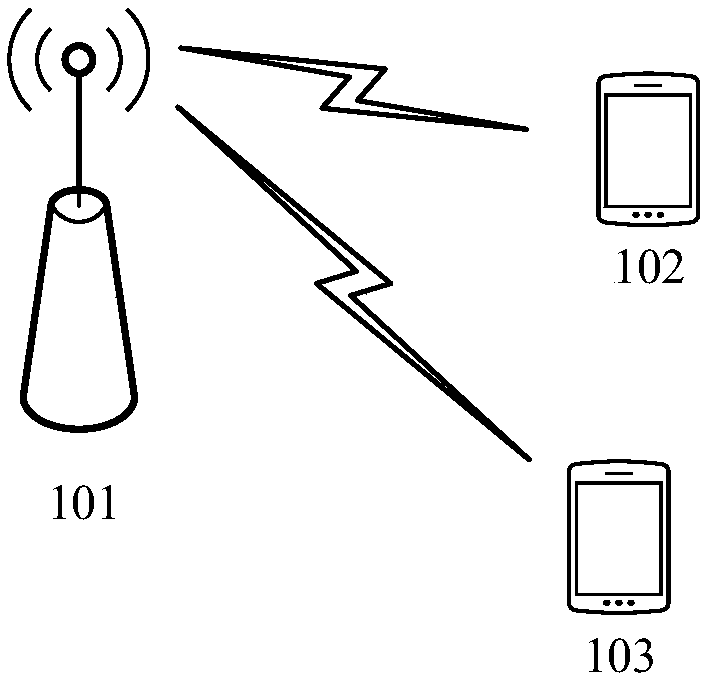

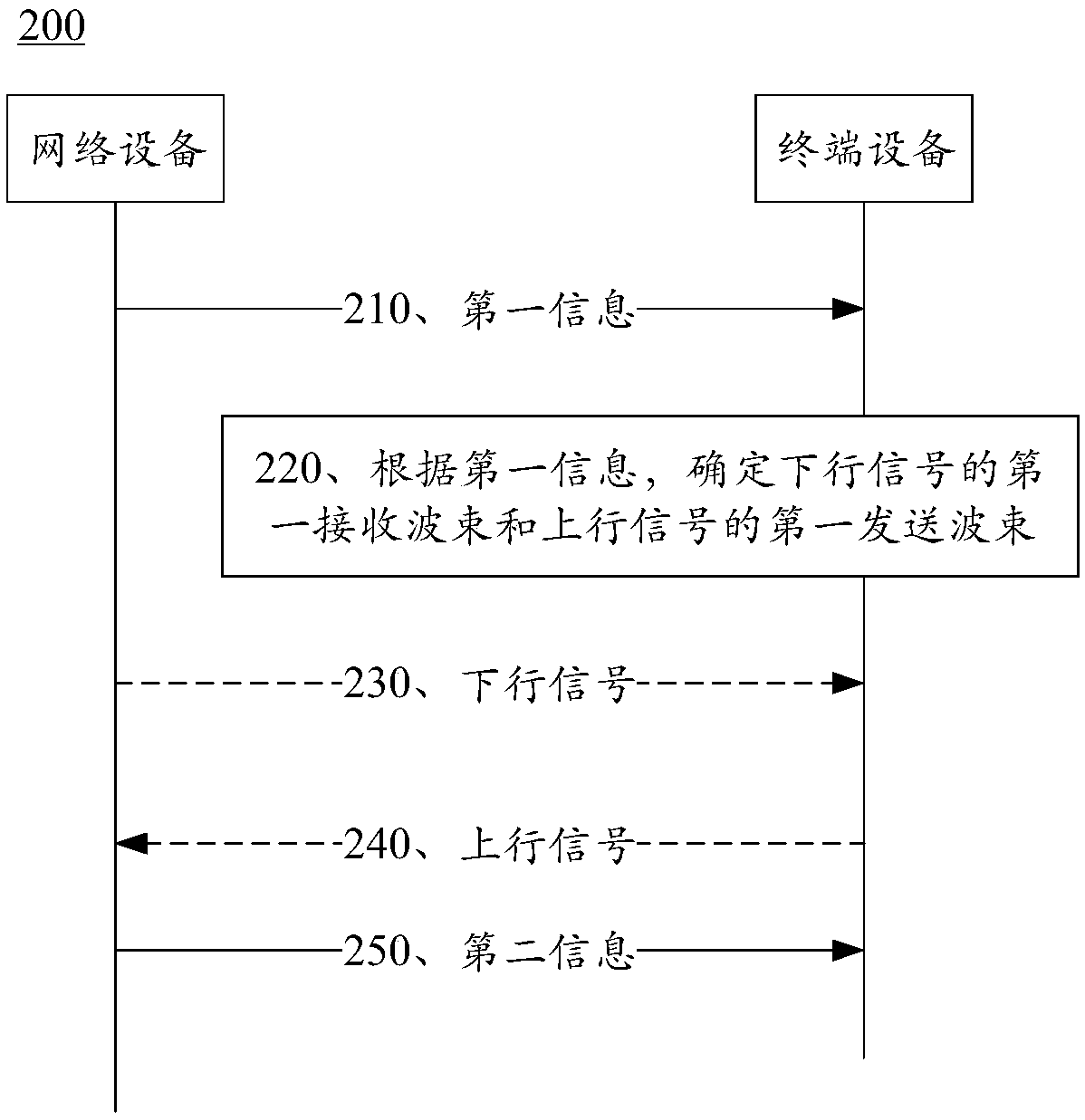

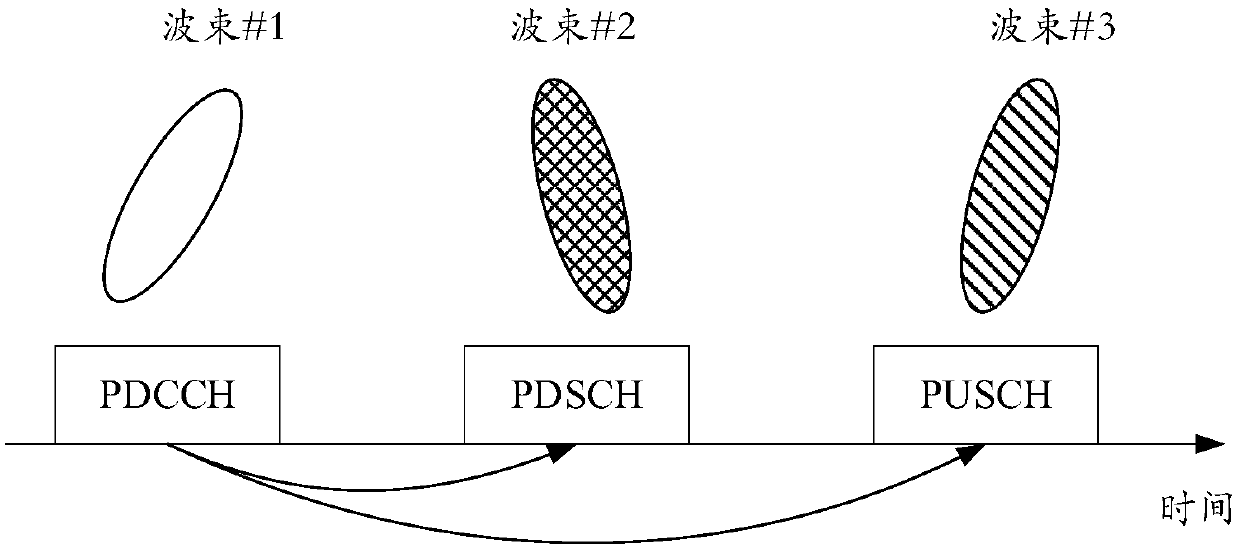

Beam indication method and device

Active | CN110933749A | Reduce scheduling overhead; Signal allocation; Radio transmission; Telecommunications; Electrical and Electronics engineering

Provided is a beam indication method that saves the scheduling overhead of downlink signal reception and uplink signal transmission. The method comprises: receiving first information from network equipment, wherein the first information is used to determine a first receiving beam for a downlink signal and a first sending beam for an uplink signal; and determining the first receiving beam of the downlink signal and the first sending beam of the uplink signal according to the first information.

Owner:CHENGDU HUAWEI TECH

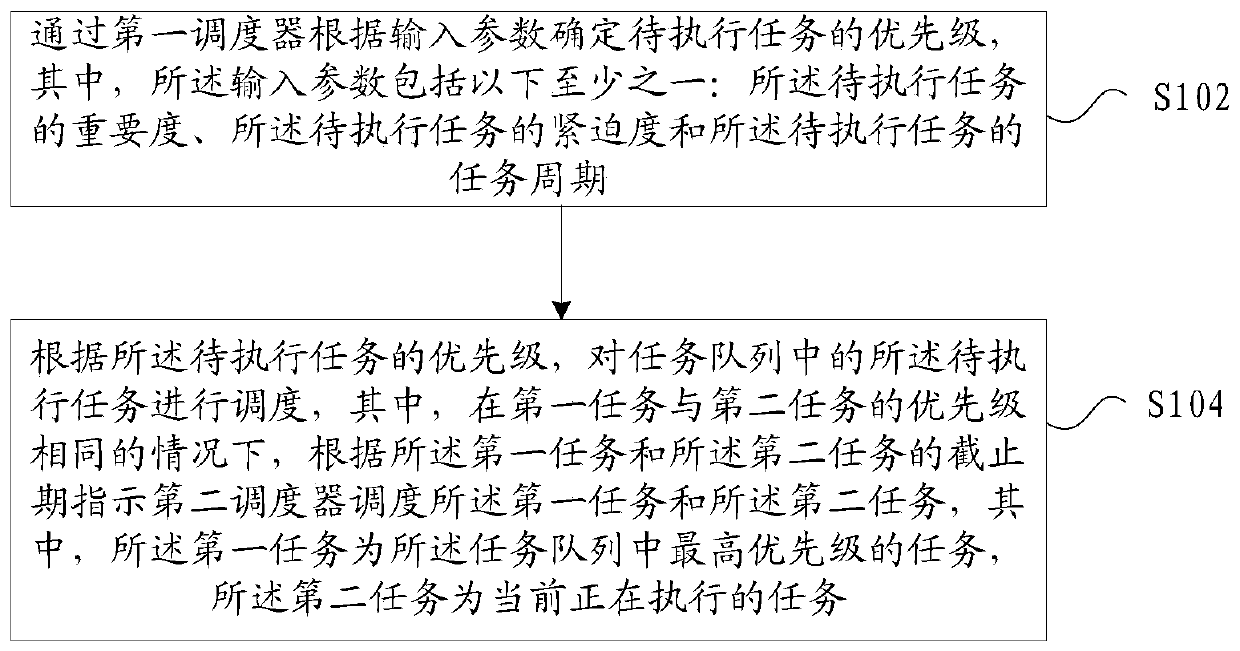

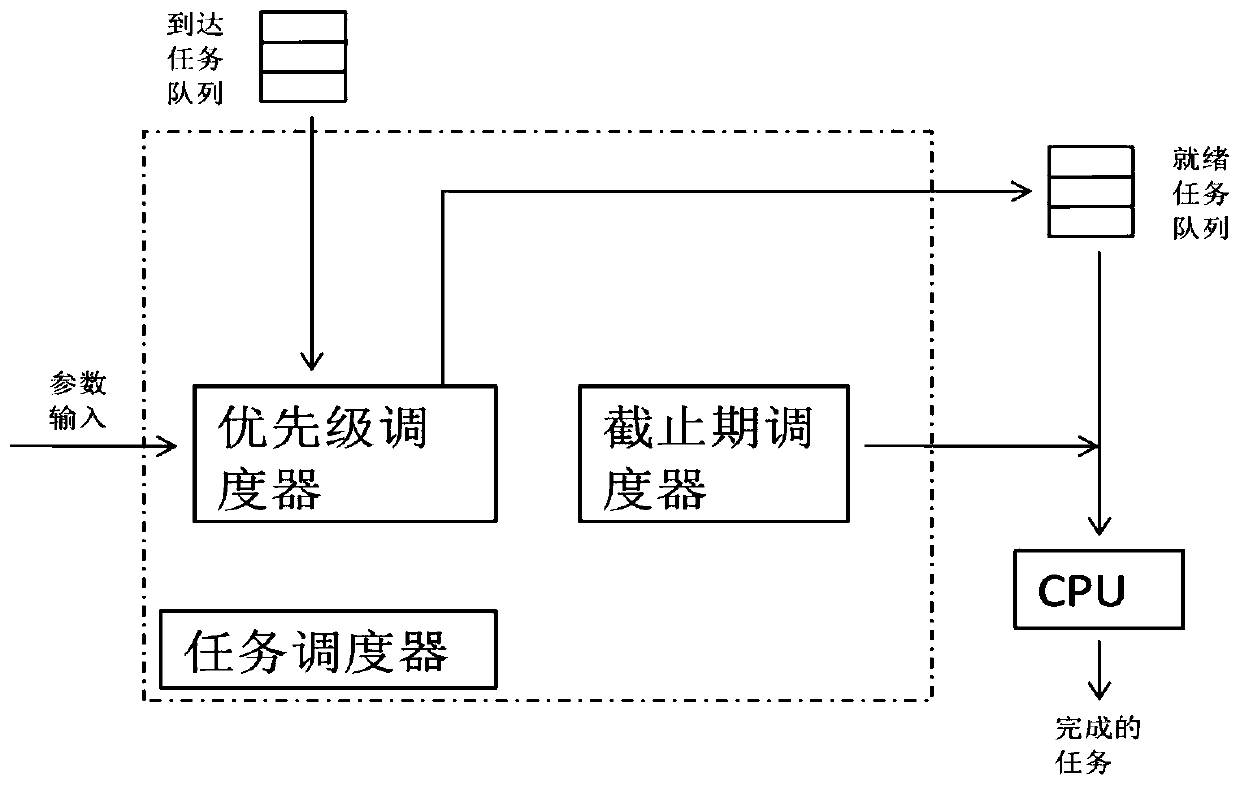

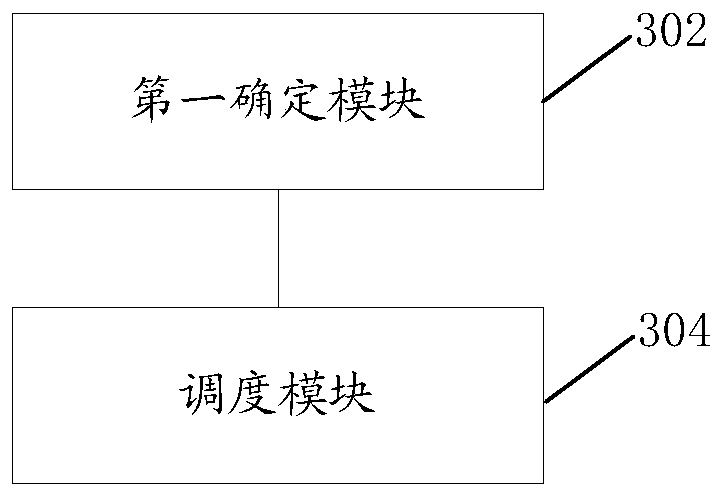

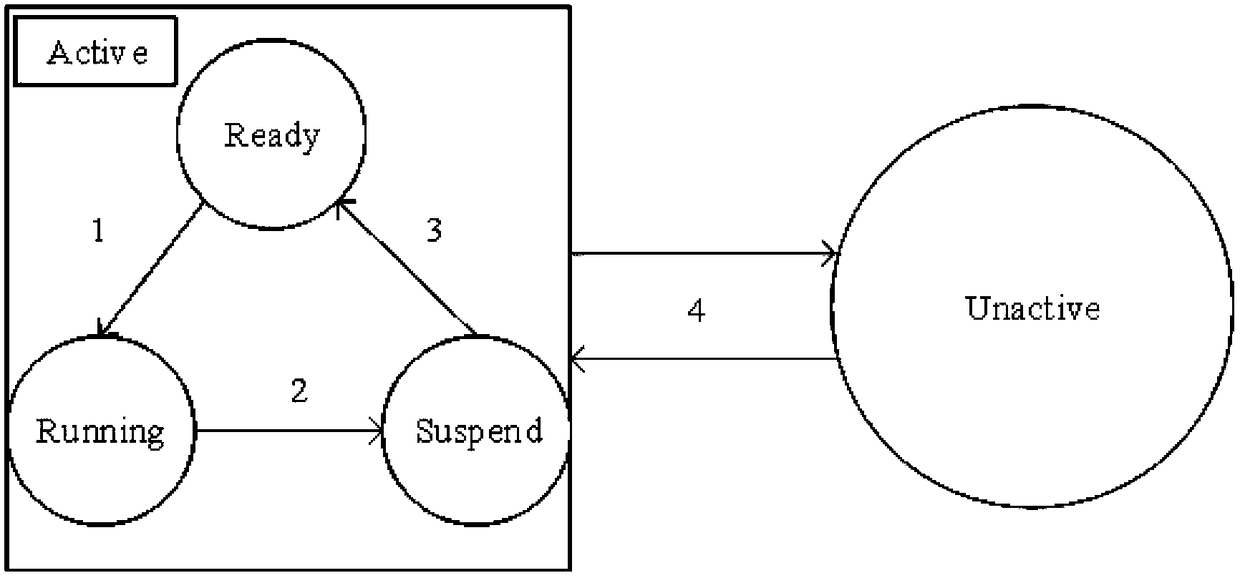

Task scheduling method and device of smart home operating system and storage medium

Pending | CN111143045A | Solve technical problems such as large scheduling overhead; Meet real-time requirements; Program initiation/switching; Computer control; Operational system; Computer science

The invention provides a task scheduling method and device for a smart home operating system, and a storage medium. The method comprises: determining, through a first scheduler, the priority of each task to be executed according to input parameters, which include at least one of the task's importance, its urgency and its task period; scheduling the tasks in the task queue according to these priorities; and, when a first task and a second task have the same priority, instructing a second scheduler to schedule them according to their deadlines. The first task is the highest-priority task in the task queue, and the second task is the currently executing task. The method and device solve the problem of large scheduling overhead when tasks are scheduled in a smart home system, better meet the system's real-time requirements, and reduce its scheduling overhead.

Owner:QINGDAO HAIER TECH
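The two-scheduler rule in the Haier abstract above works as follows: priority decides first, and earliest deadline breaks ties between the best queued task and the running task. This sketch combines importance, urgency and period with hypothetical weights (the patent gives no formula), and `Task`, `priority` and `pick_next` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    importance: int   # larger = more important
    urgency: int      # larger = more urgent
    period_ms: int
    deadline_ms: int  # absolute deadline


def priority(task):
    """First scheduler: combine the input parameters (weights are hypothetical)."""
    return 2 * task.importance + 3 * task.urgency + 1000 // task.period_ms


def pick_next(queue, current):
    """Choose between the best queued task and the currently executing task."""
    if not queue:
        return current
    best = max(queue, key=priority)
    if current is None or priority(best) > priority(current):
        return best
    if priority(best) < priority(current):
        return current
    # Equal priority: the second scheduler decides by earliest deadline.
    return best if best.deadline_ms < current.deadline_ms else current
```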

Service data processing method and device and micro-service architecture system

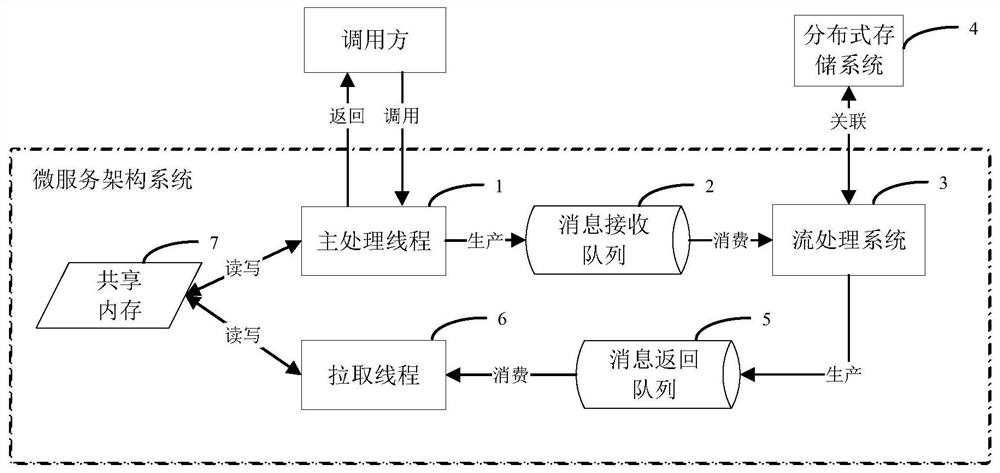

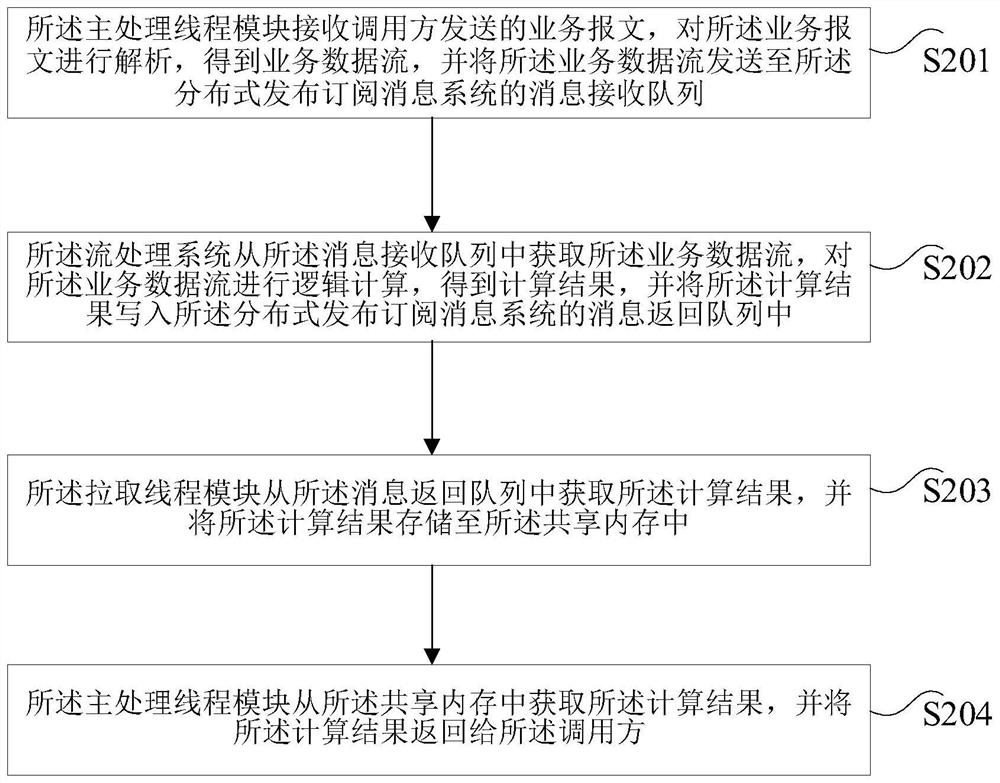

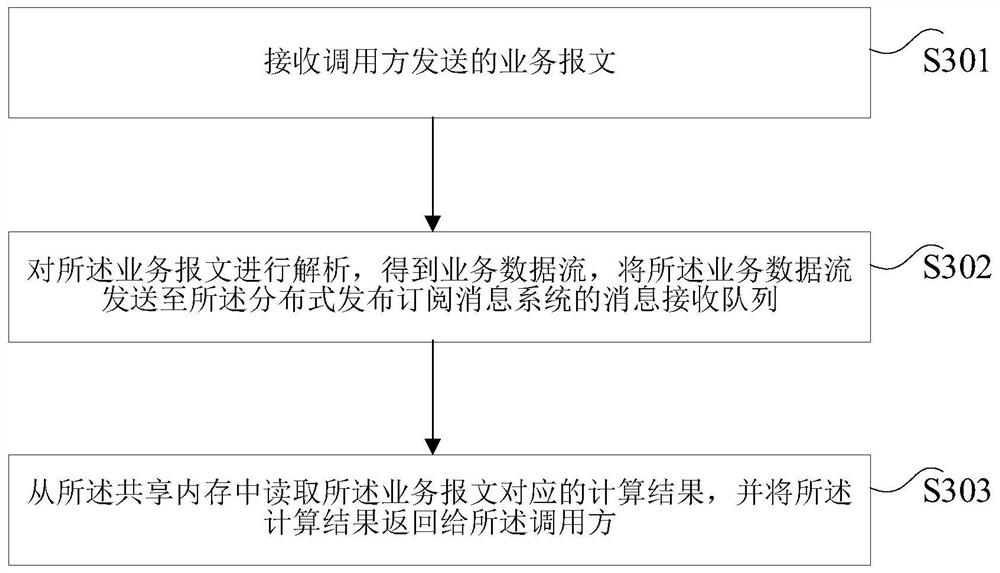

Pending | CN113553153A | Improve efficiency; Improve real-time performance; Program initiation/switching; Program synchronisation; Data stream; Engineering

The invention relates to the technical field of micro-services, and discloses a service data processing method and device and a micro-service architecture system. The system comprises a main processing thread module, a shared memory, a pull thread module, a distributed publish/subscribe messaging system and a stream processing system. The main processing thread module receives a service message, parses it to obtain a service data stream, and sends the stream to a message receiving queue of the distributed publish/subscribe messaging system; it also obtains calculation results from the shared memory and returns them to the caller. The stream processing system takes the service data stream from the message receiving queue, performs logic calculation on it, and writes the result to a message return queue of the distributed publish/subscribe messaging system. The pull thread module takes the calculation result from the message return queue and stores it in the shared memory. The scheme can improve system throughput and server resource utilization.

Owner:INDUSTRIAL AND COMMERCIAL BANK OF CHINA
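The data path in the abstract above runs from the main thread through the receive queue, stream processor and return queue, then through the pull thread to shared memory and back to the caller. The in-process sketch below uses Python's `queue` and `threading` modules. In-memory queues stand in for the distributed publish/subscribe system, a dictionary stands in for shared memory, and summing the values is a hypothetical placeholder for the "logic calculation". `run_pipeline` is an illustrative name, and requests are handled one at a time for simplicity.

```python
import queue
import threading


def run_pipeline(requests, timeout=2.0):
    """Send (request_id, values) pairs through the pipeline; return results."""
    receive_q = queue.Queue()   # stands in for the message receiving queue
    return_q = queue.Queue()    # stands in for the message return queue
    shared = {}                 # stands in for the shared memory
    ready = threading.Condition()
    stop = object()             # sentinel that shuts the workers down

    def stream_processor():
        while (item := receive_q.get()) is not stop:
            request_id, values = item
            return_q.put((request_id, sum(values)))  # hypothetical calculation
        return_q.put(stop)

    def pull_thread():
        while (item := return_q.get()) is not stop:
            request_id, result = item
            with ready:
                shared[request_id] = result
                ready.notify_all()

    workers = [threading.Thread(target=stream_processor),
               threading.Thread(target=pull_thread)]
    for worker in workers:
        worker.start()

    results = {}
    for request_id, values in requests:  # main processing thread
        receive_q.put((request_id, values))
        with ready:
            if ready.wait_for(lambda: request_id in shared, timeout=timeout):
                results[request_id] = shared.pop(request_id)
    receive_q.put(stop)
    for worker in workers:
        worker.join()
    return results
```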

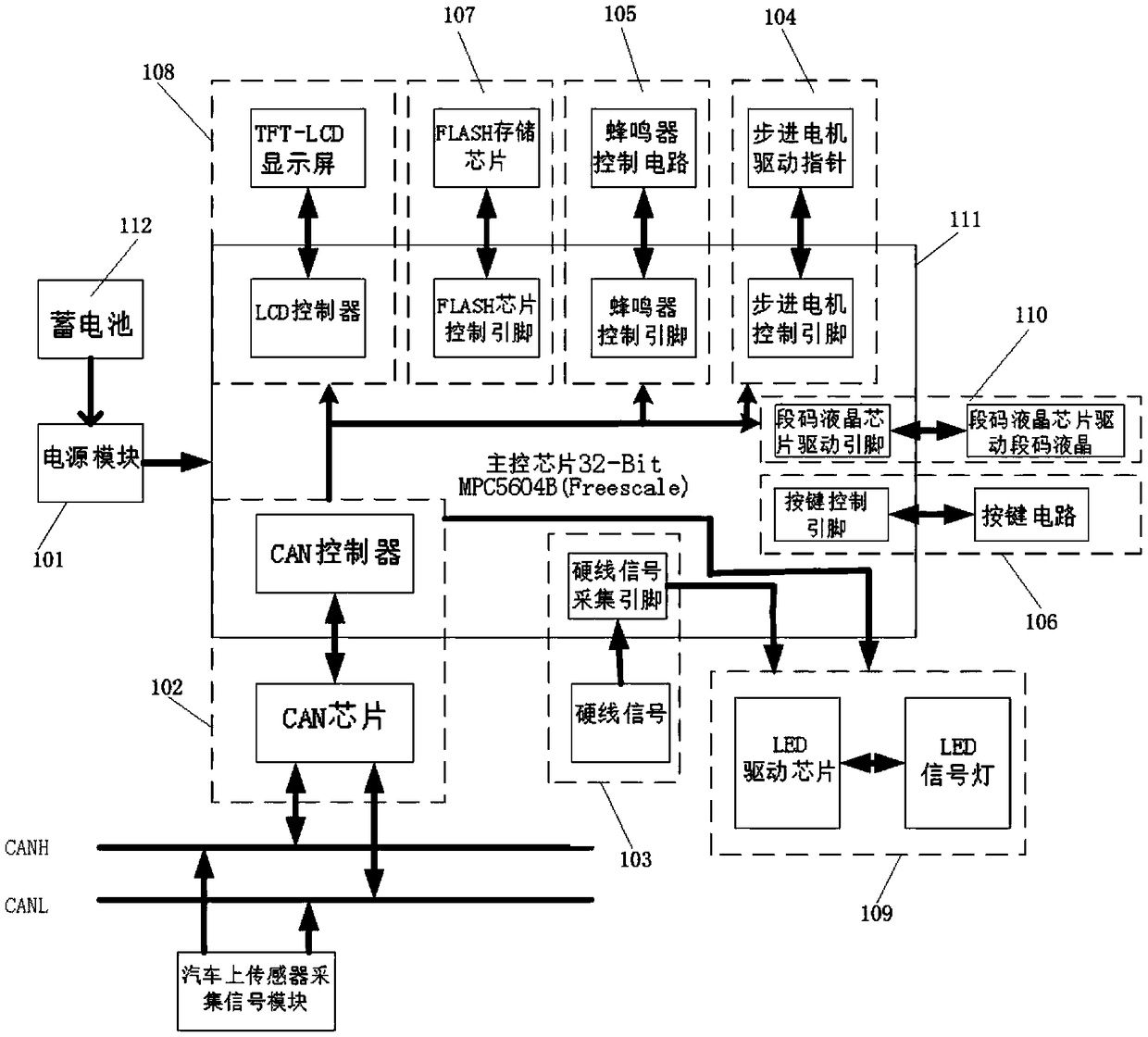

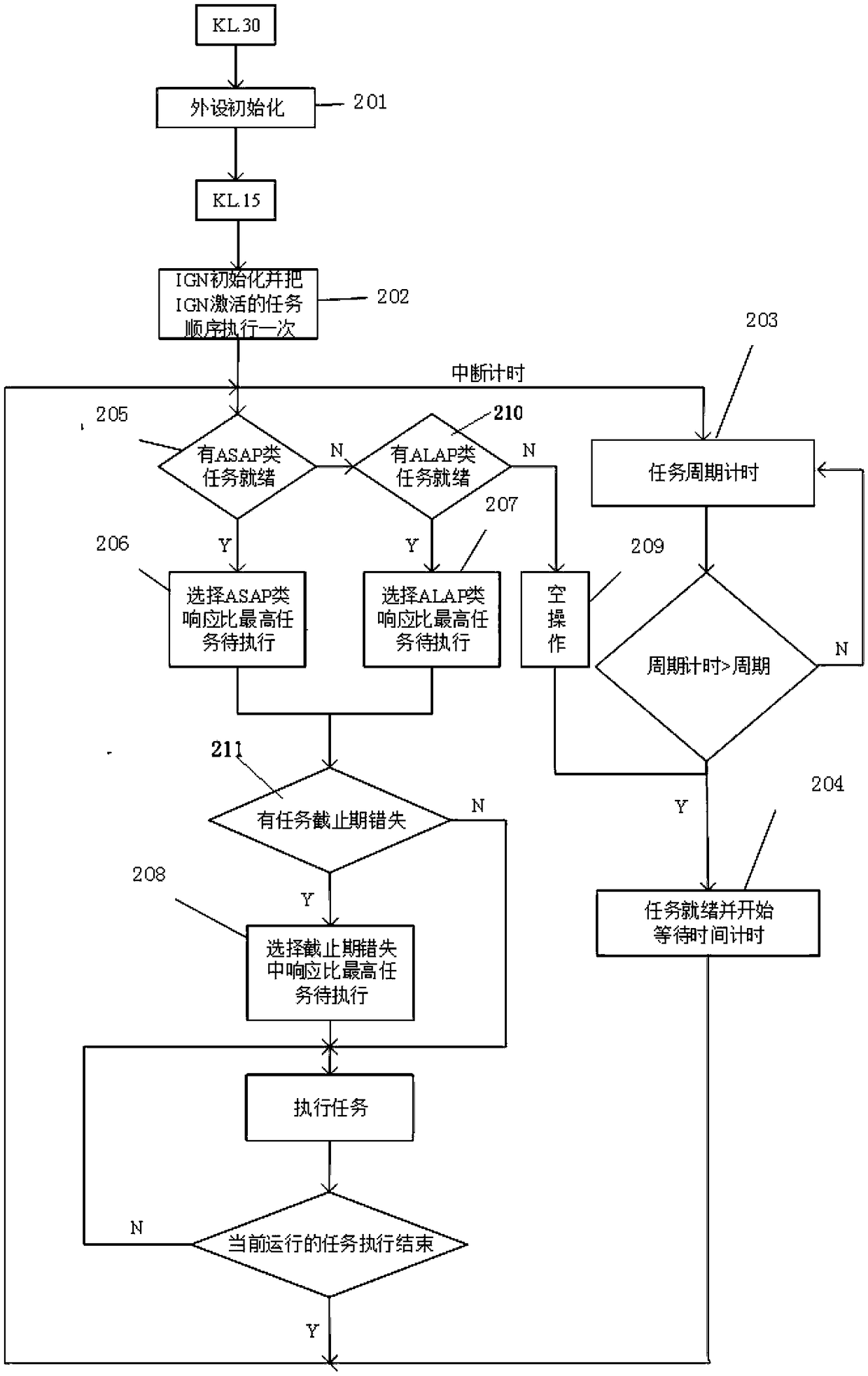

ELectric vehicLe instrumentation system and scheduLing method thereof

InactiveCN108859759AImprove real-time performanceReduce scheduling overheadVehicle componentsEngineeringElectric vehicle

The invention provides an eLectric vehicLe instrumentation system and a scheduLing method thereof. The eLectric vehicLe instrumentation system comprises a power moduLe, a CAN communication moduLe, a hard Line signaL acquisition moduLe, a stepper motor moduLe, a buzzer moduLe, a key moduLe, an FLASH storage moduLe, a TFT-LCD dispLay moduLe, an LED moduLe and a segment code LCD moduLe, wherein the voLtage of the power moduLe is divided into two types: cuttabLe and uncuttabLe. The task of each moduLe described above is written as a function interface, the task is divided into ASAP cLass and ALAPcLass, and the ASAP cLass task is executed first. Based on an improved high-response ratio priority task scheduLing aLgorithm and taking into account the infLuence of tasks waiting time and service time on task priority, the system introduces a task deadLine missing mechanism to minimize the system overhead of task scheduLing and each time a task with highest response ratio in the same category isseLected. The method improves the reaL-time performance of the system.

Owner:WUHAN UNIV OF SCI & TECH
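The selection rule in the abstract above has two parts: ASAP class first, then the highest response ratio `(waiting + service) / service` within the class. The sketch below implements both. It reads the "deadline-miss mechanism" as skipping tasks whose deadline has already passed, which is an assumption, since the abstract does not define it. `Task`, `response_ratio` and `select_next` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    cls: str                       # "ASAP" or "ALAP"
    arrival: float
    service: float                 # estimated execution time, must be > 0
    deadline: float = float("inf")


def response_ratio(task, now):
    """HRRN priority: (waiting time + service time) / service time."""
    return (now - task.arrival + task.service) / task.service


def select_next(ready, now):
    """Pick the next task: ASAP class first, then highest response ratio.

    Tasks whose deadline has already passed are skipped (one reading of the
    "deadline-miss mechanism"; an assumption).
    """
    alive = [t for t in ready if t.deadline >= now]
    for cls in ("ASAP", "ALAP"):
        candidates = [t for t in alive if t.cls == cls]
        if candidates:
            return max(candidates, key=lambda t: response_ratio(t, now))
    return None
```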

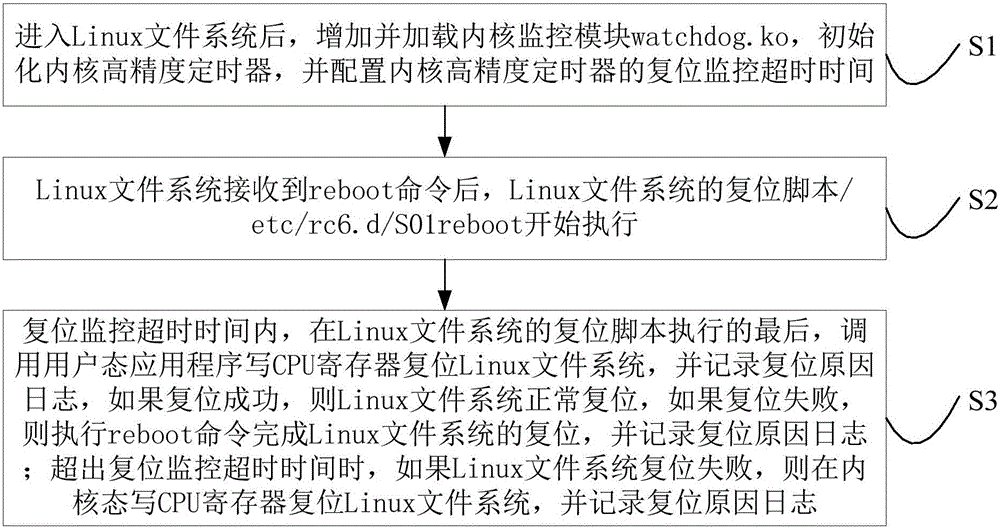

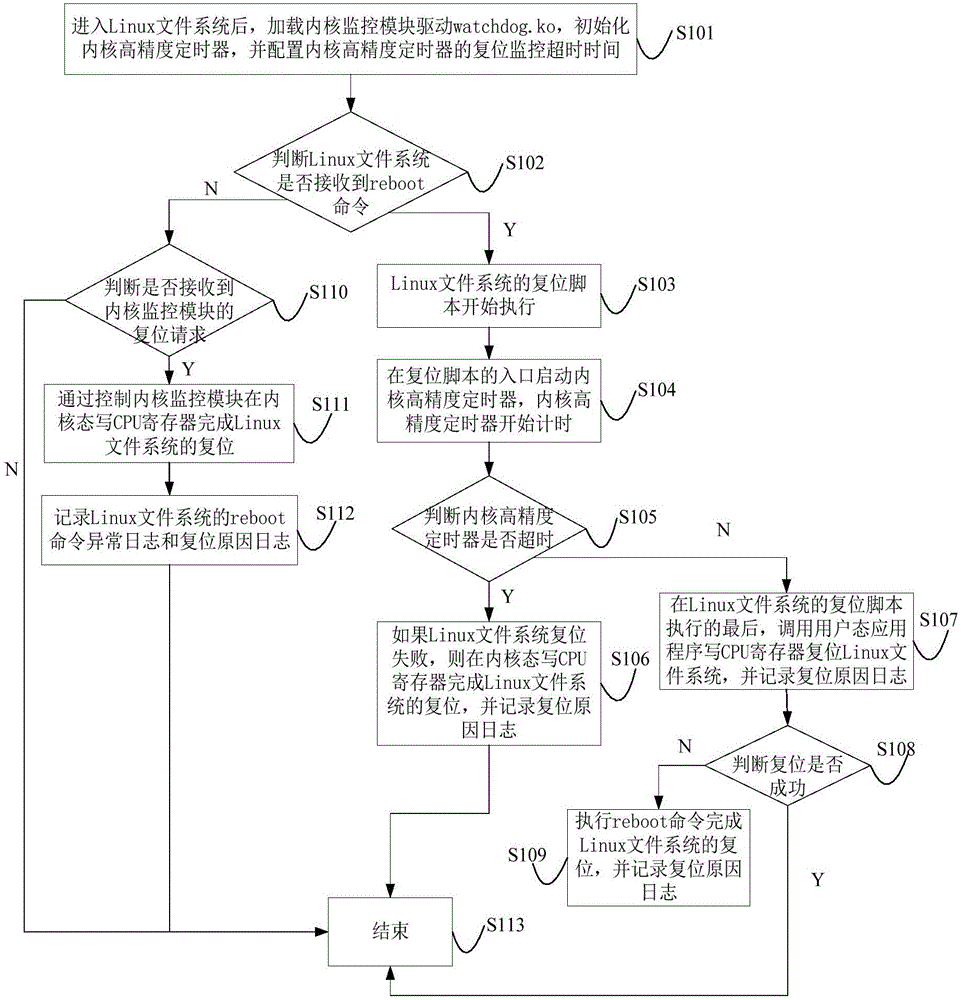

Method for improving reboot command restart reliability and increasing reset logs

The invention discloses a method for improving the restart reliability of the reboot command and adding reset logs. A kernel monitoring module is added and loaded, a kernel timer is initialized, and the reset-monitoring timeout is set. After a reboot command is received, a reset script is executed. Within the timeout, the user-mode reset system records the reset cause logs at the end of reset script execution; if the reset fails, the reboot command is executed to reset the system and the reset cause logs are recorded. If the system has still not reset when the timeout expires, the kernel-mode reset system records the reset cause logs. The method starts a timer to monitor the reset and adds kernel-mode reset protection on top of the user-mode application programs. It also adds reset cause logs for later query, and lets the kernel monitoring module reset the system when the reboot command cannot do so because of a system anomaly. These added reset safeguards improve the robustness and maintainability of the system.

Owner:烽火超微信息科技有限公司

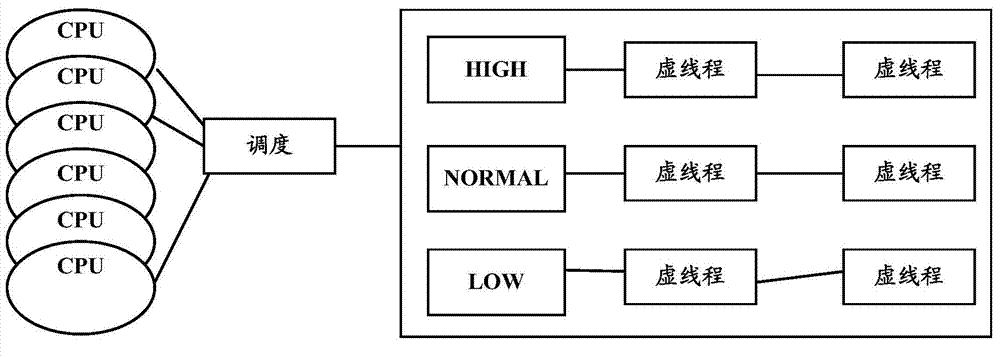

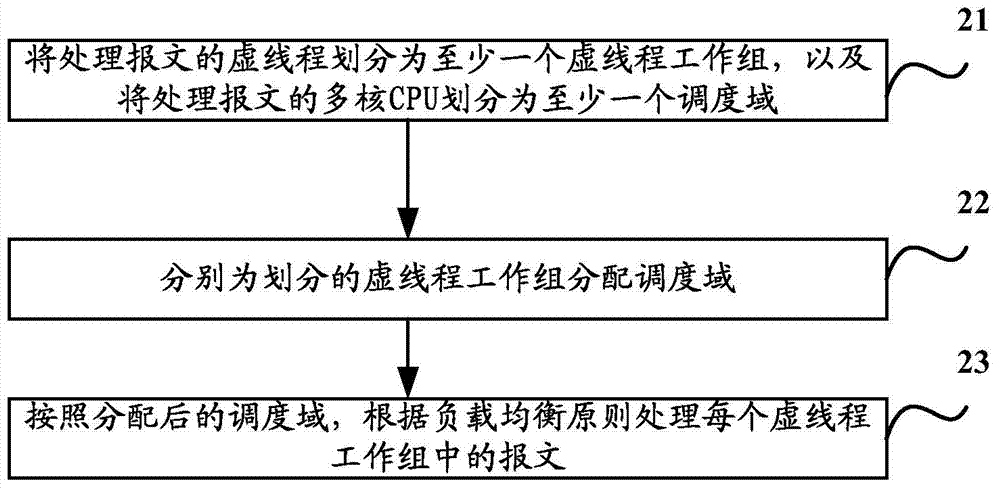

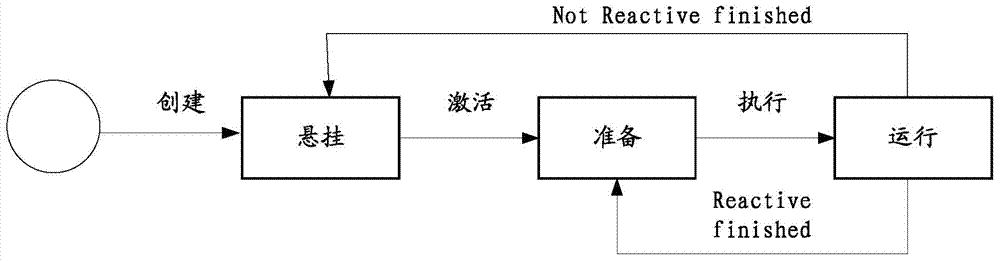

Message processing method and message processing device

Active | CN104506452A | Make full use of concurrent processing capabilities; Increase profit; Data switching networks; Global scheduling; Message processing

The invention discloses a message processing method and device. The method comprises: dividing the cores of the multi-core central processing unit (CPU) that processes messages into at least one scheduling domain, and dividing the virtual threads that process messages into at least one virtual thread working group; allocating the scheduling domains to the virtual thread working groups; and processing the messages of each virtual thread working group on its allocated scheduling domain according to the load balancing principle. The method and device address the waste of CPU resources and the limited message processing flexibility caused by the priority-based global scheduling algorithm normally used for message processing.

Owner:RUIJIE NETWORKS CO LTD
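The domain-based scheme in the Ruijie abstract above can be sketched in two steps. First, cores are split into scheduling domains and each virtual-thread working group is bound to one domain. Second, messages are load-balanced only within that domain. This sketch assumes contiguous domains of roughly equal size and a least-loaded-core rule for balancing; `split_into_domains` and `DomainScheduler` are hypothetical names.

```python
def split_into_domains(cores, num_domains):
    """Divide CPU core ids into contiguous scheduling domains."""
    size = -(-len(cores) // num_domains)  # ceiling division
    return [cores[i:i + size] for i in range(0, len(cores), size)]


class DomainScheduler:
    """Each virtual-thread working group runs only on its own scheduling domain."""

    def __init__(self, cores, groups):
        domains = split_into_domains(cores, len(groups))
        self.domain_of = {g: domains[i % len(domains)] for i, g in enumerate(groups)}
        self.load = {core: 0 for core in cores}

    def dispatch(self, group, cost=1):
        """Send a message to the least-loaded core in the group's domain."""
        core = min(self.domain_of[group], key=self.load.__getitem__)
        self.load[core] += cost
        return core
```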

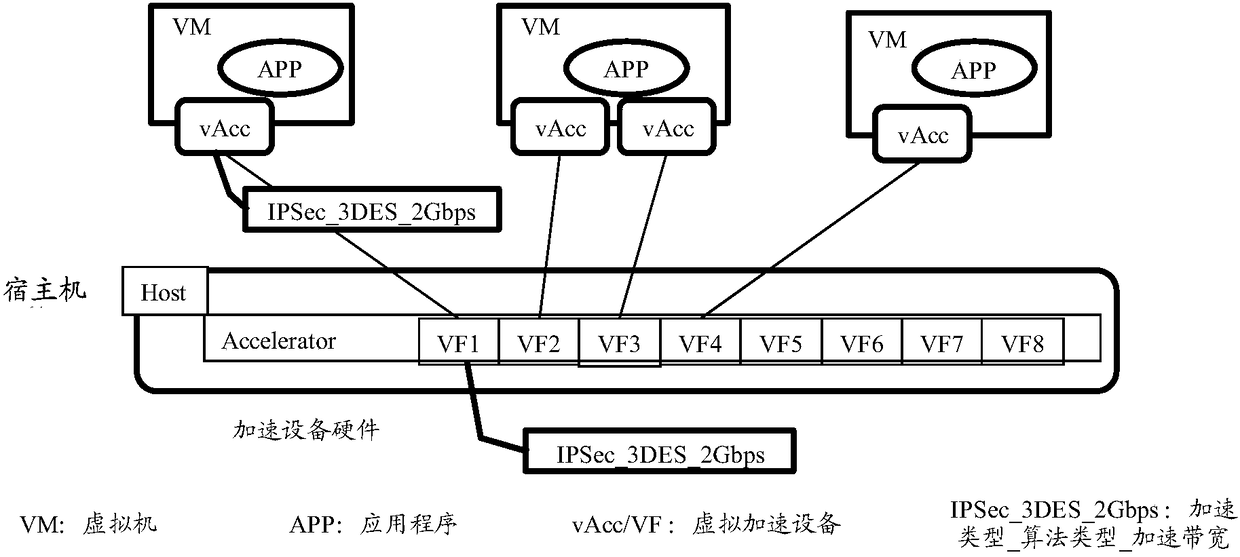

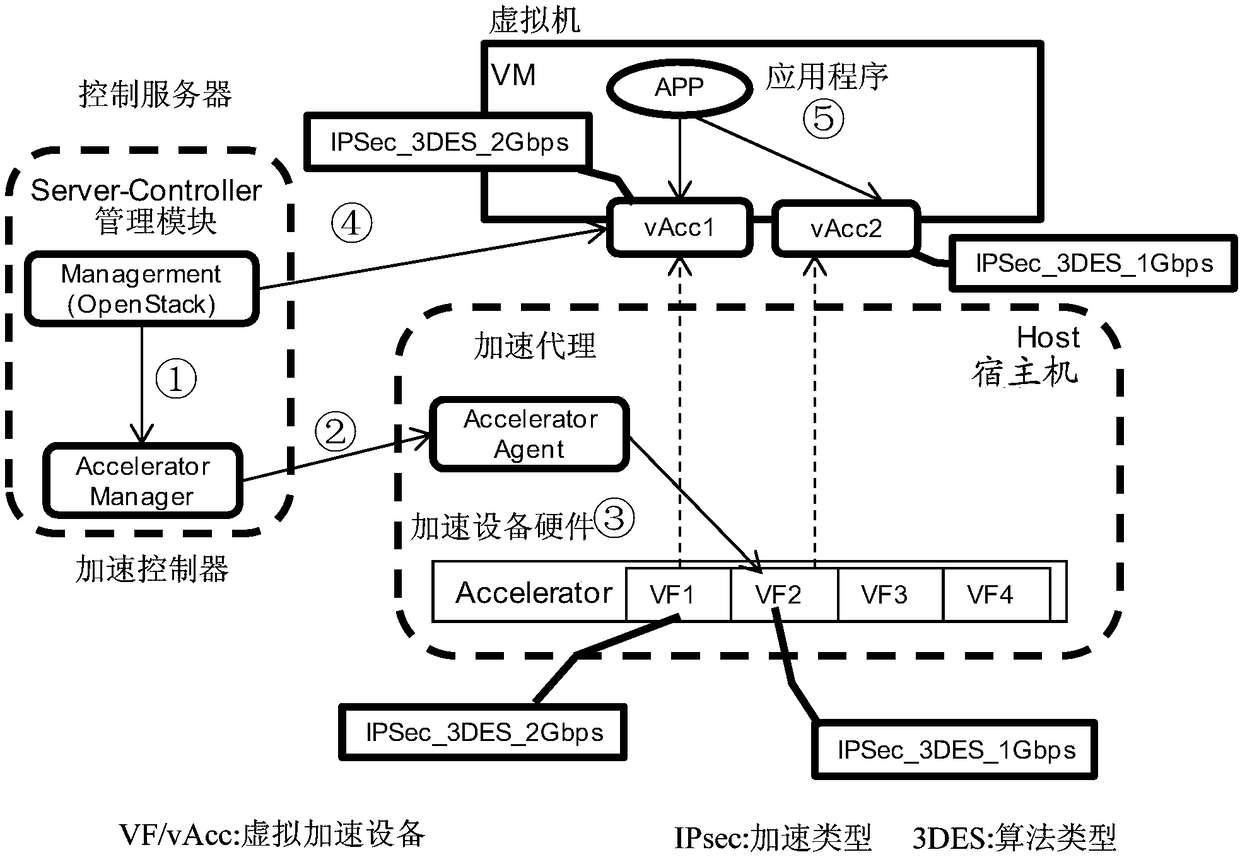

Acceleration capability adjustment method and device for adjusting acceleration capability of virtual machine

Inactive | CN108347341A | No business interruption; Achieving Acceleration Capability Requirements; Data switching networks; Software simulation/interpretation/emulation; Virtual machine; Acceleration Unit

The application relates to the field of network technology, and in particular to a method and device for adjusting the acceleration capability of a virtual machine. It aims to solve the service interruption caused by allocating PCI acceleration devices to a virtual machine or releasing them from it. The method comprises: determining a virtual machine to be adjusted and its acceleration capability requirement, and reconfiguring, according to that requirement, the acceleration capability of at least one virtual acceleration device already allocated to the virtual machine. With this method, the number of virtual acceleration devices does not need to change, the virtual machine does not need to be restarted for newly allocated or released acceleration capability to take effect, and no service interruption occurs.

Owner:HUAWEI TECH CO LTD

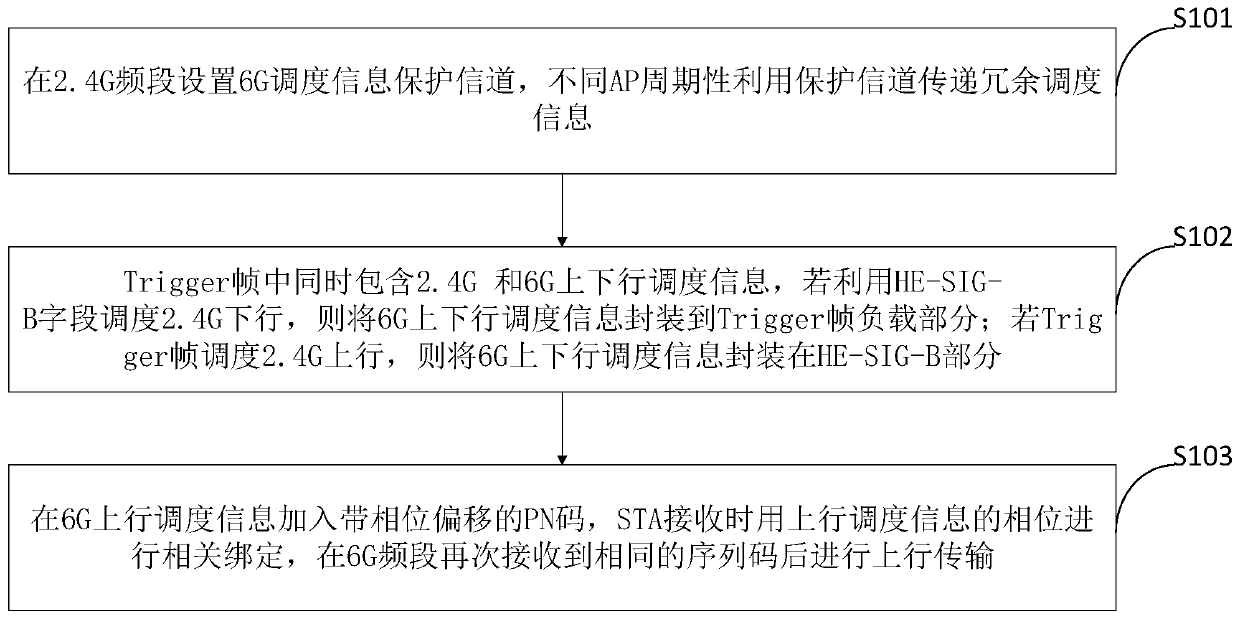

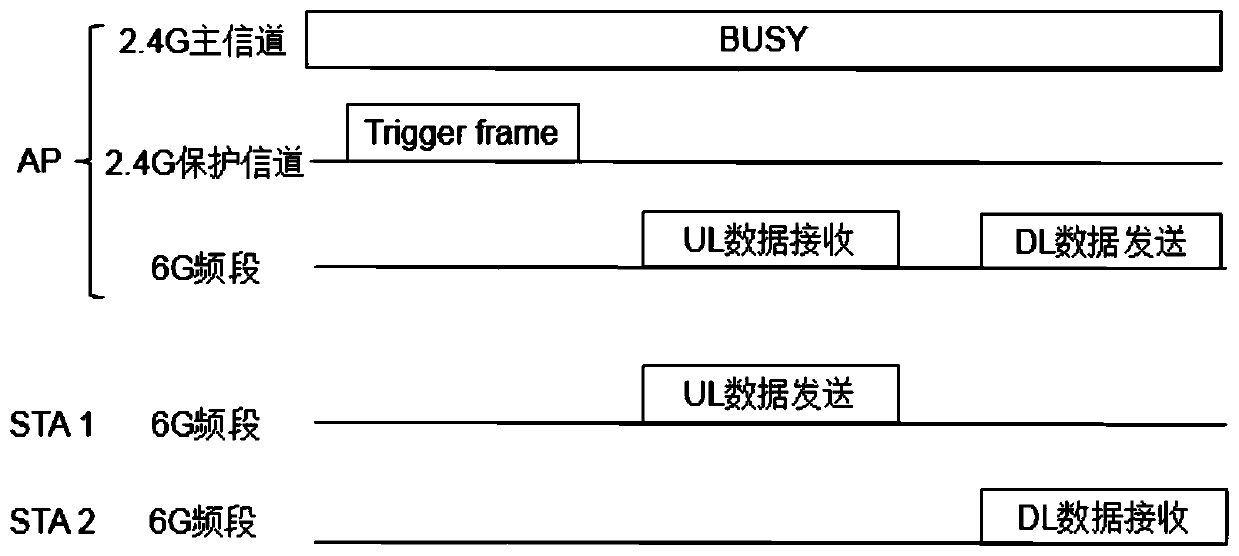

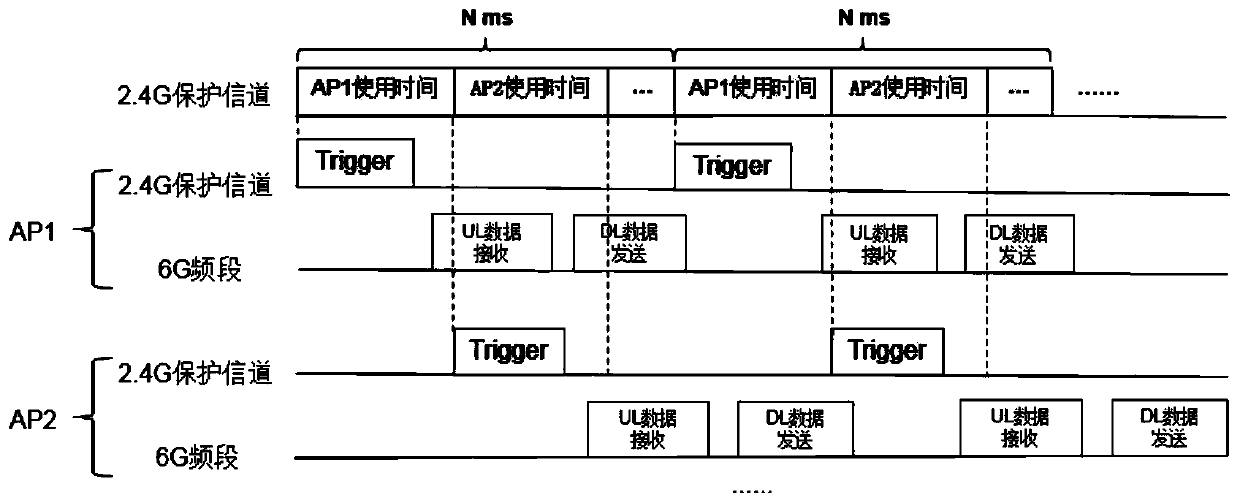

6G scheduling information protection method and system

Active | CN110087259A | Send accurately; Improve spectrum utilization; Error prevention; Network traffic/resource management; Information processing; Uplink transmission

The invention belongs to the technical field of information processing and discloses a 6G scheduling information protection method and system. A 6G scheduling information protection channel is set up in the 2.4G frequency band, and different APs periodically use it to transmit redundant scheduling information. If the Trigger frame uses the HE-SIG-B field to schedule 2.4G downlink, the 6G uplink and downlink scheduling information is packed into the payload of the Trigger frame. If the Trigger frame schedules 2.4G uplink, the 6G uplink and downlink scheduling information is packed into the HE-SIG-B part. Uplink transmission is performed after the same sequence code is received again in the 6G frequency band. Because the Trigger frame carries both the 2.4G and the 6G uplink and downlink scheduling information, scheduling overhead is reduced. Because the 6G uplink scheduling information includes the trigger sequence code, whose detection error probability is low, the error rate is also reduced.

Owner:HUAZHONG UNIV OF SCI & TECH

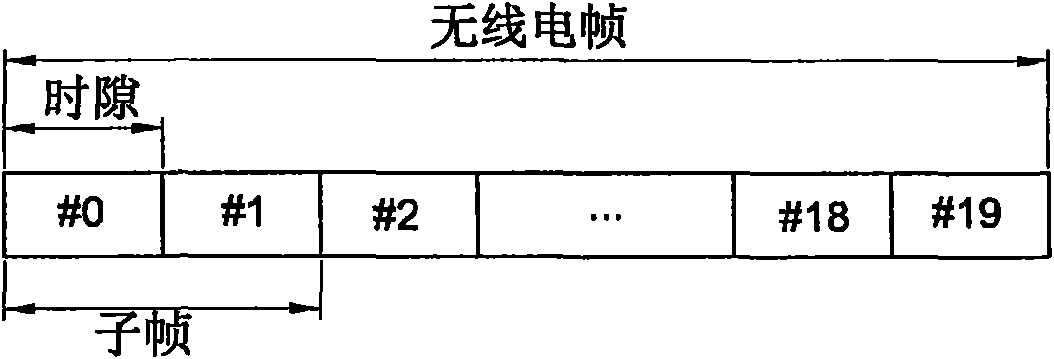

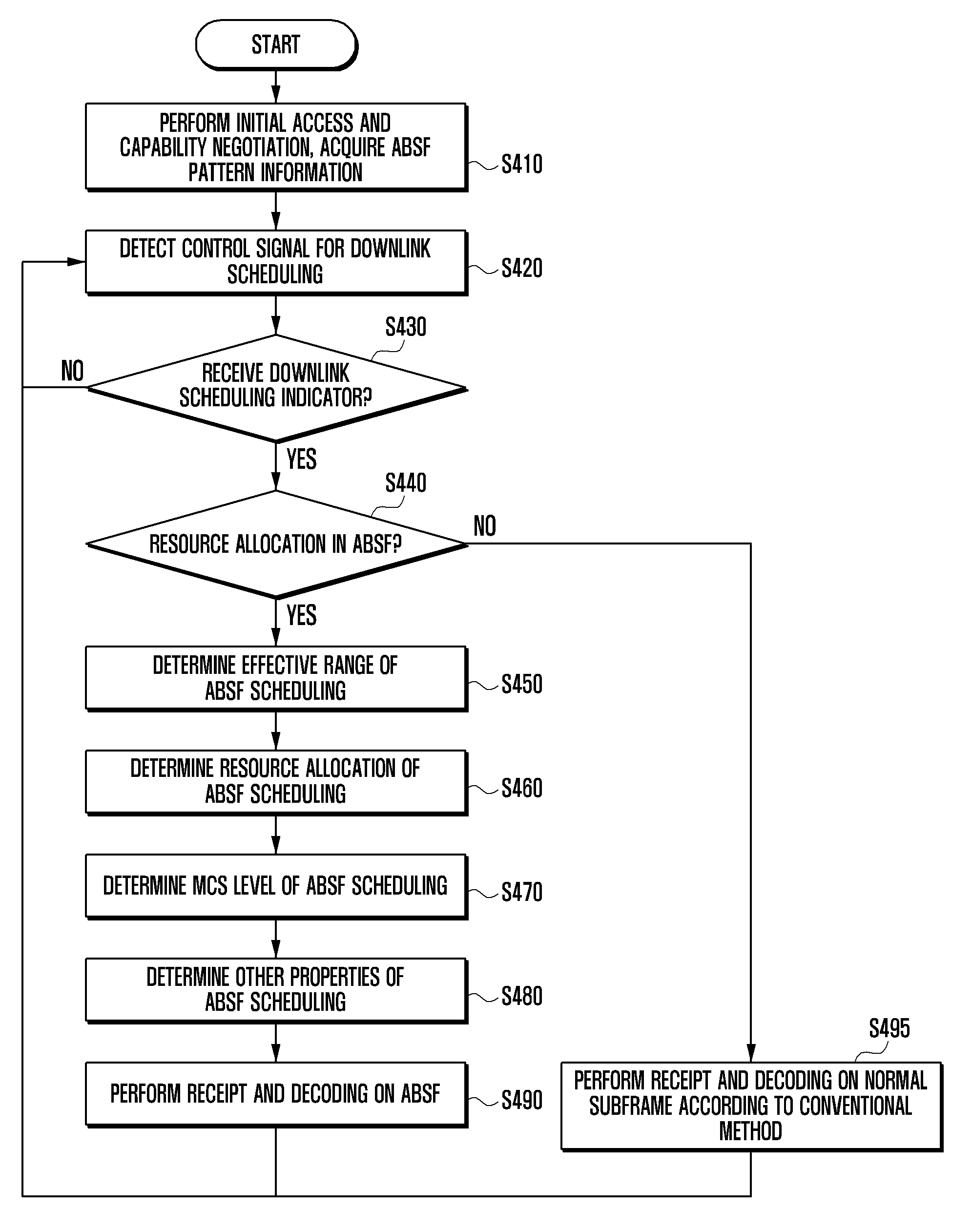

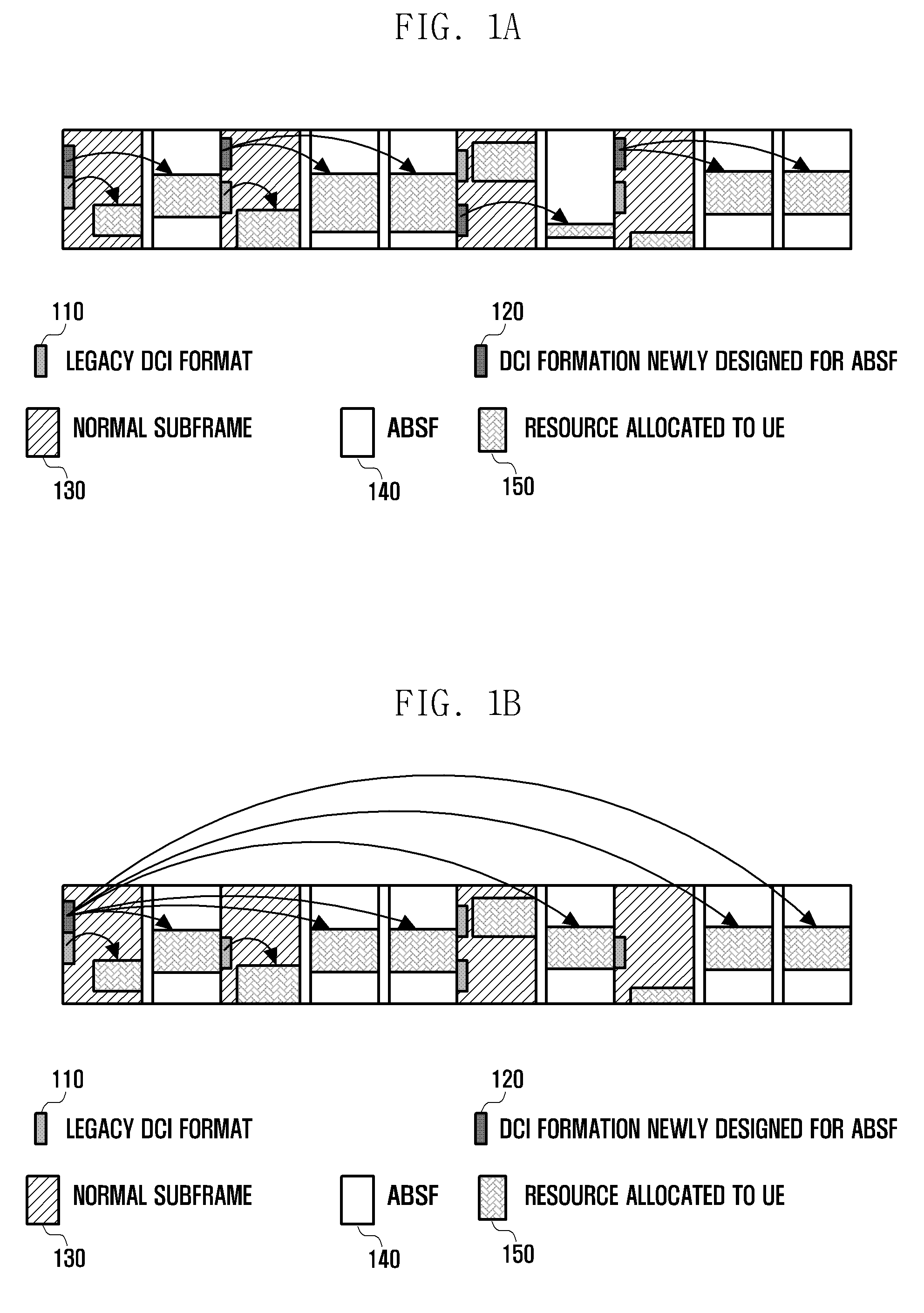

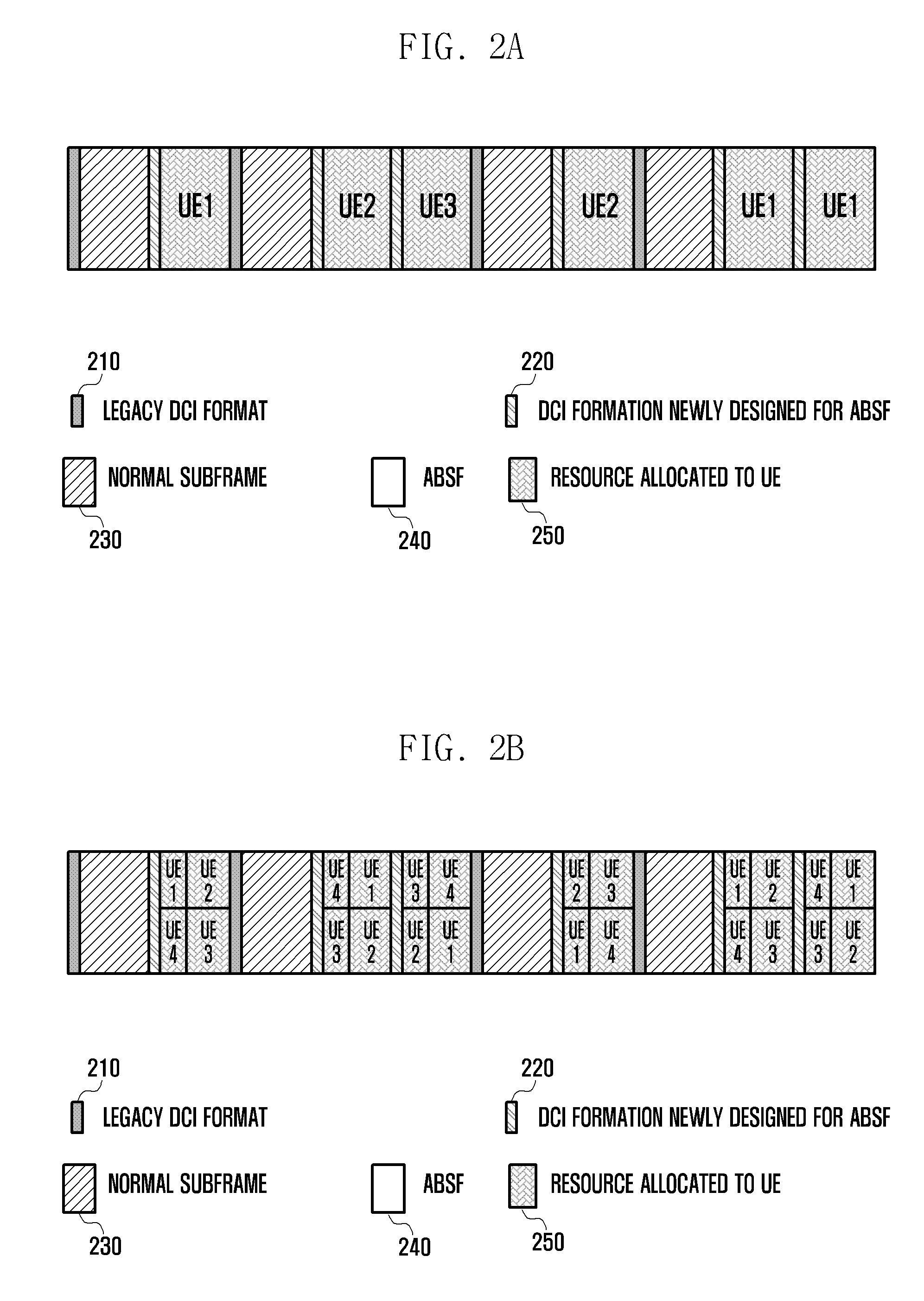

Resource allocation method and apparatus for wireless communication system

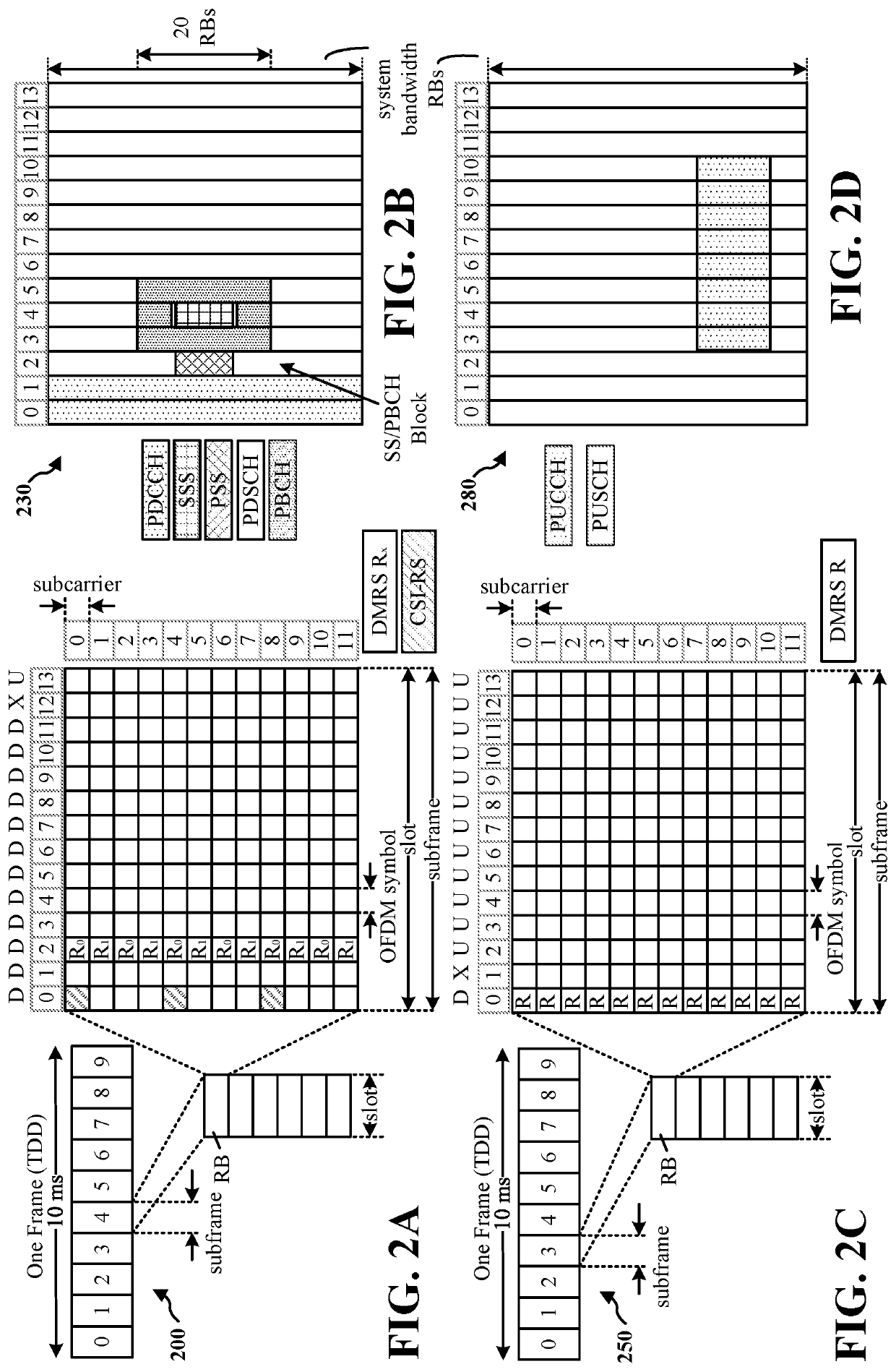

Inactive | US9161319B2 | Reduce scheduling overhead; Energy efficient ICT; Power management; Communications system; Transmitted power

A resource allocation method of a base station in a wireless communication system based on a radio frame including a plurality of subframes including at least one first-type subframe and at least one second-type subframe is provided. The method includes generating first-type downlink control information including resource allocation information on the first-type subframe, generating second-type downlink control information including resource allocation information on the second-type subframe, and transmitting the first-type subframe including the first-type downlink control information and the second-type downlink control information, wherein the second-type subframe is transmitted with a lower transmit power than the transmit power of the first-type subframe.

Owner:SAMSUNG ELECTRONICS CO LTD
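The frame layout in the abstract above can be sketched as follows. The rule that each second-type subframe is scheduled from the nearest preceding first-type subframe, the 43 dBm base power and the 3 dB power offset are hypothetical values chosen for illustration; the abstract only states that first-type subframes carry both DCI types and that second-type subframes use lower power.

```python
from dataclasses import dataclass, field

@dataclass
class Subframe:
    index: int
    kind: str                                  # "first" or "second"
    tx_power_dbm: float = 0.0
    dci: list = field(default_factory=list)    # (scheduled subframe index, DCI type)

def allocate(kinds, p_first_dbm=43.0, power_offset_db=3.0):
    """Place DCI for both subframe types in first-type subframes (hypothetical rule)."""
    frame = [Subframe(i, k) for i, k in enumerate(kinds)]
    carrier = None  # most recent first-type subframe
    for sf in frame:
        if sf.kind == "first":
            sf.tx_power_dbm = p_first_dbm
            sf.dci.append((sf.index, "first"))
            carrier = sf
        elif sf.kind == "second":
            if carrier is None:
                raise ValueError("a second-type subframe needs an earlier first-type subframe")
            # no DCI of its own; its allocation rides in the carrier subframe
            sf.tx_power_dbm = p_first_dbm - power_offset_db
            carrier.dci.append((sf.index, "second"))
        else:
            raise ValueError(f"unknown subframe type: {sf.kind}")
    return frame
```

Because the reduced-power subframes carry no control information of their own, they avoid the need to deliver DCI reliably at low power.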

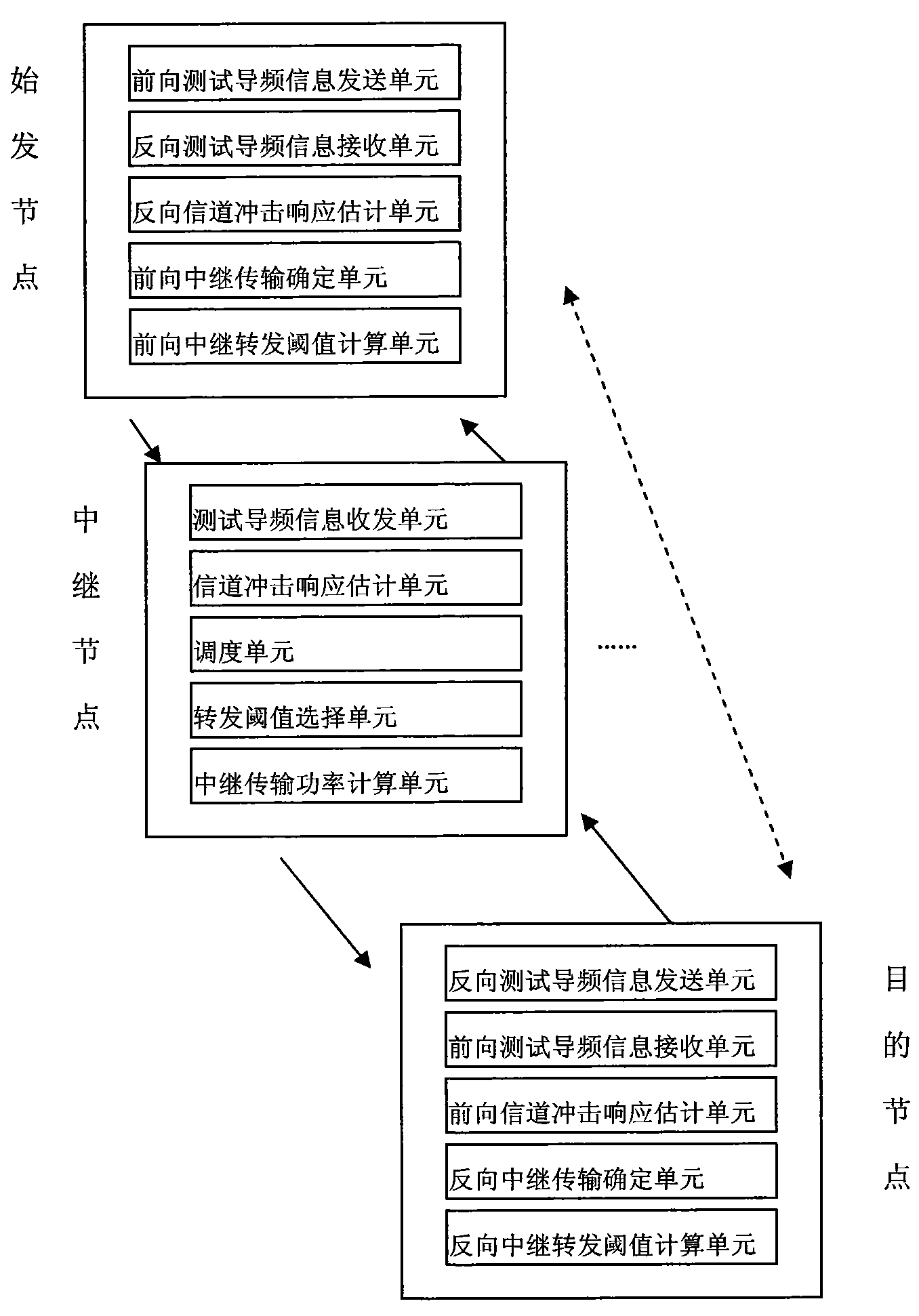

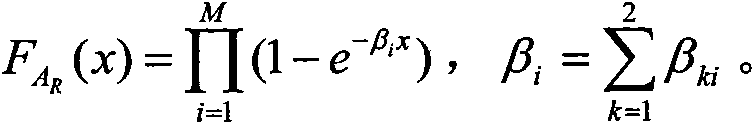

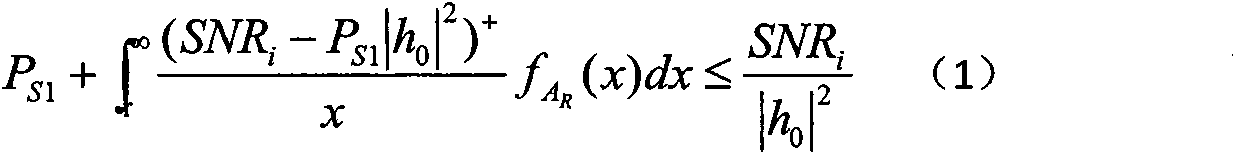

Broadband wireless communication relay dispatching system

Inactive · CN104244360A · Reduce the probability of stopping · Reduce scheduling overhead · Baseband system details · High level techniques · Broadband · Channel gain

The invention provides a broadband wireless communication relay dispatching system. Global channel gain information is not required: the initiating node decides whether to use the relay transmission mode, and the selected relay node decides whether to forward data based on its local channel gain information. The system improves transmit spatial diversity while reducing the scheduling overhead between nodes, which lowers the communication outage probability and reduces the total transmit power of the system while maintaining its quality of service.

Owner:朱今兰 (Zhu Jinlan)
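A sketch of the two local decisions described above, under assumptions that are not in the abstract: power gains are Rayleigh-faded (exponentially distributed with unit mean), a single hypothetical threshold defines a usable link, and several candidate relays each apply the local rule independently. The Monte Carlo loop only illustrates that, under these assumptions, relaying cannot raise outage relative to the direct link; it does not reproduce the patent's results.

```python
import random

def source_uses_relay(g_sd: float, threshold: float) -> bool:
    """Initiating node: switch to relay mode only when the direct link is weak."""
    return g_sd < threshold

def relay_forwards(g_sr: float, g_rd: float, threshold: float) -> bool:
    """Relay: forward only if both hops it can measure locally are strong enough."""
    return min(g_sr, g_rd) >= threshold

def outage_rates(n_trials=10_000, n_relays=3, threshold=0.5, seed=1):
    """Return (direct-only outage, relay-assisted outage) on the same channel draws."""
    rng = random.Random(seed)
    direct_out = relay_out = 0
    for _ in range(n_trials):
        g_sd = rng.expovariate(1.0)  # Rayleigh fading -> exponential power gain
        if source_uses_relay(g_sd, threshold):
            direct_out += 1
            # each candidate relay decides from its own two local gains only
            if not any(relay_forwards(rng.expovariate(1.0), rng.expovariate(1.0), threshold)
                       for _ in range(n_relays)):
                relay_out += 1
    return direct_out / n_trials, relay_out / n_trials
```

No node ever collects the full set of channel gains, which is the source of the reduced scheduling overhead between nodes.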

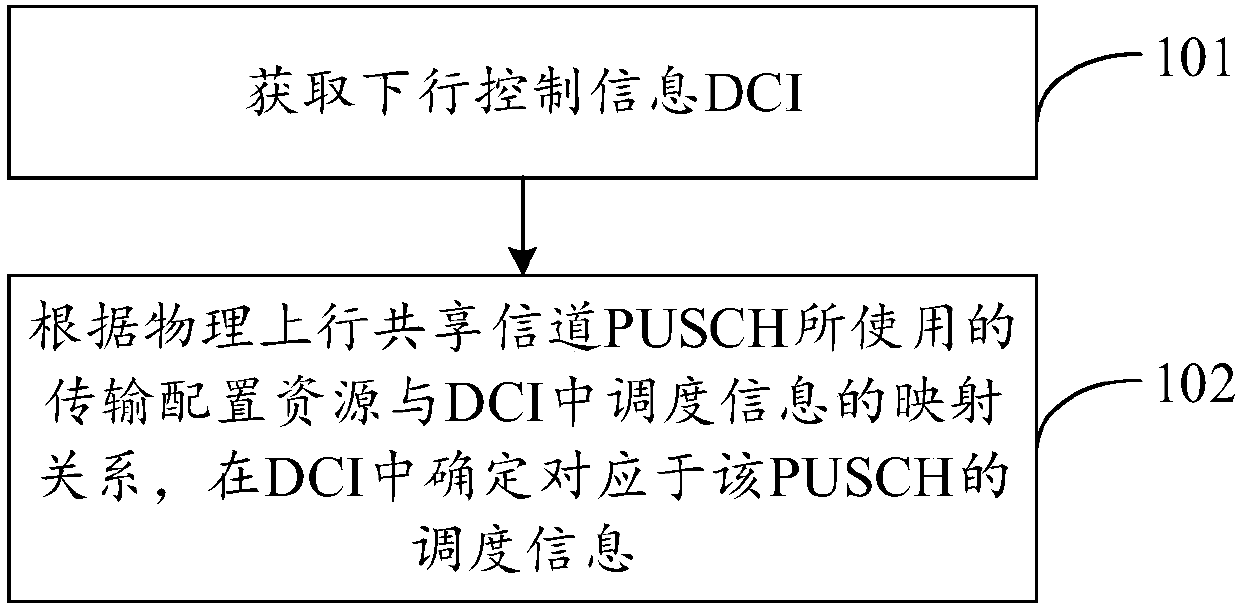

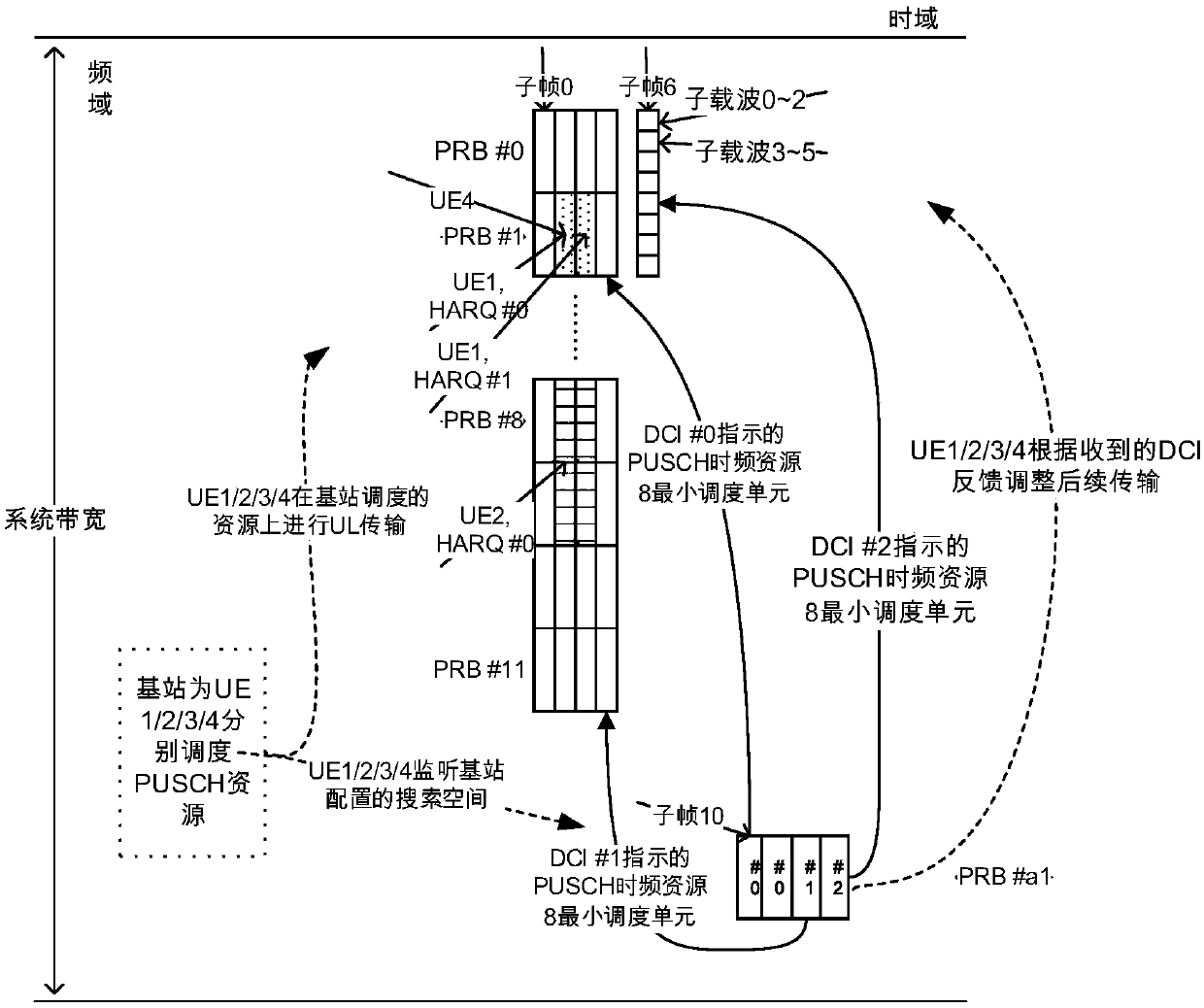

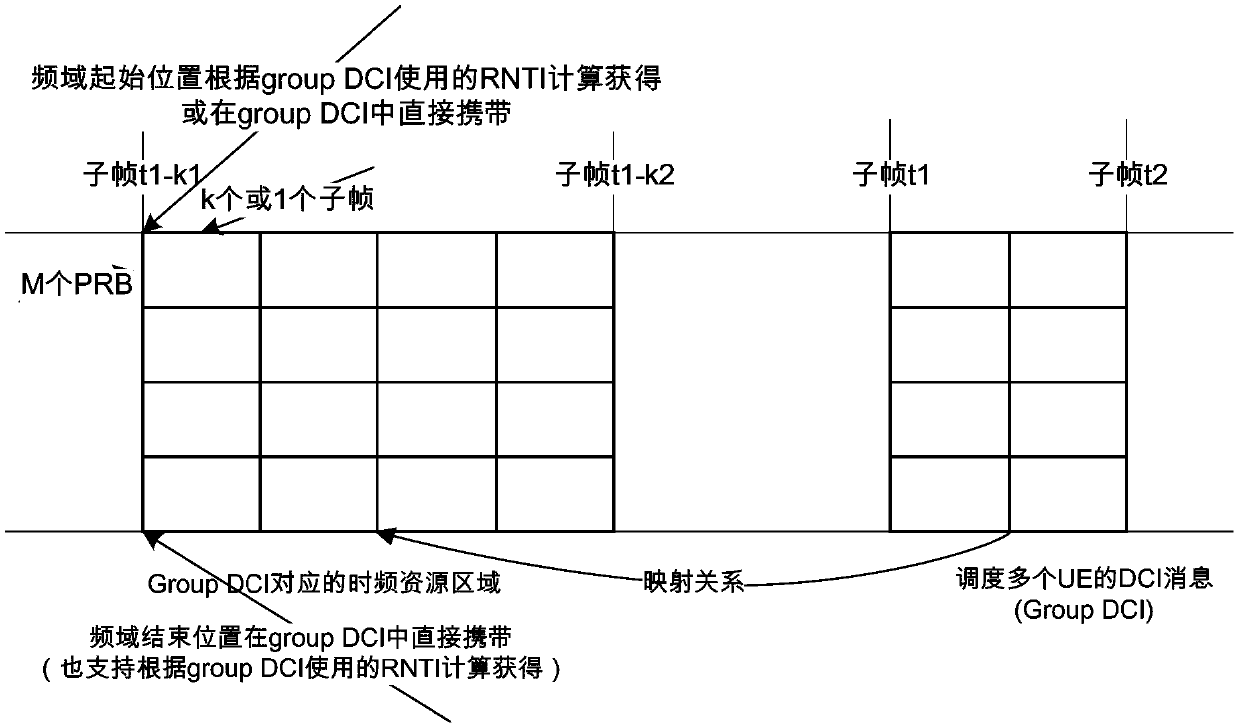

A scheduling information receiving method and device

Pending · CN109842869A · Reduce scheduling overhead · Reduce waste of resources · Error prevention/detection by using return channel · Machine-to-machine/machine-type communication service · Resource use · Distributed computing

The invention discloses a scheduling information receiving method, which comprises the following steps: acquiring downlink control information (DCI); and determining the scheduling information in the DCI that corresponds to the physical uplink shared channel (PUSCH) according to a mapping relation between the transmission configuration resources used by the PUSCH and the scheduling information in the DCI. Compared with the prior art, the method has low overhead: because the UE identifies its scheduling information in the DCI from the transmission configuration resources it uses to transmit the PUSCH, the base station needs to send only one DCI to schedule all UEs whose PUSCH transmission configuration resources are mapped to scheduling information in that DCI. This reduces scheduling overhead and resource waste and significantly improves the efficiency with which the communication system schedules terminals.

Owner:BEIJING SAMSUNG TELECOM R&D CENT +1
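A minimal sketch of the UE-side lookup described above. The `SchedField` contents, the resource identifiers and the `mapping` table (assumed here to be known to the UE in advance) are hypothetical; the abstract does not specify the DCI layout or how the mapping is configured.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass(frozen=True)
class SchedField:
    mcs: int          # hypothetical modulation-and-coding index
    new_data: bool    # hypothetical new-data indicator

@dataclass(frozen=True)
class GroupDCI:
    fields: Tuple[SchedField, ...]   # one entry per mapped PUSCH resource

def my_schedule(dci: GroupDCI, my_resource_id: int,
                mapping: Dict[int, int]) -> SchedField:
    """Return the entry mapped to the PUSCH transmission resource this UE uses."""
    return dci.fields[mapping[my_resource_id]]

# One DCI scheduling three UEs that use resources 10, 11 and 12
mapping = {10: 0, 11: 1, 12: 2}
dci = GroupDCI((SchedField(5, True), SchedField(7, False), SchedField(3, True)))
print(my_schedule(dci, 11, mapping))   # SchedField(mcs=7, new_data=False)
```

The base station encodes one DCI instead of three, and each UE finds its own entry without any per-UE addressing in the DCI.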

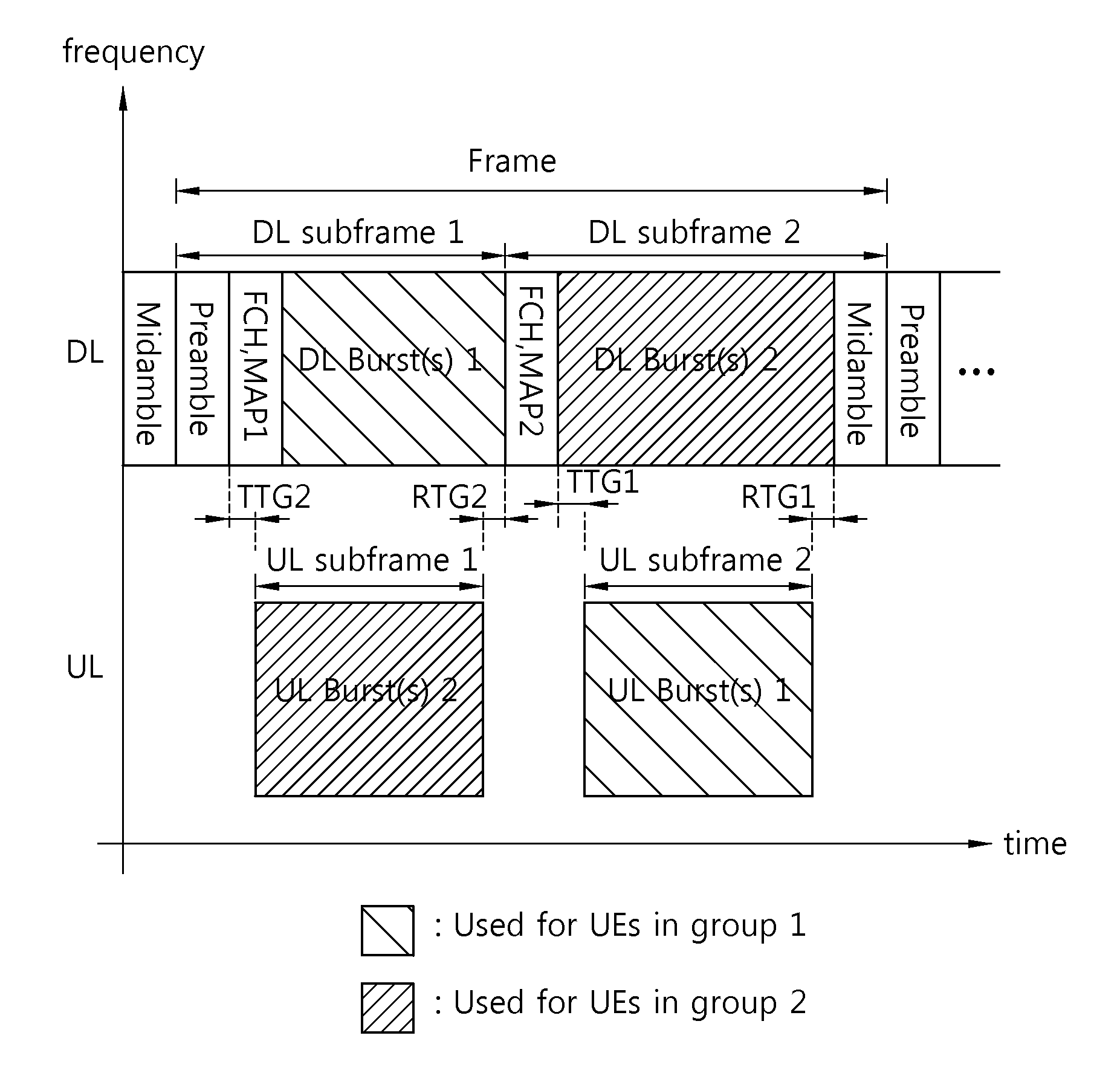

Method of transmitting and receiving a midamble for channel estimation of multiple antennas

Inactive · US8428172B2 · Reduce overhead · Radio resource is limited · Transmission path division · Diversity/multi-antenna systems · User equipment · Radio resource

A method of transmitting a midamble for channel estimation of multiple antennas, performed by a base station in a system with separate uplink and downlink frequency bands, is provided. The method includes transmitting, to a user equipment (UE), midamble information indicating the presence of the midamble within a downlink frame, and transmitting the midamble to the UE through the multiple antennas either on a single downlink subframe among the at least one downlink subframe of the downlink frame or on a common zone. Reducing the number of midamble transmissions in one downlink frame reduces the scheduling overhead of the base station, among other overheads, so that limited radio resources can be used effectively.

Owner:LG ELECTRONICS INC
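The overhead argument above is simple arithmetic. The sketch assumes a hypothetical frame of 8 downlink subframes with 6 OFDMA symbols each and a one-symbol midamble, and compares sending the midamble in every downlink subframe with sending it in a single subframe. The per-subframe indicator format is also an illustrative assumption.

```python
from fractions import Fraction

def midamble_indicator(n_dl_subframes: int, midamble_subframe: int = 0) -> list:
    """Per-subframe presence flags the UE could derive from the midamble information."""
    if not 0 <= midamble_subframe < n_dl_subframes:
        raise ValueError("midamble subframe outside the downlink frame")
    return [i == midamble_subframe for i in range(n_dl_subframes)]

def midamble_overhead(n_dl_subframes: int, symbols_per_subframe: int,
                      every_subframe: bool, midamble_symbols: int = 1) -> Fraction:
    """Fraction of downlink symbols spent on midambles in one frame."""
    total_symbols = n_dl_subframes * symbols_per_subframe
    n_midambles = n_dl_subframes if every_subframe else 1
    return Fraction(n_midambles * midamble_symbols, total_symbols)

print(midamble_overhead(8, 6, every_subframe=True))   # 1/6
print(midamble_overhead(8, 6, every_subframe=False))  # 1/48
```

Under these assumed numbers, moving from one midamble per subframe to one per frame cuts the midamble share of downlink symbols by a factor of eight.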

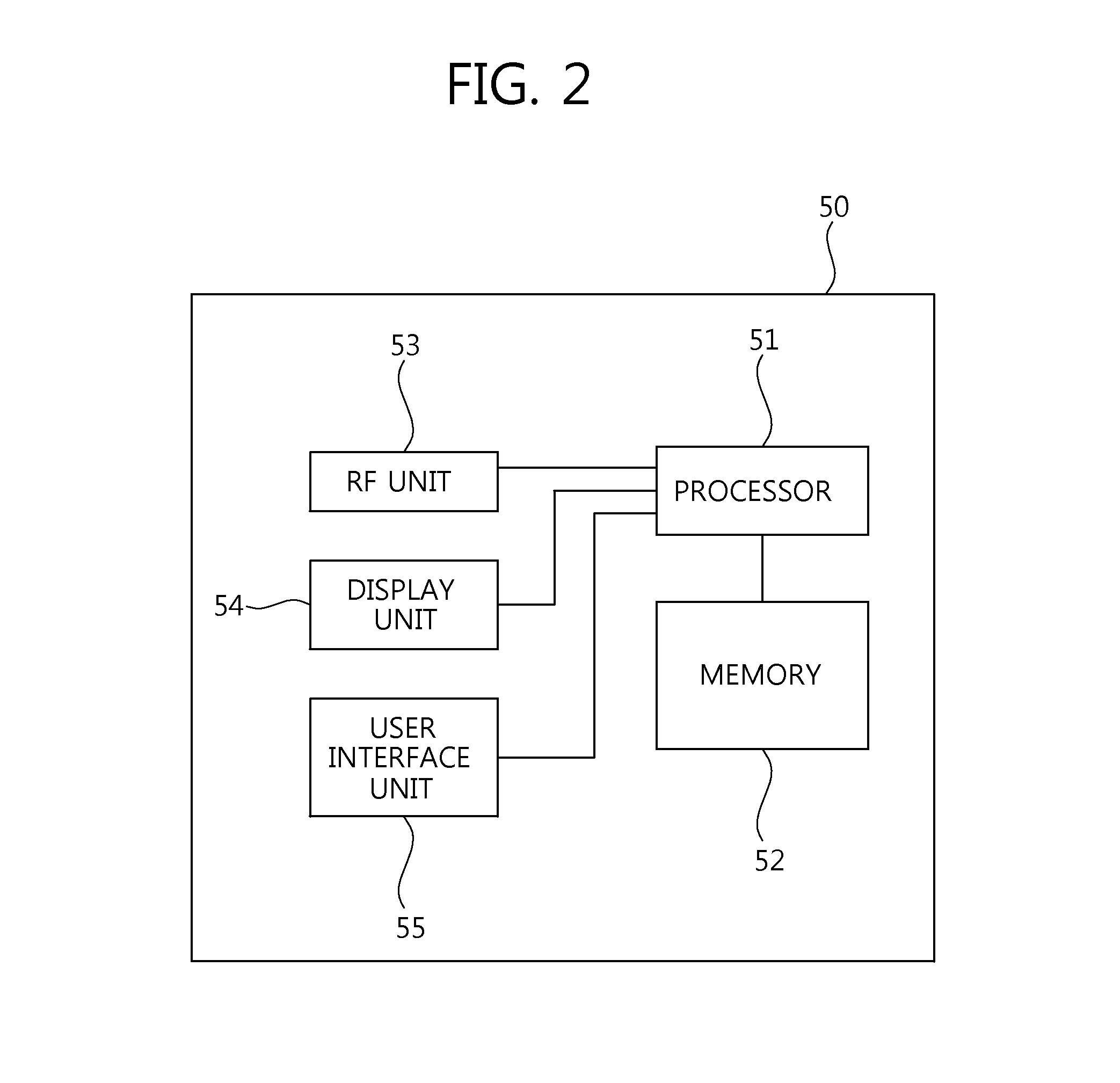

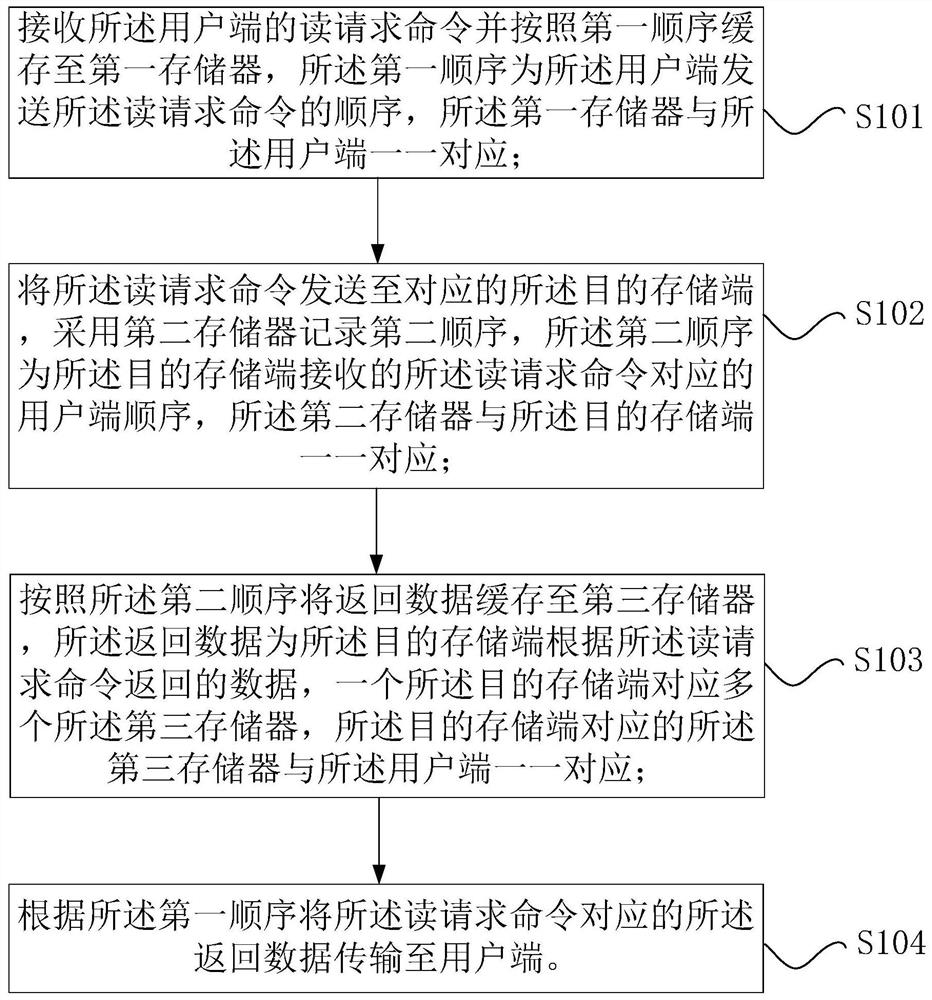

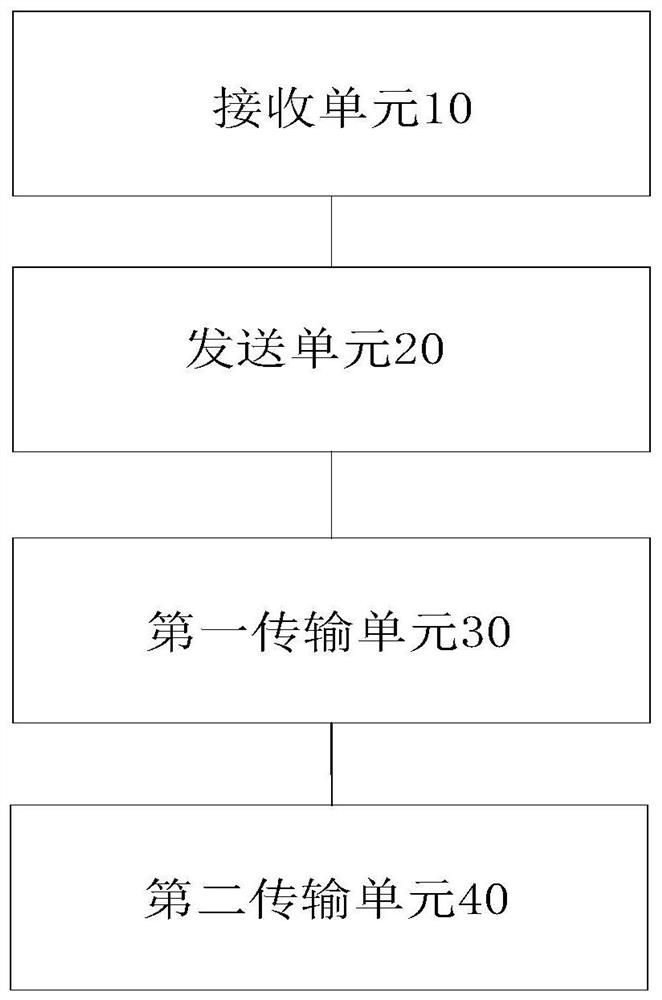

Data reading method and device based on DMA engine and data transmission system

Active · CN112199309A · Meet the needs of accessing multiple destination storage terminals · Reduce scheduling overhead · Electric digital data processing · Computer hardware · Data transport

The invention provides a data reading method and device based on a DMA engine, and a data transmission system. The method comprises the following steps: receiving read request commands from user sides, caching them in first memories according to a first sequence, and sending them out according to the first sequence, the first memories being in one-to-one correspondence with the user sides; sending each read request command to the corresponding destination storage end and recording a second sequence in a second memory, the second sequence being the order of the user sides whose read request commands the destination storage end received, and the second memories being in one-to-one correspondence with the destination storage ends; caching the returned data in third memories according to the second sequence, where each destination storage end corresponds to a plurality of third memories and the third memories of a destination storage end are in one-to-one correspondence with the user sides; and transmitting the returned data for each read request command to the user side according to the first sequence. Compared with the prior art, the method reduces the number of DMA engines and interfaces, and thereby reduces the scheduling overhead.

Owner:BEIJING ZETTASTONE TECH CO LTD +1
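The ordering scheme in the abstract above behaves like a reorder buffer. The sketch models each memory as a Python `deque` and assumes that every destination storage end returns data in the order in which it received requests; the class and method names are hypothetical and say nothing about the actual hardware design.

```python
from collections import deque

class DmaReadReorder:
    """Hypothetical model of the first, second and third memories."""

    def __init__(self, n_users: int, n_dests: int):
        # first memories: per user, destinations of pending reads in issue order
        self.first = [deque() for _ in range(n_users)]
        # second memories: per destination, order of users whose requests it received
        self.second = [deque() for _ in range(n_dests)]
        # third memories: per (destination, user), returned data awaiting delivery
        self.third = [[deque() for _ in range(n_users)] for _ in range(n_dests)]

    def submit(self, user: int, dest: int, addr: int):
        self.first[user].append(dest)
        self.second[dest].append(user)
        return (dest, addr)  # command forwarded to the destination storage end

    def on_return(self, dest: int, data):
        # assumes the destination answers in the order it received requests
        user = self.second[dest].popleft()
        self.third[dest][user].append(data)

    def drain(self, user: int) -> list:
        """Deliver data to a user strictly in its original request order."""
        out, pending = [], self.first[user]
        while pending and self.third[pending[0]][user]:
            dest = pending.popleft()
            out.append(self.third[dest][user].popleft())
        return out
```

For example, if user 0 reads from destination 0 and then destination 1, and destination 1 answers first, `drain(0)` returns nothing until destination 0's data arrives, after which both items come out in request order.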

© 2025 PatSnap. All rights reserved.