Method for generating 3D clothing animation driven by the content of a single video

A content-driven three-dimensional animation technology, applied in animation production, image data processing, instruments and similar fields. It addresses the difficulty of generating animation and offers simple operation, improved fidelity, and a wide range of application.



Detailed Description of the Embodiments

[0019] The present invention will be further described below in conjunction with the accompanying drawings, so that those of ordinary skill in the art can implement it after referring to this specification.

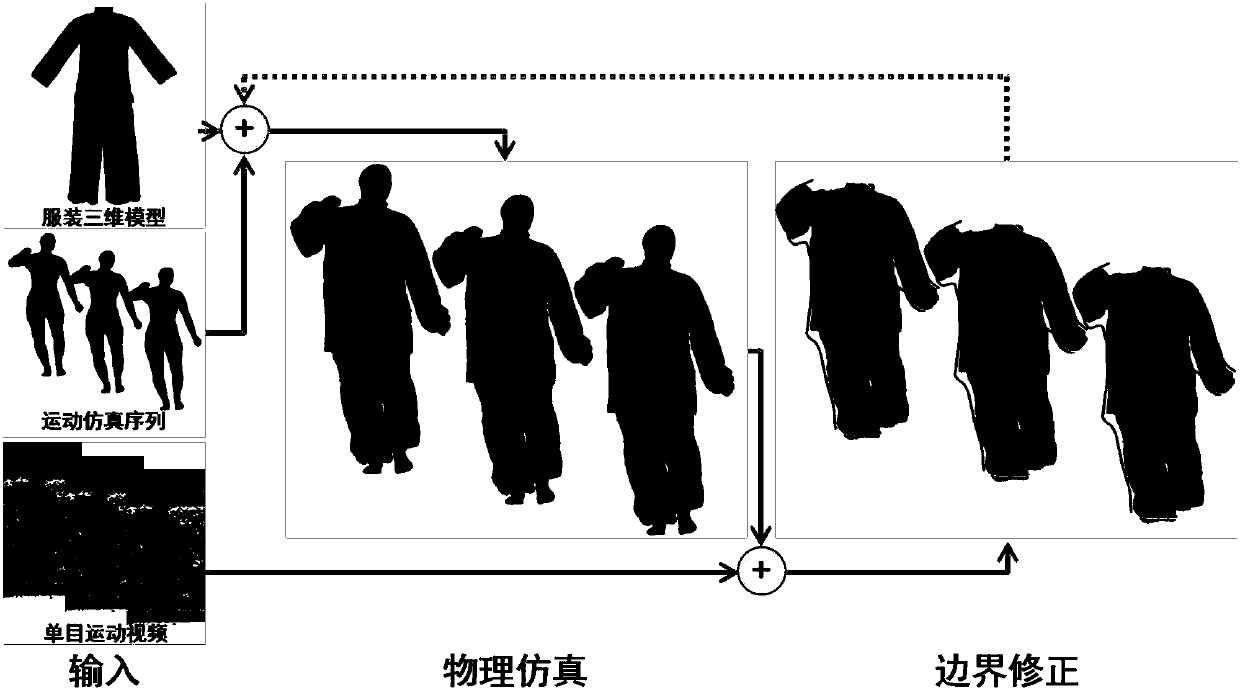

[0020] Figure 1 shows the overall design framework of the method of the present invention. From the input video images, the boundary of the clothing in the video is first extracted, and the sequence of human body models corresponding to the video content is estimated. A 3D clothing animation resembling the video content is then generated iteratively, frame by frame. Each frame's iteration consists of two steps: generation of the initial shape of the 3D clothing animation, and correction of that shape.
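The per-frame loop described above can be sketched as follows. This is a minimal illustration, not the patented implementation: `animate_clothing`, `init_shape`, `correct_shape` and `rest_garment` are hypothetical names, meshes are assumed to be `(N, 3)` vertex arrays, and the assumption that each frame's initial shape starts from the previous frame's corrected result is ours, inferred from the word "iteratively". The actual initial-shape and correction algorithms are not given in this passage, so they are passed in as callables.

```python
from typing import Callable, List, Sequence

import numpy as np

# Hypothetical representation: a garment or body is an (N, 3) array of vertex positions.
Mesh = np.ndarray


def animate_clothing(
    boundaries: Sequence[np.ndarray],
    body_models: Sequence[Mesh],
    rest_garment: Mesh,
    init_shape: Callable[[Mesh, Mesh], Mesh],
    correct_shape: Callable[[Mesh, Mesh, np.ndarray], Mesh],
) -> List[Mesh]:
    """Generate one garment mesh per video frame.

    init_shape and correct_shape are hypothetical stand-ins for the two
    per-frame steps (initial shape generation, shape correction).
    """
    if len(boundaries) != len(body_models):
        raise ValueError("need one clothing boundary per body model")
    frames: List[Mesh] = []
    previous = rest_garment  # assumed starting garment before the first frame
    for boundary, body in zip(boundaries, body_models):
        # Step A: initial 3D clothing shape for this frame
        # (assumed here to start from the previous frame's result).
        guess = init_shape(previous, body)
        # Step B: correct that shape using the body model and the video clothing boundary.
        corrected = correct_shape(guess, body, boundary)
        frames.append(corrected)
        previous = corrected
    return frames
```

Passing the two steps as callables keeps the frame-by-frame driver independent of whichever shape-generation and correction methods are plugged in.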

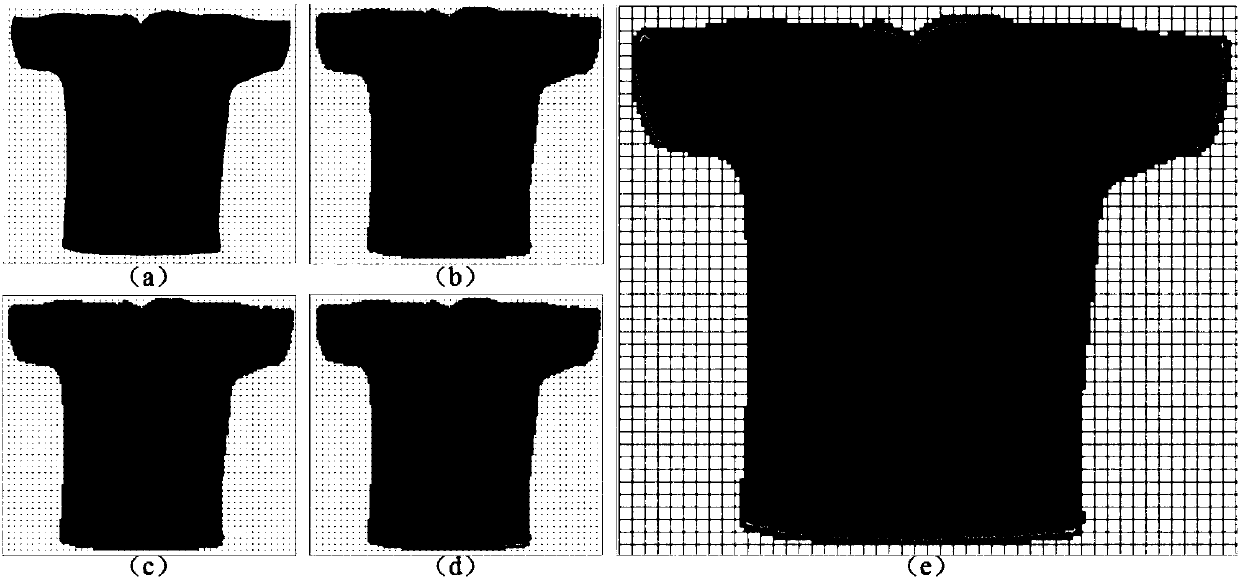

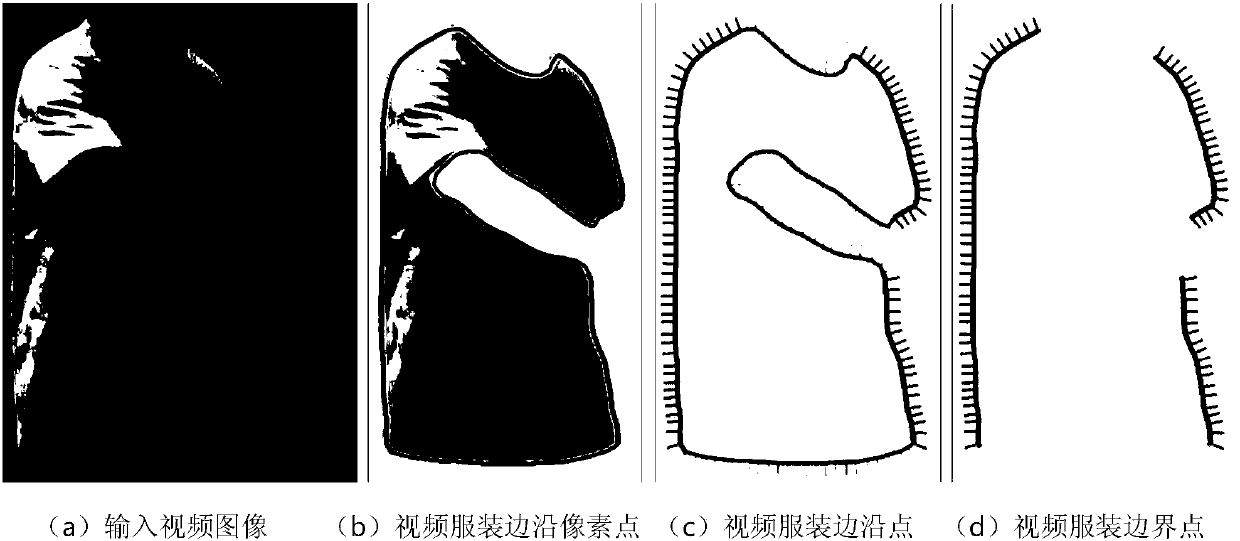

[0021] Step 1: extraction of the clothing boundary from the video, and generation of the 3D model sequence of the unclothed human body. To extract the clothing boundary, mature existing tools are first used to segment the clothing pixel region from each video image, and the clothing boundary is then extracted...
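The passage does not name the segmentation tool or say how the boundary is taken from its output. Assuming the tool yields a binary clothing mask per frame, one simple way to extract the boundary (an illustrative choice, not necessarily the one used in the patent) is to keep mask pixels that have at least one 4-connected neighbour outside the mask. `mask_boundary` and `boundary_pixels` are hypothetical helper names.

```python
import numpy as np


def mask_boundary(mask: np.ndarray) -> np.ndarray:
    """Return a boolean image marking the boundary pixels of a binary clothing mask.

    A pixel is on the boundary if it lies inside the mask and at least one of
    its four neighbours (up, down, left, right) lies outside it. Pixels on the
    image border count as touching the outside.
    """
    m = mask.astype(bool)
    # Pad with background so pixels on the image border compare against "outside".
    p = np.pad(m, 1, mode="constant", constant_values=False)
    interior = (
        p[:-2, 1:-1]      # neighbour above
        & p[2:, 1:-1]     # neighbour below
        & p[1:-1, :-2]    # neighbour to the left
        & p[1:-1, 2:]     # neighbour to the right
    )
    # Boundary = inside the mask but not fully surrounded by mask pixels.
    return m & ~interior


def boundary_pixels(mask: np.ndarray) -> np.ndarray:
    """(row, col) coordinates of the boundary pixels, one pair per row."""
    return np.argwhere(mask_boundary(mask))
```

Using 4-connectivity yields an 8-connected outline one pixel thick; an ordered contour (for example from a contour-tracing routine) would be needed if later steps require the boundary as a polyline.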
