Device and method for asynchronous pre-reading of small files in parallel network file system

A network file system and small-file technology, applied in instruments, computing, and electric digital data processing, that addresses problems such as rigidity, reduces waiting delays, improves the cache hit rate, and has a wide range of applications.

Specific Embodiment

[0064] Specific embodiments of the present invention are described below:

[0065] The device of the present invention includes: a client module, a server module, and a data storage module;

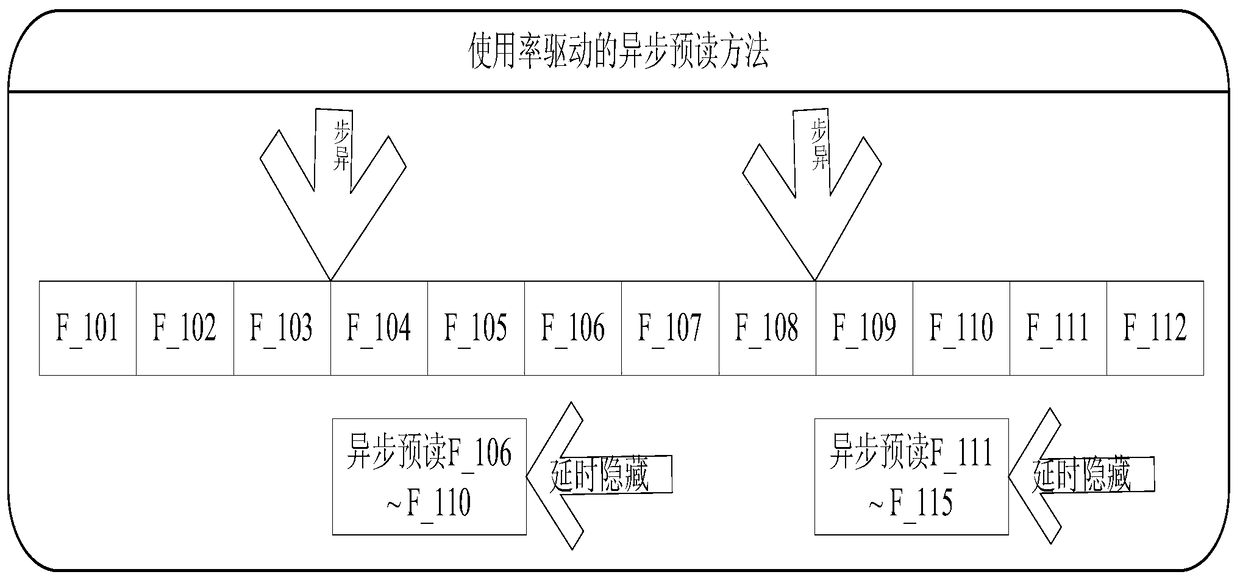

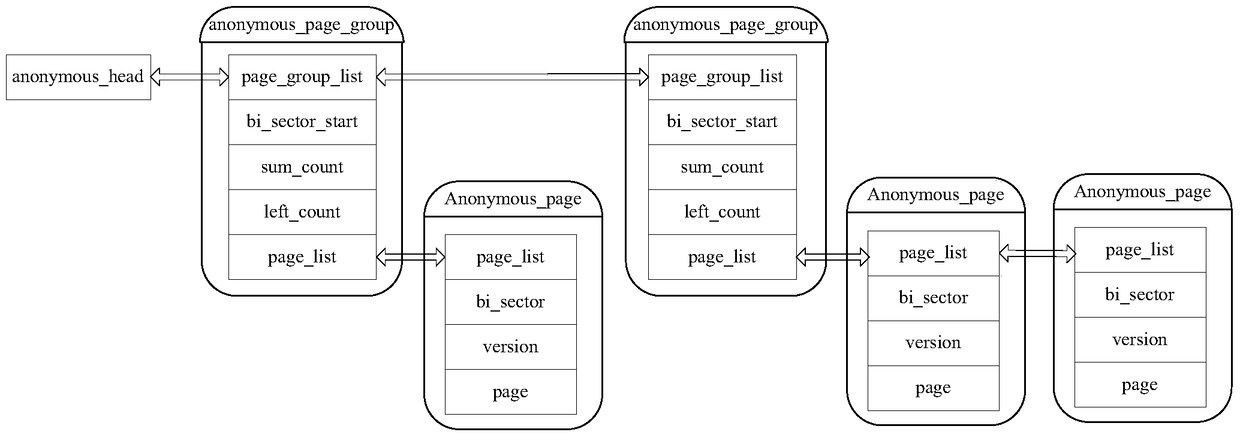

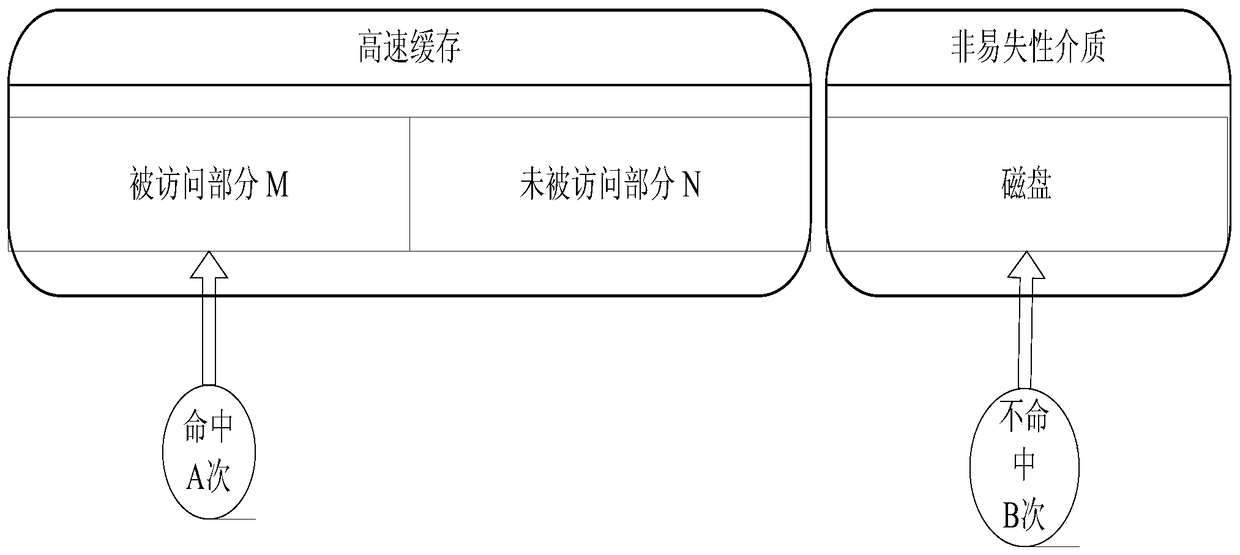

[0066] The client module is used by the client to send operation requests to the server and to receive the client extended read-only directory authorization granted by the server. After obtaining the client extended read-only directory authorization, the client inserts the file directory at the end of the corresponding directory list and puts the pages associated with the file directory into the page cache. The client then reads the data of the file directory from the page cache. If the data of the file directory misses in the page cache, the client checks whether a corresponding anonymous page exists in the anonymous page linked list: on a hit, the anonymous page is returned to the upper-layer application; on a miss, a synchronous disk read operation is initiated, wherein the remaining anony...
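The lookup order described in [0066] can be illustrated with a minimal Python sketch. It is not the patented implementation. All class, method, and attribute names (`ClientModule`, `on_authorization_granted`, `read`, `disk_read`, `sync_reads`) are hypothetical, and pages are keyed by an assumed `(directory, page index)` pair. The server's delivery of pre-read pages is reduced to a dictionary argument. Because the paragraph is truncated, the sketch does not model how the remaining anonymous pages are handled.

```python
from collections import OrderedDict
from typing import Callable, Dict, List, Tuple

# Hypothetical key layout: (directory path, page index within that directory)
PageKey = Tuple[str, int]


class ClientModule:
    """Sketch of the client read path in [0066]; all names are illustrative."""

    def __init__(self, disk_read: Callable[[PageKey], bytes]) -> None:
        # Directories for which the extended read-only authorization was granted
        self.directory_list: List[str] = []
        # Page cache holding pages pre-read after authorization
        self.page_cache: Dict[PageKey, bytes] = {}
        # Anonymous page list; OrderedDict keeps insertion order (it is backed by
        # a linked list) while giving constant-time lookup by key
        self.anonymous_pages: "OrderedDict[PageKey, bytes]" = OrderedDict()
        # Synchronous disk read used when both caches miss (assumed callback)
        self.disk_read = disk_read
        # Counts synchronous fallbacks, for illustration only
        self.sync_reads = 0

    def on_authorization_granted(self, directory: str, pages: Dict[int, bytes]) -> None:
        """Handle a granted extended read-only directory authorization."""
        # Insert the directory at the end of the directory list
        self.directory_list.append(directory)
        # Put the pages associated with the directory into the page cache
        for index, data in pages.items():
            self.page_cache[(directory, index)] = data

    def read(self, directory: str, index: int) -> bytes:
        key = (directory, index)
        # 1. Page cache hit: return the cached page
        if key in self.page_cache:
            return self.page_cache[key]
        # 2. Page cache miss: return a matching anonymous page if one exists
        if key in self.anonymous_pages:
            return self.anonymous_pages[key]
        # 3. Both miss: fall back to a synchronous disk read
        #    (the source does not say whether the result is cached; this sketch does not cache it)
        self.sync_reads += 1
        return self.disk_read(key)
```

In this sketch, pages placed in the page cache at authorization time are served directly. A page present only in `anonymous_pages` is returned from that list. Any other request increments `sync_reads` and goes to the `disk_read` callback. Using `OrderedDict` for the anonymous page list is a design choice of the sketch: it keeps the list's insertion order and avoids a linear scan on each lookup.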
