Data-driven indoor scene coloring method

A data-driven technique for coloring indoor scenes, applied in image data processing, 3D image processing, instruments, etc. It addresses problems such as the coloring process being cumbersome, time-consuming, and troublesome.


Examples

Embodiment 1

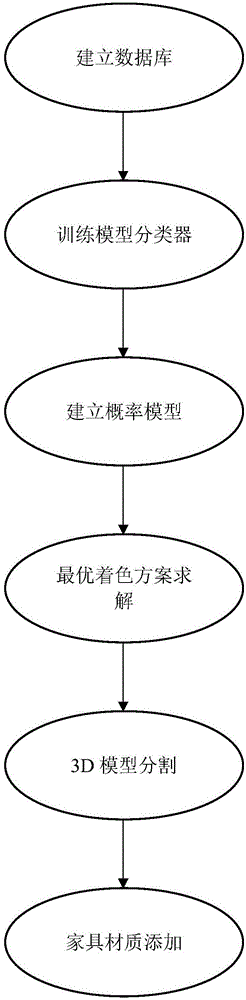

[0062] The flow chart of this method is shown in Figure 1. The method is divided into six major processes: first, an image-model database and a texture database are established; second, a model classifier is trained on the 3D models of each type of furniture in the image-model database; third, the color themes of the furniture in the image-model database are extracted and a probability model is established; fourth, the optimal coloring scheme is solved from the established probability model and the color theme input by the user; fifth, each 3D model in the input scene is segmented using the corresponding classifier; finally, according to the resulting coloring scheme, a corresponding material is assigned to each piece of furniture in the input scene.
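The fourth process, solving the optimal coloring scheme from the probability model and the user's color theme, can be illustrated with a minimal sketch. It assumes (hypothetically) that the probability model is a table `prob[(furniture_type, colour)]` and that furniture items are scored independently, so a scheme's score is the sum of per-item log-probabilities. The patent does not state its solver; exhaustive search is swapped in here for clarity and is only practical for a handful of items.

```python
import itertools
import math


def solve_coloring(furniture, theme, prob):
    """Exhaustively assign one user theme colour to each furniture item.

    furniture: list of furniture type names (hypothetical labels)
    theme: list of colour labels supplied by the user
    prob: dict mapping (furniture_type, colour) -> probability (assumed model)
    Returns (best_assignment, best_log_score).
    """
    best, best_score = None, -math.inf
    # Every item may take any theme colour; colours are allowed to repeat.
    for combo in itertools.product(theme, repeat=len(furniture)):
        score = 0.0
        for item, colour in zip(furniture, combo):
            # A small floor avoids log(0) for pairs never seen in the database.
            score += math.log(prob.get((item, colour), 1e-6))
        if score > best_score:
            best, best_score = dict(zip(furniture, combo)), score
    return best, best_score


# Usage with made-up probabilities.
prob = {("sofa", "red"): 0.6, ("sofa", "beige"): 0.3,
        ("table", "beige"): 0.5, ("table", "red"): 0.1}
best, score = solve_coloring(["sofa", "table"], ["red", "beige"], prob)
```

A real model would likely add pairwise terms between neighboring furniture, which would make exhaustive search even more costly; an optimizer such as simulated annealing would then be a natural substitute.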

[0063] Specifically, as shown in Figure 1, the present invention discloses a data-driven indoor scene coloring method, which mainly includes the following steps: Step 1, establish a database: collect images of different scenes, f...

Embodiment 2

[0107] The hardware environment for this embodiment is an Intel Core i5-4590 at 3.3 GHz with 8 GB of memory; the software environment is Microsoft Visual Studio 2010, Microsoft Windows 7 Professional, and 3ds Max 2012. The input models are obtained from the internet.

[0108] The invention discloses a data-driven indoor scene coloring method whose core is to solve for the optimal coloring scheme of each piece of furniture in the scene and to segment the furniture mesh according to the image, so that a corresponding material can be assigned to each part. It includes the following steps:
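Assigning a material to each segmented part can be sketched as a nearest-color lookup. In this illustration the part names, texture names, and RGB values are all hypothetical, and plain Euclidean distance in RGB is an assumption; the patent does not specify how a material is matched to a color.

```python
def nearest_material(colour, textures):
    """Return the texture name whose mean RGB is closest to `colour`."""
    def dist2(a, b):
        # Squared Euclidean distance in RGB (assumed metric).
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(textures, key=lambda name: dist2(textures[name], colour))


def assign_materials(part_colours, textures):
    """Map each segmented part to the texture nearest its target colour."""
    return {part: nearest_material(c, textures) for part, c in part_colours.items()}


# Hypothetical texture database (name -> mean RGB) and per-part target colours.
textures = {"oak": (150, 111, 51), "linen": (230, 220, 200),
            "leather_red": (150, 30, 30)}
parts = {"sofa/seat": (160, 40, 35), "sofa/cushion": (235, 225, 205),
         "table/top": (140, 100, 60)}
materials = assign_materials(parts, textures)
```

A perceptual color space such as CIELAB would usually give better matches than raw RGB.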

[0109] Step 1, database establishment: collect images, models, and material samples from the internet, and process the collected data to establish an image-model database and a texture database;
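One plausible way to process material samples into a texture database is to summarize each sample by its mean color. This is a minimal sketch under that assumption. The `TextureEntry` record and `build_texture_db` helper are hypothetical, and samples are given as lists of RGB pixel tuples rather than image files.

```python
from dataclasses import dataclass


@dataclass
class TextureEntry:
    name: str
    mean_rgb: tuple  # (r, g, b) averaged over all pixels of the sample


def mean_colour(pixels):
    """Average a list of (r, g, b) pixels channel by channel."""
    n = len(pixels)
    if n == 0:
        raise ValueError("material sample has no pixels")
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))


def build_texture_db(samples):
    """samples: dict mapping material name -> list of (r, g, b) pixels."""
    return [TextureEntry(name, mean_colour(px)) for name, px in samples.items()]


# Usage with two tiny made-up samples.
db = build_texture_db({"oak": [(140, 100, 50), (160, 122, 52)],
                       "linen": [(230, 220, 200)]})
```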

[0110] Step 2, model classifier training: extract features from the 3D models of each type of furniture in the image-model database and train the classifier;
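The patent does not name its features or classifier type. The sketch below swaps in a nearest-centroid classifier over per-face feature vectors to show how a trained classifier could label mesh faces by part. The two features (normalized face height and the absolute z-component of the face normal) and the part labels are hypothetical.

```python
from collections import defaultdict


def train_centroids(features, labels):
    """Average the feature vectors of each part label (nearest-centroid training)."""
    sums = {}
    counts = defaultdict(int)
    for f, lab in zip(features, labels):
        if lab not in sums:
            sums[lab] = [0.0] * len(f)
        for i, v in enumerate(f):
            sums[lab][i] += v
        counts[lab] += 1
    return {lab: [s / counts[lab] for s in sums[lab]] for lab in sums}


def classify(feature, centroids):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    return min(centroids,
               key=lambda lab: sum((a - b) ** 2 for a, b in zip(feature, centroids[lab])))


# Hypothetical training faces for a table: (height, |normal_z|) -> part.
train_x = [(0.2, 0.0), (0.3, 0.1), (0.9, 1.0), (0.95, 0.9)]
train_y = ["leg", "leg", "top", "top"]
centroids = train_centroids(train_x, train_y)

# "Segmenting" a new mesh = labelling each of its faces.
new_faces = [(0.1, 0.05), (0.85, 0.95)]
segment_labels = [classify(f, centroids) for f in new_faces]
```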

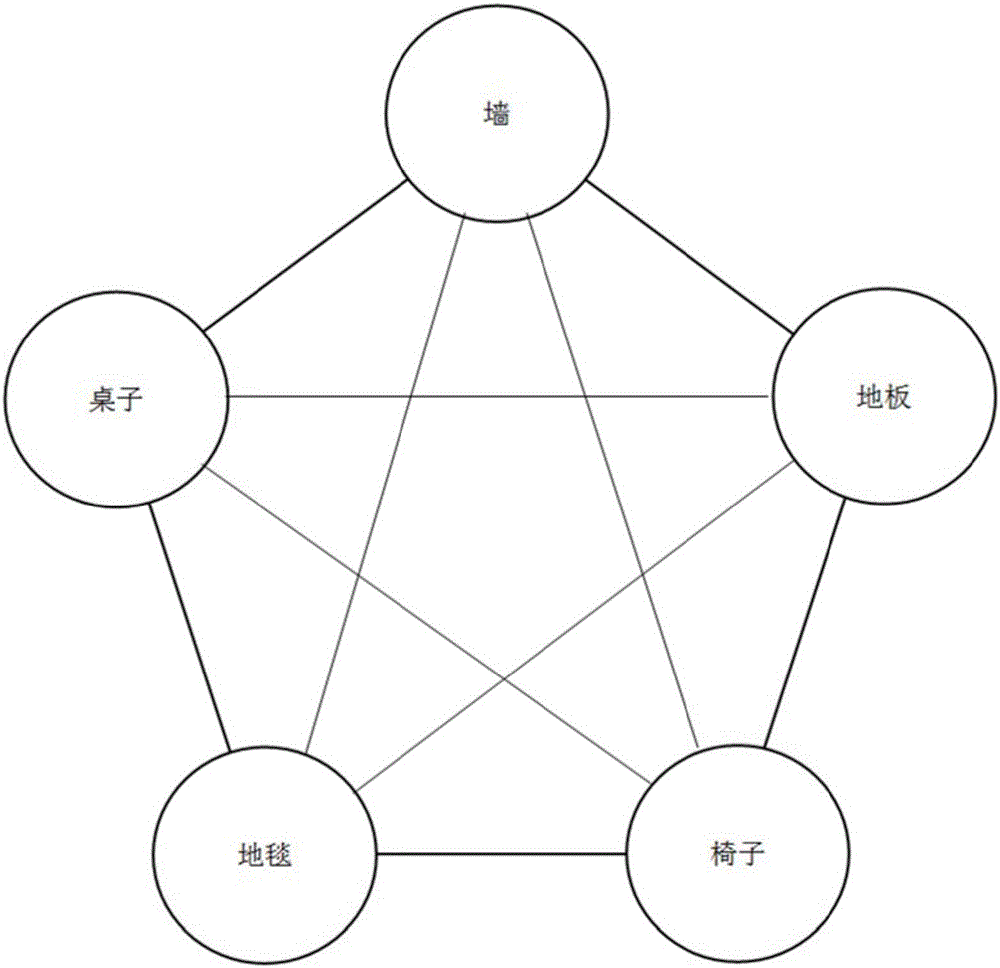

[0111] Step 3, establish a probability model of image furniture...
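The text of Step 3 is truncated here, so the following is only an assumed illustration of how a probability model of furniture colors might be estimated from the database. It counts how often each (furniture type, color label) pair occurs and applies add-alpha (Laplace) smoothing over a fixed palette, producing P(color | furniture type). The observations, palette, and `estimate_probabilities` helper are hypothetical.

```python
from collections import Counter, defaultdict


def estimate_probabilities(observations, palette, alpha=1.0):
    """Estimate P(colour | furniture type) with add-alpha smoothing.

    observations: iterable of (furniture_type, colour_label) pairs
    palette: list of all colour labels considered
    Returns dict (furniture_type, colour) -> probability.
    """
    counts = defaultdict(Counter)
    for ftype, colour in observations:
        counts[ftype][colour] += 1
    model = {}
    for ftype, c in counts.items():
        total = sum(c.values()) + alpha * len(palette)
        for colour in palette:
            # Unseen colours still get a small, non-zero probability.
            model[(ftype, colour)] = (c[colour] + alpha) / total
    return model


obs = [("sofa", "red"), ("sofa", "red"), ("sofa", "beige"), ("table", "beige")]
model = estimate_probabilities(obs, ["red", "beige"])
```

Smoothing keeps colors that never appear with a furniture type in the database from being ruled out entirely.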
