Automatic image annotation method integrating deep features and semantic neighborhoods

An automatic image annotation technology based on deep features, applied in character and pattern recognition, instruments, computer components, etc., achieving a simple method, flexible implementation and strong practicability.


Embodiment Construction

[0029] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

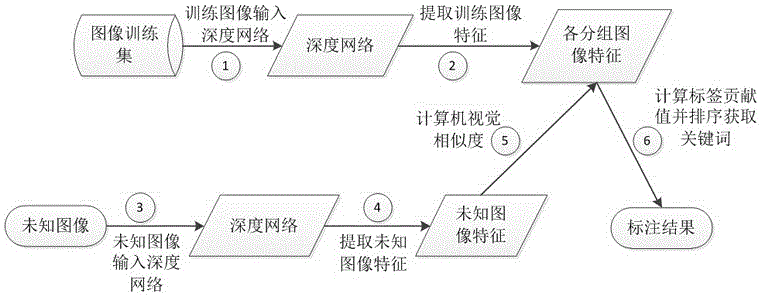

[0030] As shown in Figure 1, the present invention provides an automatic image annotation method that combines deep features and semantic neighborhoods. Manual feature selection is time-consuming and labor-intensive, and traditional label propagation algorithms ignore semantic similarity; together these make it difficult to apply annotation models to real-world images. To address both problems, the method first uses a multi-layer CNN deep feature extraction network to obtain general and effective deep features. Then, the training images are divided into semantic groups according to their keywords, and the search for visual neighbors is restricted to these semantic groups, so that the images in the neighborhood image set are both semantically adjacent and visually adjacent. Fina...
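The semantic-group step above can be illustrated with a minimal sketch. It assumes the CNN deep features have already been extracted and are given as plain lists of floats, that visual similarity is plain Euclidean distance, and that a fixed number `k` of nearest images is taken from each keyword's group. These choices, and the names `build_semantic_groups` and `semantic_neighborhood`, are hypothetical illustrations, not the patented procedure; the final annotation step is truncated in the source and is not reproduced here.

```python
import math
from collections import defaultdict


def euclidean(a, b):
    """Euclidean distance between two feature vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def build_semantic_groups(train_labels):
    """Map each keyword to the indices of the training images annotated with it."""
    groups = defaultdict(list)
    for idx, keywords in enumerate(train_labels):
        for word in keywords:
            groups[word].append(idx)
    return dict(groups)


def semantic_neighborhood(query, train_feats, groups, k=1):
    """Collect the k visually nearest images from inside each semantic group.

    Neighbors are searched only within a group, so every selected image shares
    a keyword with the rest of its group (semantic adjacency) and is close to
    the query in feature space (visual adjacency).
    """
    neighbors = set()
    for members in groups.values():
        ranked = sorted(members, key=lambda i: euclidean(query, train_feats[i]))
        neighbors.update(ranked[:k])
    return sorted(neighbors)


if __name__ == "__main__":
    # Toy 2-D "deep features" and keyword sets (hypothetical data).
    train_feats = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0], [0.0, 0.2]]
    train_labels = [{"sky"}, {"sky", "sea"}, {"tree"}, {"tree", "grass"}, {"sea"}]
    groups = build_semantic_groups(train_labels)
    print(semantic_neighborhood([0.0, 0.05], train_feats, groups, k=1))  # → [0, 1, 2, 3]
```

Searching inside each group, rather than over the whole training set, keeps rare keywords represented in the neighborhood even when a few frequent keywords dominate the visually closest images; the per-group `k` controls the size of the resulting neighborhood image set.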


© 2025 PatSnap. All rights reserved.