Distributed parallel training method and system for neural network acoustic model

Acoustic-model and neural-network technology, applied to biological neural network models, speech analysis, instruments, and similar fields. It addresses problems such as extremely high network bandwidth requirements, frequent parameter transmission, and limited speed-up, and thereby shortens the training period, prevents divergence, and ensures stability.


Embodiment

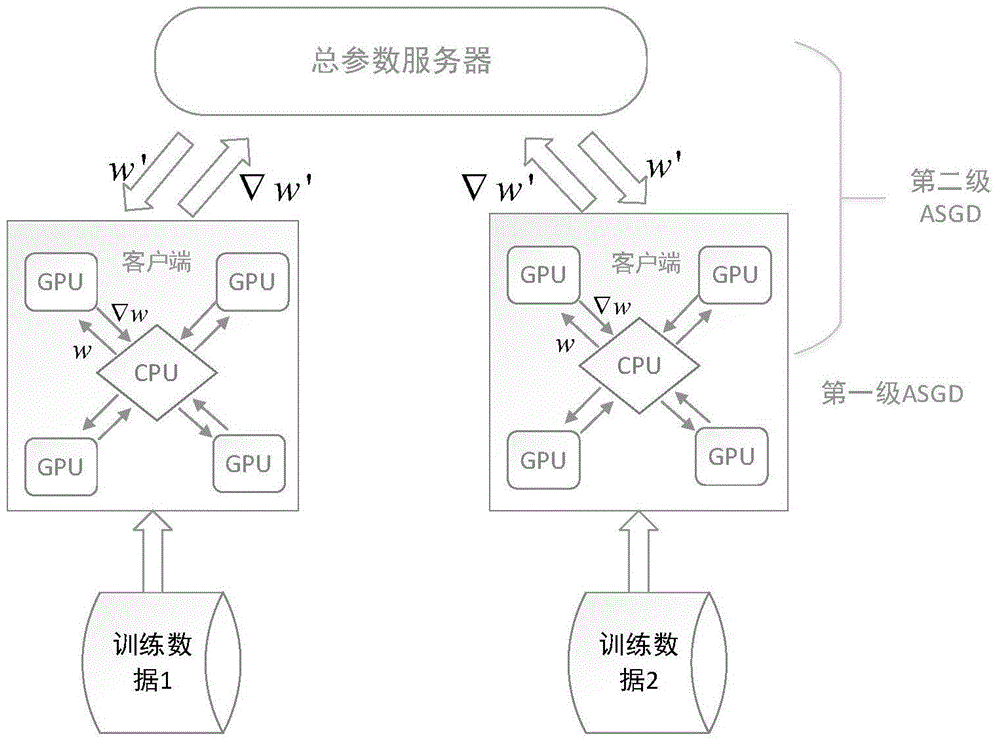

[0054] 1. Construction of two-level ASGD system

[0055] Figure 1 is the architecture diagram of the two-level ASGD neural network system proposed by the present invention. The overall architecture consists of multiple clients and a parameter server: the clients are responsible for calculating gradients, and the parameter server is responsible for updating the model. Parameters are passed between the clients and the parameter server, forming the upper-level (second-level) ASGD system; within each client, the CPU and the GPUs form a bottom-level (first-level) ASGD system, and parameters are passed between the CPU and the GPUs over the bus. Model training on the two-level ASGD system proceeds as follows. First, at the beginning of training, the model in the parameter server is initialized with random values, and the initialized model is sent to each client (into the CPU). If each client uses 4 GPU cards (G1, G2, G3, G4), the 4 GPUs calculate the gradient according to the ...
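The two-level arrangement described above can be illustrated with a small single-process simulation. This is only a sketch under stated assumptions, not the patented procedure: the description is truncated before it says what happens once the GPUs have computed their gradients, so the sketch assumes that each client's CPU sums the gradients from its GPUs and forwards the sum to the parameter server, then pulls the updated model. The names `ParameterServer`, `Client`, `simulate`, the toy quadratic objective, and the learning rate are all hypothetical. Asynchrony is modeled only by staleness: each client computes gradients on the model copy it pulled before other clients pushed their updates.

```python
import random


class ParameterServer:
    """Holds the global model and applies each gradient as soon as it arrives."""

    def __init__(self, dim, lr, seed=0):
        rng = random.Random(seed)
        # Random initialization at the start of training, as in the description.
        self.weights = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        self.lr = lr

    def pull(self):
        # A client fetches a copy of the current global model.
        return list(self.weights)

    def push(self, grad):
        # Second-level update: applied without waiting for other clients.
        self.weights = [w - self.lr * g for w, g in zip(self.weights, grad)]


class Client:
    """One machine: a CPU-side model copy plus several GPUs (assumed layout)."""

    def __init__(self, server, num_gpus):
        self.server = server
        self.num_gpus = num_gpus
        self.cpu_weights = server.pull()  # initialized model sent to the CPU

    def gpu_gradient(self, weights, target):
        # Toy gradient of 0.5 * ||w - target||^2; a real GPU would run
        # backpropagation on its own mini-batch instead.
        return [w - t for w, t in zip(weights, target)]

    def step(self, target):
        total = [0.0] * len(self.cpu_weights)
        for _ in range(self.num_gpus):
            gpu_weights = list(self.cpu_weights)  # CPU -> GPU copy over the bus
            grad = self.gpu_gradient(gpu_weights, target)
            # Assumption: the CPU accumulates the gradients from its GPUs.
            total = [a + g for a, g in zip(total, grad)]
        self.server.push(total)                # client -> server
        self.cpu_weights = self.server.pull()  # refresh the CPU copy


def simulate(target, num_clients=2, num_gpus=4, rounds=50, lr=0.05):
    server = ParameterServer(len(target), lr)
    clients = [Client(server, num_gpus) for _ in range(num_clients)]
    for _ in range(rounds):
        # Round-robin order: every client after the first works on a copy
        # that is stale relative to the server, as in asynchronous SGD.
        for client in clients:
            client.step(target)
    return server.weights
```

Calling `simulate([0.5, -0.25, 2.0])` returns server weights close to the target despite the stale client copies. A real system would run the clients and GPUs concurrently; the sequential loop here only keeps the example deterministic.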
