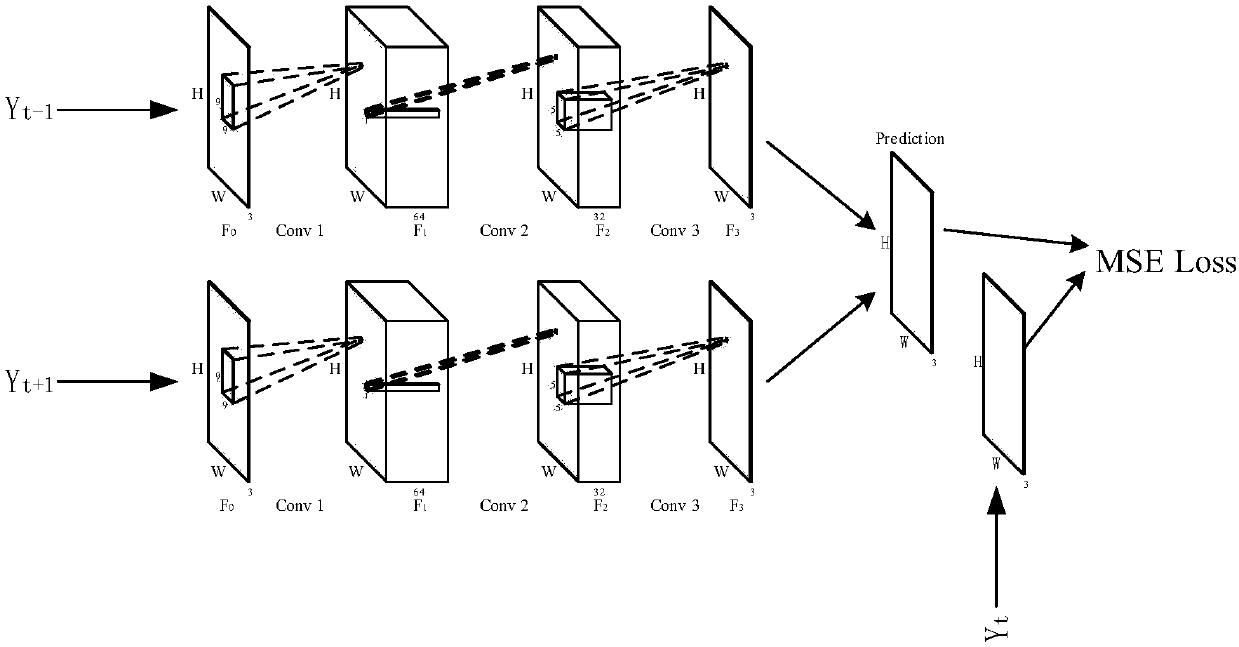

A method and system for generating high frame rate video based on deep learning

This deep-learning-based high frame rate video technology, applied in the field of computer vision, addresses problems such as video quality degradation and frame loss. It overcomes two drawbacks of existing approaches: an inconspicuous effect, and time-consuming, labor-intensive processing.

AI Technical Summary

Problems solved by technology

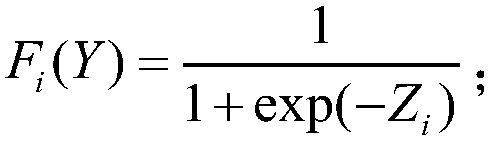

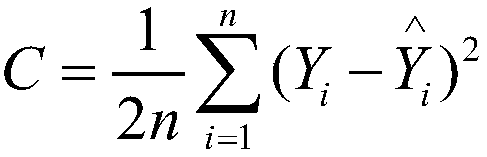

Embodiment Construction

[0033] To make the objects, technical solutions and advantages of the present invention clearer, the present invention is described in further detail below with reference to the accompanying drawings and embodiments. It should be understood that the specific embodiments described here serve only to explain the present invention and do not limit it. In addition, the technical features of the various embodiments described below may be combined with each other as long as they do not conflict.

[0034] The technical terms used in the present invention are first explained below:

[0035] Convolutional Neural Network (CNN): a neural network that can be used for image classification, regression and other tasks. It is distinguished in two respects: on the one hand, its neurons are not fully connected; on the other hand, the weights of ...
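The definition above is truncated in the source. As general background only (this is not code from the patent), the sketch below illustrates the two standard CNN properties it begins to describe: local (non-full) connectivity, where each output depends on a small input patch, and weight sharing, where one kernel is reused at every position. The function names `conv2d_valid` and `param_counts` are hypothetical, the layer is single-channel, and biases are ignored for simplicity.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Single-channel 2D 'valid' convolution (cross-correlation, as in most CNN layers).

    Local connectivity: each output value reads only a kh x kw patch of the input.
    Weight sharing: the same kernel weights are applied at every output position.
    """
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out: Matrix = []
    for i in range(h - kh + 1):
        row = []
        for j in range(w - kw + 1):
            s = 0.0
            # Sum over the local patch anchored at (i, j) only.
            for a in range(kh):
                for b in range(kw):
                    s += image[i + a][j + b] * kernel[a][b]
            row.append(s)
        out.append(row)
    return out


def param_counts(h: int, w: int, kh: int, kw: int) -> Tuple[int, int]:
    """Weights needed for the same output size: fully connected vs. convolutional (no bias)."""
    out_h, out_w = h - kh + 1, w - kw + 1
    dense = (h * w) * (out_h * out_w)  # every output linked to every input, no sharing
    conv = kh * kw                     # one kernel shared across all positions
    return dense, conv


if __name__ == "__main__":
    img = [[float(4 * r + c) for c in range(4)] for r in range(4)]
    print(conv2d_valid(img, [[1.0, 0.0], [0.0, -1.0]]))
    print(param_counts(64, 64, 3, 3))
```

For a 64x64 input and a 3x3 kernel, a fully connected mapping to the same 62x62 output needs 15,745,024 weights, while the shared kernel needs only 9; this reduction is the practical motivation for the two properties named above.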
