A Video Human Behavior Recognition Method Based on Salient Trajectory Spatial Information

A recognition method using trajectory spatial information, applied in the field of computer vision, that addresses the problems of inaccurate and redundant trajectories and achieves improved expressive ability, smaller error, and a better recognition effect.

Examples

Embodiment 1

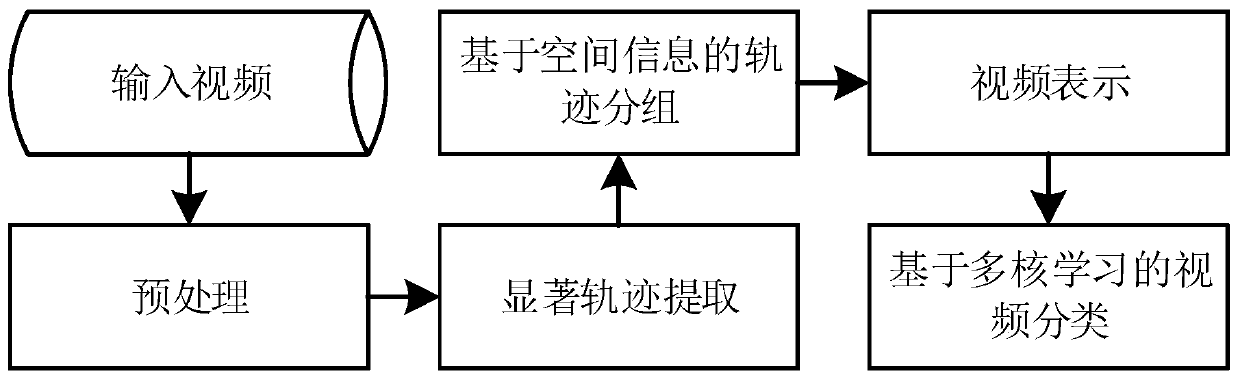

[0063] As shown in Figure 1, the present invention first preprocesses the video, then filters the video's dense trajectory features by computing saliency to obtain the salient trajectories. It then uses the spatial information of the trajectories to perform two-layer clustering on the salient trajectories of the video. After clustering is complete, a visual dictionary is used to obtain the representation of the video, and finally multiple kernel learning is used for learning and classification.
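The visual dictionary step above can be illustrated with a minimal sketch. The text does not specify how the dictionary encodes a video, so this assumes the common bag-of-visual-words scheme: each trajectory descriptor is assigned to its nearest codeword and the video is represented by the normalized histogram of assignments. The function name `encode_video` and the array layouts are hypothetical and not taken from the patent.

```python
import numpy as np


def encode_video(descriptors, dictionary):
    """Encode a video as a normalized histogram over visual words.

    Assumption (not from the source): hard assignment of each descriptor
    to its nearest codeword under Euclidean distance.

    descriptors: (N, D) array, one row per salient-trajectory descriptor.
    dictionary:  (K, D) array, one row per visual word (cluster center).
    Returns a length-K vector that sums to 1 (all zeros if N == 0).
    """
    descriptors = np.asarray(descriptors, dtype=float)
    dictionary = np.asarray(dictionary, dtype=float)

    # Squared Euclidean distance between every descriptor and codeword: (N, K).
    diffs = descriptors[:, None, :] - dictionary[None, :, :]
    dists = (diffs ** 2).sum(axis=2)

    # Index of the nearest codeword for each descriptor.
    nearest = dists.argmin(axis=1)

    # Count assignments per codeword and normalize to a distribution.
    hist = np.bincount(nearest, minlength=len(dictionary)).astype(float)
    total = hist.sum()
    return hist / total if total > 0 else hist
```

In such a scheme, the dictionary rows would be the cluster centers produced by the clustering stage, and the resulting histograms would be the inputs to the classifier.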

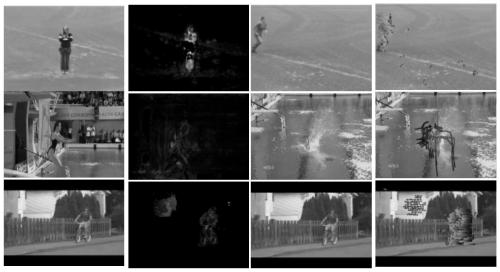

[0064] Figure 2 shows the original frame of the video, the combined dynamic-static saliency of the frame, and the original frame overlaid with the salient trajectories obtained by filtering with the combined dynamic-static saliency. In the present invention, the trajectory length is set to 15, and trajectories whose saliency is less than 1.4 times the average saliency of the 15 frames in which the trajectory lies are filtered out....
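The filtering rule above can be sketched as follows. The trajectory length of 15 and the factor of 1.4 come from the text. Everything else is an assumption made for illustration: a trajectory's saliency is taken as the mean of the saliency map values at its points, the average saliency of the 15 frames is taken as the mean over all pixels of those frames, and all trajectories in one call span the same 15 frames. The function name `filter_salient_trajectories` and the array layouts are hypothetical.

```python
import numpy as np

TRAJ_LEN = 15          # trajectory length stated in the text
SALIENCY_RATIO = 1.4   # threshold multiplier stated in the text


def filter_salient_trajectories(trajectories, saliency_maps, ratio=SALIENCY_RATIO):
    """Return a boolean mask marking the trajectories to keep.

    trajectories:  (N, L, 2) array of (x, y) points, one point per frame.
    saliency_maps: (L, H, W) array, the saliency map of each of the L frames.

    Assumption (not from the source): trajectory saliency is the mean of
    the saliency values sampled at the trajectory's points.
    """
    trajectories = np.asarray(trajectories, dtype=float)
    saliency_maps = np.asarray(saliency_maps, dtype=float)
    _, length, _ = trajectories.shape
    _, height, width = saliency_maps.shape

    # Round point coordinates to pixel indices and clamp them to the frame.
    xs = np.clip(np.rint(trajectories[..., 0]).astype(int), 0, width - 1)
    ys = np.clip(np.rint(trajectories[..., 1]).astype(int), 0, height - 1)

    # Sample the saliency map of frame t at the t-th point of each trajectory.
    frame_idx = np.arange(length)[None, :]              # shape (1, L)
    point_saliency = saliency_maps[frame_idx, ys, xs]   # shape (N, L)
    traj_saliency = point_saliency.mean(axis=1)

    # Trajectories below ratio * (average saliency of the frames) are dropped.
    threshold = ratio * saliency_maps.mean()
    return traj_saliency >= threshold
```

A caller would apply the returned mask to the trajectory array, for example `salient = trajectories[mask]`, before the clustering stage.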
