Method for detecting video action based on convolutional neural network

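A convolutional neural network and action detection technique, applied in the field of video action detection based on convolutional neural networks. It addresses problems such as broken action continuity, the 3D CNN failing to learn motion features, and increased intra-class variation.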



Embodiment Construction

[0041] The present invention is further described below through embodiments, in conjunction with the accompanying drawings, without limiting the scope of the invention in any way.

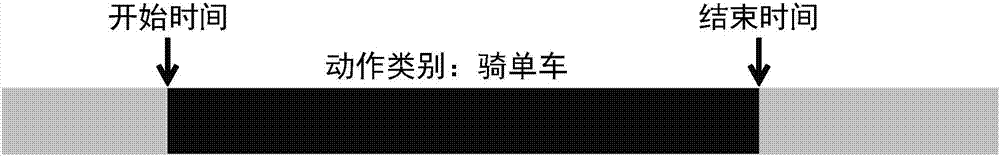

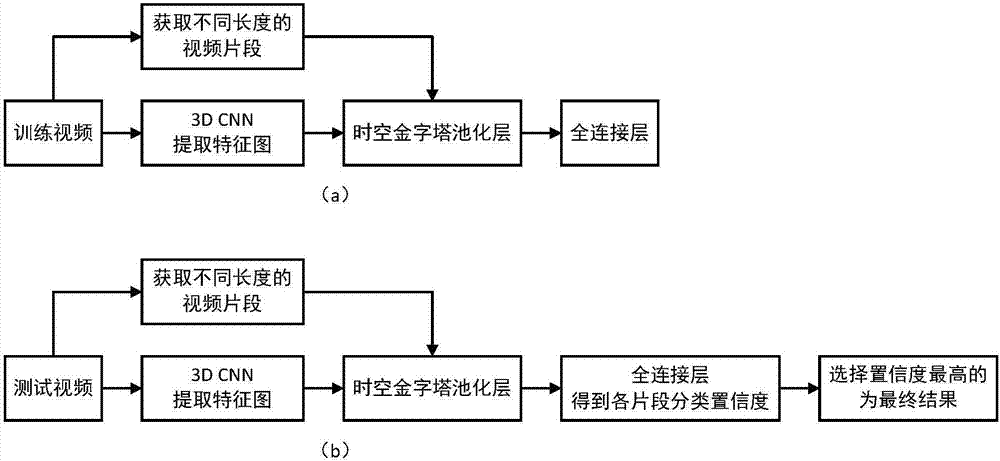

[0042] The invention provides a video action detection method based on a convolutional neural network. Adding a spatiotemporal pyramid pooling layer to the traditional network structure removes the network's constraint on input size, speeds up training and testing, and better exploits the motion information in the video, which improves performance in both video action classification and temporal localization. The invention does not require the input video segments to have the same size.
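To illustrate why a pyramid pooling layer removes the fixed-size requirement, the following is a minimal sketch and not the patented layer itself. It assumes max pooling, a single-channel feature map stored as nested lists, and two pyramid levels; the names `bin_edges`, `st_pyramid_pool`, and `levels` are hypothetical, and the bin boundaries follow the floor/ceil convention commonly used for spatial pyramid pooling.

```python
import math


def bin_edges(size, bins):
    """Split range(size) into `bins` contiguous, non-empty windows.

    Windows may overlap when `size` is not divisible by `bins`.
    """
    return [(math.floor(i * size / bins), math.ceil((i + 1) * size / bins))
            for i in range(bins)]


def st_pyramid_pool(volume, levels=((1, 1, 1), (2, 2, 2))):
    """Max-pool a T x H x W feature map into a fixed-length vector.

    Each level (bt, bh, bw) divides time, height, and width into that many
    bins and contributes bt * bh * bw values, so the output length depends
    only on `levels`, not on the size of the input.
    """
    t, h, w = len(volume), len(volume[0]), len(volume[0][0])
    pooled = []
    for bt, bh, bw in levels:
        for t0, t1 in bin_edges(t, bt):
            for h0, h1 in bin_edges(h, bh):
                for w0, w1 in bin_edges(w, bw):
                    pooled.append(max(volume[i][j][k]
                                      for i in range(t0, t1)
                                      for j in range(h0, h1)
                                      for k in range(w0, w1)))
    return pooled


def make_volume(t, h, w):
    """Build a toy feature map whose values encode their own position."""
    return [[[i * 100 + j * 10 + k for k in range(w)]
             for j in range(h)]
            for i in range(t)]


# Two clips of different duration and resolution map to vectors of equal length.
short_clip = st_pyramid_pool(make_volume(4, 6, 6))
long_clip = st_pyramid_pool(make_volume(9, 5, 7))
assert len(short_clip) == len(long_clip) == 9
```

Because the pooled vector always has the same length, the fully connected layers that follow can accept it regardless of clip length or frame size, which is the property the paragraph above relies on.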

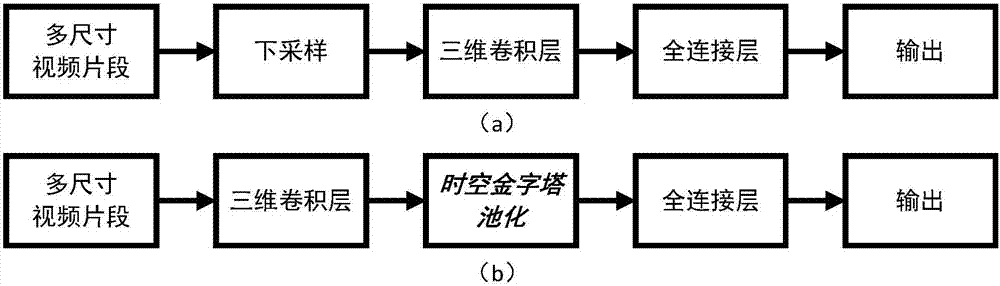

[0043] As shown in Figure 2, a traditional convolutional neural network requires input video clips of the same size, so the clips must be down-sampled before being fed into the network. The present invention, however, removes the downsampling process and inser...
