783 results about "Video data browsing/visualisation" patented technology

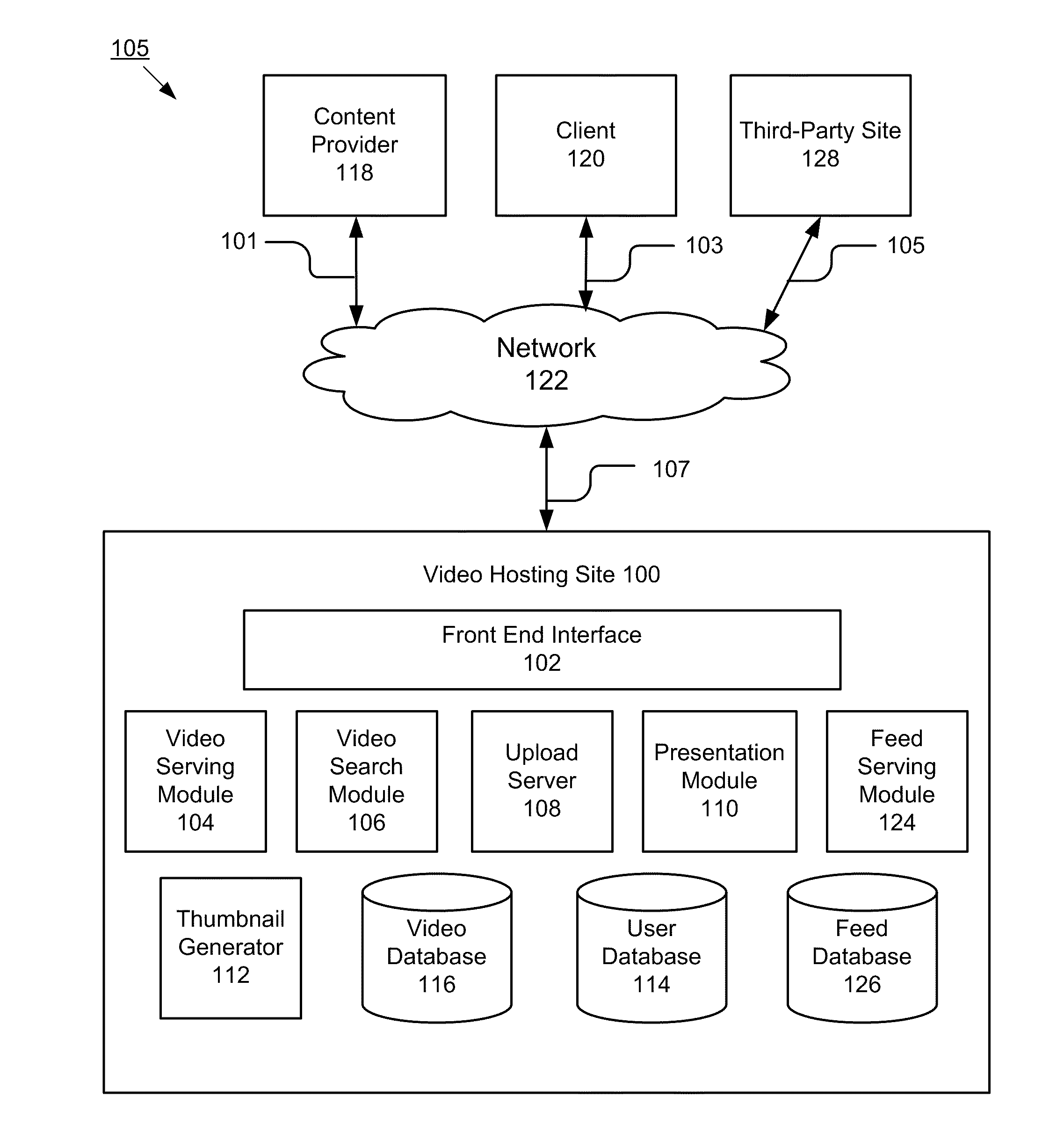

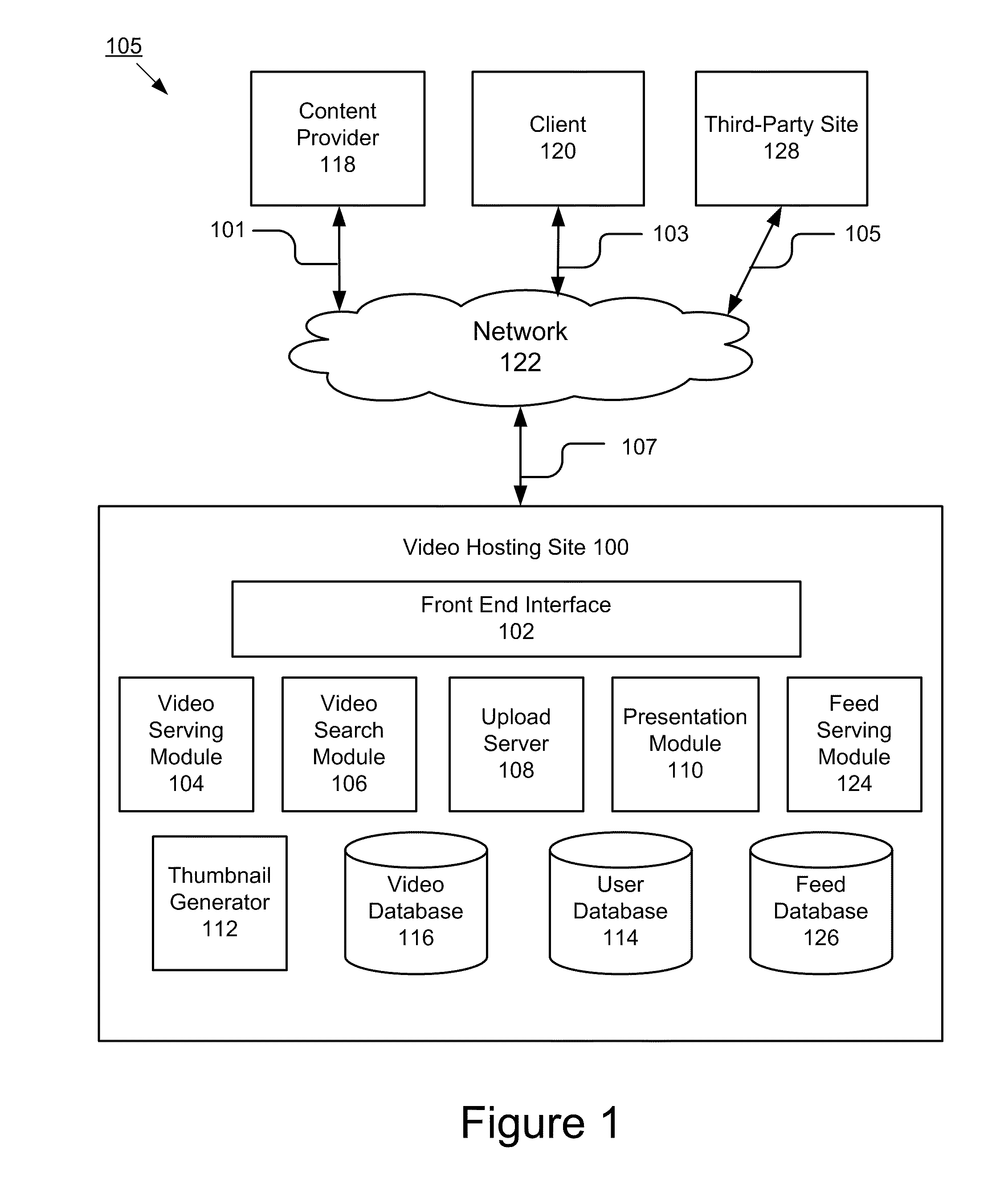

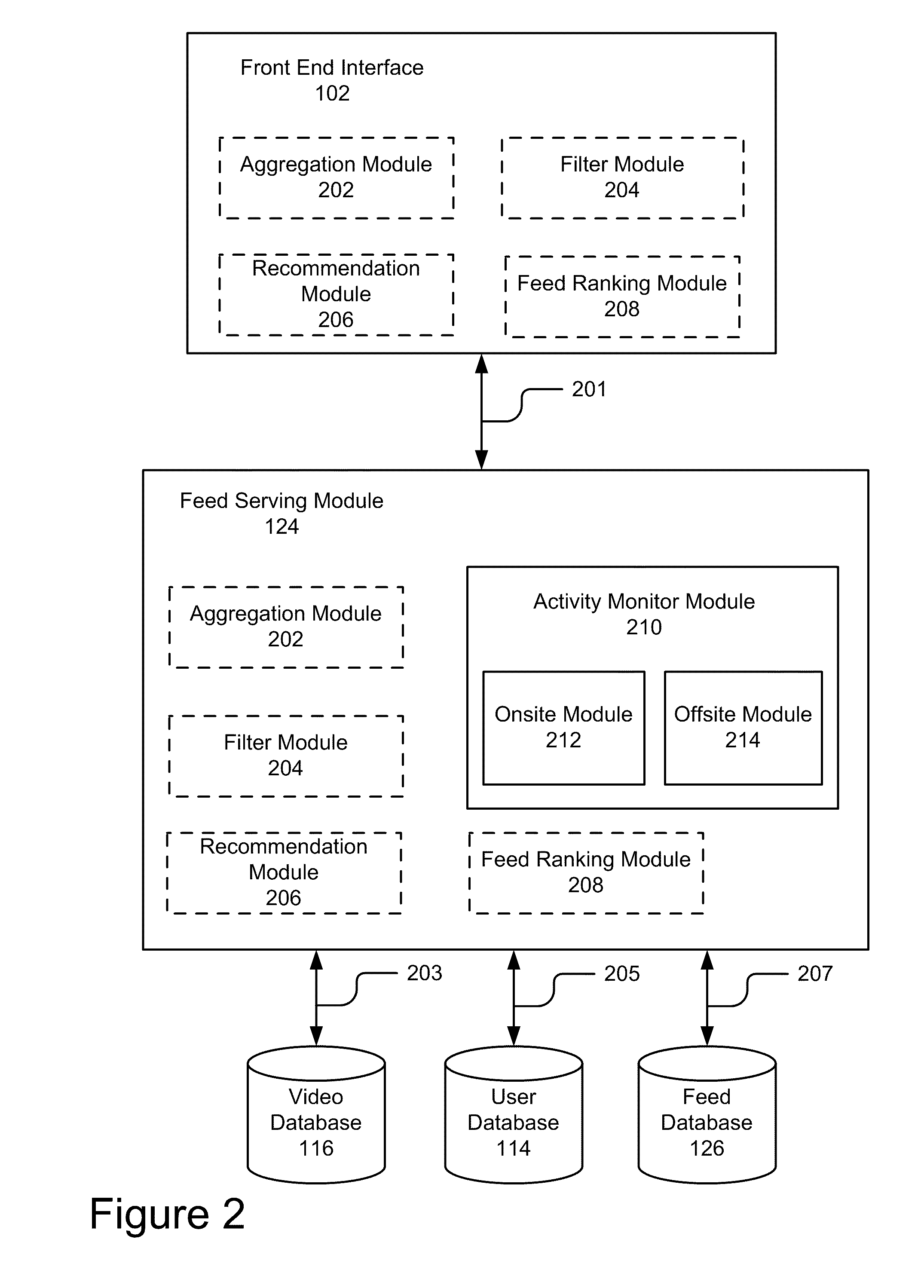

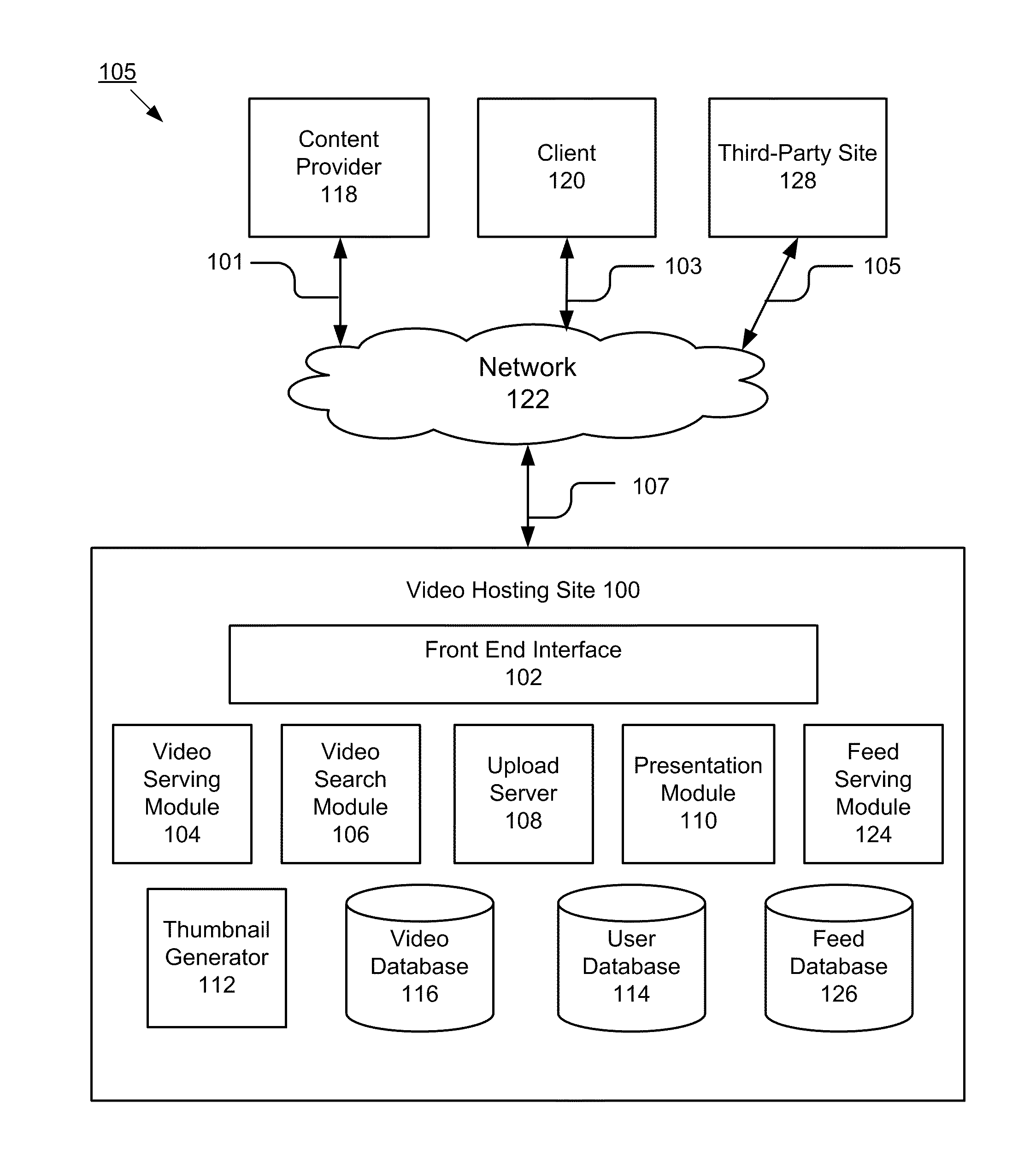

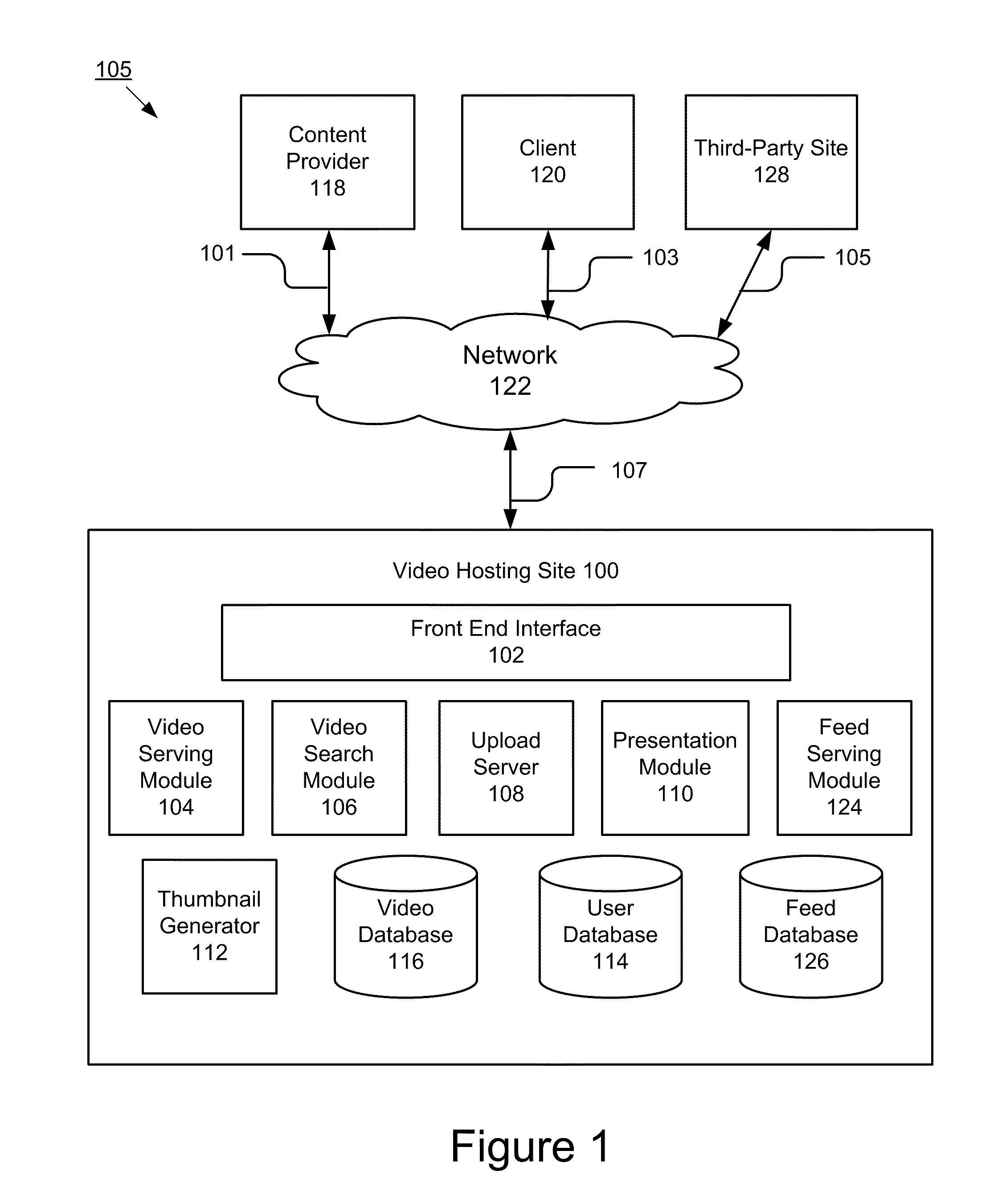

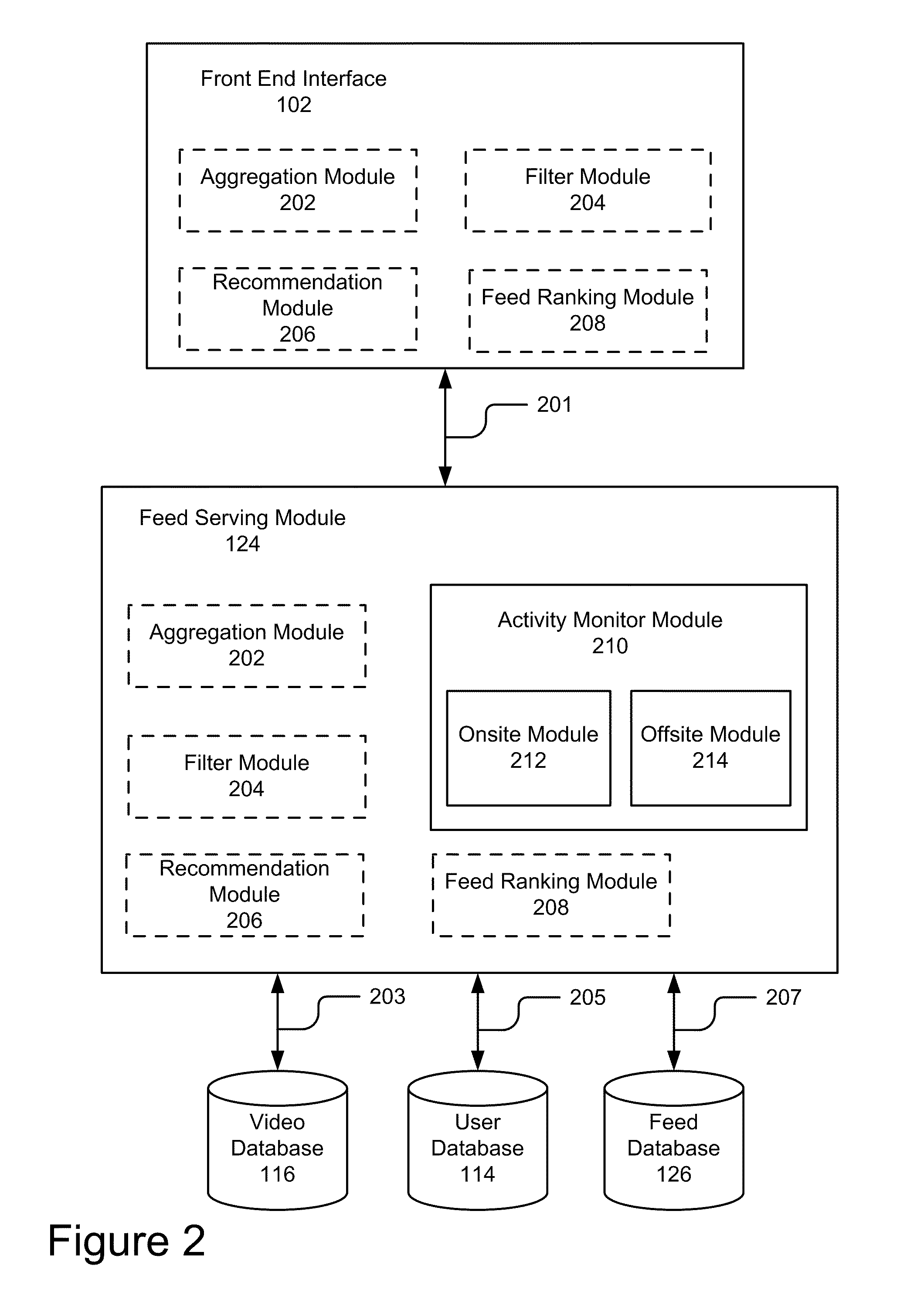

Organizing social activity information on a site

A system and method for organizing social activity information on a website is disclosed. The system comprises a feed serving module and a presentation module. The feed serving module is configured to receive social activity information of at least a first user from at least one third-party source. The feed serving module aggregates the social activity information to form aggregated social activity information. The presentation module is communicatively coupled to the feed serving module and is configured to receive the aggregated social activity information from the feed serving module. The presentation module generates a feed display associated with the aggregated social activity information and sends the feed display to a client for display to a second user.

Owner:GOOGLE LLC

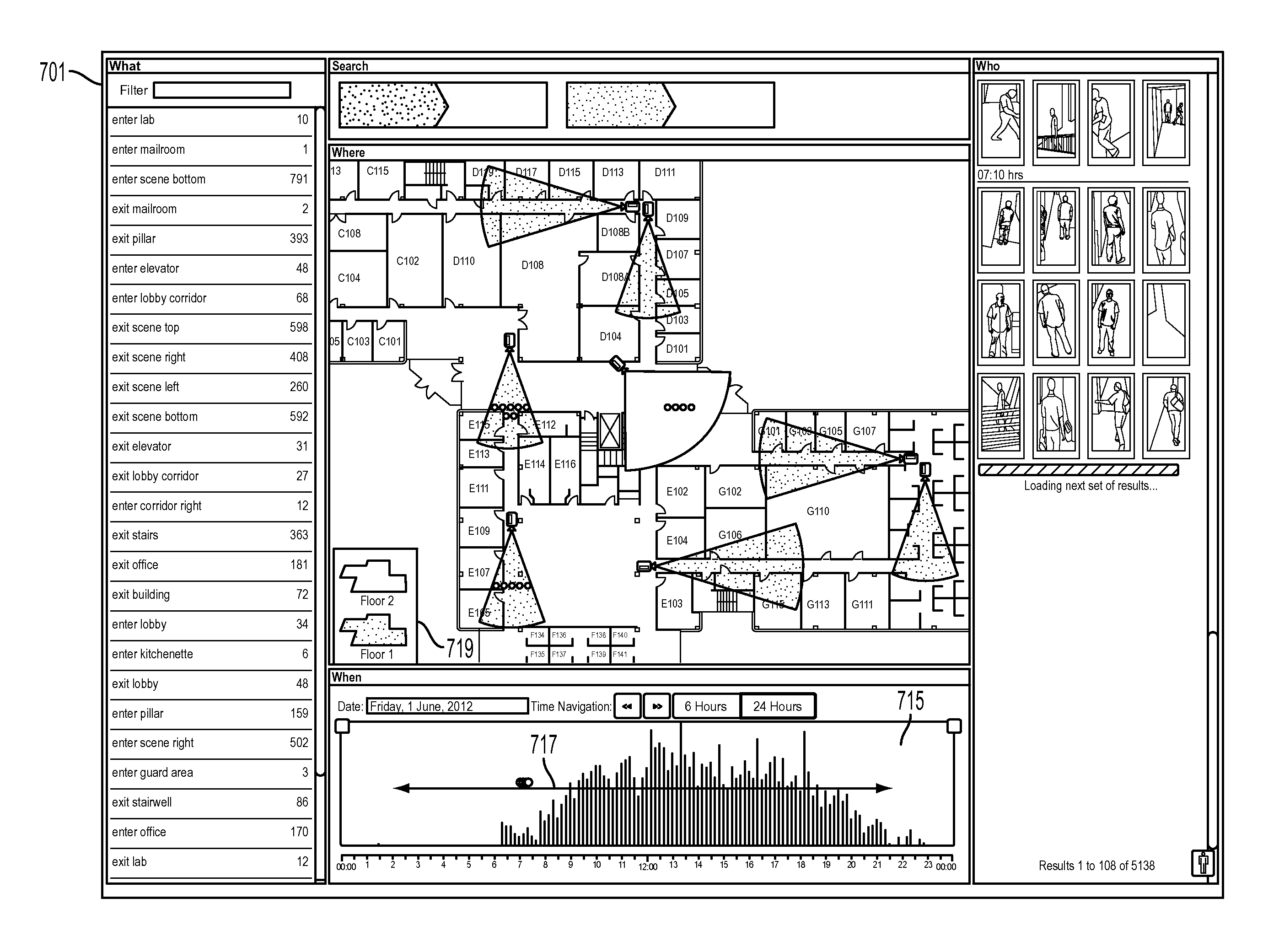

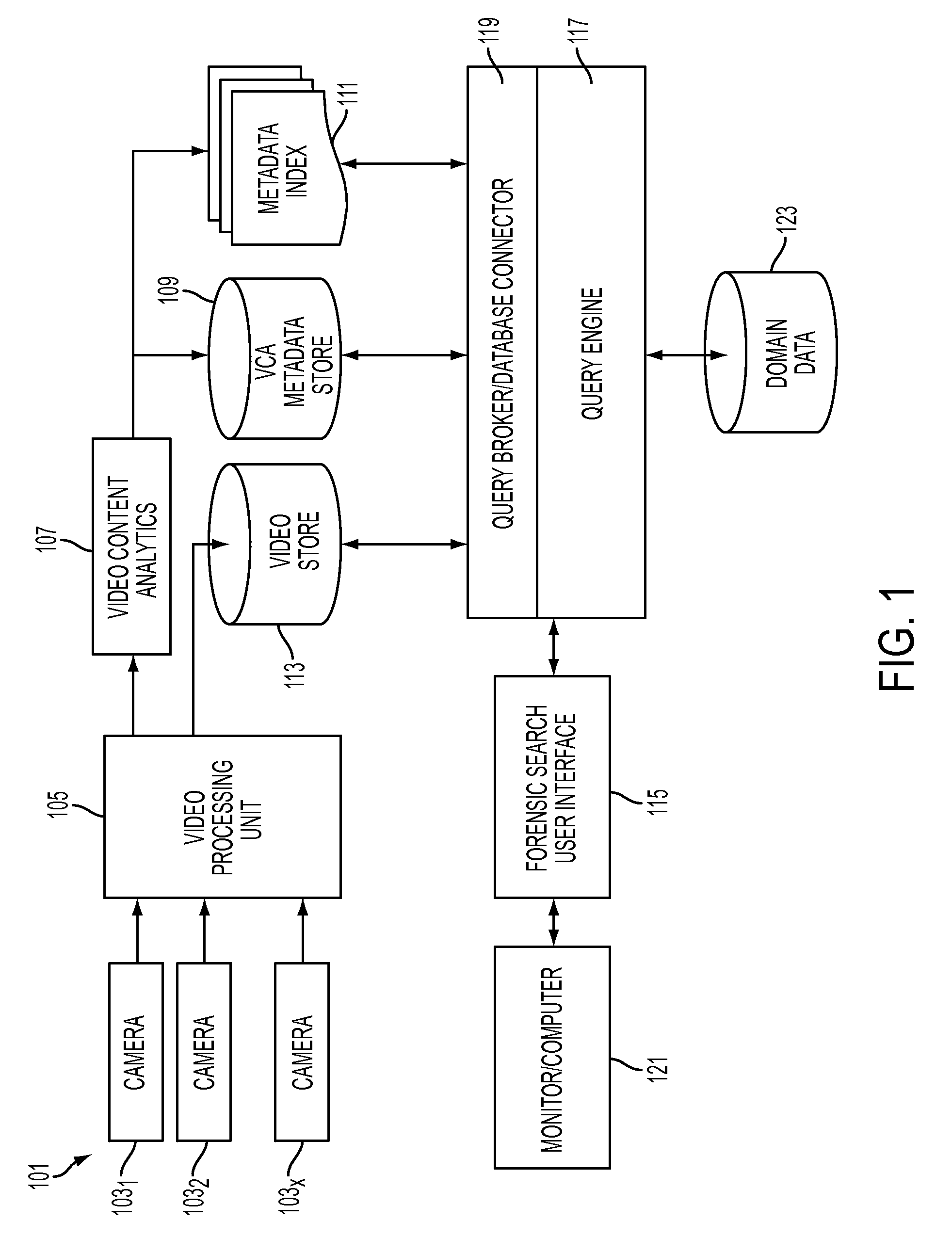

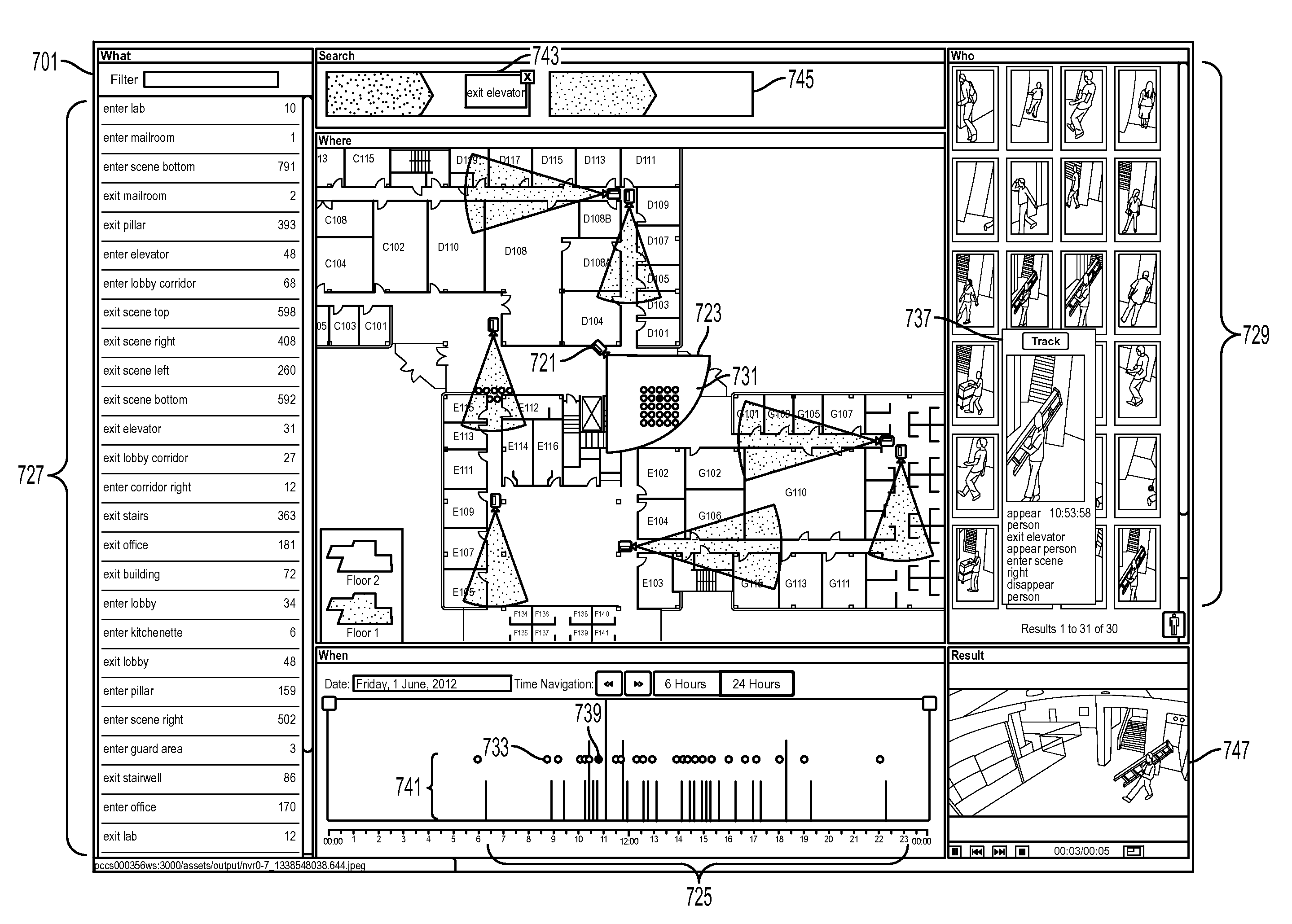

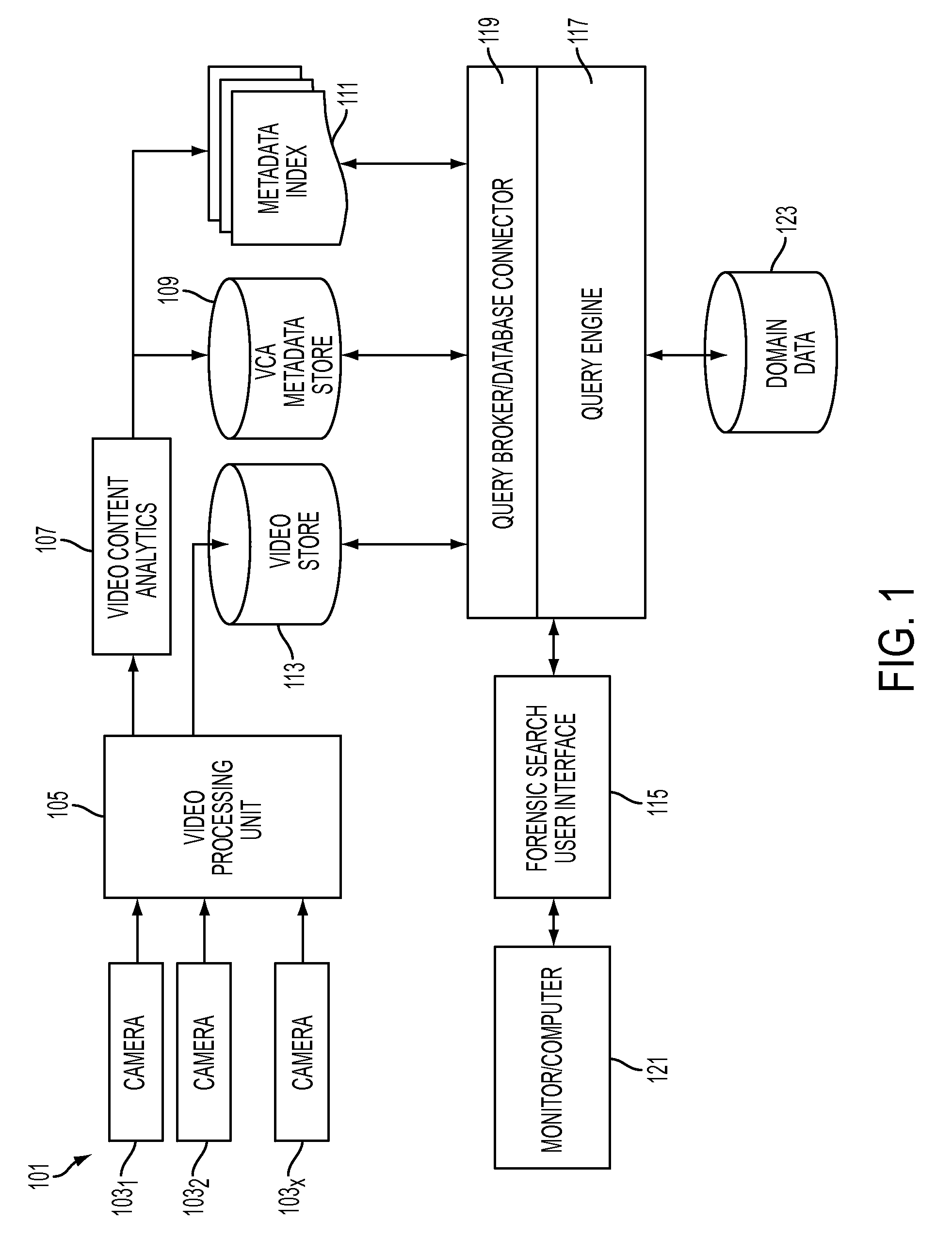

Method and user interface for forensic video search

Active; US20130091432A1; Easy search; Video data querying; Video data browsing/visualisation; Computer graphics (images); Low complexity

A forensic video search user interface is disclosed that accesses databases of stored video event metadata from multiple camera streams and facilitates the workflow of search of complex global events that are composed of a number of simpler, low complexity events.

Owner:SIEMENS AG

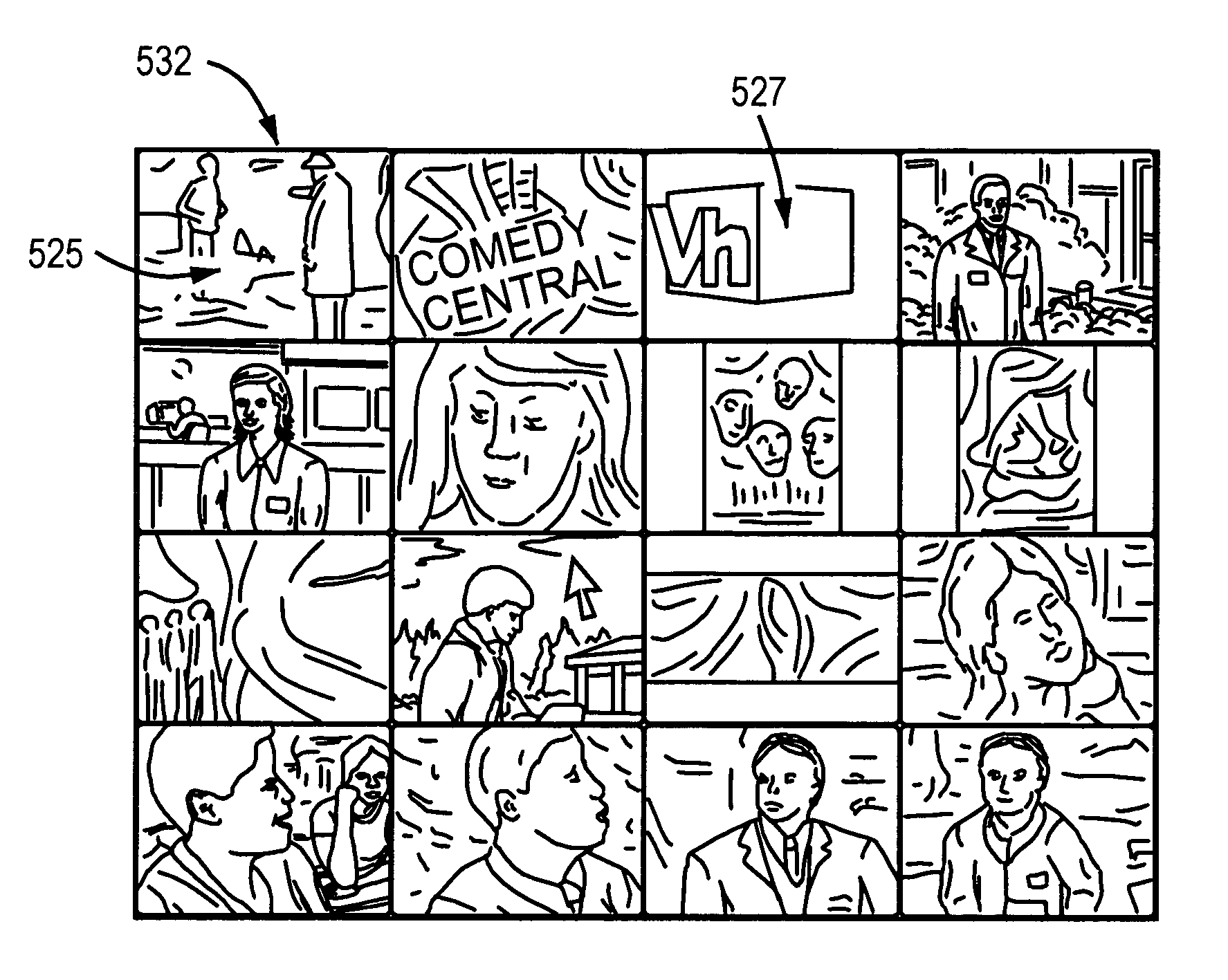

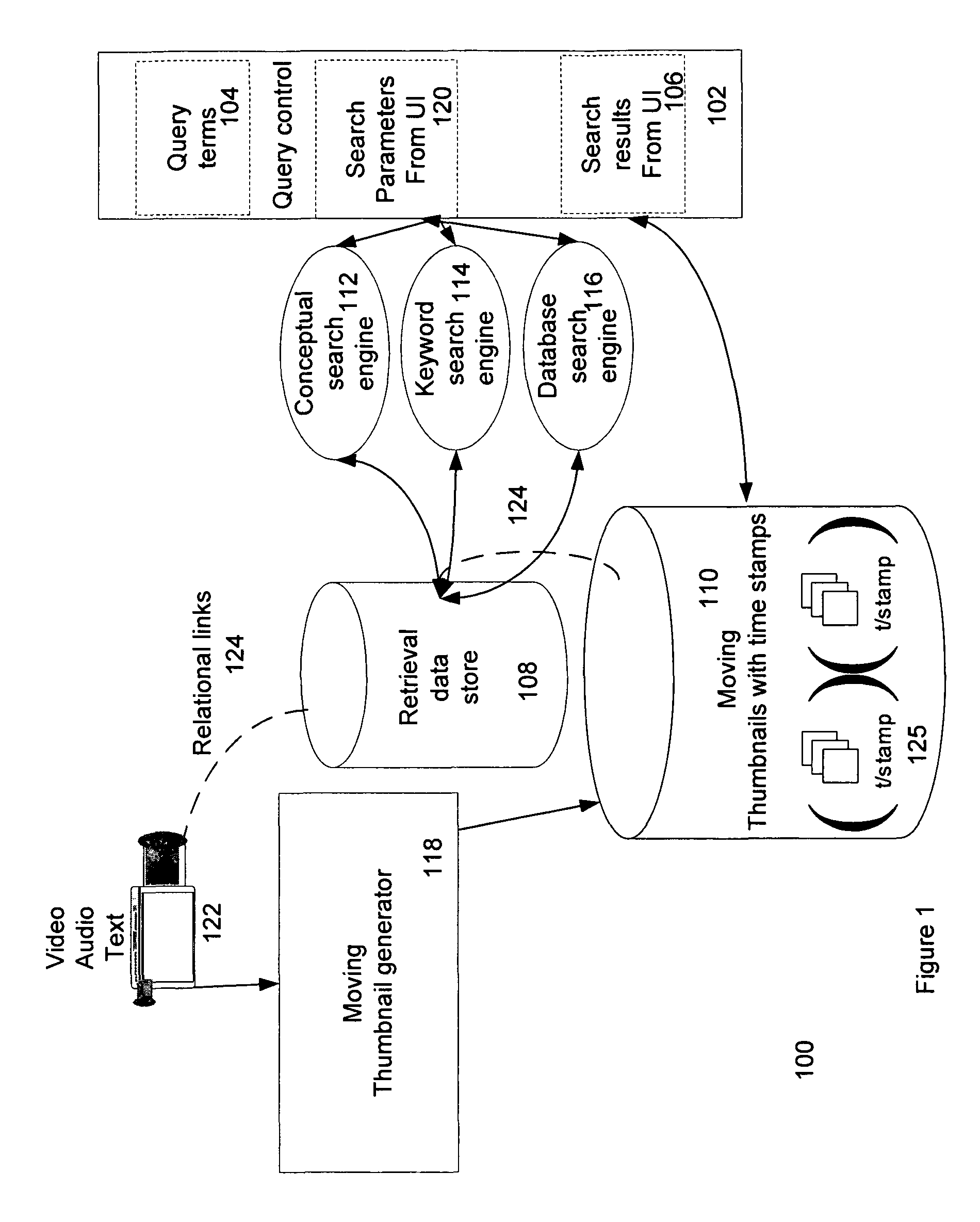

Various methods and apparatuses for moving thumbnails

Active; US8078603B1; Video data browsing/visualisation; Digital data processing details; Thumbnail; Moving frame

Various methods, apparatuses, and systems are described for a moving thumbnail generator. The moving thumbnail generator generates one or more moving thumbnails that are visually and aurally representative of the content that takes place in an associated original video file. Each of the moving thumbnails has two or more moving frames derived from its associated original video file. Each moving thumbnail is stored with a relational link back to the original video file so that the moving thumbnail can be used as a linkage back to the original video file.

Owner:RHYTHMONE

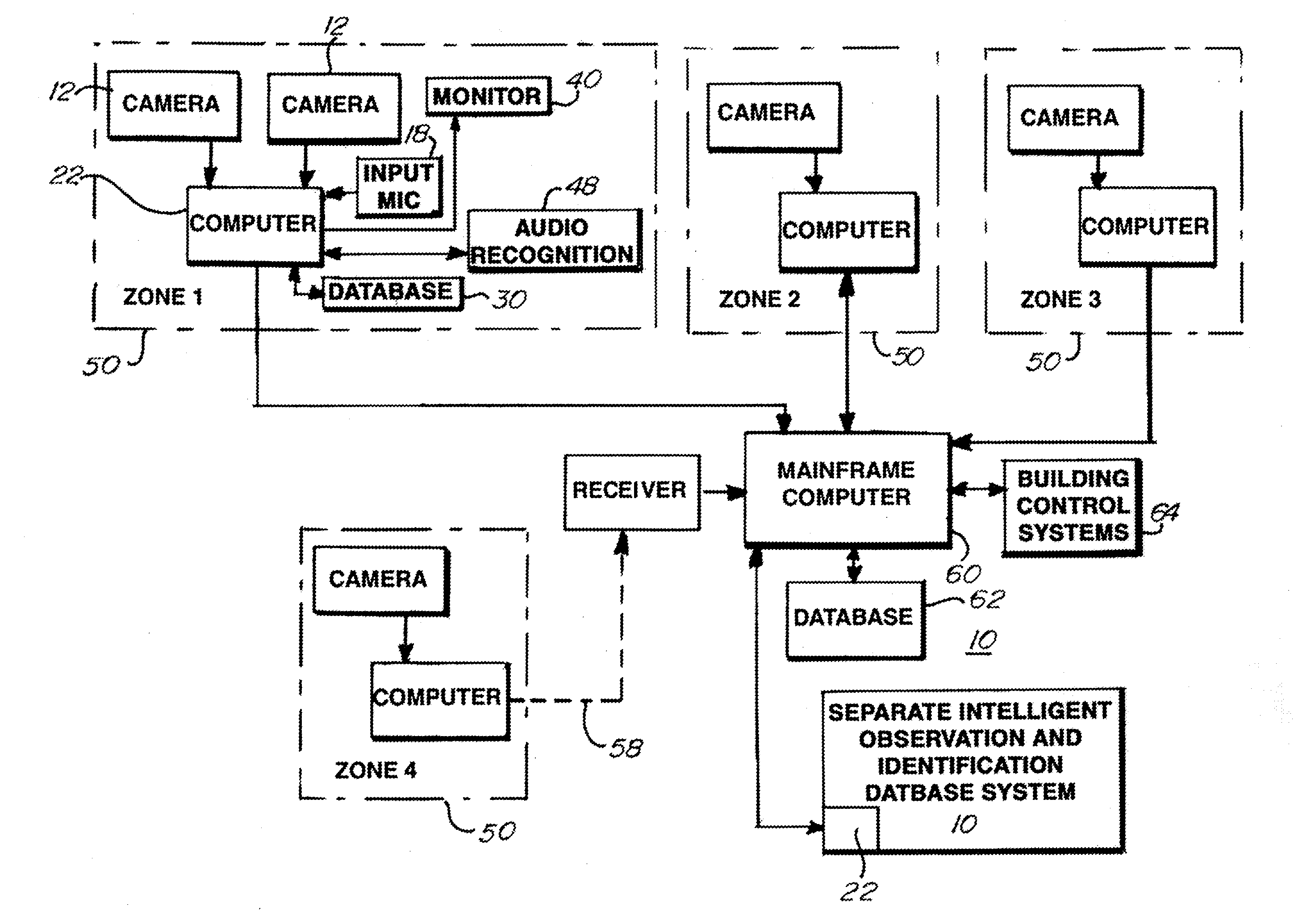

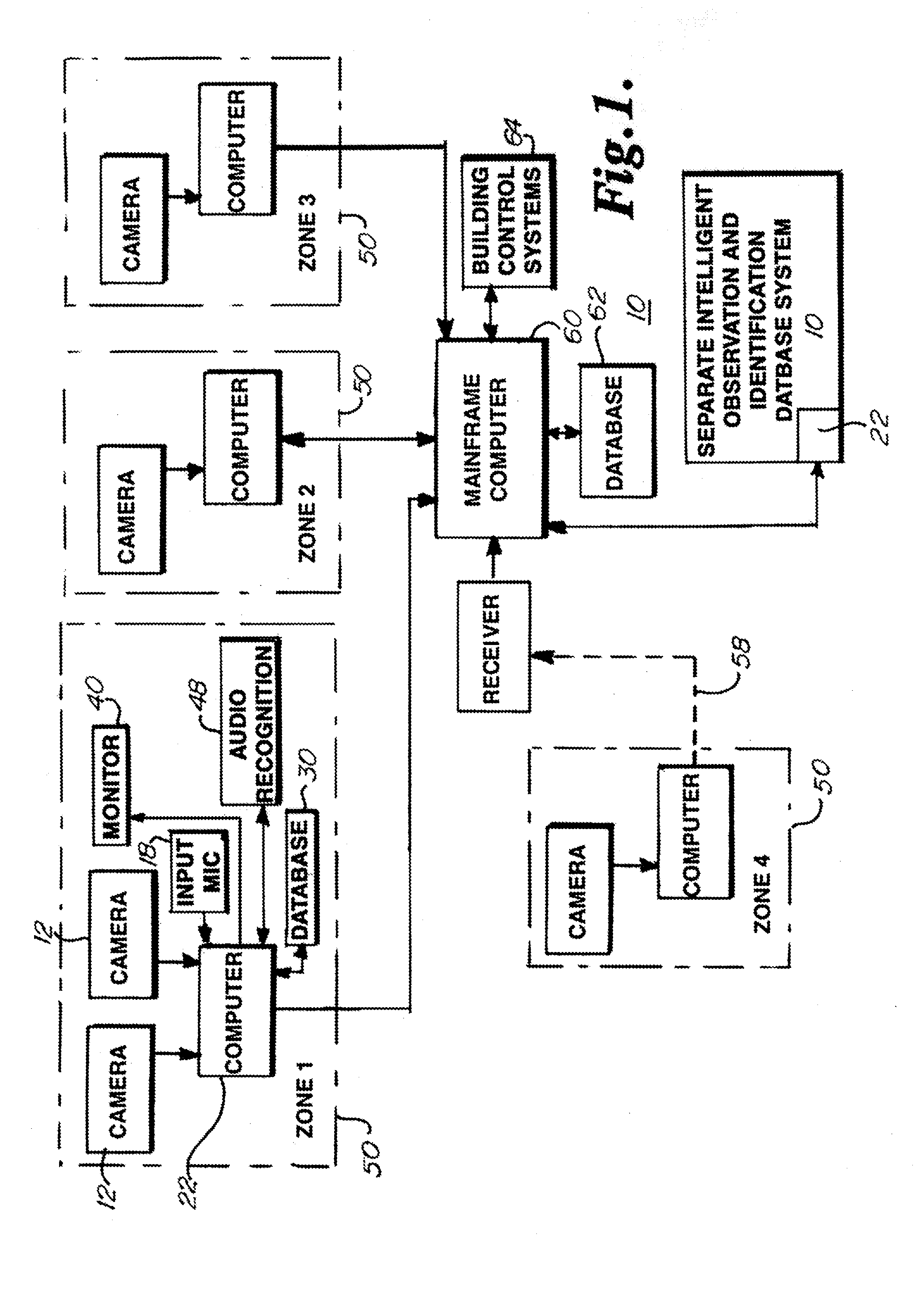

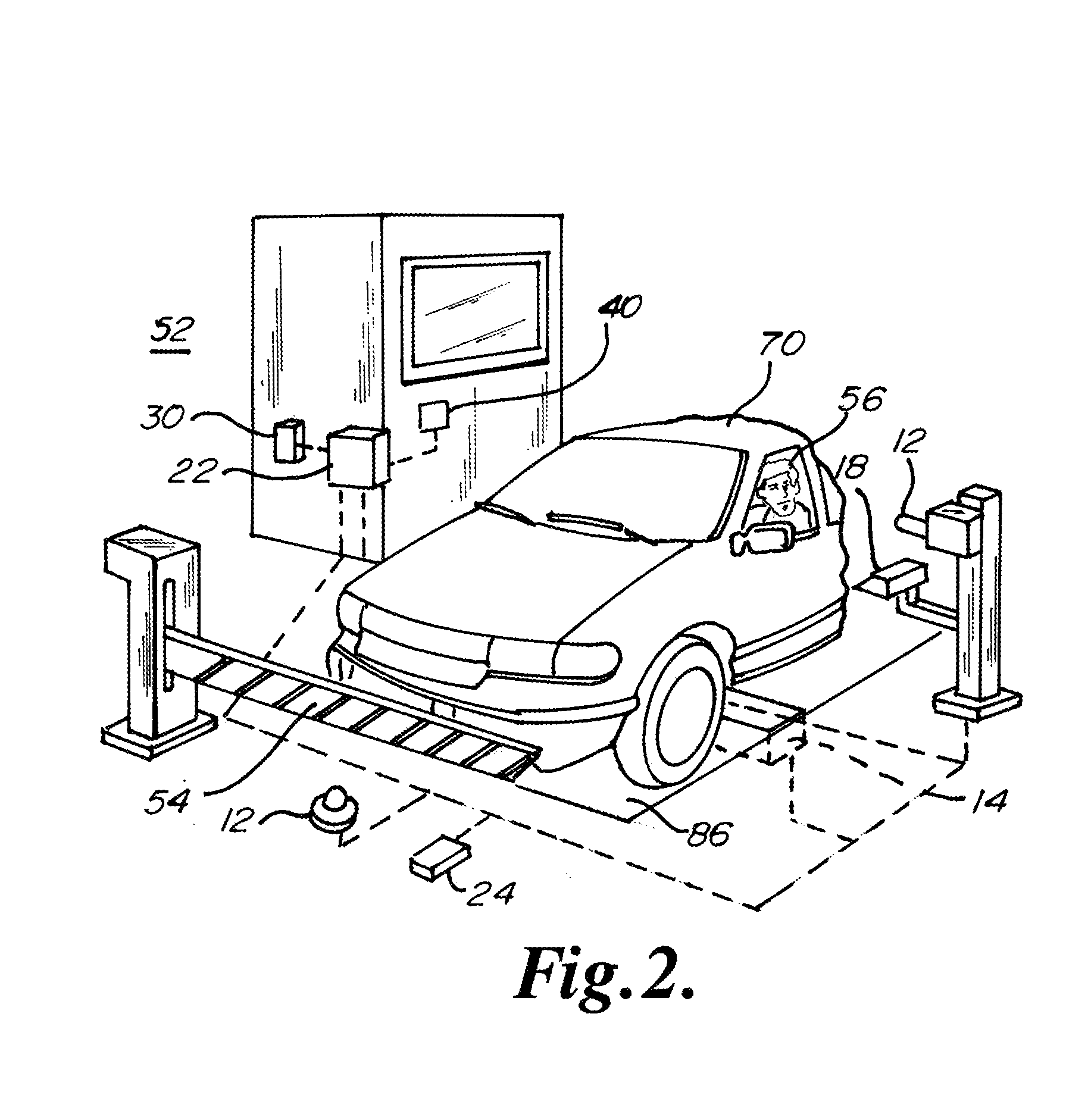

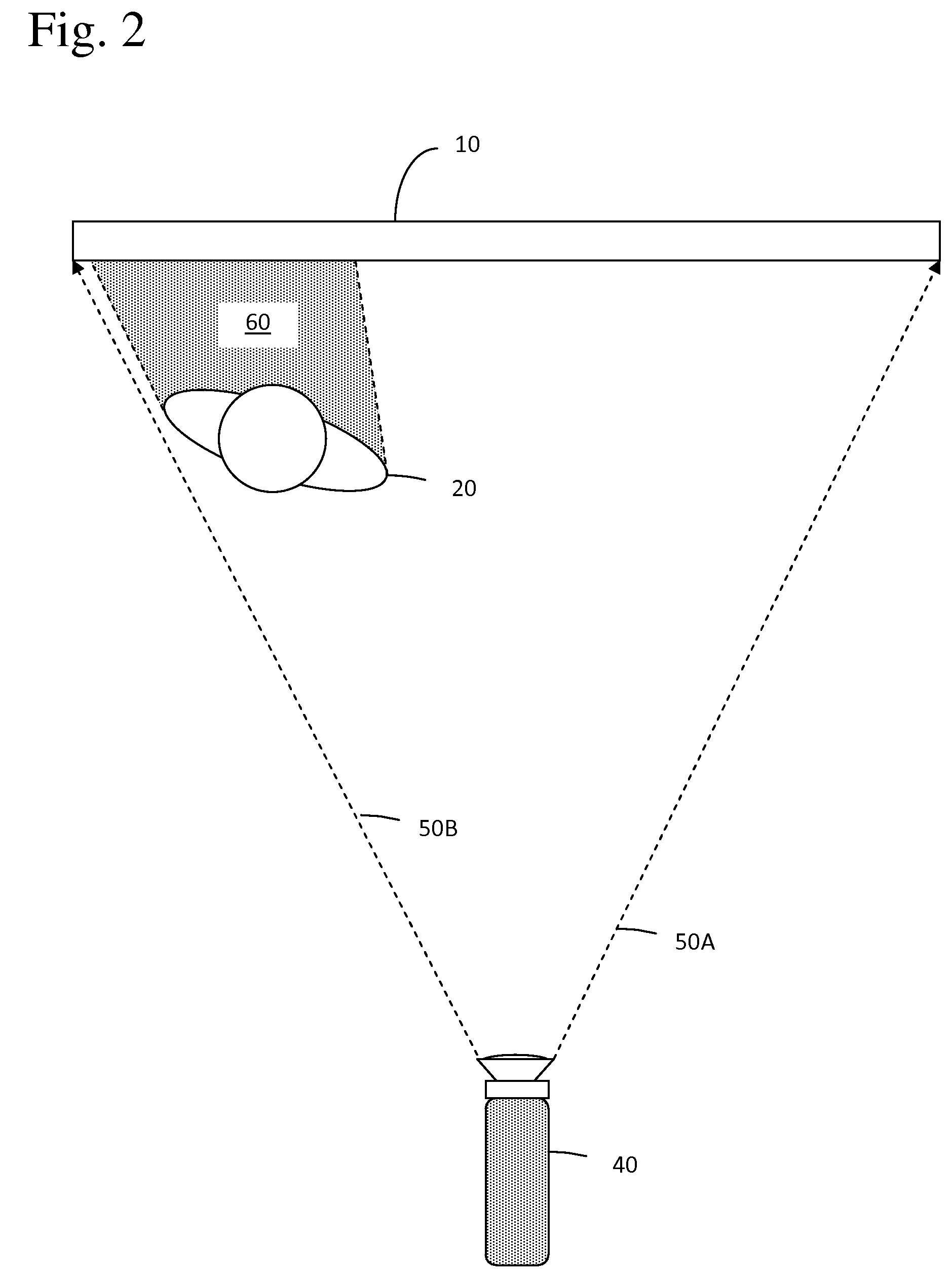

Intelligent Observation And Identification Database System

An intelligent video / audio observation and identification database system may define a security zone or group of zones. The system may identify vehicles and individuals entering or exiting the zone through image recognition of the vehicle or individual as compared to prerecorded information stored in a database. The system may alert security personnel as to warrants or other information discovered pertaining to the recognized vehicle or individual resulting from a database search. The system may compare images of a suspect vehicle, such as an undercarriage image, to standard vehicle images stored in the database and alert security personnel as to potential vehicle overloading or foreign objects detected, such as potential bombs. The system may further learn the standard times and locations of vehicles or individuals tracked by the system and alert security personnel upon deviation from standard activity.

Owner:FEDERAL LAW ENFORCEMENT DEV SERVICES

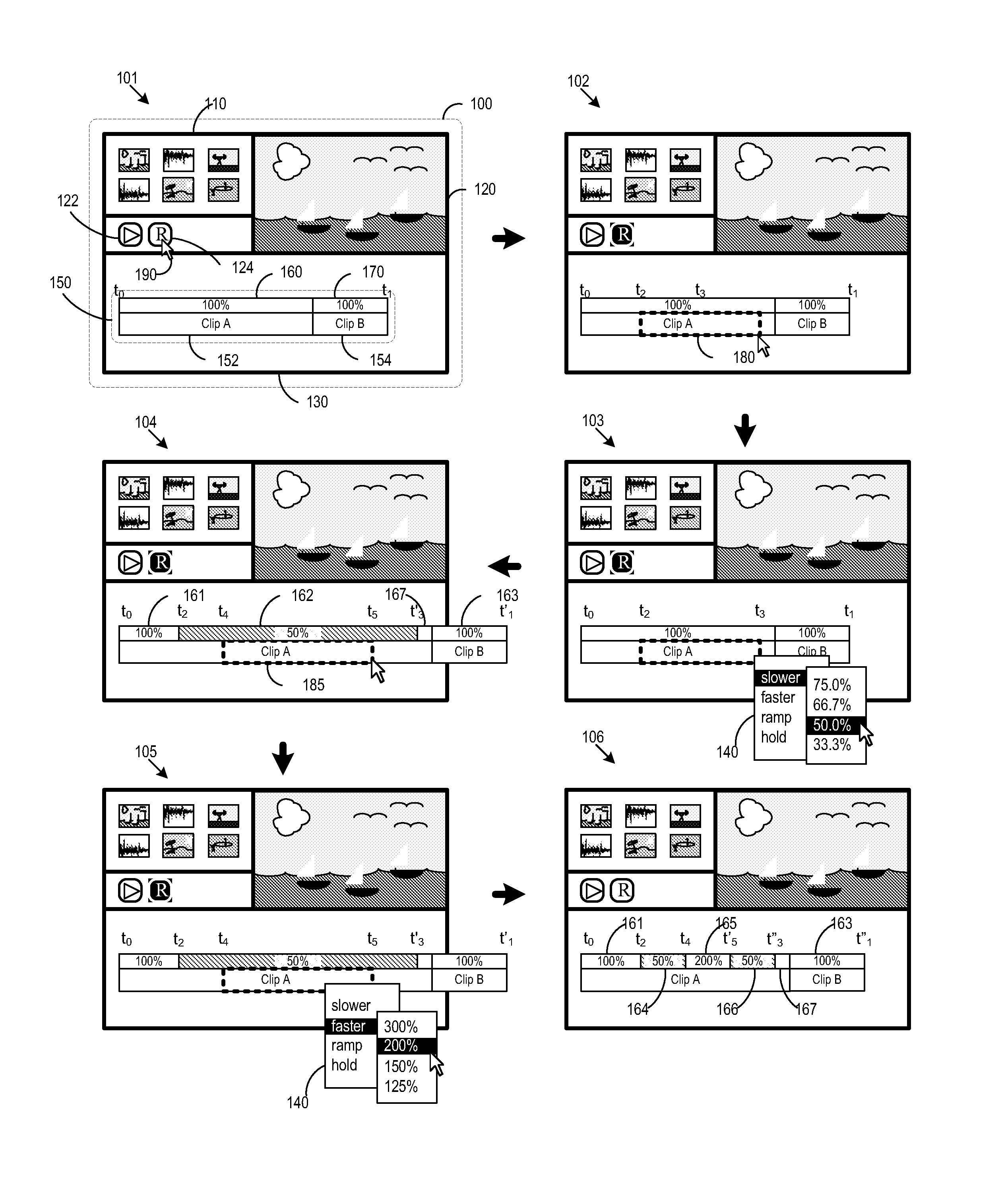

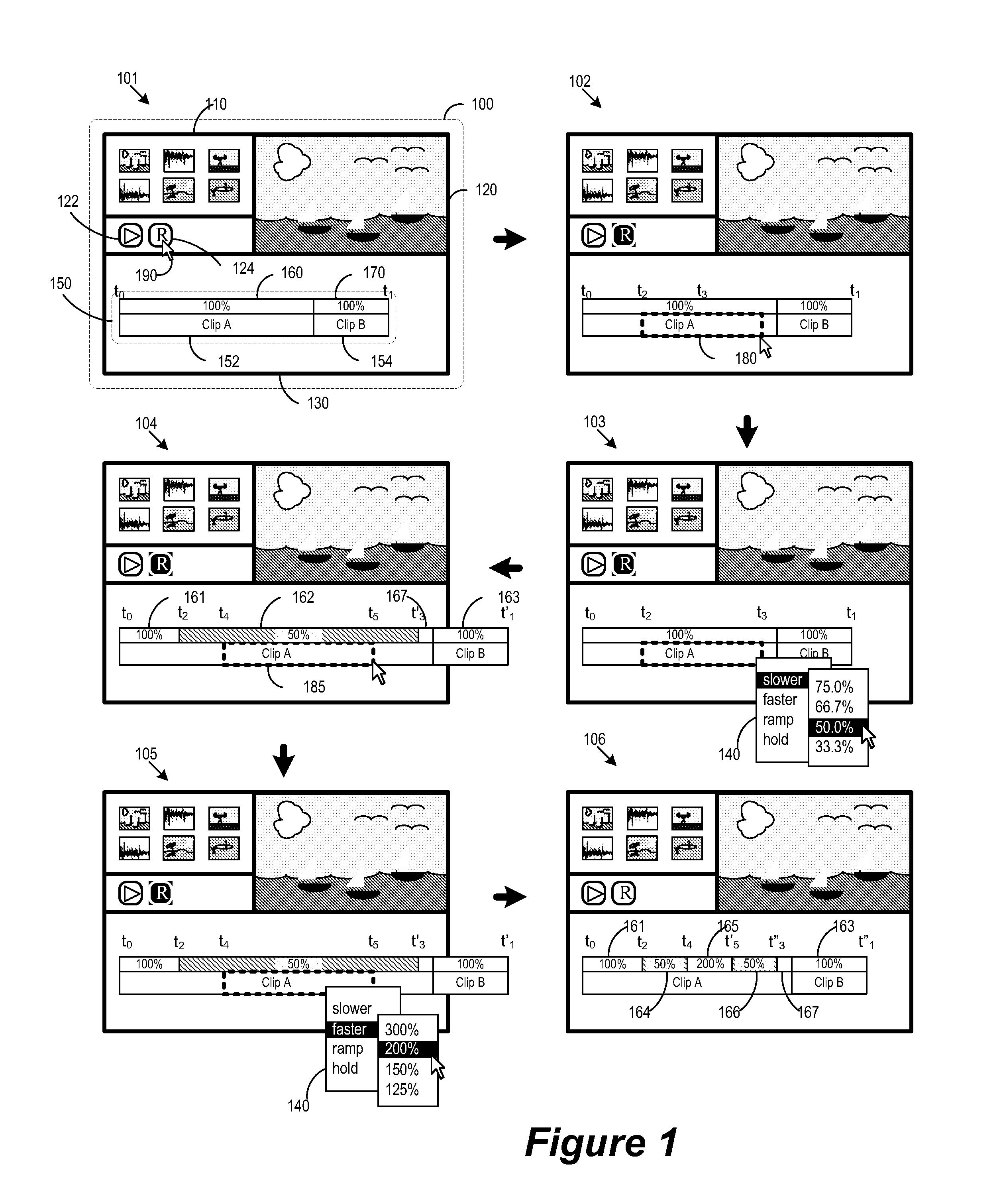

Retiming media presentations

Active; US20120210228A1; Quick effect; Faster rate; Video data browsing/visualisation; Electronic editing digitised analogue information signals; Computer graphics (images); Slow speed

A novel method for retiming a portion of a media content (e.g., audio data, video data, audio and video data, etc.) in a media-editing application is provided. The media editing application includes a user interface for defining a range in order to select a portion of the media content. The media editing application performs retiming by applying a speed effect to the portion of the media content selected by the defined range. For a faster speed effect, the media editing application retimes the selected portion of the media content by sampling the media content at a faster rate. For a slower speed effect, the media editing application retimes the selected portion of the media content by sampling the content at a slower rate.

Owner:APPLE INC
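
The faster and slower sampling described in the retiming abstract above can be illustrated with a minimal sketch. It assumes the media is a plain list of frames and that retiming is simple nearest-frame resampling; the function name `retime` and its parameters are hypothetical and are not taken from the patent.

```python
def retime(frames, start, end, speed):
    """Resample frames[start:end] by `speed`; frames outside the range are untouched.

    speed > 1 samples the range at a faster rate (fewer output frames),
    speed < 1 samples it at a slower rate (frames are repeated).
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    selected = frames[start:end]
    if not selected:
        return list(frames)
    out_len = max(1, round(len(selected) / speed))
    # Step through the selected range in increments of `speed`,
    # clamping to the last frame of the range.
    resampled = [
        selected[min(int(i * speed), len(selected) - 1)]
        for i in range(out_len)
    ]
    return frames[:start] + resampled + frames[end:]


if __name__ == "__main__":
    clip = list(range(10))           # frame numbers stand in for real frames
    print(retime(clip, 2, 6, 2.0))   # selected range plays twice as fast
    print(retime(clip, 2, 6, 0.5))   # selected range plays at half speed
```

A production editor would interpolate or blend frames and retime audio separately; this sketch only shows how a range selection and a speed factor map to a sampling rate.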

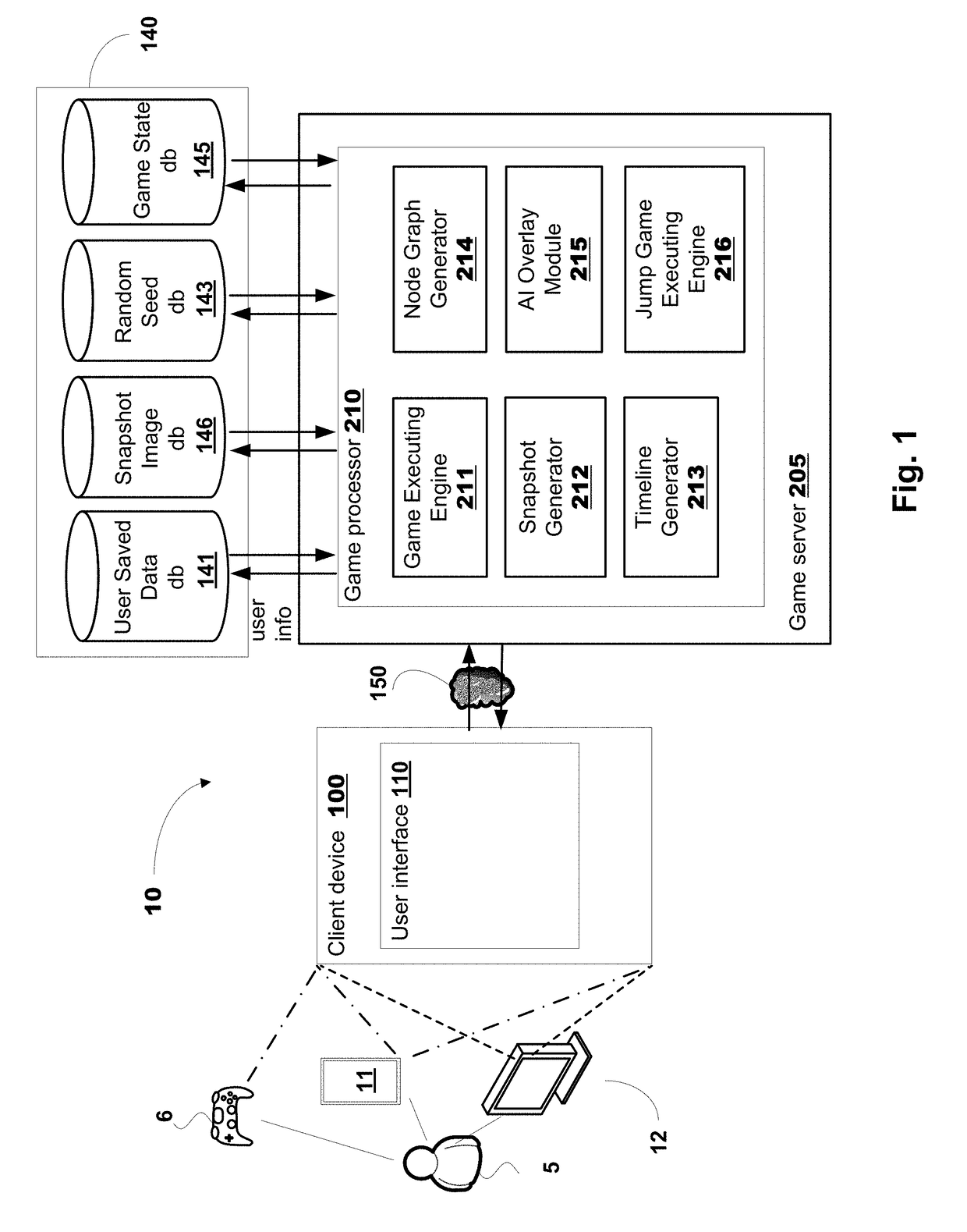

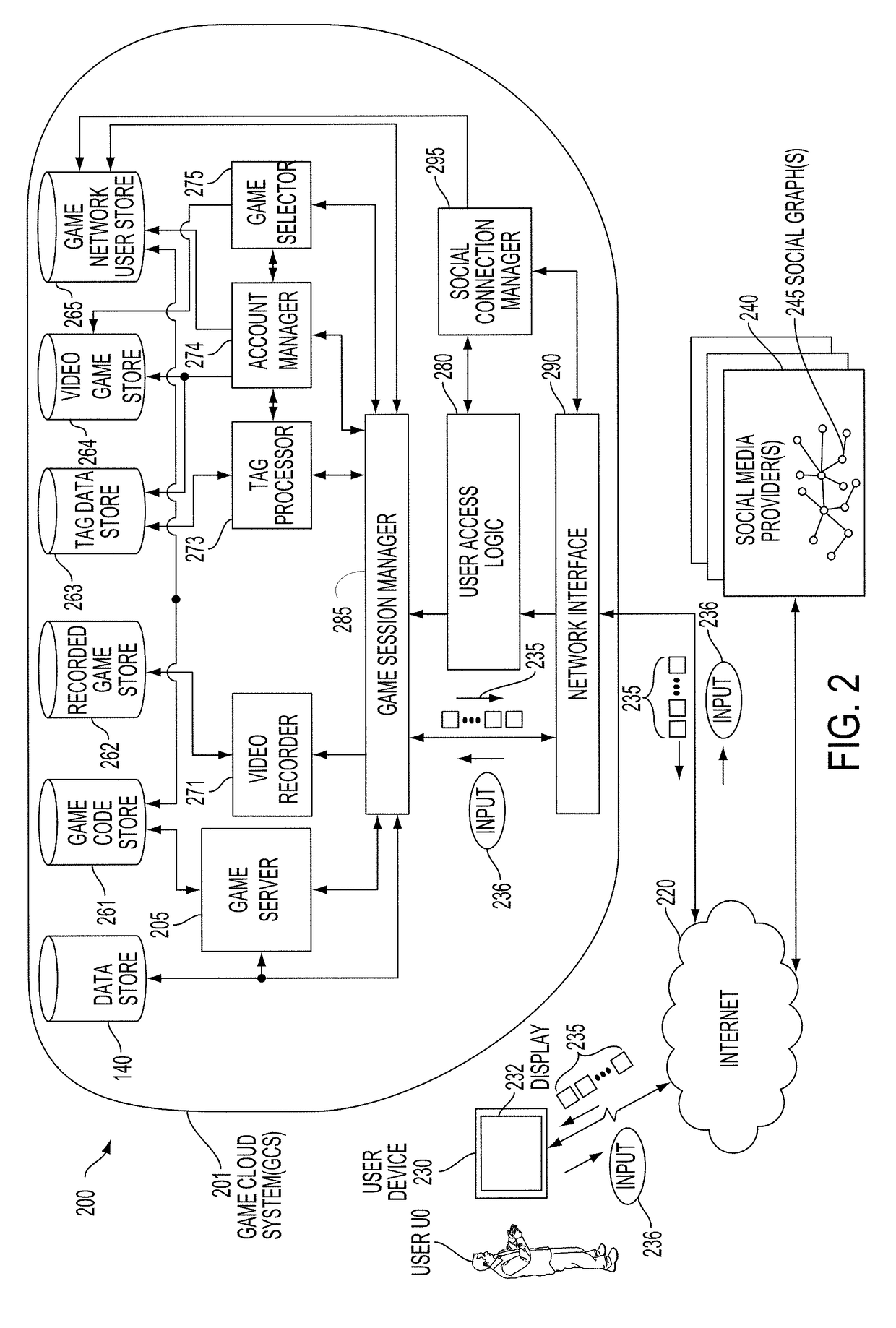

Method and system for accessing previously stored game play via video recording as executed on a game cloud system

A method for gaming, including receiving, from a client device of a user, a selection of a video recording of game play of a player for a gaming application, and streaming the video recording to the client device. The video recording is associated with a snapshot captured at a first point in the recorded game play. Selection of a jump point in the recorded game play is received from the client device. An instance of the gaming application is initiated based on the snapshot to initiate a jump game play. Input commands used to direct the game play and associated with the snapshot are accessed. Image frames are generated based on the input commands for rendering at the client device, the image frames replaying the game play to the jump point. Input commands from the client device are handled beginning from the jump point for the jump game play.

Owner:SONY INTERACTIVE ENTERTAINMENT LLC

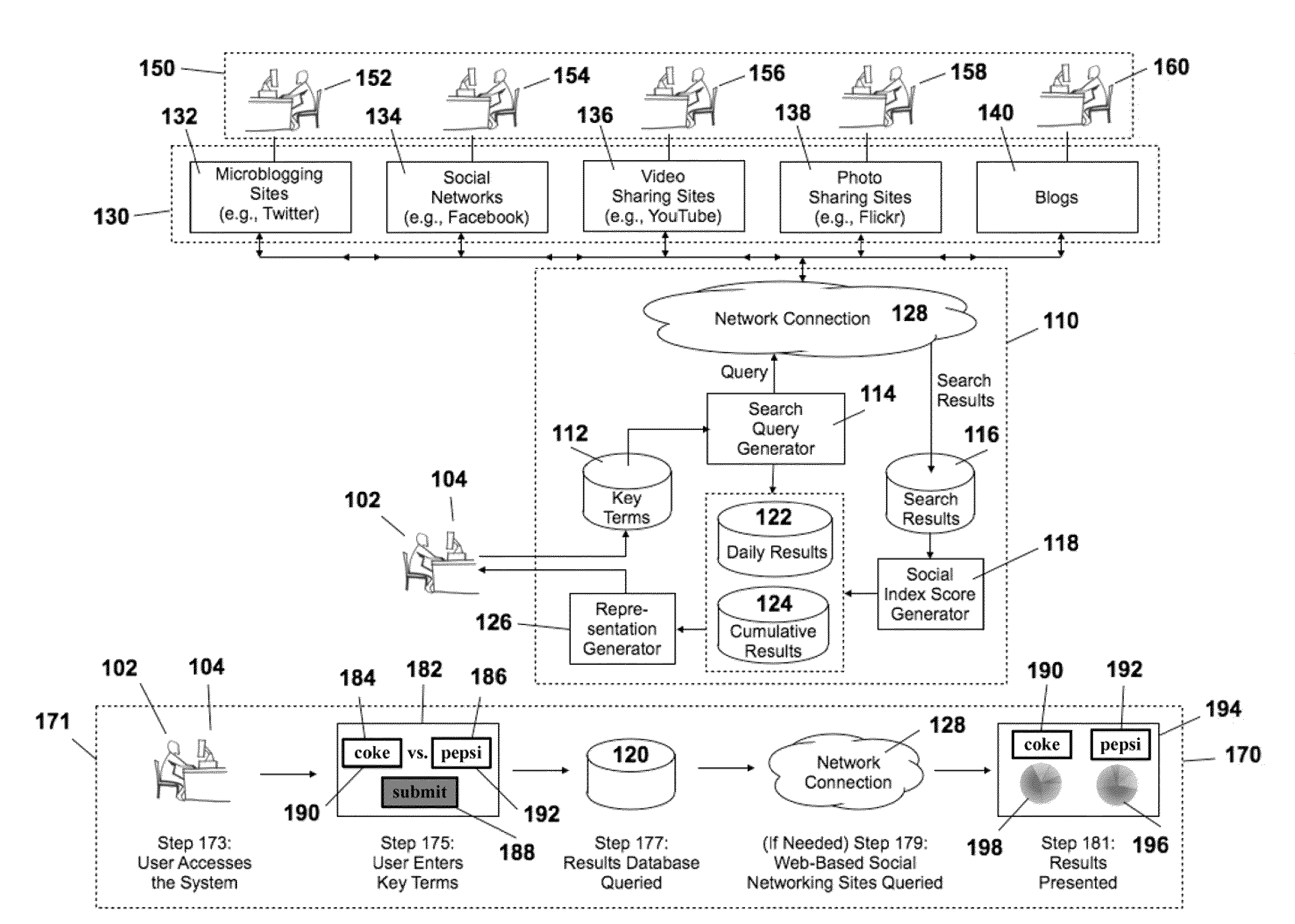

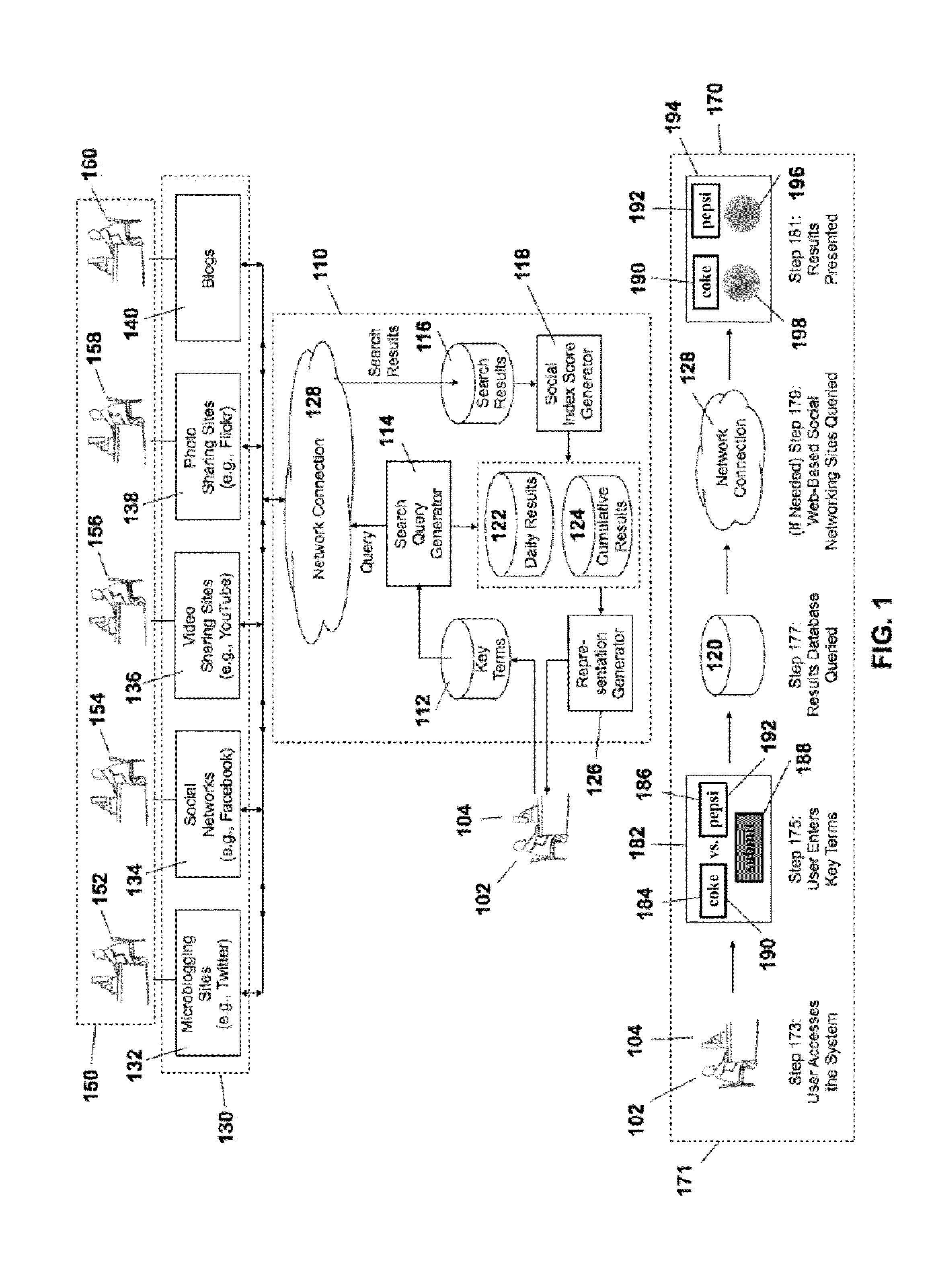

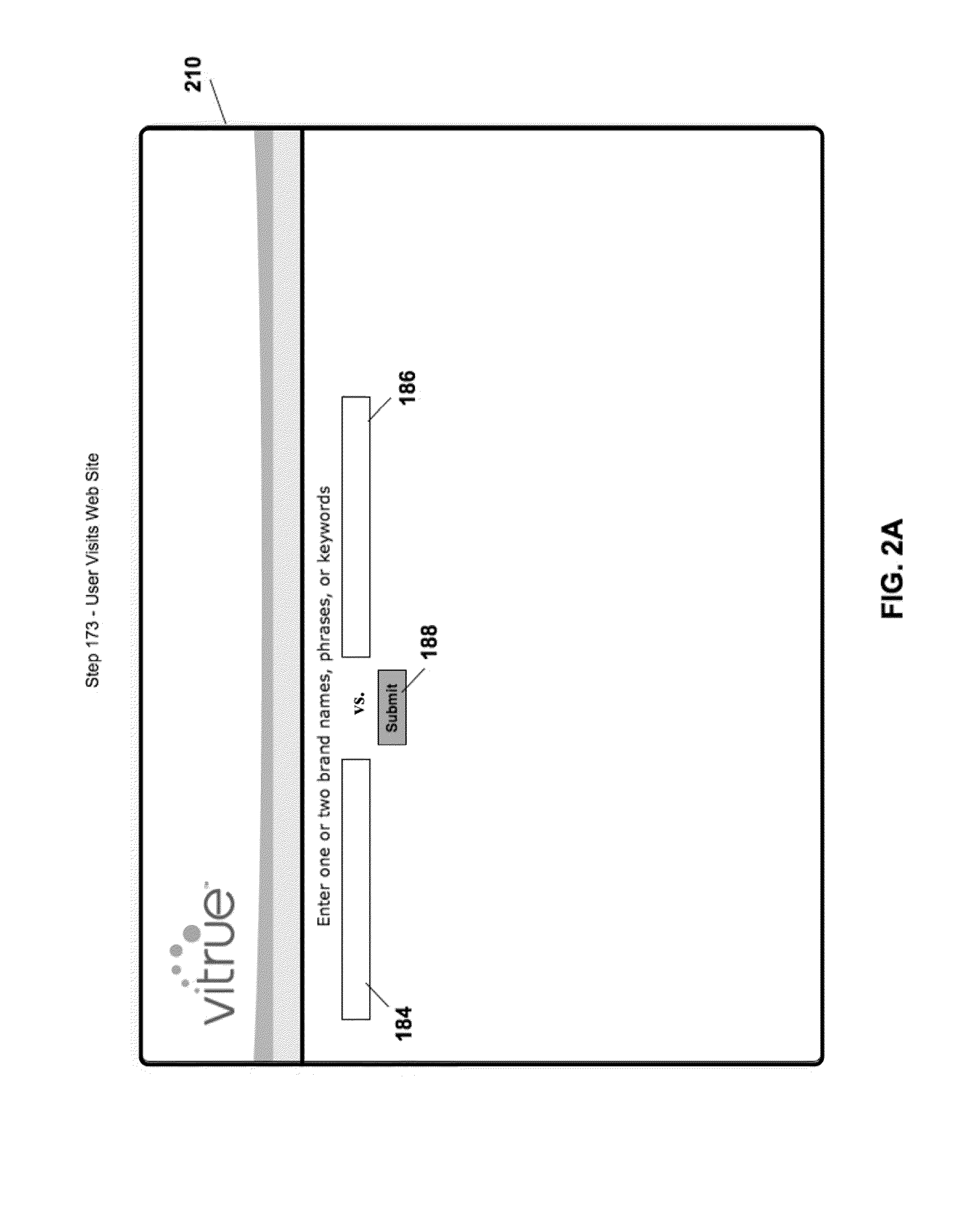

Systems and methods for generating social index scores for key term analysis and comparisons

In one aspect, the present disclosure relates to a method, in a computer network, for generating social index scores associated with key terms within web-based social network sites. Generally, the method comprises the steps of receiving an input of the key terms from a user, generating search queries from the key terms, providing the generated search queries to the web-based social network sites, capturing search results received from the web-based social network sites in response to the provided search queries, generating, from the captured search results, the social index scores using a processing algorithm, storing the generated social index scores in at least one database, and, providing at least one representation of the generated social index scores to the user in one or more of numerical, visual, and printed form.

Owner:ORACLE INT CORP

Organizing social activity information on a site

A system and method for organizing social activity information on a website is disclosed. The system comprises a feed serving module and a presentation module. The feed serving module is configured to receive one or more user inputs for one or more activities associated with the social activity information. The feed serving module aggregates the social activity information based at least in part on the one or more user inputs to form aggregated social activity information. The presentation module is communicatively coupled to the feed serving module and is configured to receive the aggregated social activity information from the feed serving module. The presentation module generates a graphic associated with the aggregated social activity information and sends the graphic to a client.

Owner:GOOGLE LLC

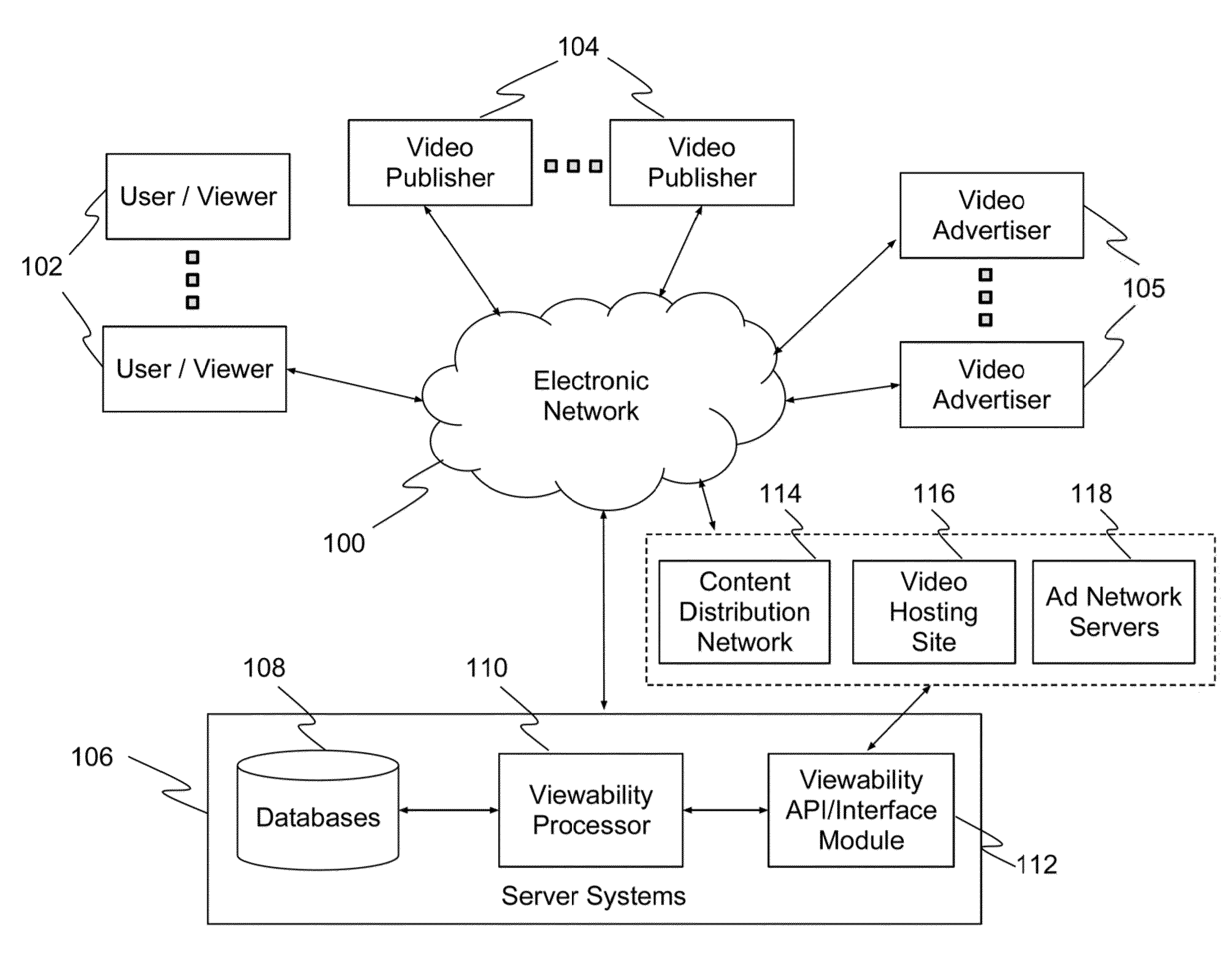

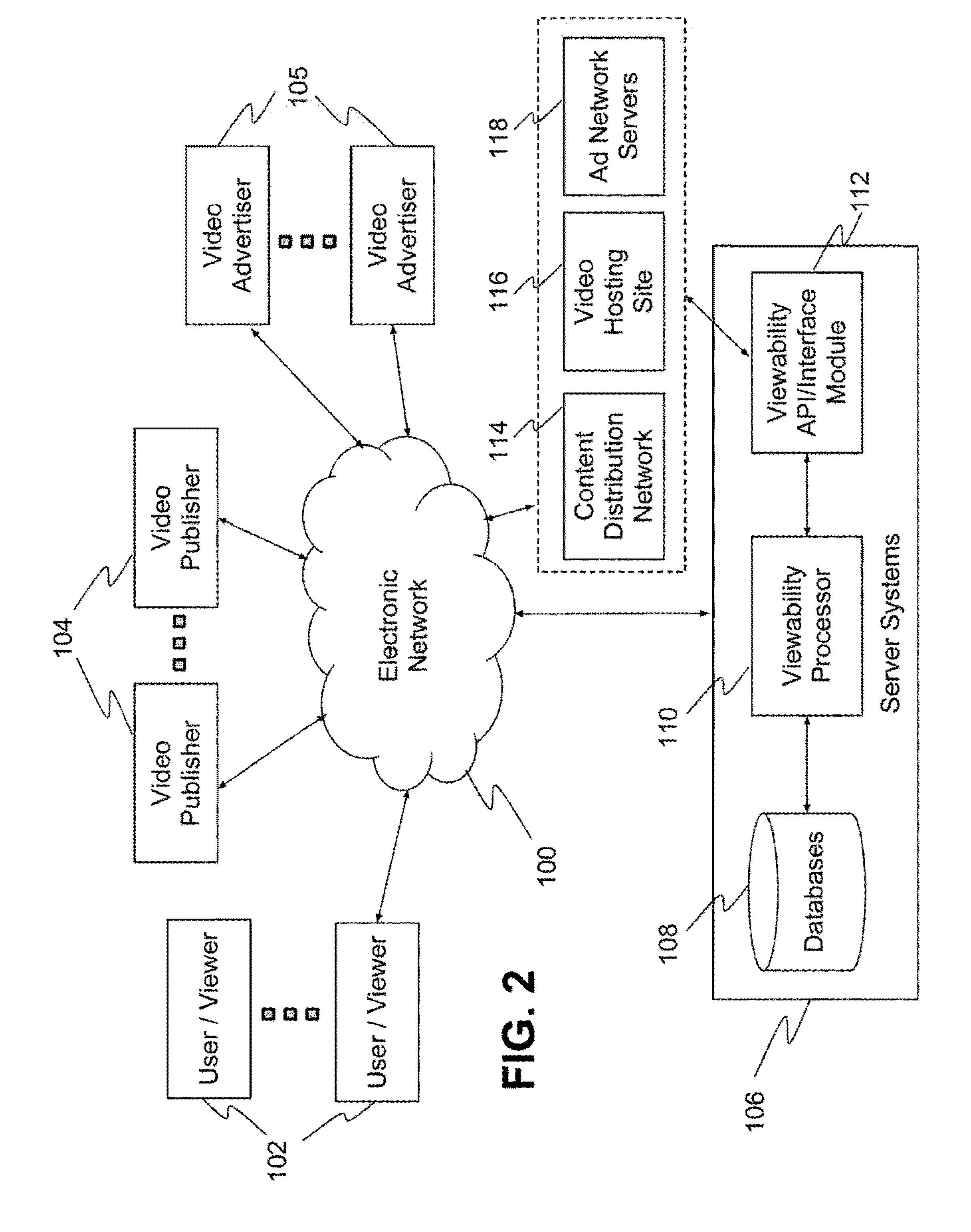

Systems and methods for evaluating online videos

Active; US20140344880A1; Video data browsing/visualisation; Website content management; Uniform resource locator; Web page

Systems and methods for evaluating online videos. One method includes receiving a URL; determining a URL type; detecting whether the URL includes one or more videos; determining at least one of a size of the video, a position of the video on a web page of the web page URL, whether the video is set to autoplay, and whether the video is set to mute; computing a score based on one or more of the size of the video, the position of the video on the web page of the web page URL, whether the video is set to autoplay, and whether the video is set to mute; obtaining at least two frames of at least part of the video, wherein each frame is obtained at one or more predetermined intervals during playback of the video; and classifying each detected video based on the at least two frames.

Owner:INTEGRAL AD SCI

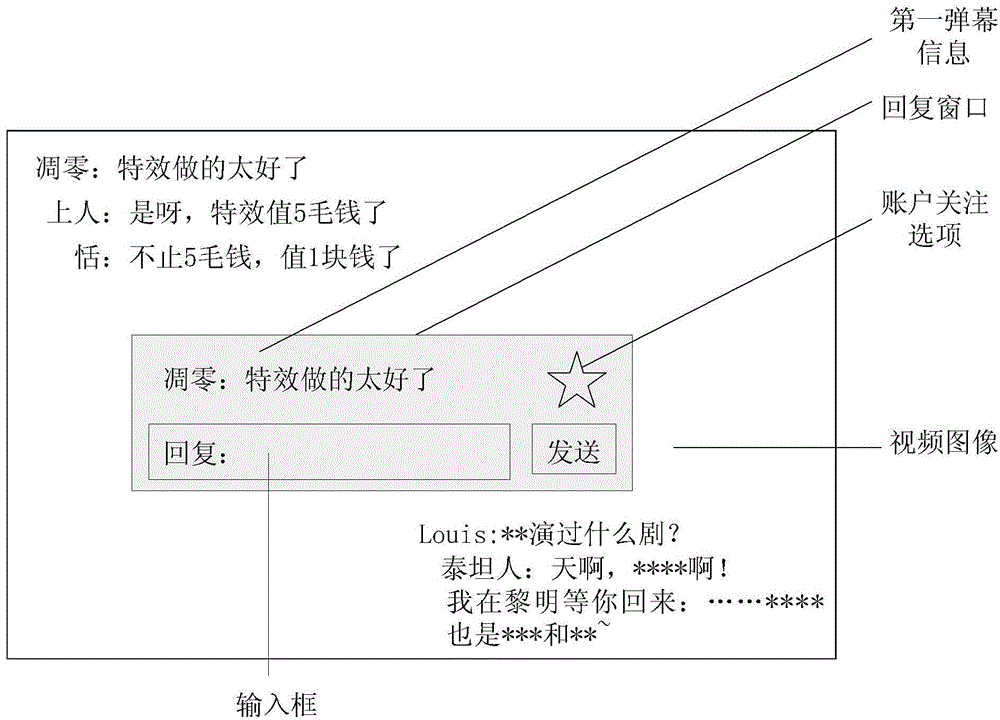

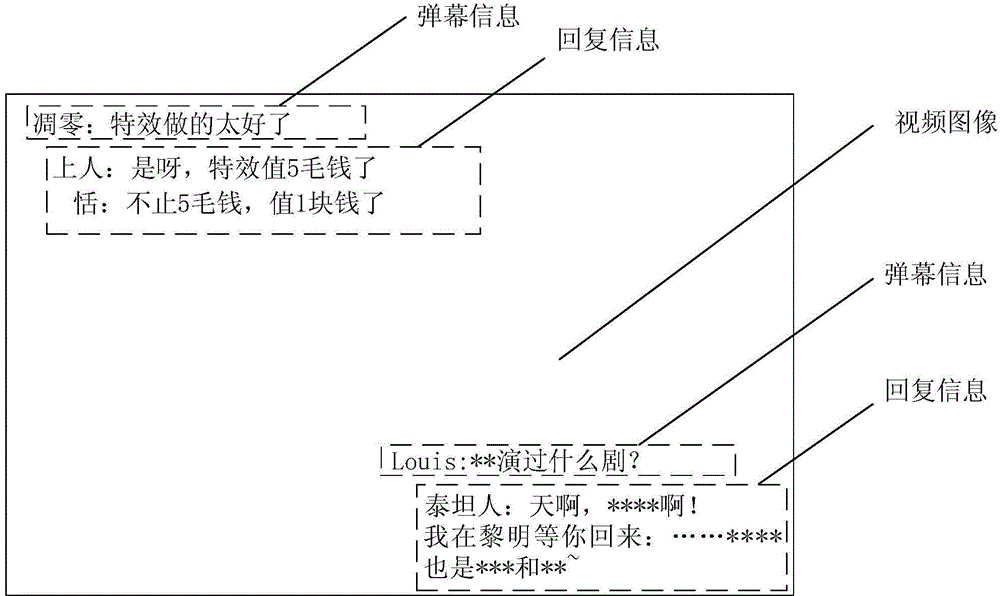

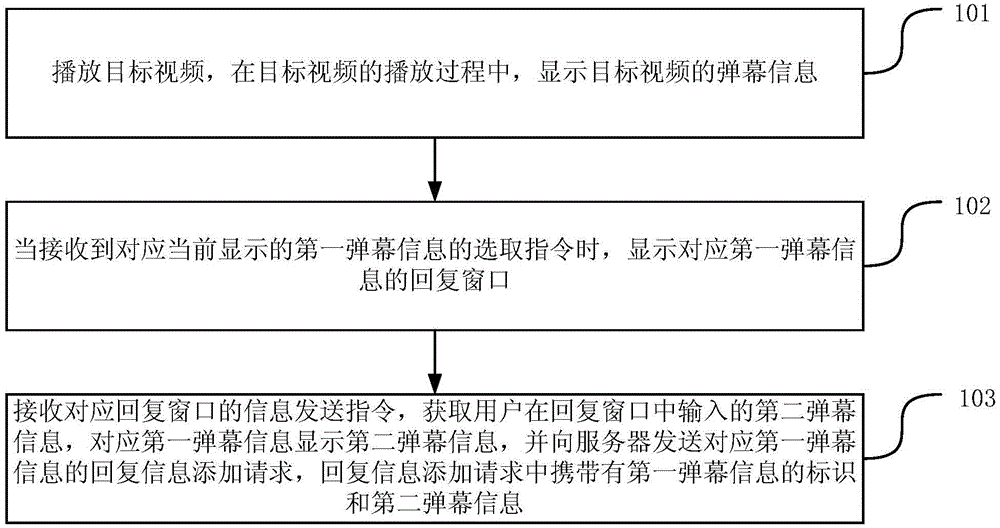

Method and apparatus for displaying screen information

Inactive; CN104869468A; Increase flexibility; Video data browsing/visualisation; Selective content distribution; The Internet; Human–computer interaction

The invention discloses a method and apparatus for displaying screen information, and belongs to the technical field of Internet. The method comprises the following steps: playing a target video, and displaying the screen information of the target video in the playing process of the target video; when a selection instruction corresponding to currently displayed first screen information is received, displaying a reply window corresponding to the first screen information; and receiving an information sending instruction corresponding to the reply window, obtaining second screen information input by a user in the reply window, displaying the second screen information in response to the first screen information, and sending a reply information adding request corresponding to the first screen information to a server, wherein the reply information adding request carries identification of the first screen information and the second screen information. By adopting the method and apparatus provided by the invention, the screen information releasing flexibility of the user can be improved.

Owner:TENCENT TECH (BEIJING) CO LTD

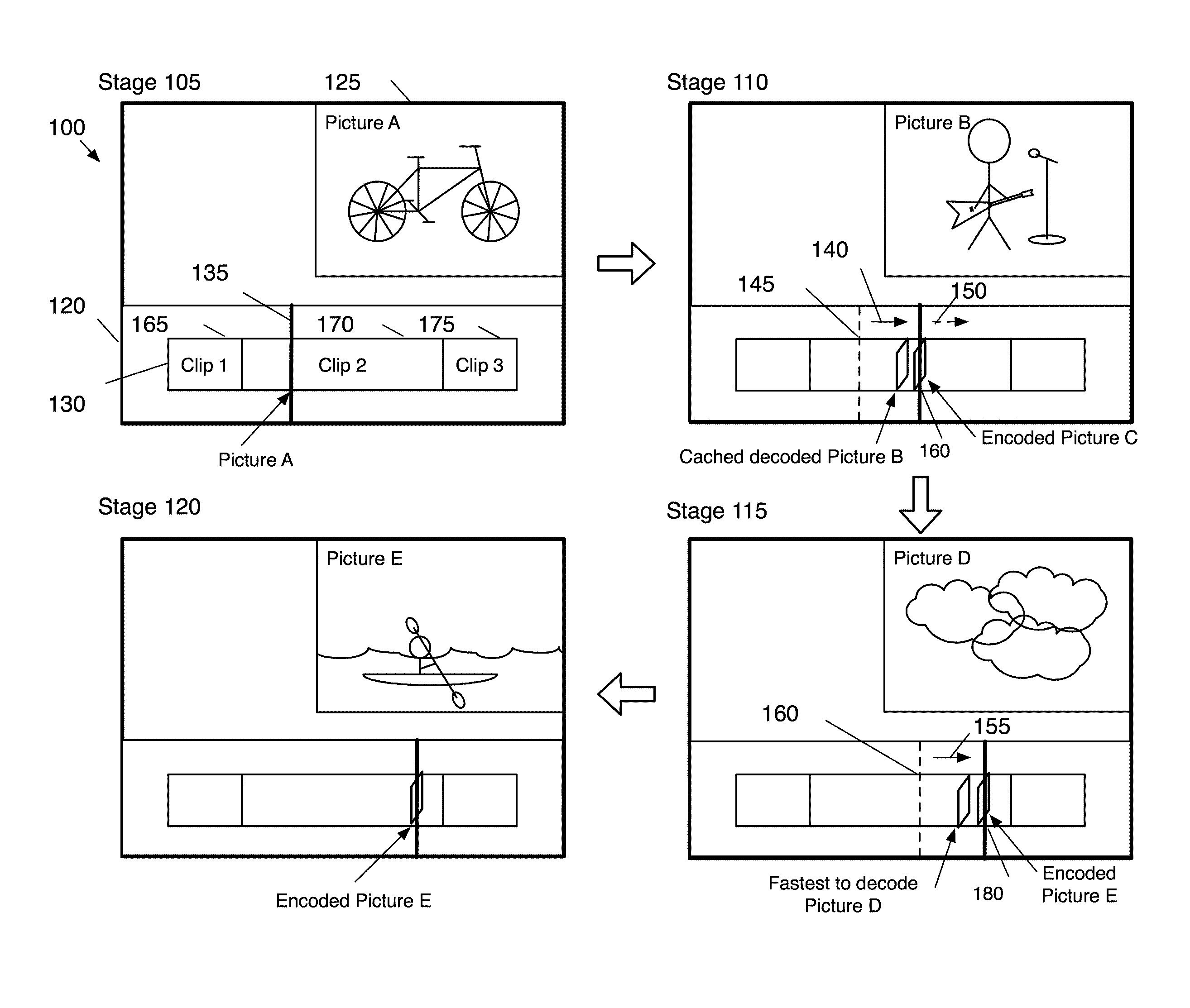

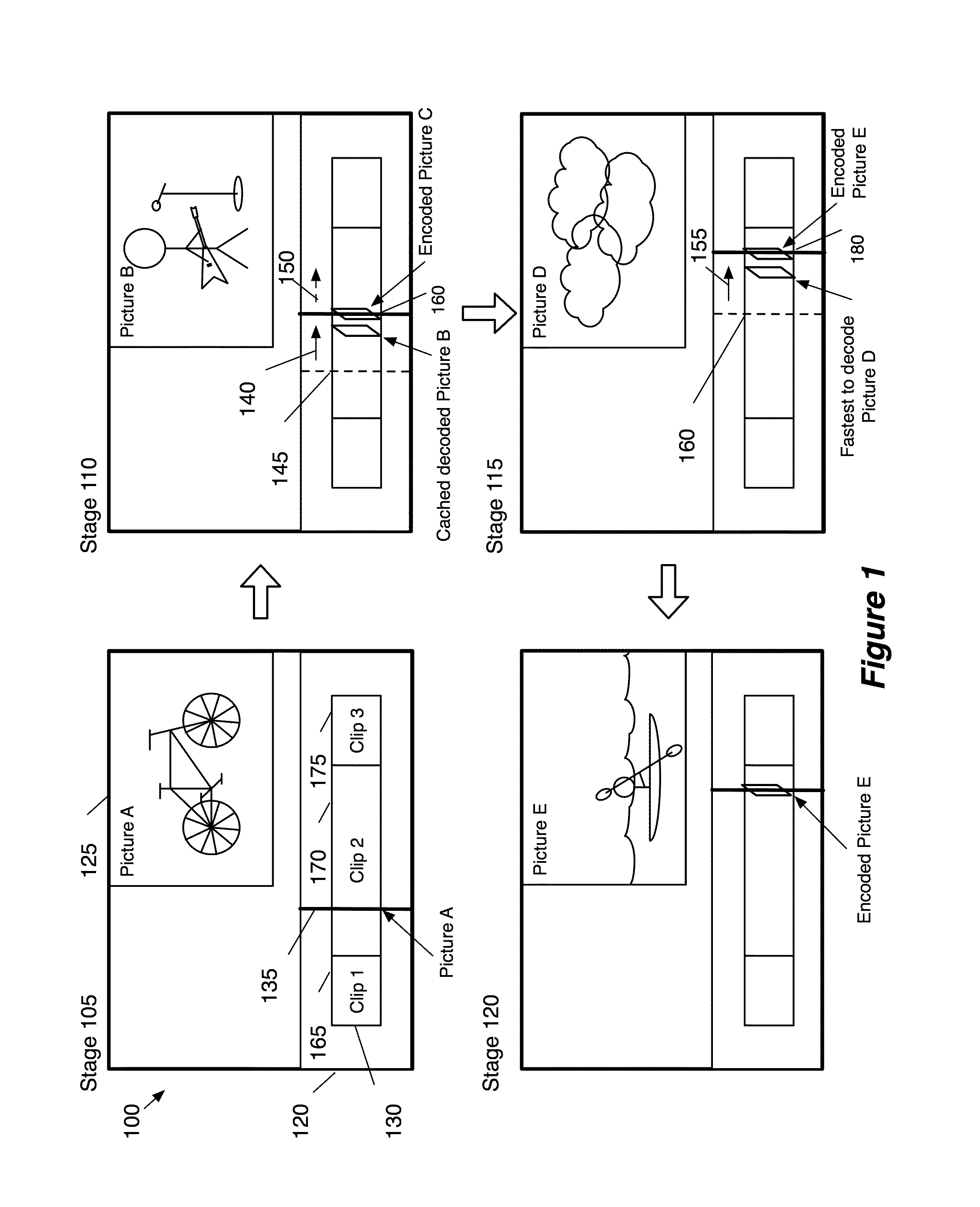

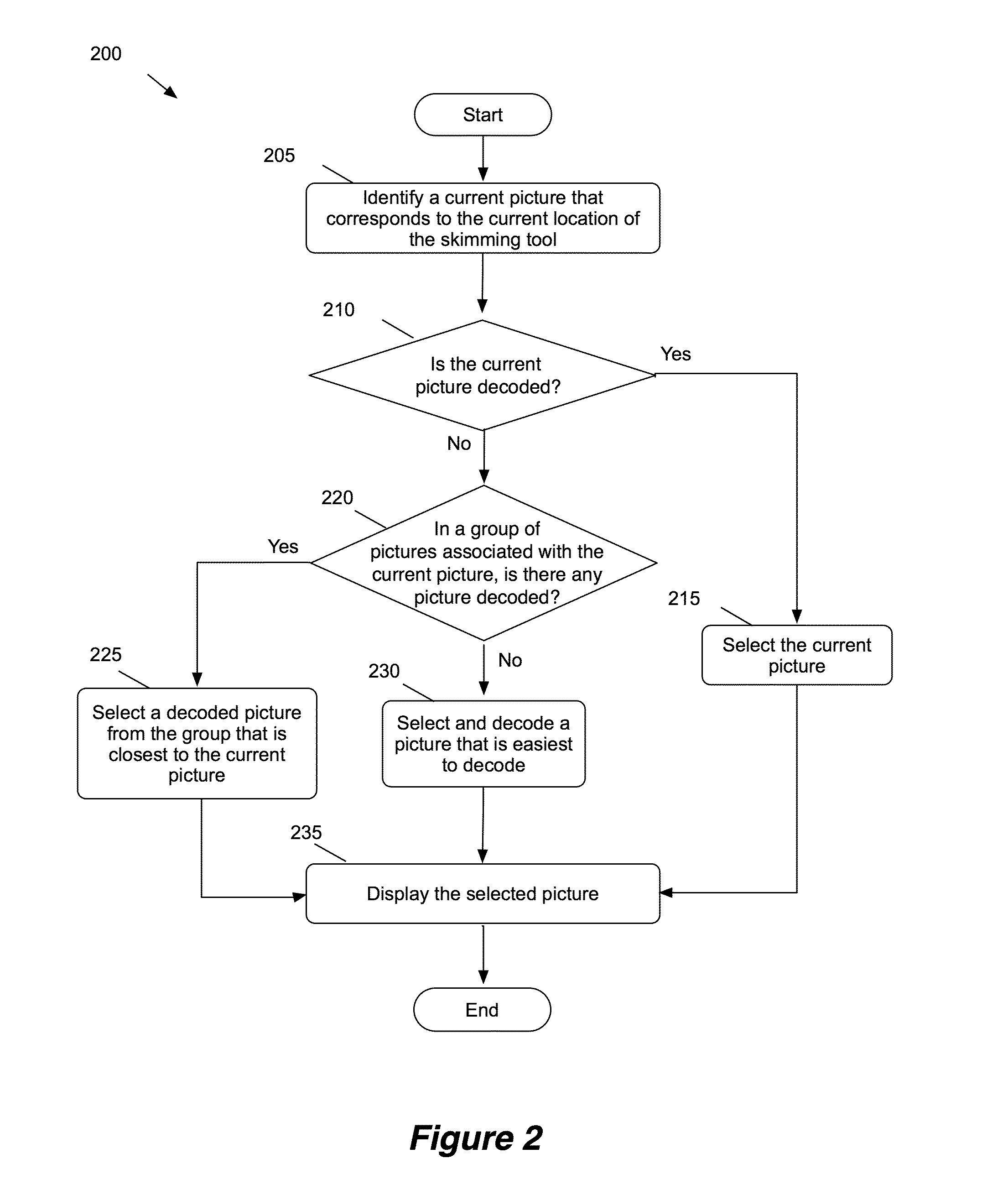

Picture Selection for Video Skimming

Active; US20120321280A1; Quick display; Easy to present; Video data browsing/visualisation; Electronic editing digitised analogue information signals; Computer graphics (images); Video skimming

Owner:APPLE INC

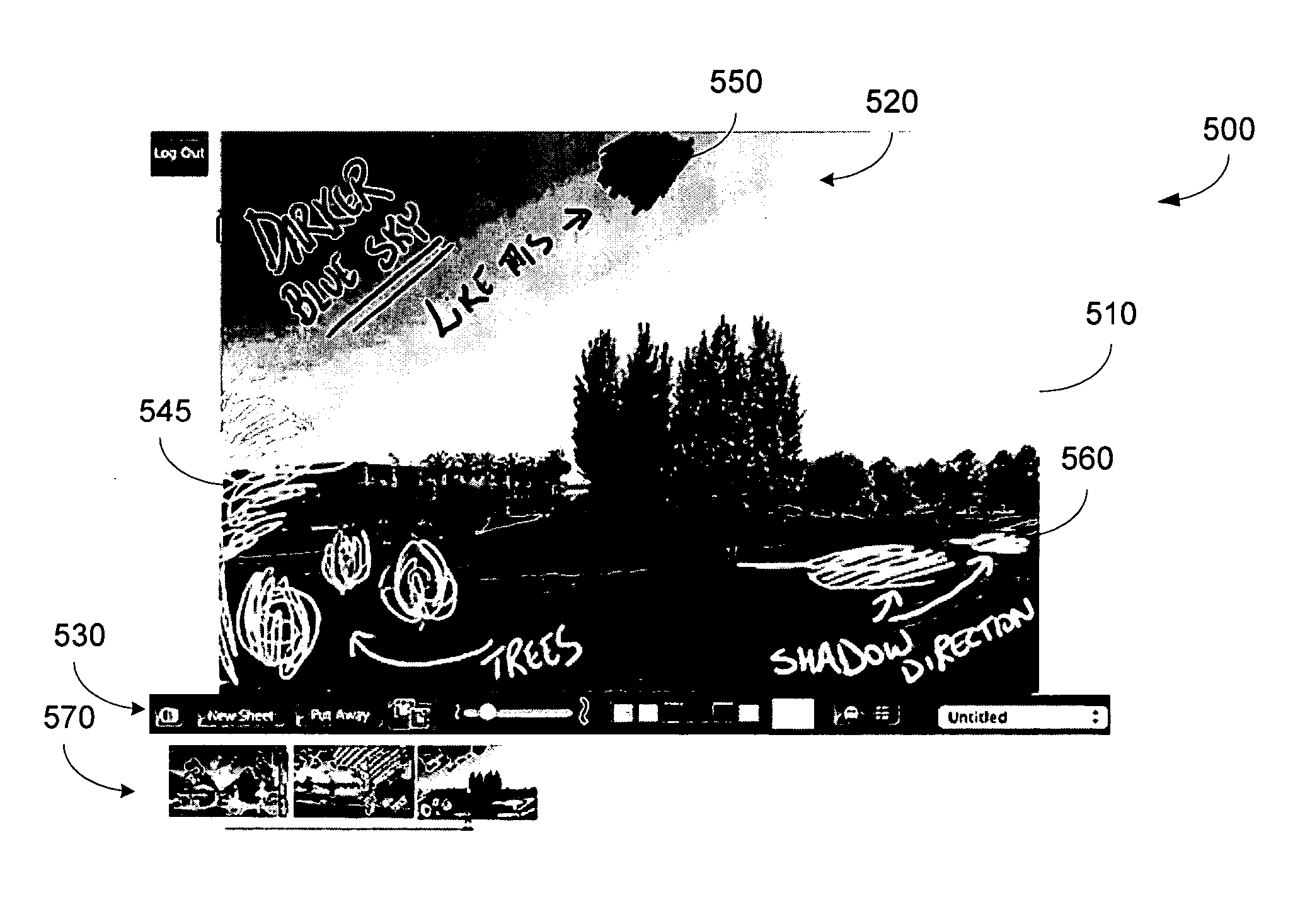

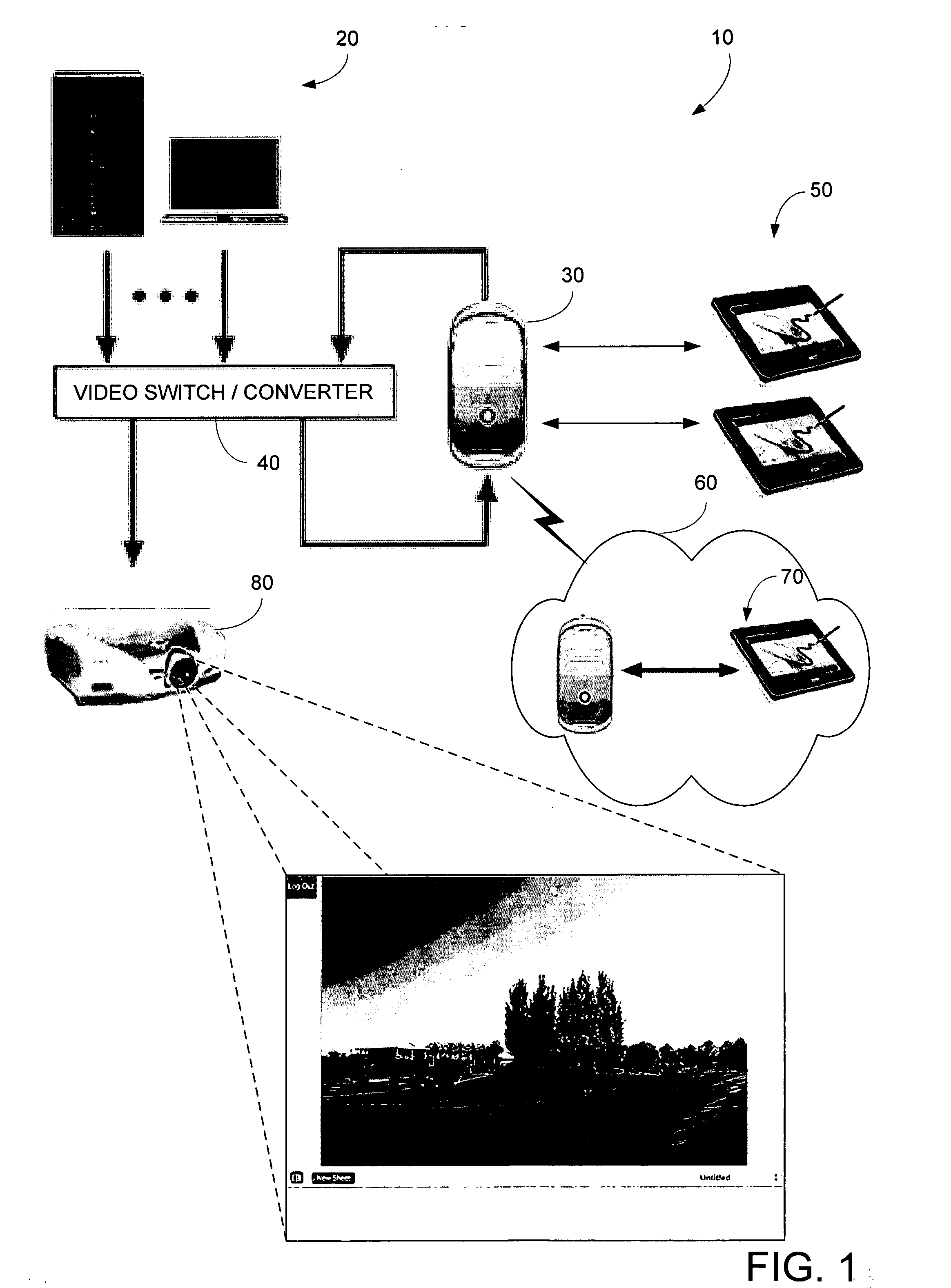

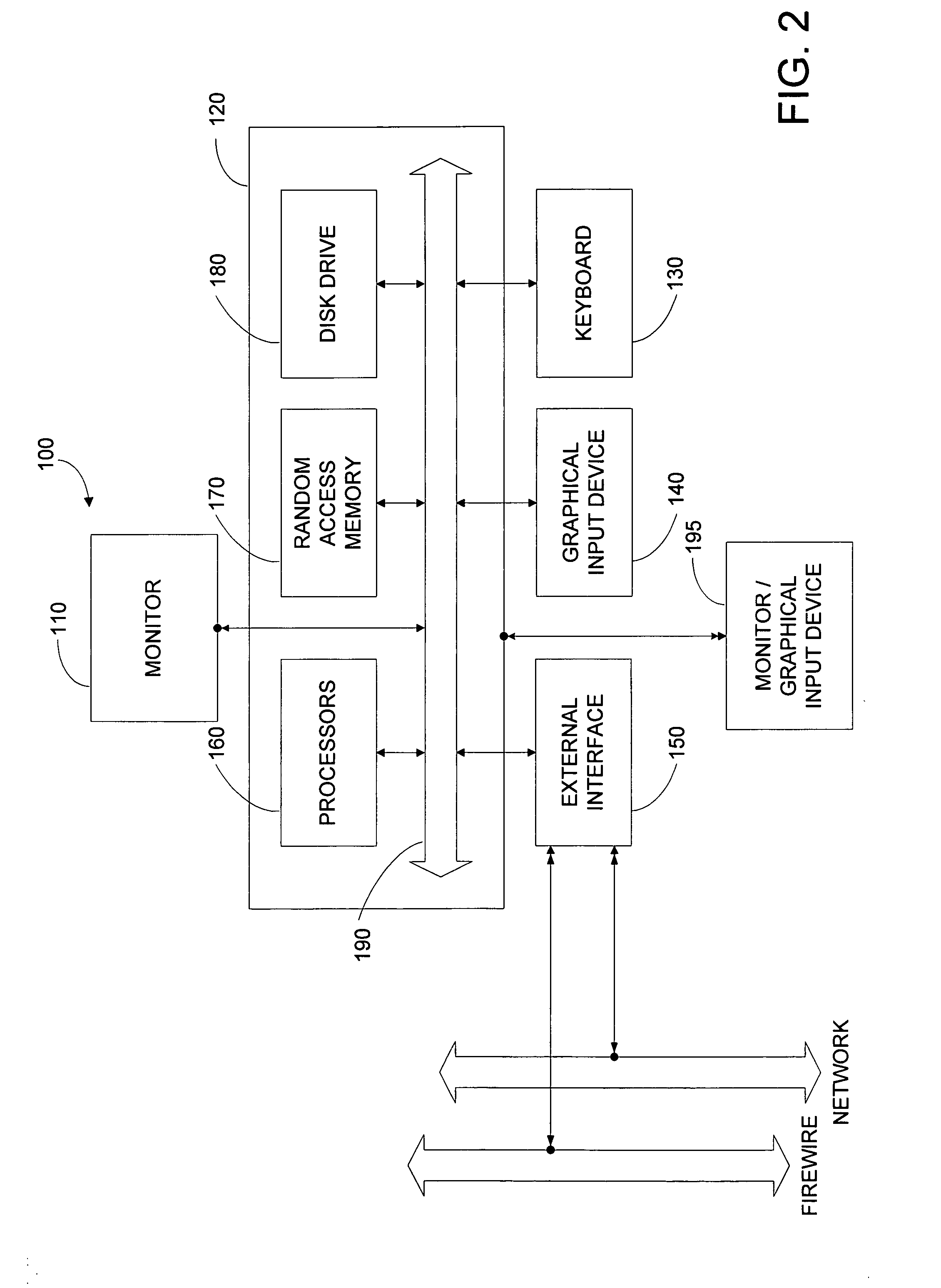

Animation review methods and apparatus

Active; US20050257137A1; Minimal impact; Easy to create; Video data browsing/visualisation; Multimedia data retrieval; Graphics; Graphical user interface

A system comprises a source providing video in a first format, a video converter for converting video from the first format to a second format, a server storing a video image from the video and combining the video image with a graphical user interface to form a composite video, and a first user display device displaying the composite video and receiving from the user a plurality of commands, wherein the first user display device is for receiving from the user a plurality of annotations associated with a video frame from the composite video, and for determining the video frame from the composite video in response to a command, wherein the server is for forming an annotated video in response to the video frame and the plurality of annotations, for storing the plurality of annotations and the video frame, and for associating the plurality of annotations and the video frame.

Owner:PIXAR ANIMATION

Method and user interface for forensic video search

Active; US9269243B2; Easy search; Video data querying; Video data browsing/visualisation; Computer graphics (images); User interface

A forensic video search user interface is disclosed that accesses databases of stored video event metadata from multiple camera streams and facilitates the workflow of search of complex global events that are composed of a number of simpler, low complexity events.

Owner:SIEMENS AG

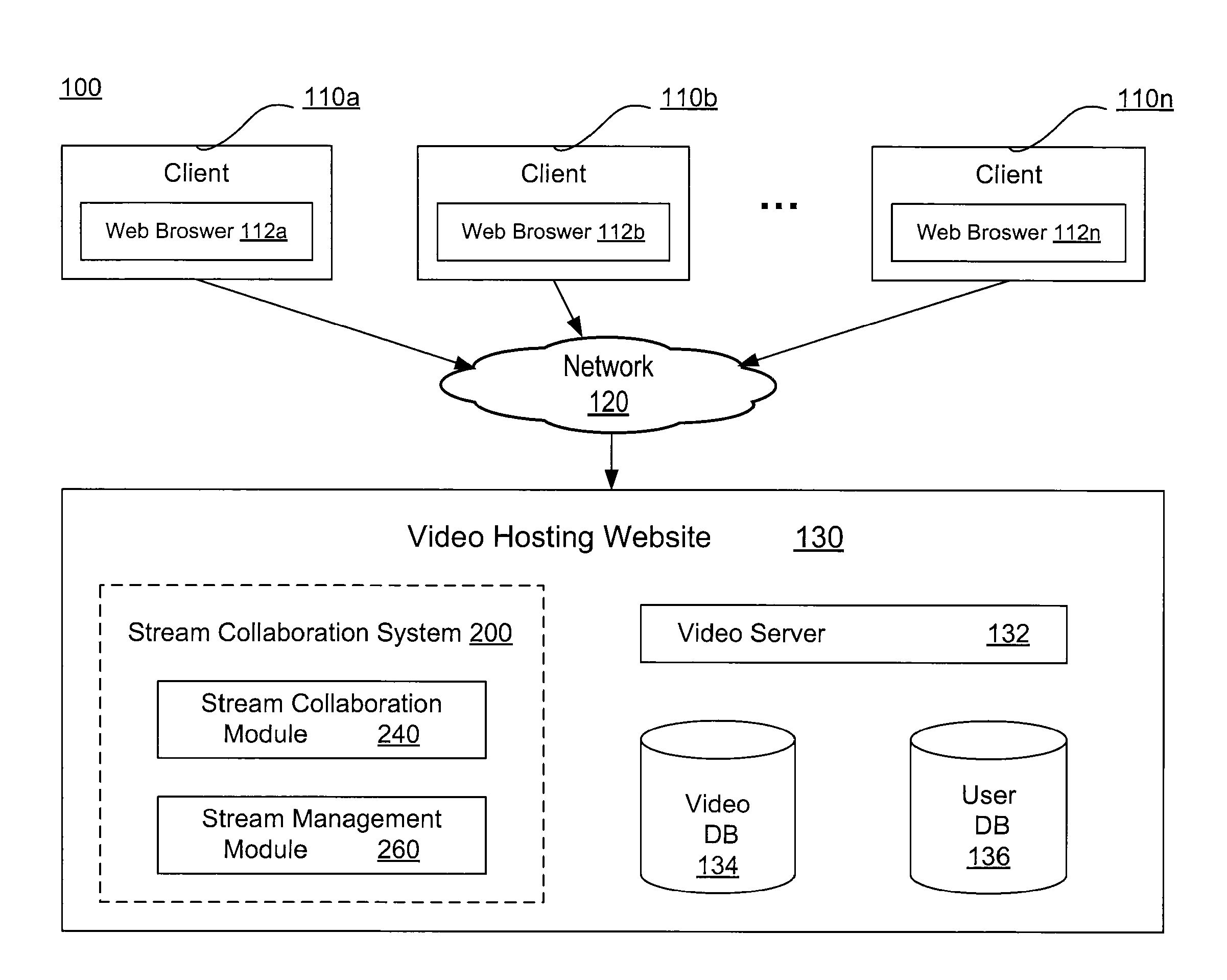

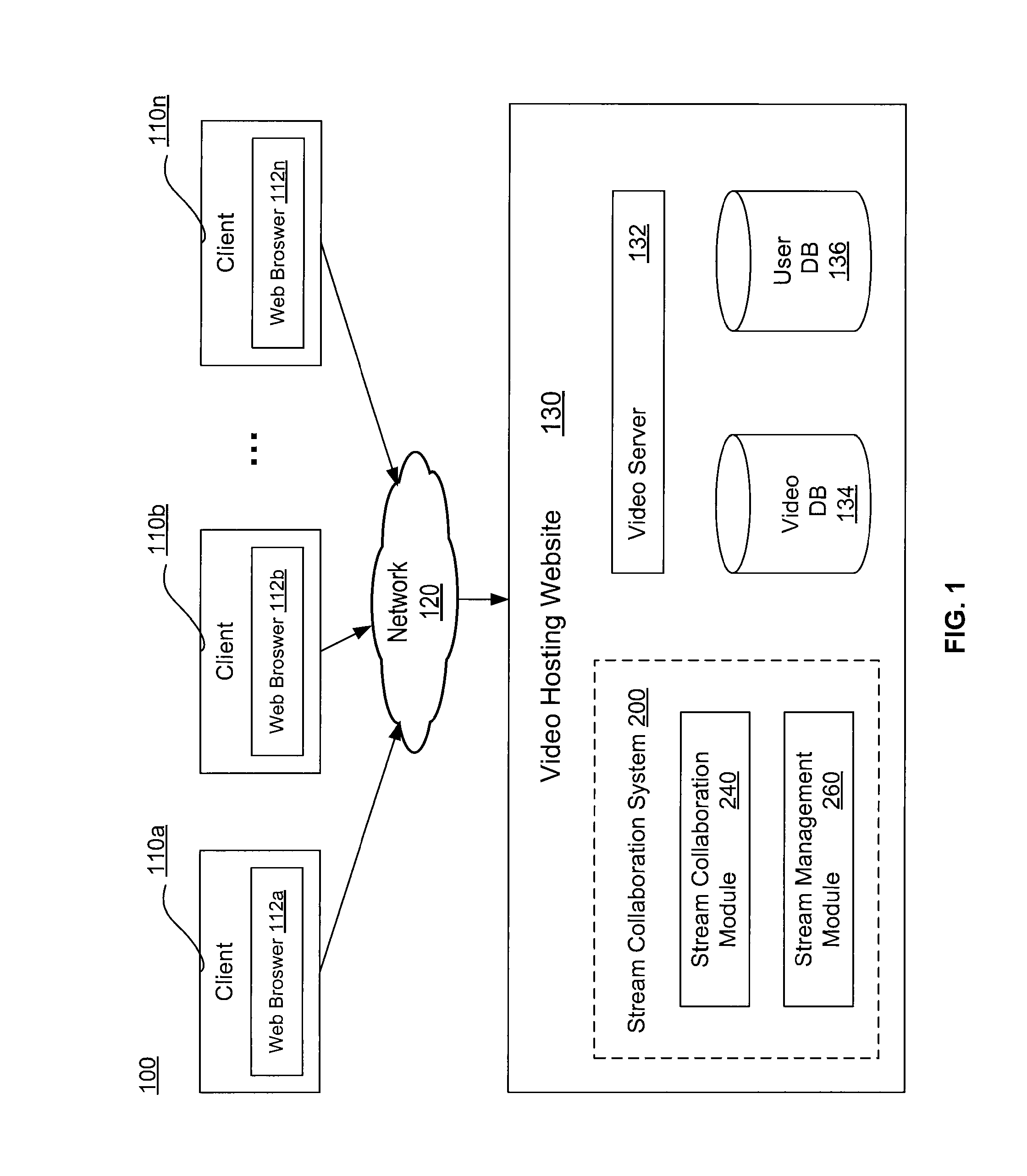

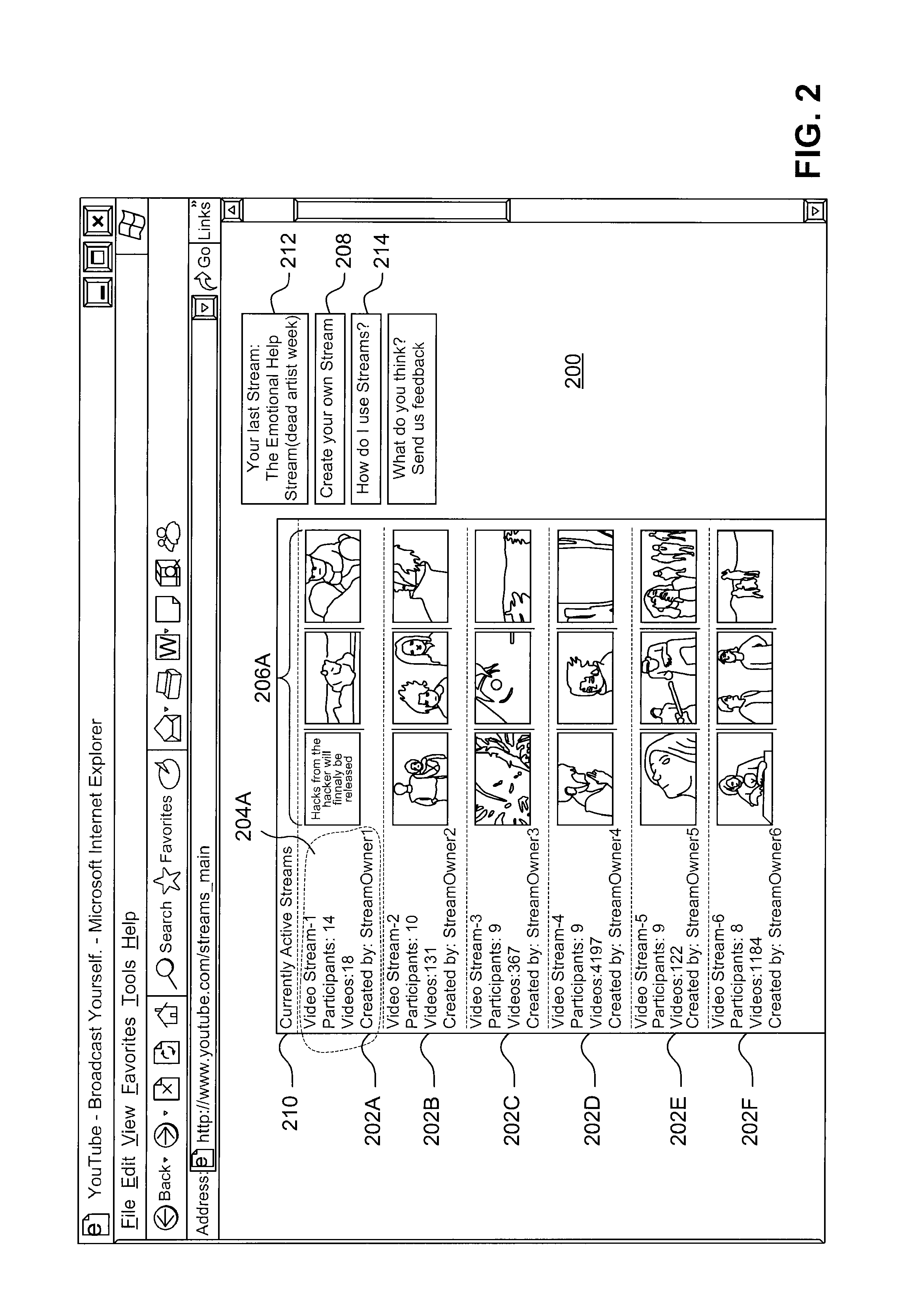

Collaborative streaming of video content

Active; US8700714B1; Web data retrieval; Video data browsing/visualisation; Content distribution; User interface

A system, method and various user interfaces enable visually browsing multiple groups of video recommendations. A video stream includes a group of videos to be viewed and commented on by users who join the stream. Users who join a stream form a stream community. In a stream community, community members can add videos to the stream and interact collaboratively with other community members, such as chatting in real time with each other while viewing a video. With streams, a user can create a virtual room in an online video content distribution environment to watch videos of the streams and interact with others while sharing videos simultaneously. Consequently, users have an enhanced video viewing and sharing experience.

Owner:GOOGLE LLC

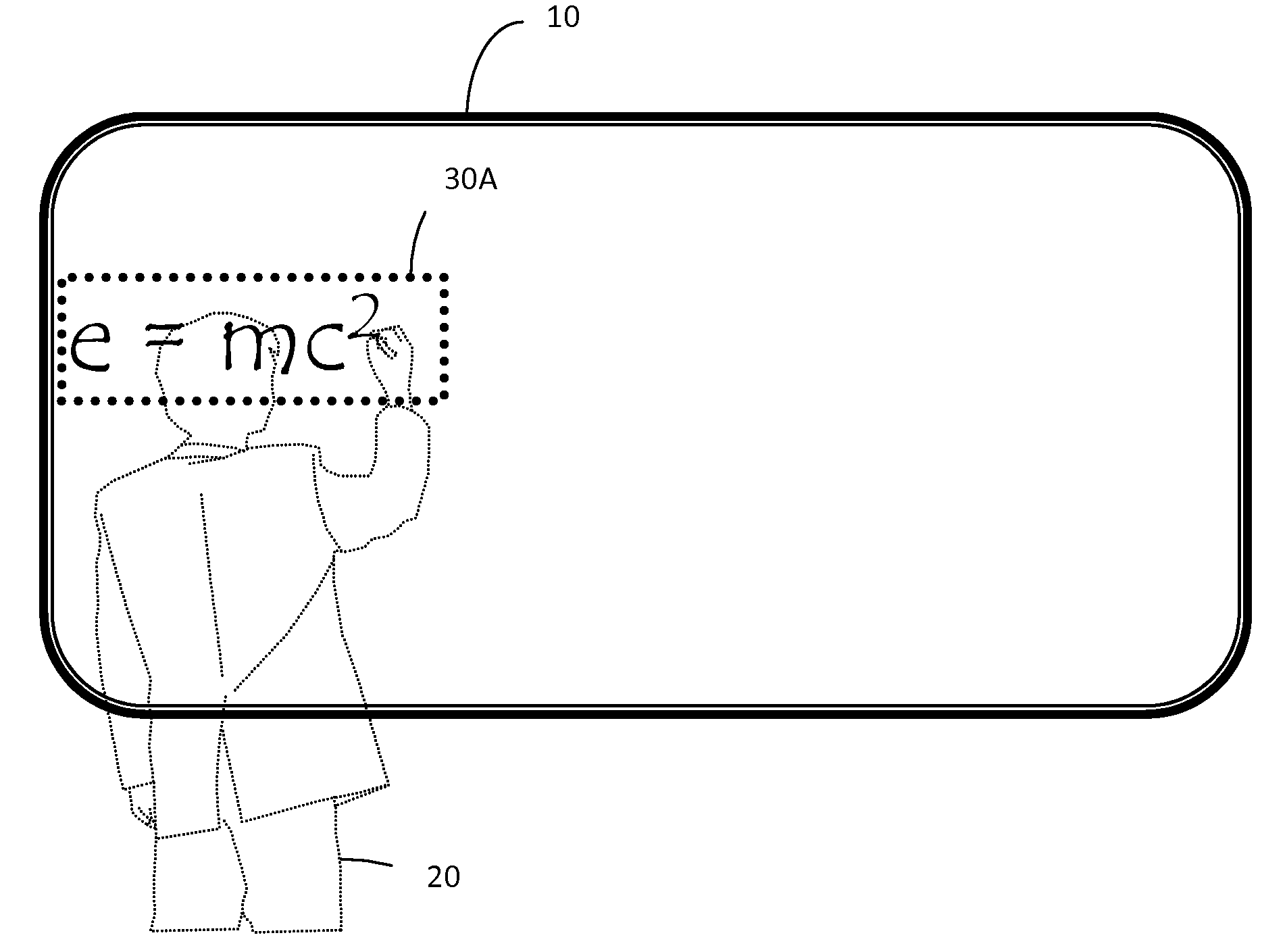

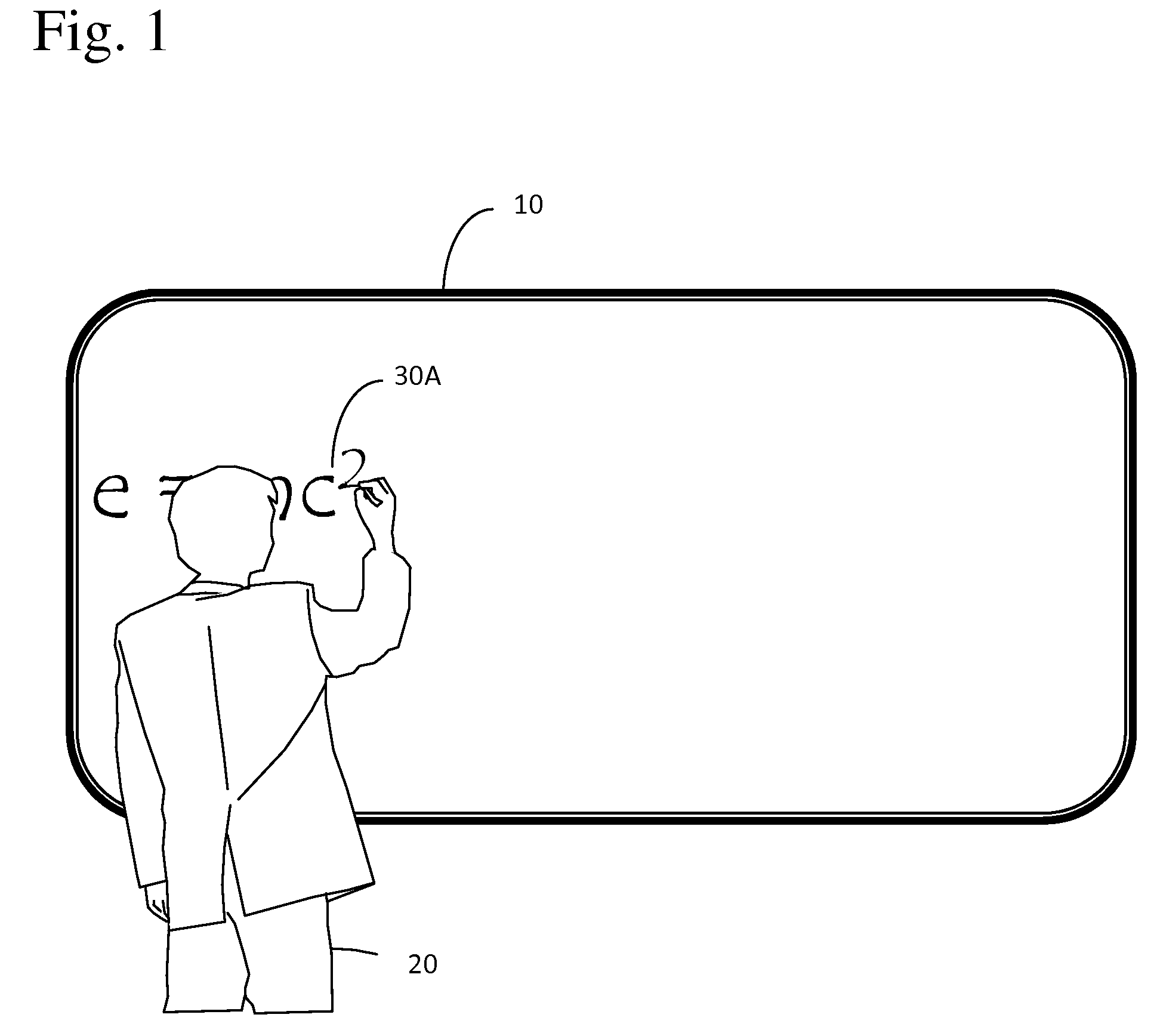

Whiteboard archiving and presentation method

Active; US8639032B1; Easy to detect; Quality improvement; Video data browsing/visualisation; Carrier indexing/addressing/timing/synchronising; Whiteboard; Digital imaging

The present invention discloses methods of archiving and optimizing lectures, presentations and other captured video for playback, particularly for blind and low vision individuals. A digital imaging device captures a preselected field of view that is subject to periodic change such as a whiteboard in a classroom. A sequence of frames is captured. Frames associated with additions or erasures to the whiteboard are identified. The Cartesian coordinates of the regions of these alterations within the frame are identified. When the presentation is played back, the regions that are altered are enlarged or masked to assist the low vision user. In another embodiment of the invention, the timing of the alterations segments the recorded audio into chapters so that the blind user can skip forward and backward to different sections of the presentation.

Owner:FREEDOM SCI

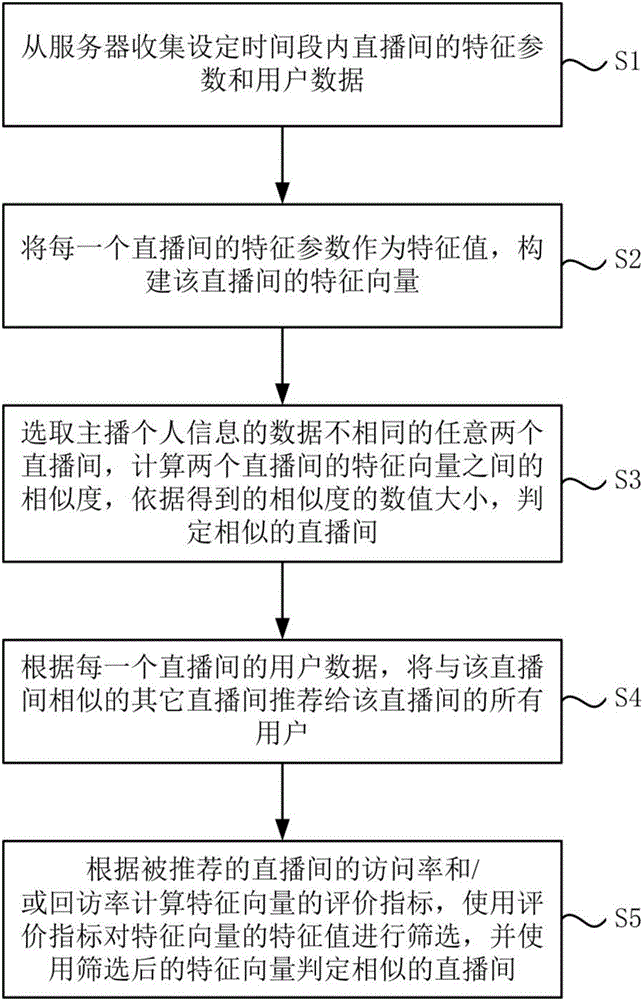

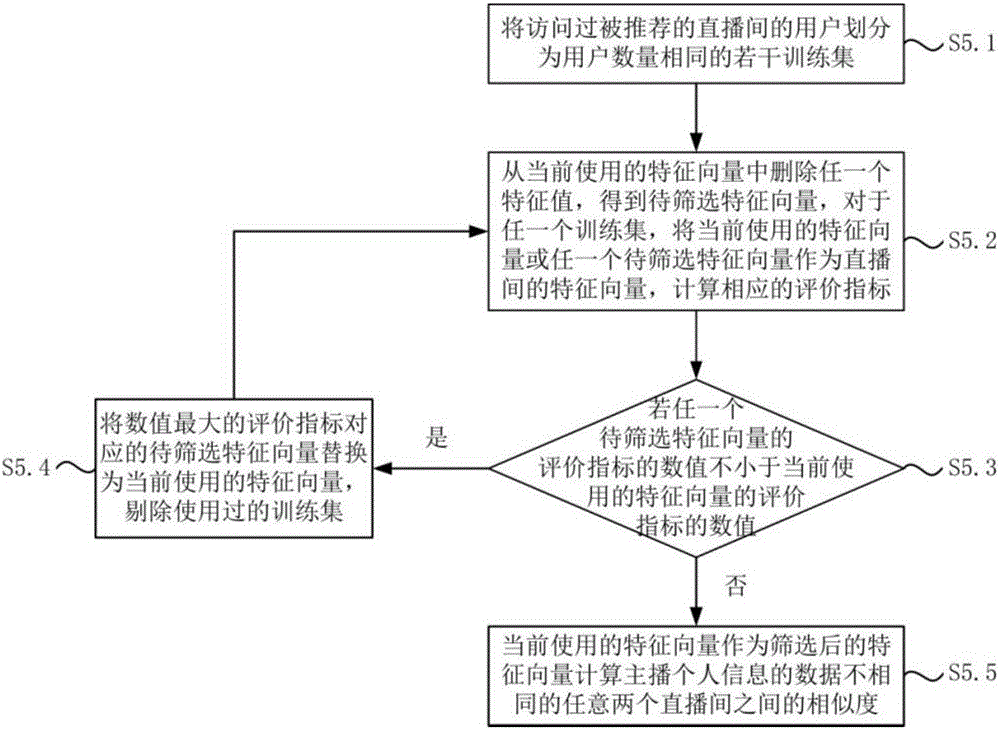

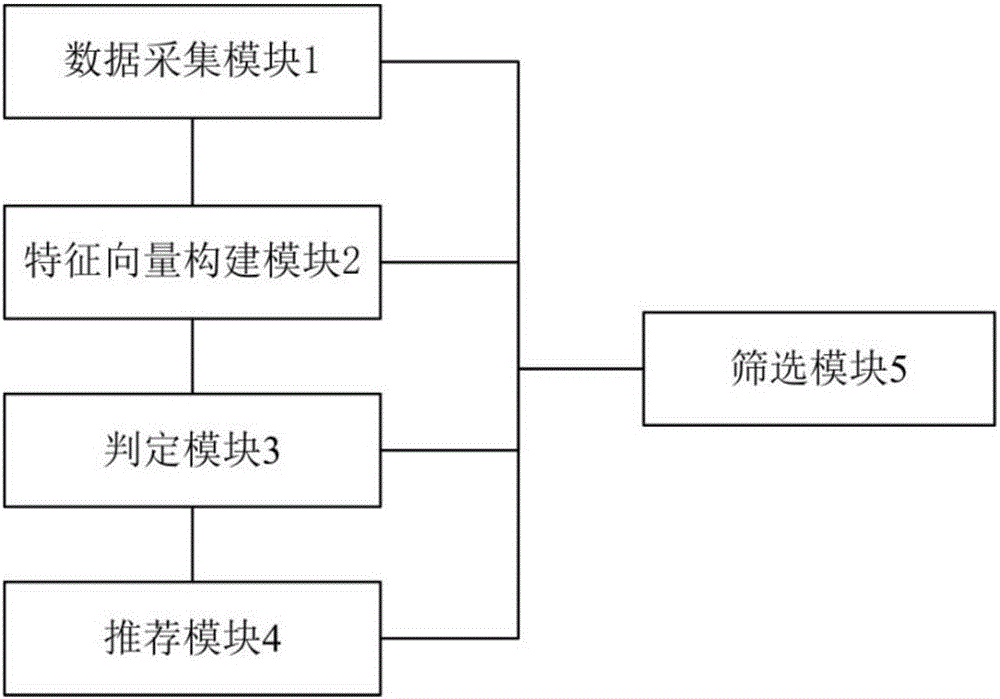

Direct broadcasting room recommending method and system based on broadcaster style

Inactive; CN106560811A; Accurate recommendation; Improve experience; Video data browsing/visualisation; Selective content distribution; Time segment; Broadcasting

The invention discloses a direct broadcasting room recommending method and system based on broadcaster styles, and relates to the network technical field. The method comprises the following steps: collecting characteristic parameters and user data of direct broadcasting rooms from a server in a set time period; using the characteristic parameters of each direct broadcasting room as characteristic constants to build a characteristic vector of the direct broadcasting room; selecting any two direct broadcasting rooms whose broadcasters have different personal information, calculating the similarity between the characteristic vectors of the two direct broadcasting rooms, and determining which direct broadcasting rooms are similar; recommending other direct broadcasting rooms similar to a direct broadcasting room to all users in that room according to the user data of each direct broadcasting room; and calculating an evaluation index for the characteristic vector according to the visiting rate and / or return visiting rate of the recommended direct broadcasting rooms, using the evaluation index to screen the characteristic constants of the characteristic vector, and using the screened characteristic vector to determine similar direct broadcasting rooms. The method and system can precisely recommend direct broadcasting rooms with similar styles to users, thus improving recommending efficiency and user experience.

Owner:WUHAN DOUYU NETWORK TECH CO LTD
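
The similarity step in the abstract above can be sketched as follows. The abstract does not say which similarity measure is used, so cosine similarity is an assumption here, as are the function names, the threshold value, and the dictionary layout of the room vectors.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similar_rooms(room_vectors, target_id, threshold=0.9):
    """Return ids of rooms whose vector is at least `threshold` similar to the target.

    `room_vectors` maps a room id to its feature vector (hypothetical layout).
    Results are ordered from most to least similar.
    """
    target = room_vectors[target_id]
    scored = [
        (cosine_similarity(target, vec), room_id)
        for room_id, vec in room_vectors.items()
        if room_id != target_id
    ]
    return [room_id for score, room_id in sorted(scored, reverse=True)
            if score >= threshold]


if __name__ == "__main__":
    rooms = {"a": [1.0, 0.0], "b": [2.0, 0.0], "c": [0.0, 1.0]}
    print(similar_rooms(rooms, "a"))
```

The screening step in the abstract (dropping feature components based on visiting rate) would sit around this function and is not modeled here.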

Media browsing user interface with intelligently selected representative media items

Active; US20200356590A1; Faster and efficient method; Faster and efficient and interface; Multimedia data browsing/visualisation; Video data browsing/visualisation; User input; MediaFLO

The present disclosure generally relates to navigating a collection of media items. In accordance with one embodiment, a device displays a plurality of content items in a first layout, including a first content item at a first aspect ratio and a first size, a second content item, and a third content item. While displaying the plurality of content items in the first layout, the device detects a user input that includes a gesture, wherein the user input corresponds to a request to change a size of the first content item. In response to detecting the user input, and as the gesture progresses, the device changes the size of the first content item from the first size to a second size while concurrently gradually changing an aspect ratio of the first content item from the first aspect ratio to a second aspect ratio.

Owner:APPLE INC

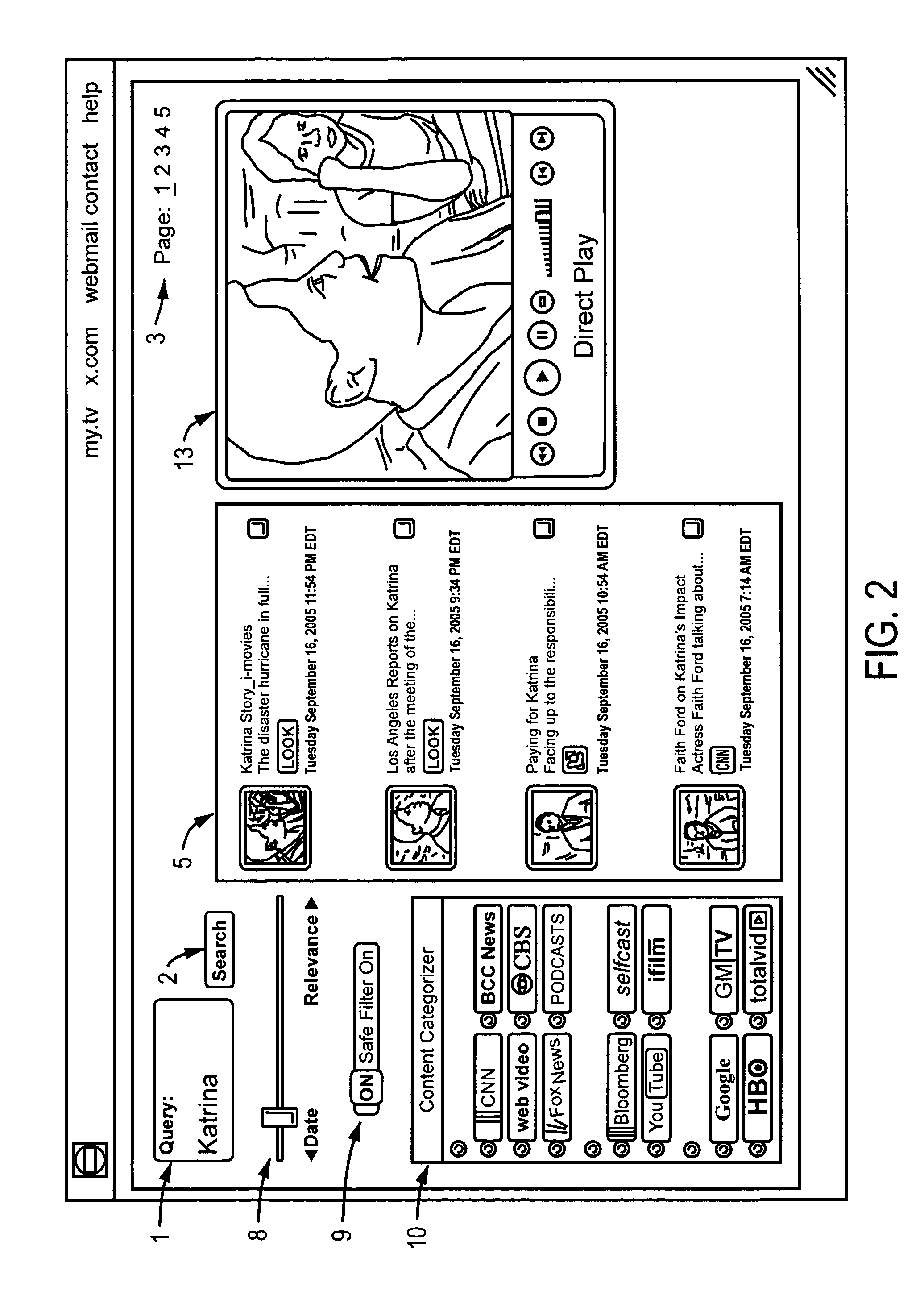

Method for content-based non-linear control of multimedia playback

Active; US8479238B2; Special service provision for substation; Television system details; Linear control; Nonlinear control

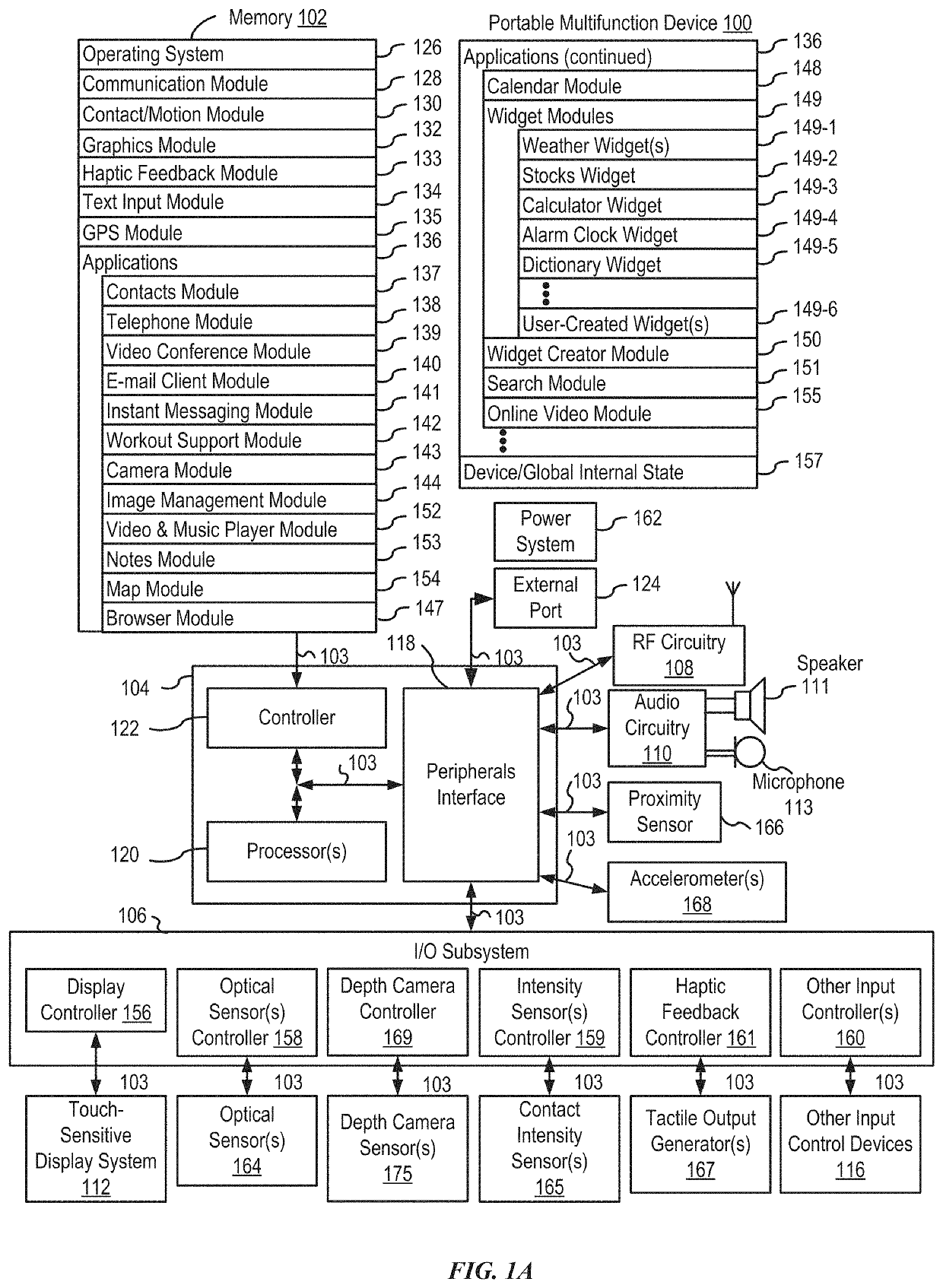

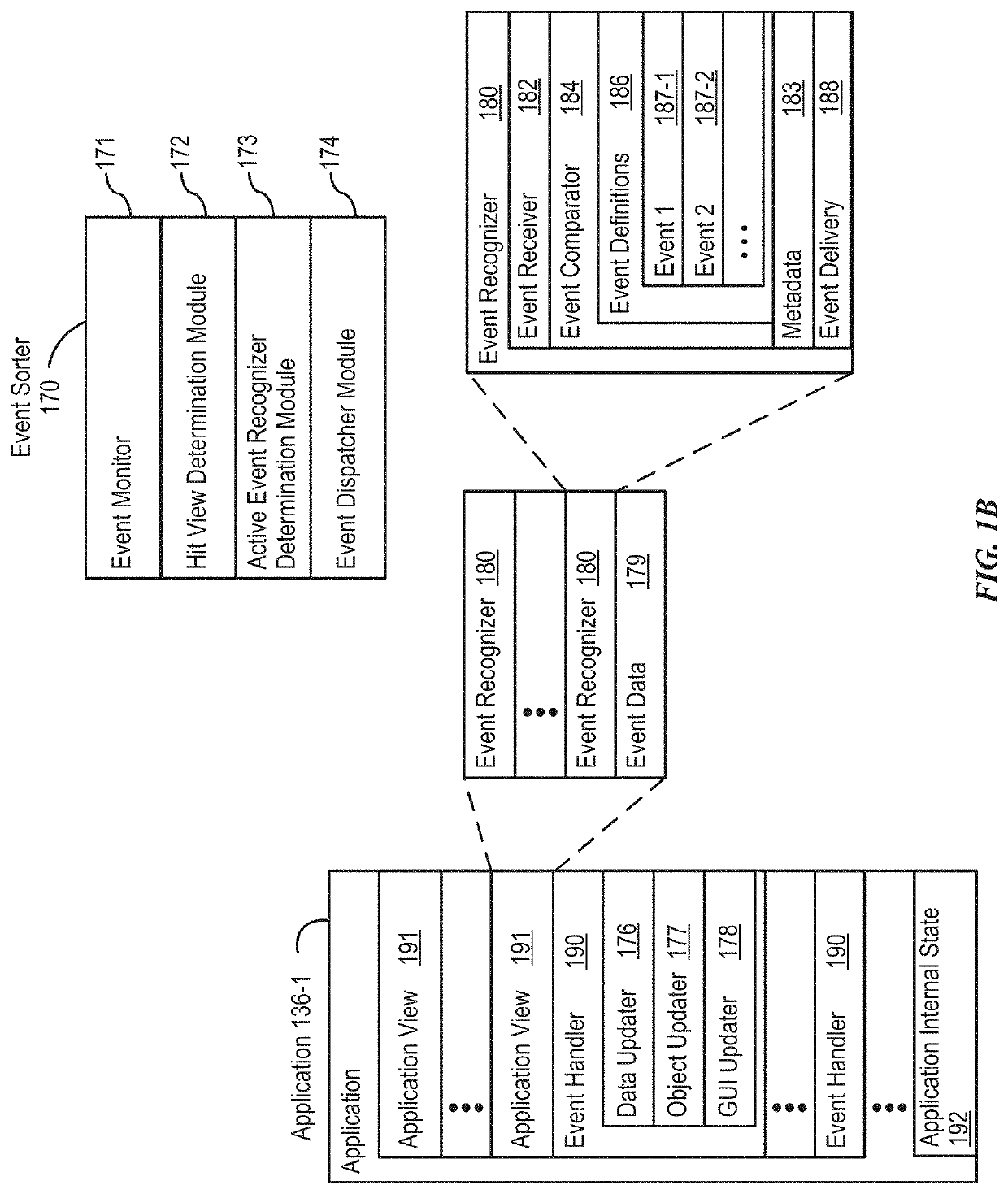

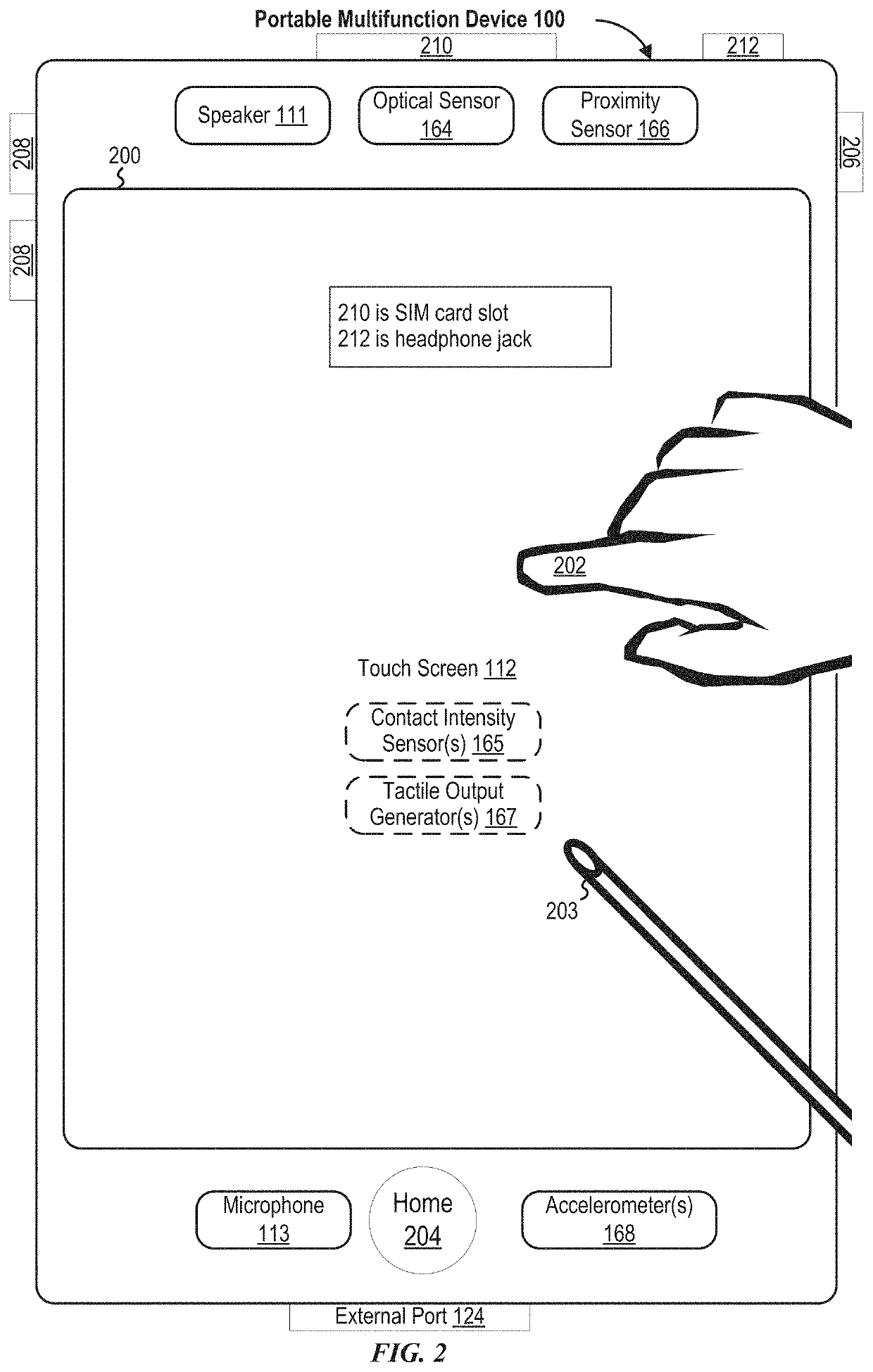

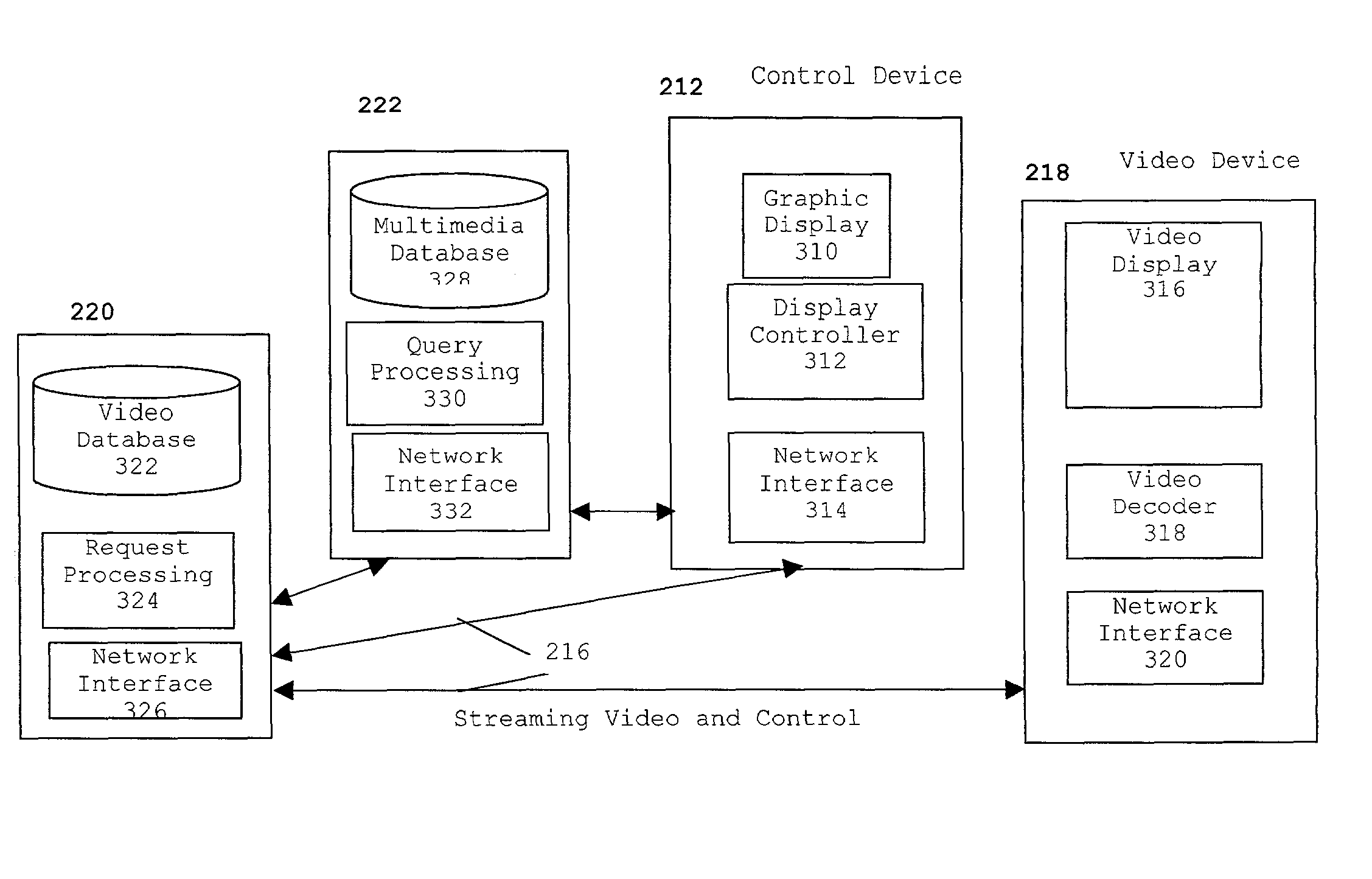

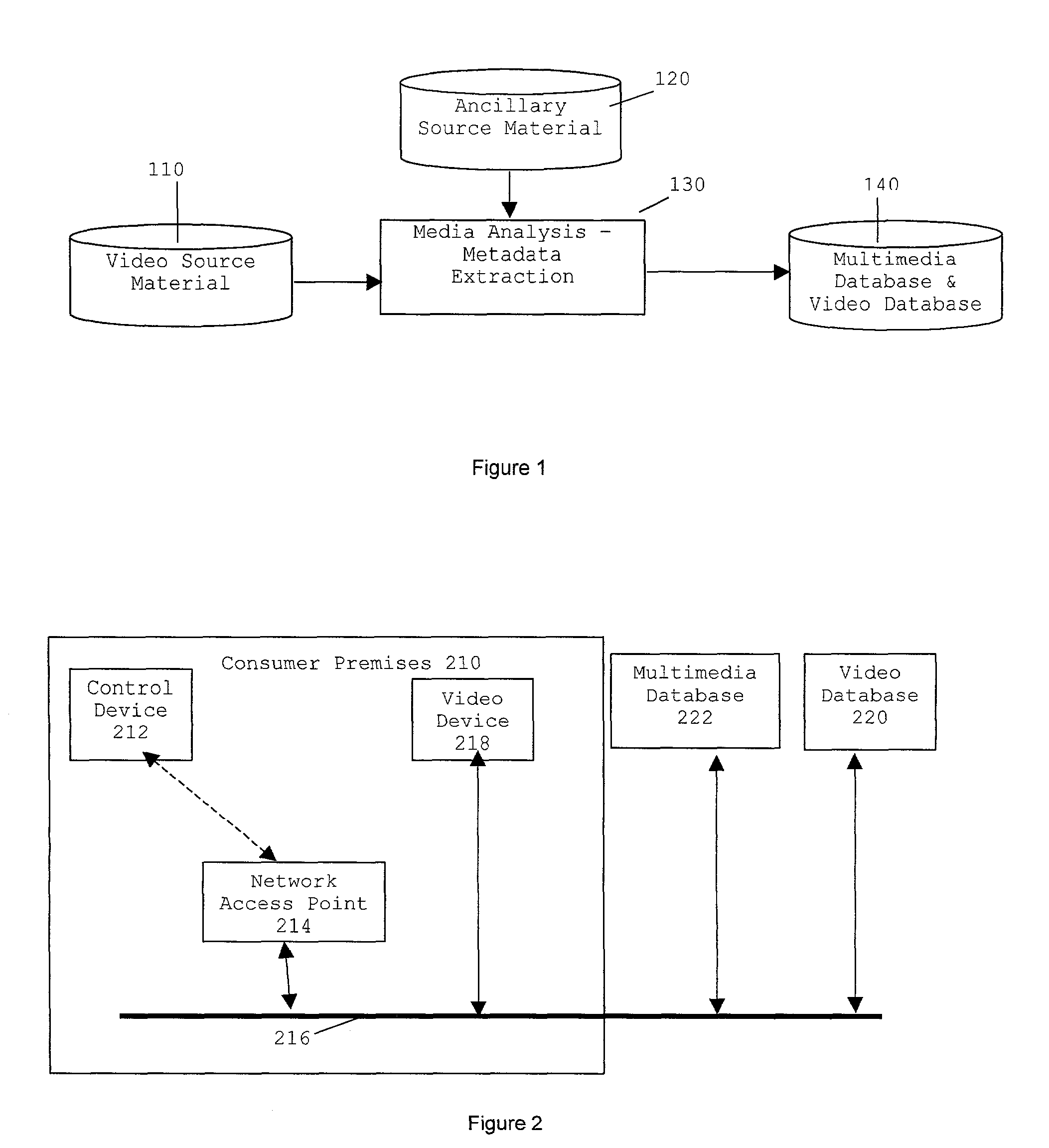

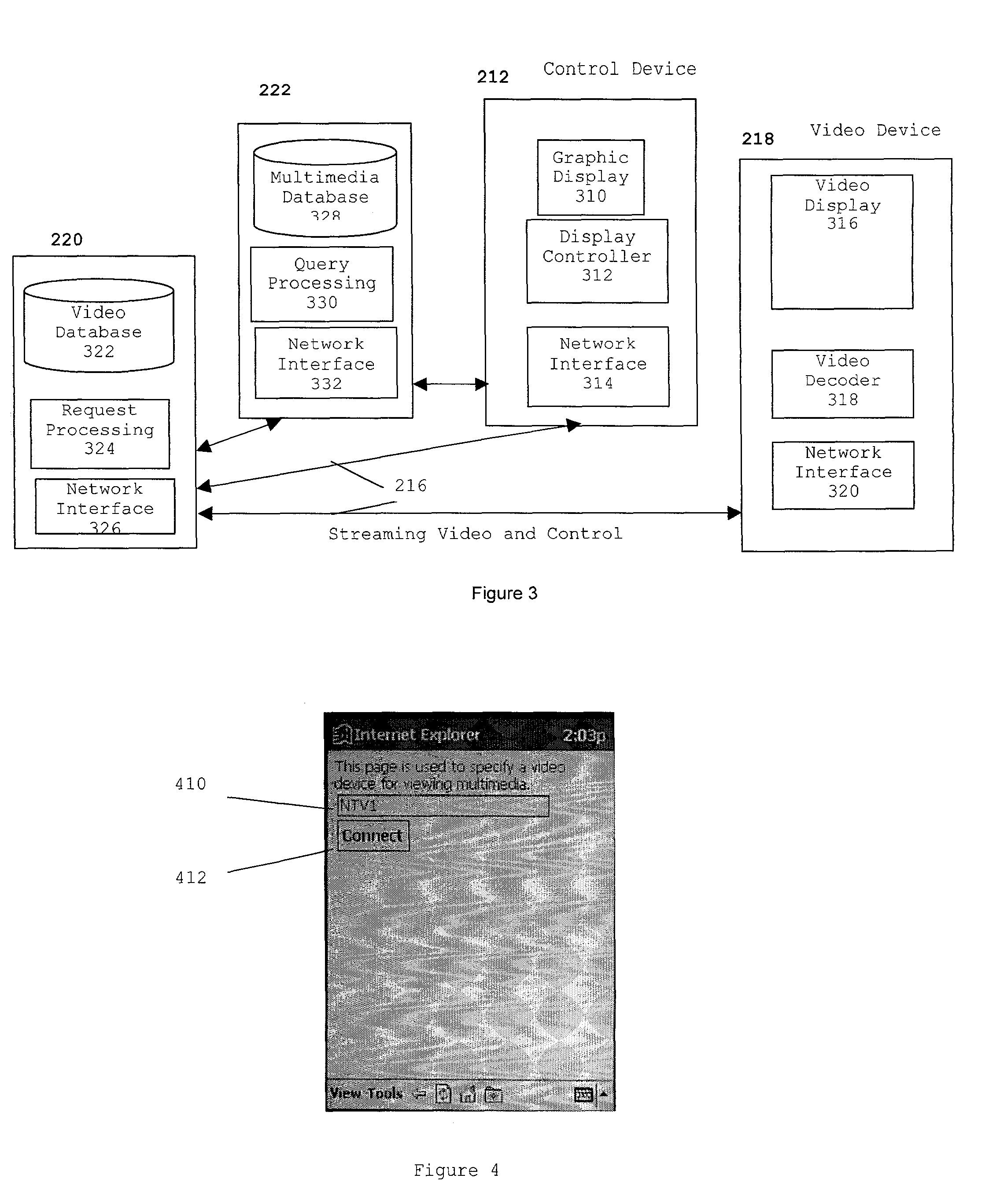

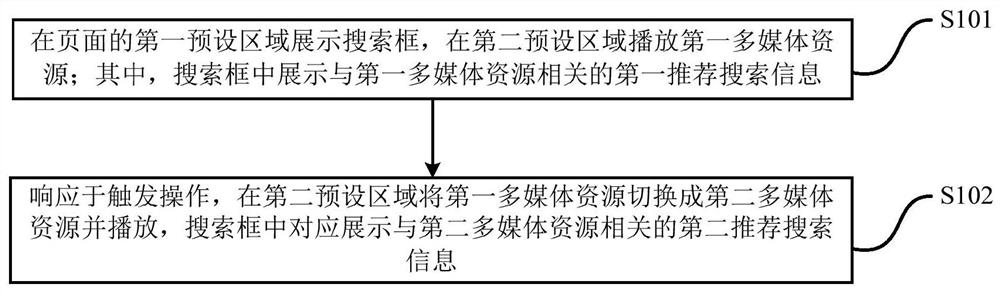

A system and method are provided for content-based non-linear control of video data playback. A multimedia database having multimedia data including multimedia content data is searched based on a user query to determine a first set of multimedia data. The multimedia data includes indexes to and condensed representations of corresponding video data stored in a video database. A portion of the first set of multimedia data is displayed at a control device in response to the user query. A user of the control device selects an element of the first set of multimedia data for video playback, and video data corresponding to the element is delivered to a video device for playback. A user of the control device selects an element of the first set of multimedia data for additional information, and a second set of multimedia data corresponding to the element is delivered to the control device.

Owner:AT&T INTPROP II L P

Information display method and device and computer storage medium

Pending; CN113111286A; Improve search efficiency; Easy to operate; Special data processing applications; Web data querying; Information searching; Human–computer interaction

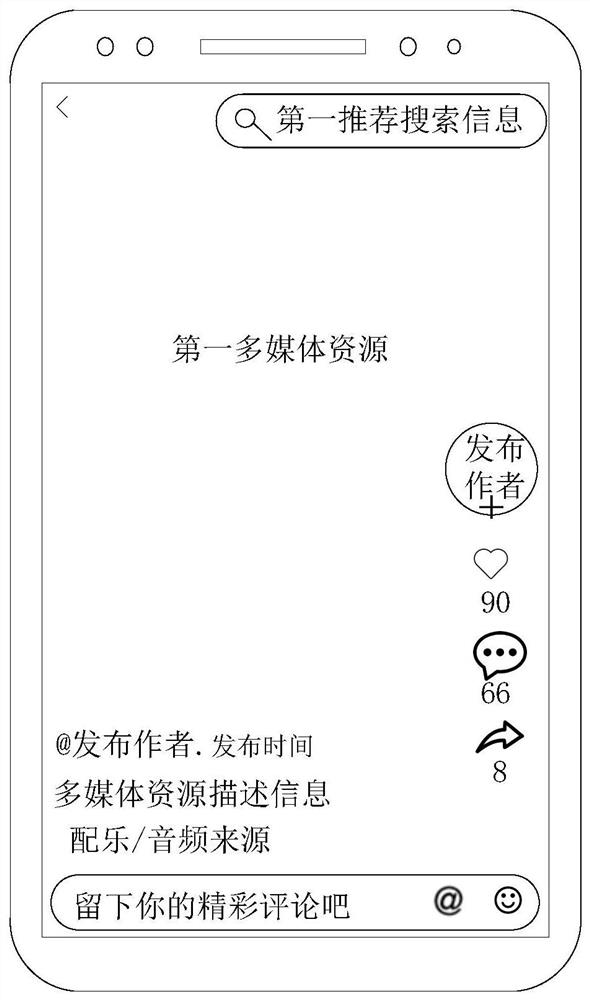

The invention provides an information display method and device and a computer storage medium, and the method comprises the steps: displaying a search box in a first preset region of a page, and playing a first multimedia resource in a second preset region, wherein first recommended search information related to the first multimedia resource is displayed in the search box; and in response to the trigger operation, switching the first multimedia resource into a second multimedia resource in a second preset area and playing the second multimedia resource, and correspondingly displaying second recommended search information related to the second multimedia resource in the search box. The search box containing the recommended search information related to the multimedia resource can be synchronously displayed on the video playing page, and the recommended search information displayed in the search box can be synchronously switched when the video resource played on the current page is switched, so that the information search efficiency can be improved.

Owner:BEIJING BYTEDANCE NETWORK TECH CO LTD

Video display method

Active; US20120194734A1; Video data browsing/visualisation; Picture reproducers using cathode ray tubes; Image resolution; Time segment

A method for video playback uses only resources universally supported by a browser (“inline playback”) operating in virtually all handheld media devices. In one case, the method first prepares a video sequence for display by a browser by (a) dividing the video sequence into a silent video stream and an audio stream; (b) extracting from the silent video stream a number of still images, the number of still images corresponding to at least one of a desired output frame rate and a desired output resolution; and (c) combining the still images into a composite image. In one embodiment, the composite image has a number of rows, with each row being formed by the still images created from a fixed duration of the silent video stream. Another method plays the still images of the composite image as a video sequence by (a) loading the composite image to be displayed through a viewport defined to be the size of one of the still images; (b) selecting one of the still images of the composite image; (c) setting the viewport to display the selected still image; and (d) setting a timer for a specified time period based on a frame rate, such that, upon expiration of the specified time period: (i) a next one of the still images is selected for display in the viewport, unless all still images of the composite image have been selected; and (ii) the method returns to step (c) if not all still images have been selected.

Owner:SILVERPUSH PTE LTD
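
The viewport stepping in the abstract above amounts to computing, for each still image, its pixel offset inside the composite image and the time at which it should be shown. A minimal sketch, assuming a row-major grid of equally sized still images; the function names and parameters are hypothetical, and the timer is modeled as a precomputed schedule rather than a real browser timer.

```python
def frame_offset(index, frames_per_row, frame_w, frame_h):
    """Top-left pixel offset (x, y) of still image `index` in the composite image."""
    row, col = divmod(index, frames_per_row)
    return col * frame_w, row * frame_h


def playback_schedule(total_frames, frames_per_row, frame_w, frame_h, fps):
    """List of (time_in_seconds, x, y) viewport positions, one per still image."""
    interval = 1.0 / fps  # timer period derived from the frame rate
    return [
        (i * interval, *frame_offset(i, frames_per_row, frame_w, frame_h))
        for i in range(total_frames)
    ]


if __name__ == "__main__":
    # Three 10x5 stills laid out two per row, played at 4 frames per second.
    for t, x, y in playback_schedule(3, 2, 10, 5, 4):
        print(f"t={t:.2f}s viewport at ({x}, {y})")
```

In a browser, each offset would typically be applied by shifting the composite image behind a fixed-size clipping element when the timer fires.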

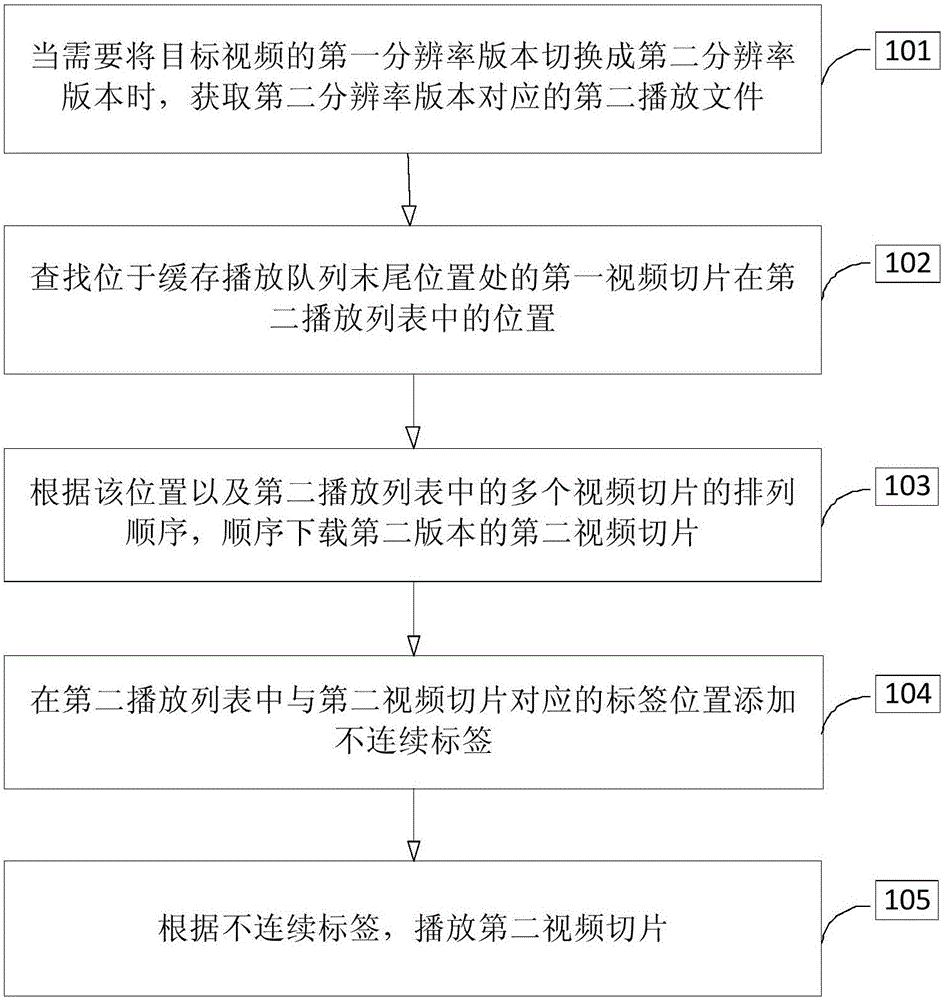

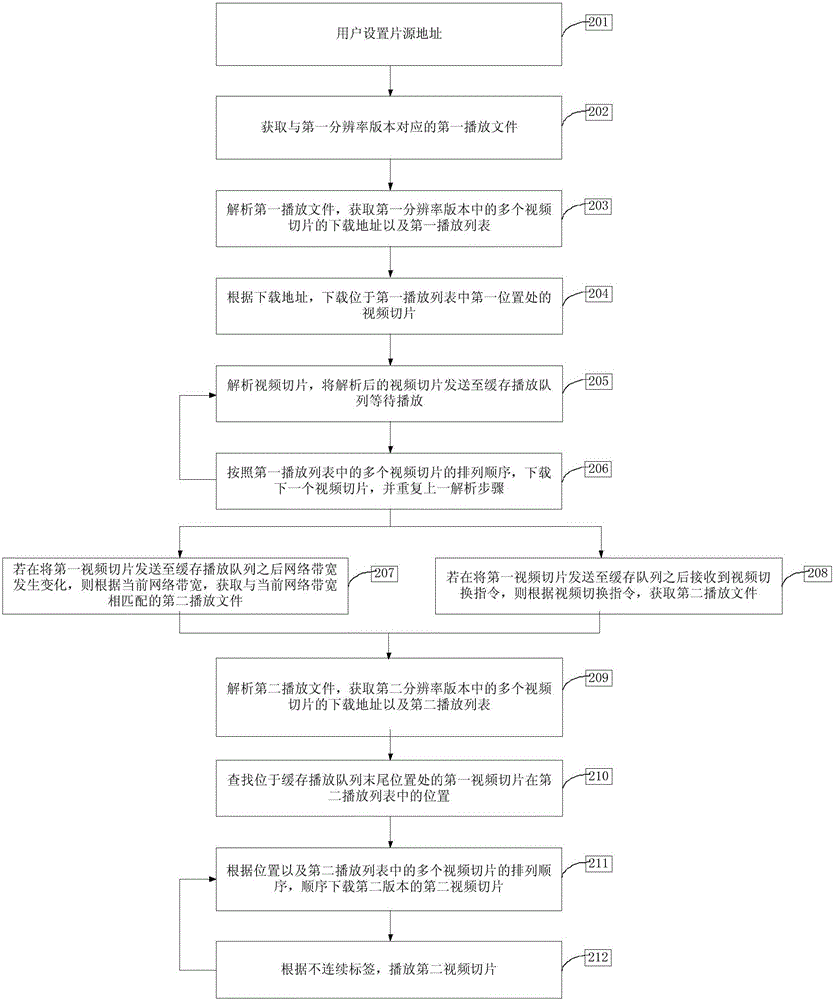

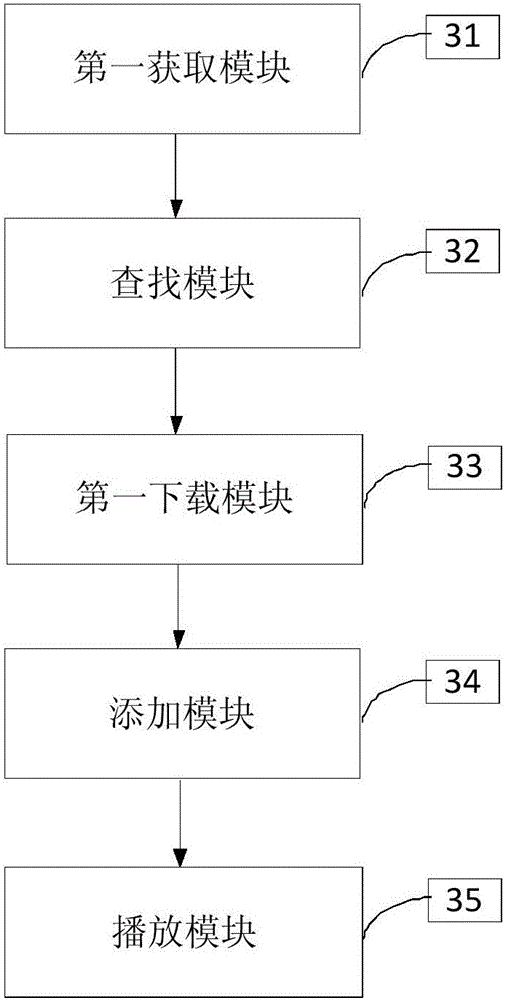

Method, equipment and device for on-line video playing

Inactive; CN106131610A; Realize seamless switching; Improve viewing experience; Video data browsing/visualisation; Transmission; Image resolution; Computer engineering

Embodiments of the invention provide a method, equipment and a device for on-line video playing. The method comprises the following steps of obtaining a second playing file corresponding to a second resolution version when switching from a first resolution version of a target video to the second resolution version is needed, wherein the second playing file comprises a second playlist used for describing a playing order of a plurality of video slices in the target video; searching a position of a first video slice at the end of a cache playing queue in the second playlist; downloading second video slices of the second version in sequence according to the position and the order of the video slices in the second playlist; adding discontinuous labels on the label positions corresponding to the second video slices in the second playlist; and playing the second video slices according to the discontinuous labels. According to the method, the equipment and the device, the seamless switching between video data with different resolutions is realized, and the user viewing experience is greatly improved.

Owner:LETV HLDG BEIJING CO LTD +1
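
The resolution switch in the abstract above can be sketched as a playlist lookup. This is a simplified illustration that assumes slices carry the same identifiers in both resolution versions and that only the first slice after the switch needs a discontinuity marker; the abstract does not state either point, and the function and field names are hypothetical.

```python
def plan_switch(cached_queue, second_playlist):
    """Plan which slices of the second version to download after a switch.

    cached_queue: slice ids already buffered from the first version, in play order.
    second_playlist: slice ids of the second version, in play order.
    Returns a list of dicts, with the first entry flagged as a discontinuity.
    """
    if cached_queue:
        # Position of the last buffered slice within the second playlist.
        pos = second_playlist.index(cached_queue[-1])
    else:
        pos = -1  # nothing buffered yet: start from the first slice
    remaining = second_playlist[pos + 1:]
    return [
        {"slice": slice_id, "discontinuity": i == 0}
        for i, slice_id in enumerate(remaining)
    ]


if __name__ == "__main__":
    plan = plan_switch(["s1", "s2", "s3"], ["s1", "s2", "s3", "s4", "s5"])
    for entry in plan:
        print(entry)
```

In an HLS playlist, such a marker would correspond to an `#EXT-X-DISCONTINUITY` tag placed before the first slice whose encoding parameters differ.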

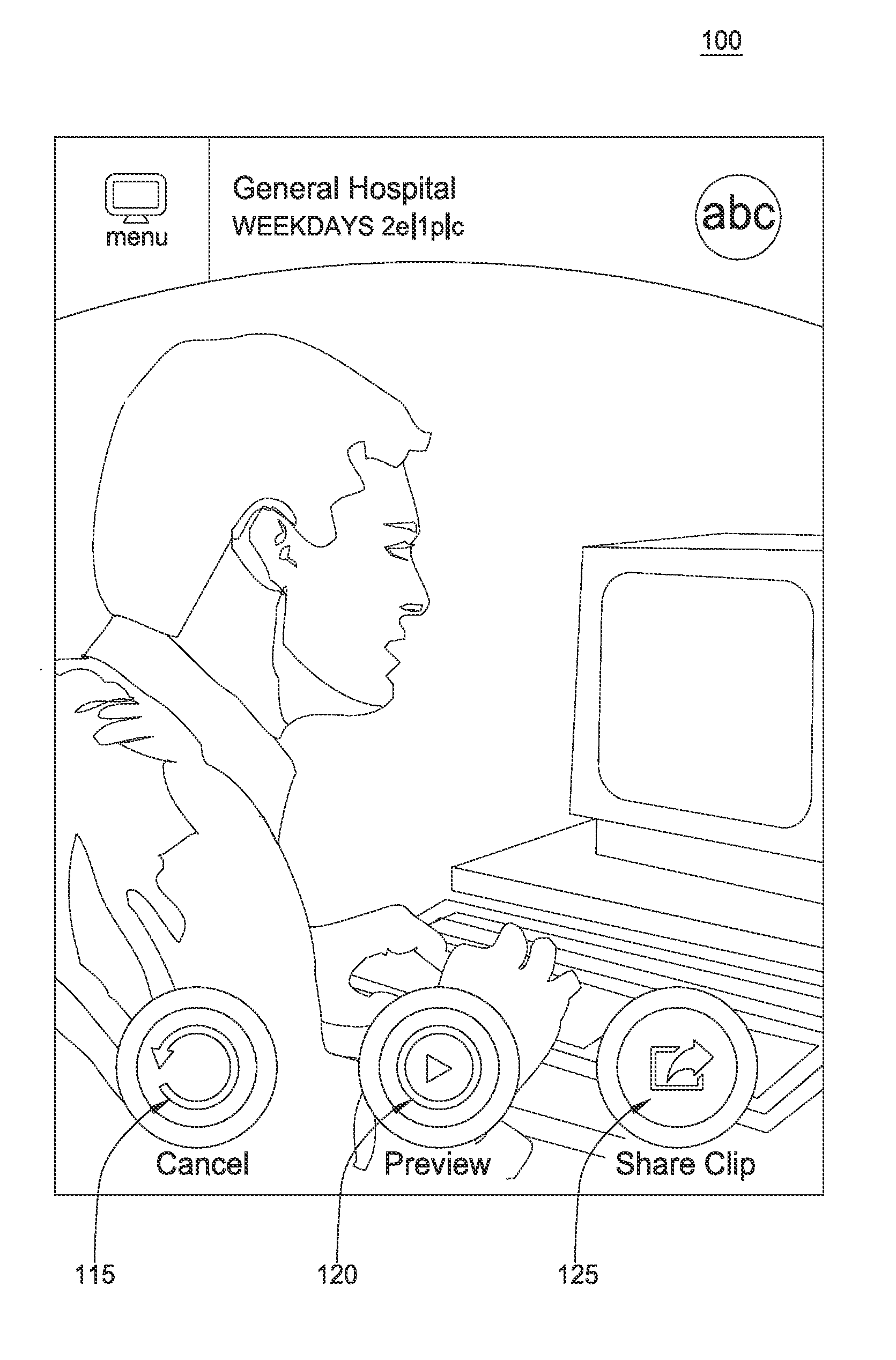

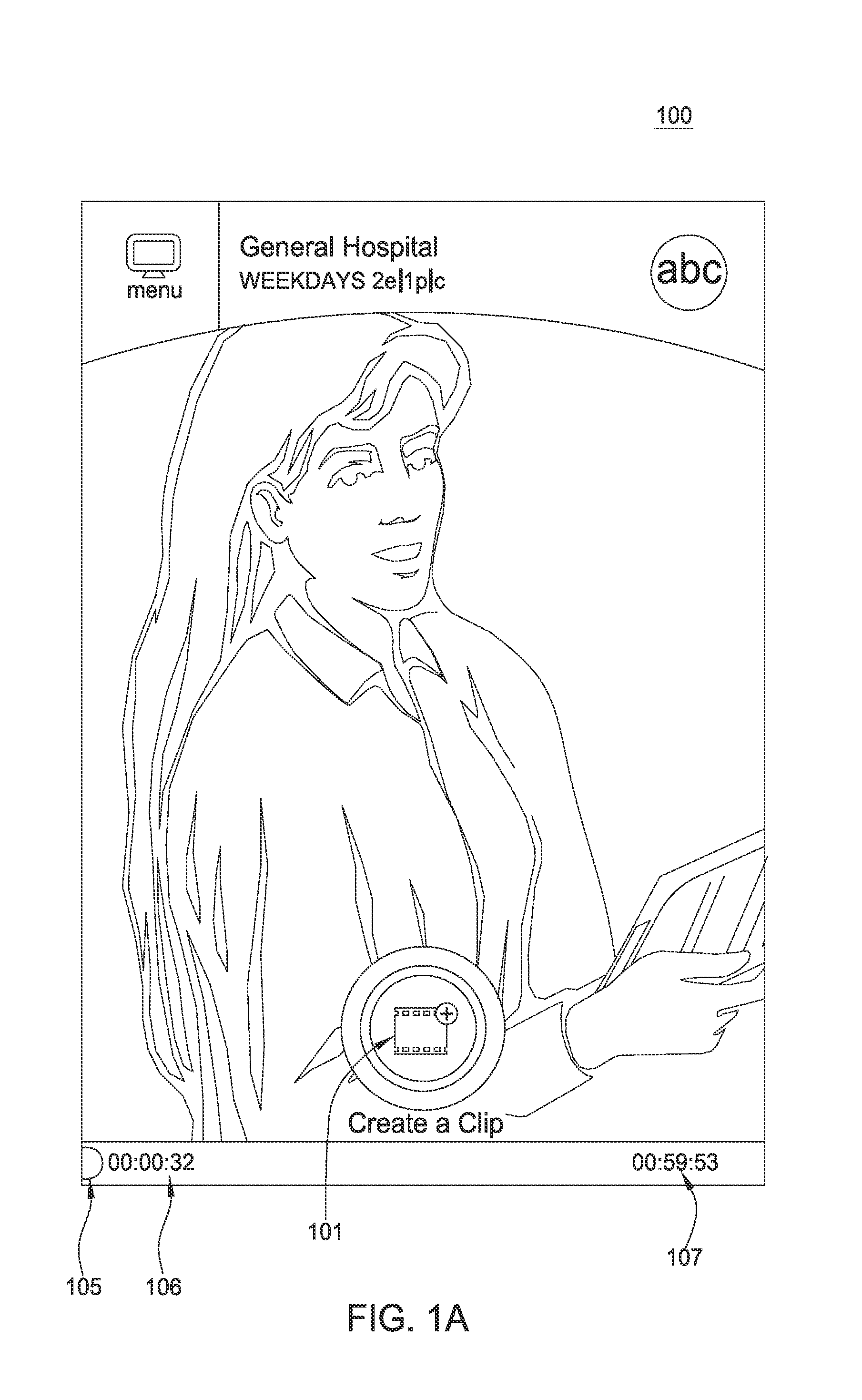

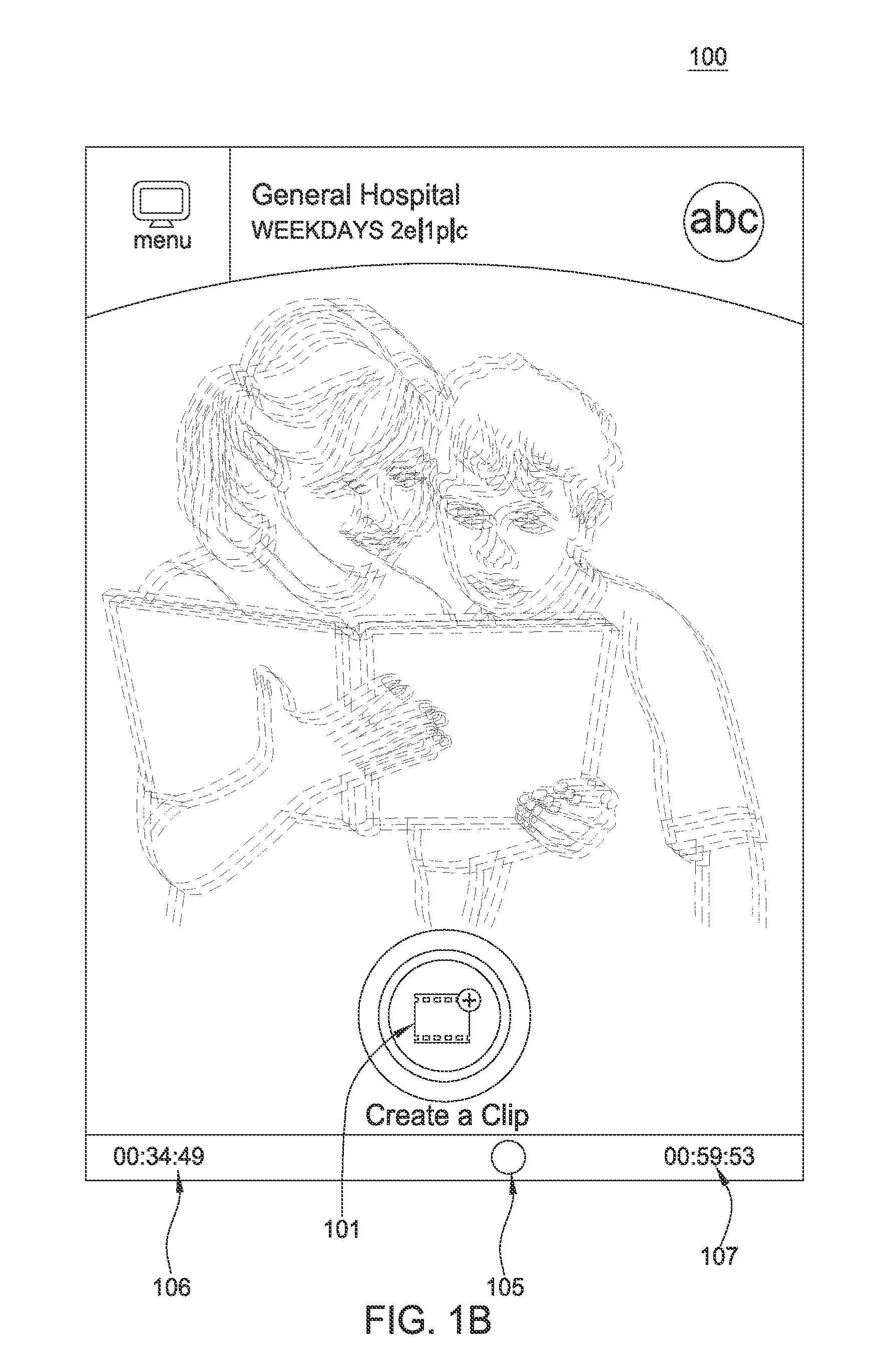

Gesture based video clipping control

Active; US20140282001A1; Video data browsing/visualisation; Special data processing applications; Control system; Computer program

System, method, and computer program product to generate a clip of a media file on a device having a touch input component, the media file comprising a plurality of segments, by outputting for display a first segment of the media file, responsive to receiving: (i) input indicating to generate the clip of the media file using the first segment, and (ii) a first swipe gesture on the touch input component: identifying a subset of segments, of the plurality, based on a direction of the first swipe gesture, the first subset of segments including a destination segment, and outputting for display each of the subset of segments, and responsive to receiving input selecting the destination segment as part of the clip of the media file, generating the clip of the media file, the media clip including each segment of the media file between the first and the destination segment.

Owner:DISNEY ENTERPRISES INC

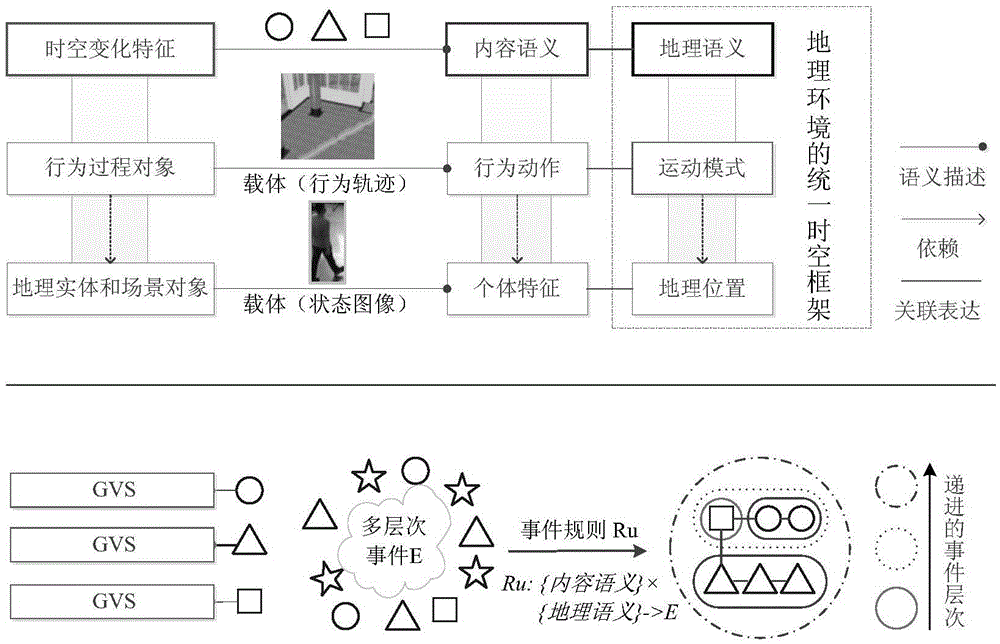

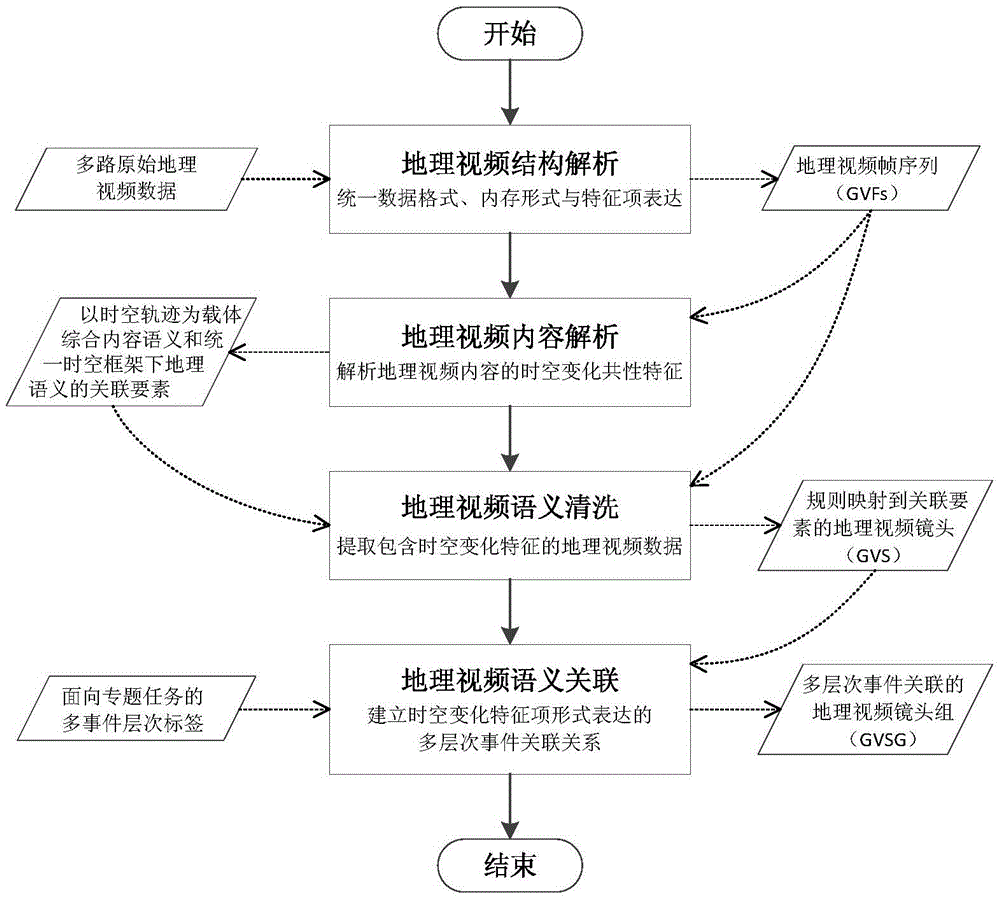

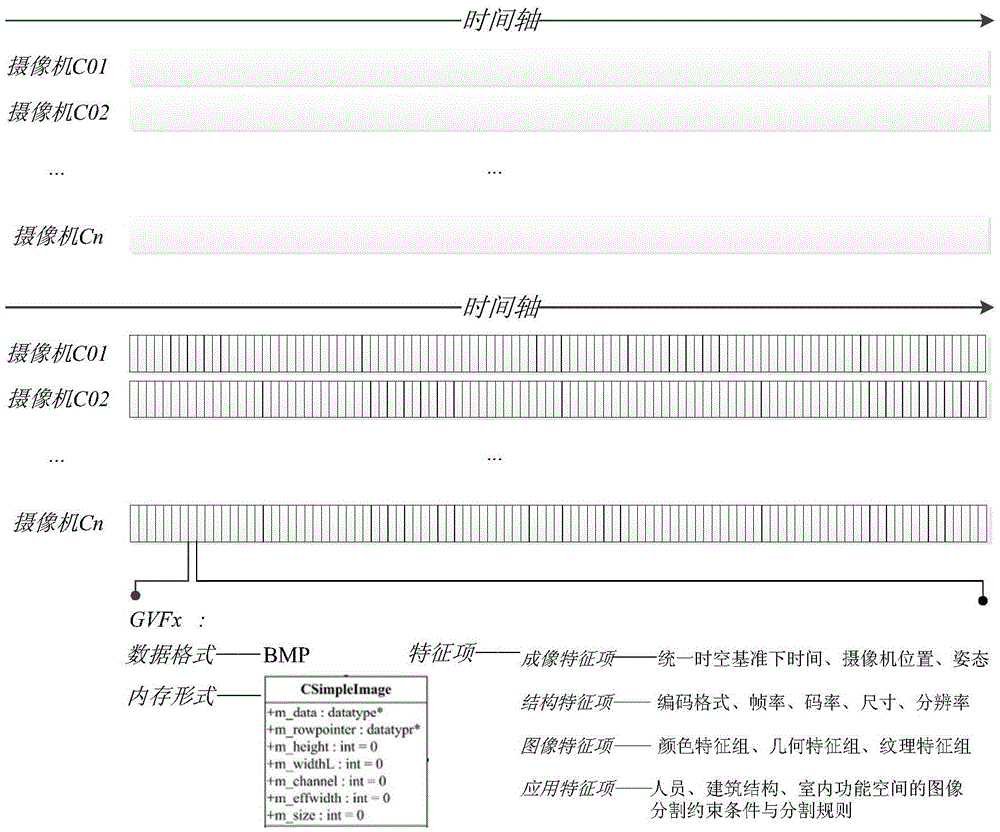

Content-aware geographic video multilayer correlation method

Active; CN105630897A; Avoid redundant details; Improve understanding efficiency; Video data browsing/visualisation; Geographical information databases; Cognitive computing; Data set

The invention relates to a content-aware geographic video multilayer correlation method. The method comprises the following steps: a) unifying the structural features of a multi-source geographic video; b) analyzing the common features of temporal and spatial variation during which a track object is used as a carrier, and establishing an associated element view which combines the content semantics and the geographic semantics under a uniform basis reference; c) extracting a geographic video data set which comprises temporal and spatial variation features, and establishing regular function mapping from data to associated elements; and d) distinguishing the relevancy of geographic video data examples on the basis of a rule and calculating the association distance, gathering the geographic data layer by layer according to the hierarchical property of the association, and ranking data objects in the set according to the association distance. According to the method, the global association, in which the comprehensive geographic video contents are similar, geographically related under the uniform basis reference can be supported, so that the cognitive calculation ability and information expression efficiency of multi-scale complicated behavior events in discontinuous or cross-region monitoring scenes behind multiple geographic videos in monitoring network systems can be enhanced.

Owner:WUHAN UNIV

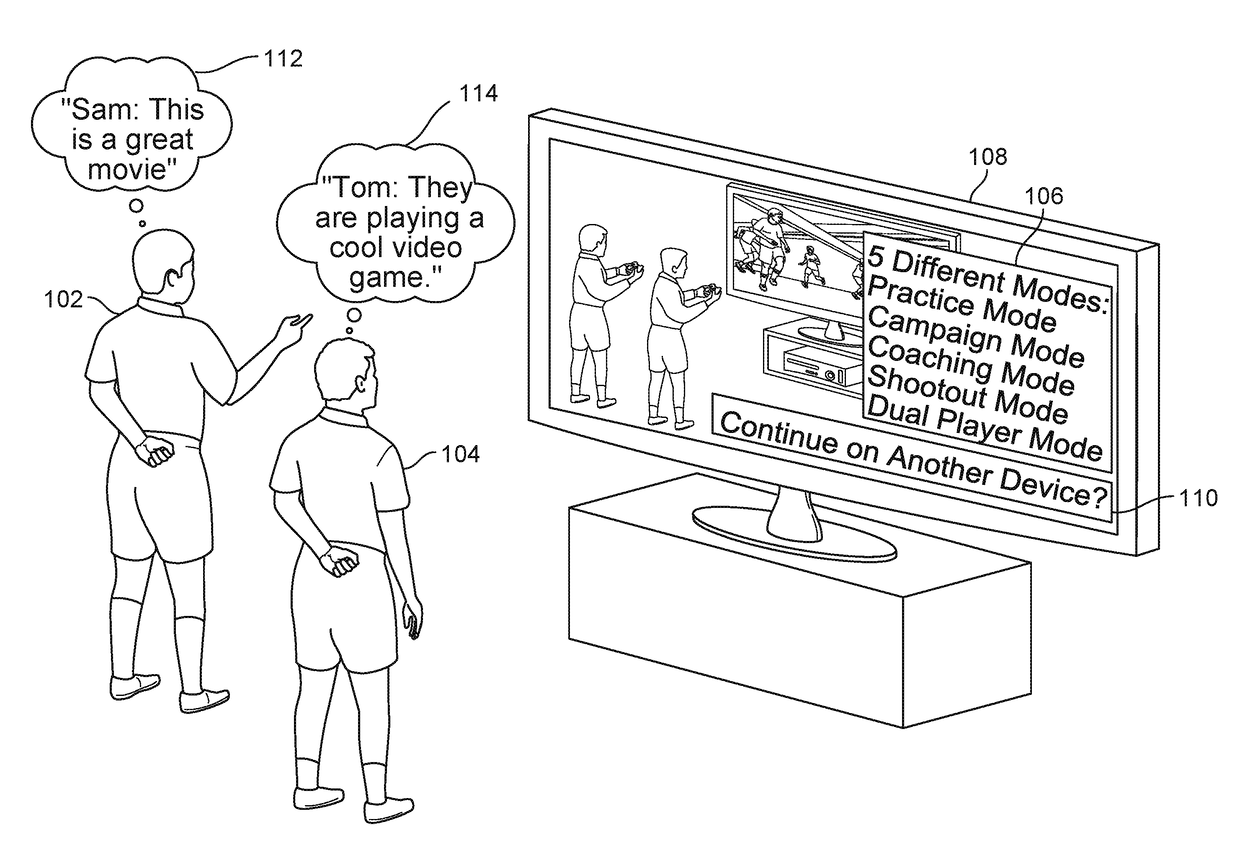

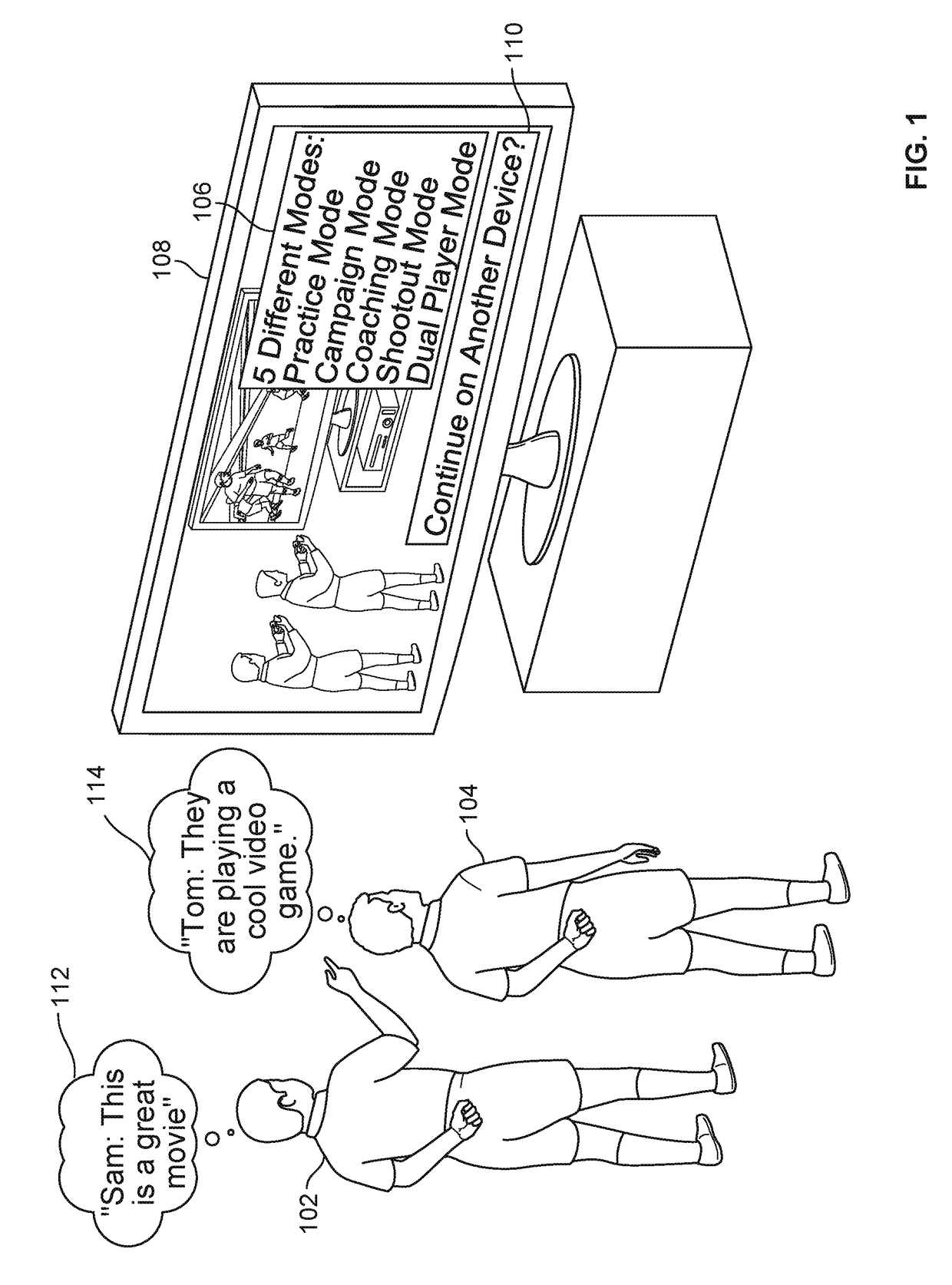

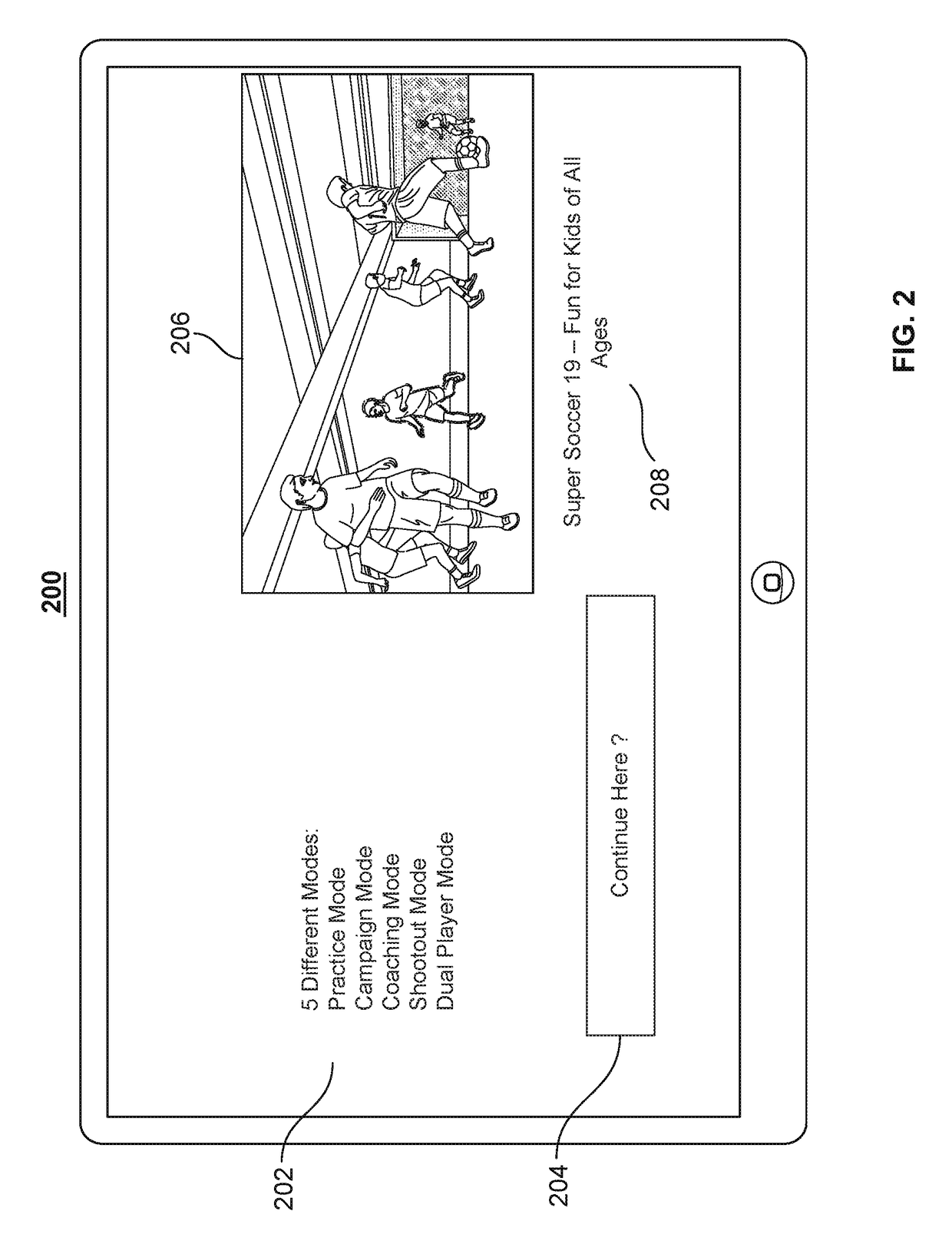

Method and system for transferring an interactive feature to another device

Active; US20170332140A1; Raise the bar; Double threshold; Advertisements; Multimedia data browsing/visualisation; Computer network; Timer

Methods and systems are presented for transferring an interactive feature from a first device to a second device. Two users may be consuming a media asset. Upon receipt of a command to activate an interactive feature, a determination is made whether a user who did not activate the interactive feature is interested in the media asset. Upon that determination, a timer is activated that tracks an amount of time for which the interactive feature is active and a determination is made whether a threshold time period has been met. Once the threshold time period is met, a device associated with the user that activated the interactive feature is identified and the users are prompted to transfer the interactive feature to the device.

Owner:ROVI GUIDES INC

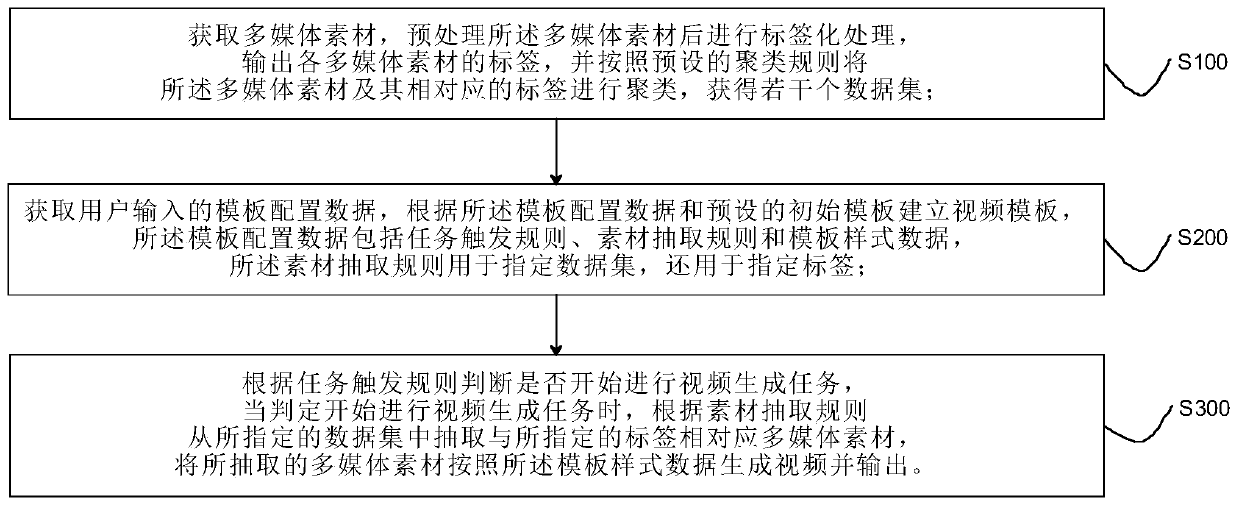

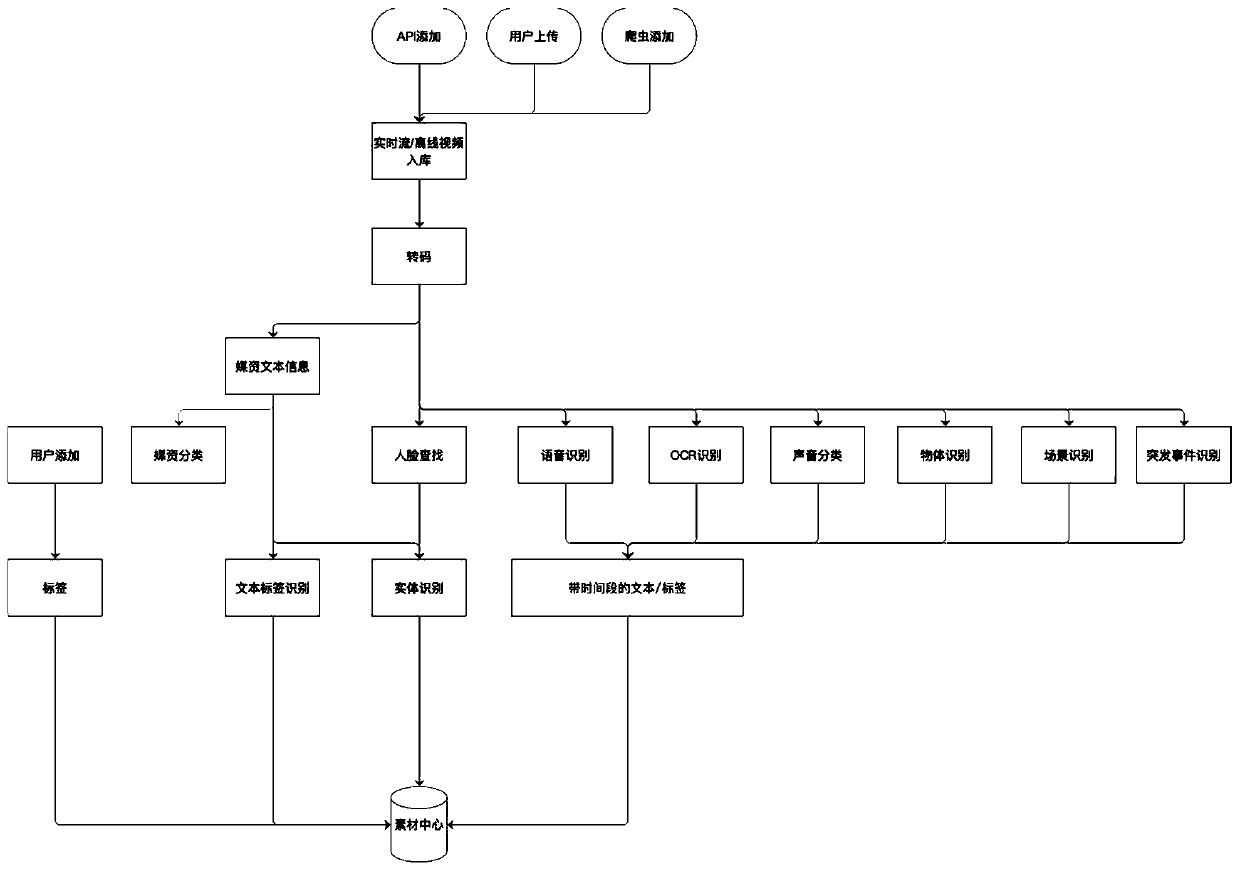

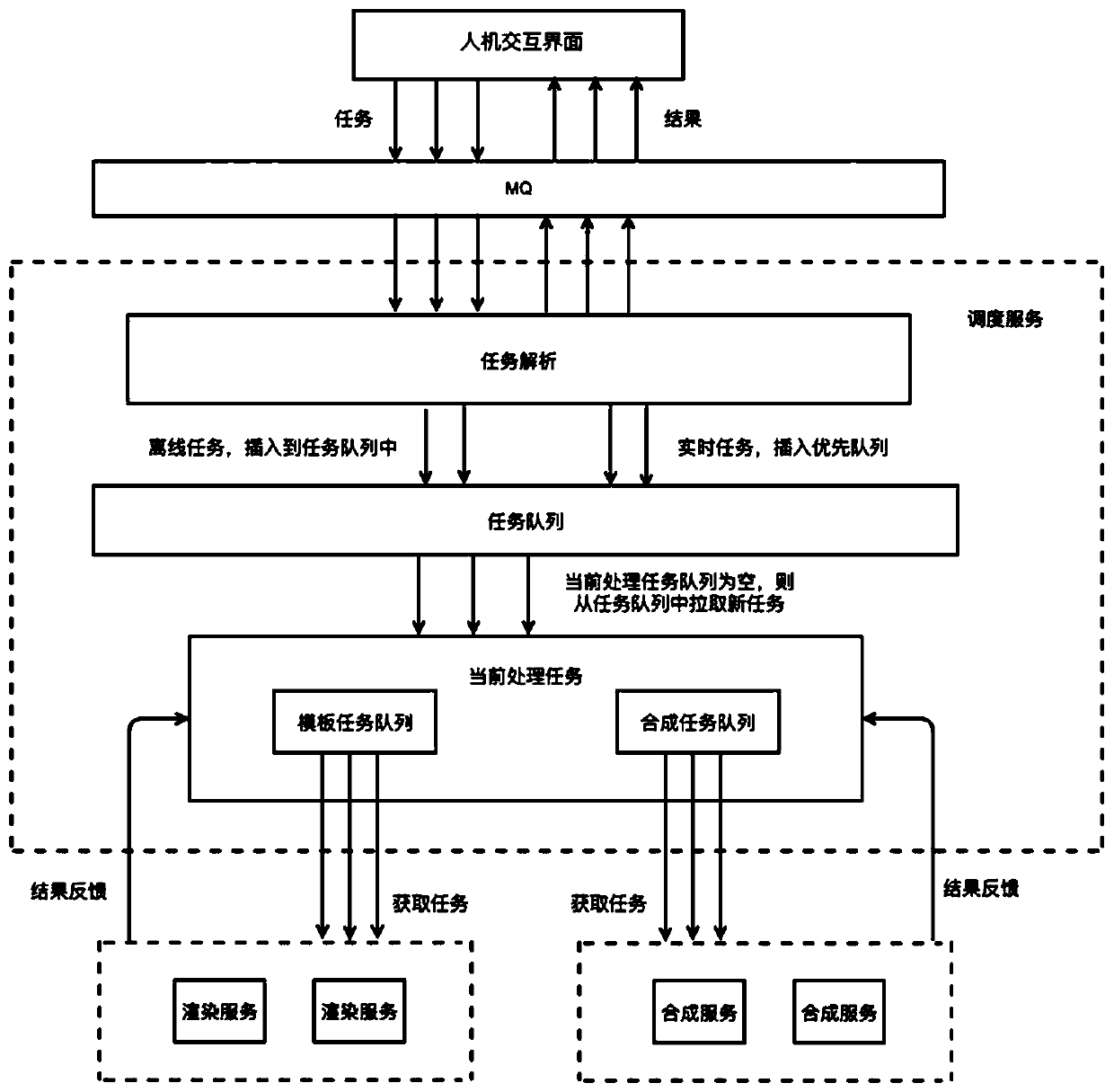

Method and system for generating video by extracting multimedia material based on template

Inactive; CN110532426A; Good technical effect; Easy to operate; Video data indexing; Video data browsing/visualisation; Data set; Computer graphics (images)

The invention discloses a method for generating a video by extracting a multimedia material based on a template. The method comprises the following steps: acquiring multimedia materials, preprocessing the multimedia materials, performing tagging processing, outputting tags of the multimedia materials, and clustering the multimedia materials and the corresponding tags according to a preset clustering rule to obtain a plurality of data sets; obtaining template configuration data input by a user, and establishing a video template according to the template configuration data and a preset initial template; and automatically performing a video generation task according to the template configuration data, extracting the multimedia material according to the template configuration data and the tags, generating a video according to the template configuration data and the extracted multimedia material, and outputting the video. According to the invention, users do not need to search, screen and confirm video materials, and video generation tasks can be carried out automatically according to user requirements, thereby reducing repeated operations by users.

Owner:新华智云科技有限公司

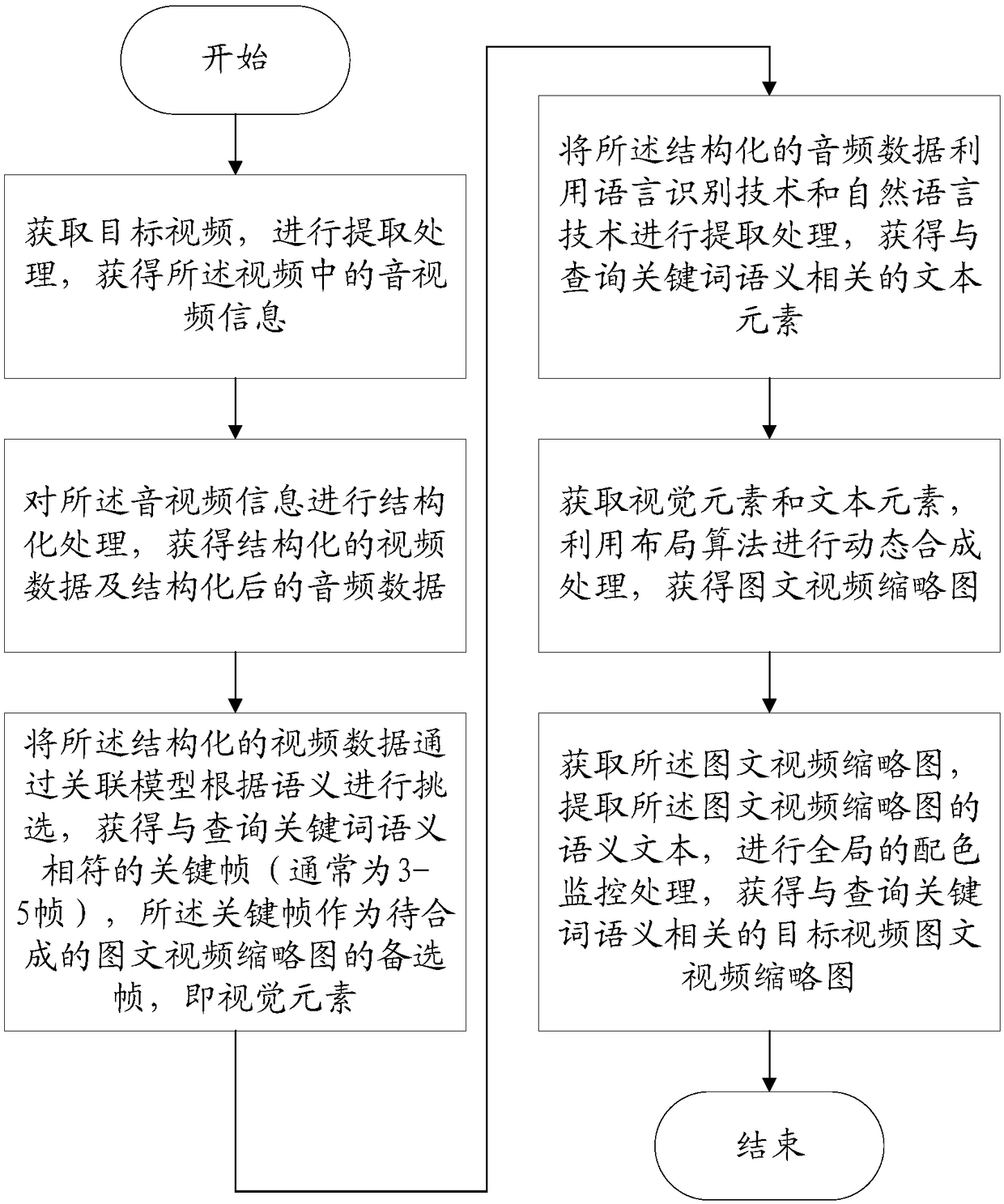

Adaptive intelligent generation method of picture-text video thumbnails based on query terms

Active; CN109145152A; Improve browsing efficiency; Find quickly; Video data browsing/visualisation; Special data processing applications; Semantics; Thumbnail

The invention discloses an adaptive intelligent generation method of picture-text video thumbnails based on query terms. The method comprises the following steps: acquiring a target video and extracting the audio and video information from it; performing structured processing on the audio and video information to obtain structured video data and structured audio data; selecting from the structured video data a key frame corresponding to the query keyword semantics, namely a visual element; extracting and processing the structured audio data to obtain text elements related to the query keyword semantics; performing dynamic composition processing on the visual elements and text elements to obtain picture-text video thumbnails; and extracting the semantic text of the thumbnails and performing global color matching monitoring to obtain the target video thumbnails related to the semantics of the query keywords. The embodiment of the invention can intelligently generate video thumbnails according to query keywords, thereby saving human resources and being more purposeful than existing automatic video thumbnail generation technology.

Owner:SUN YAT SEN UNIV

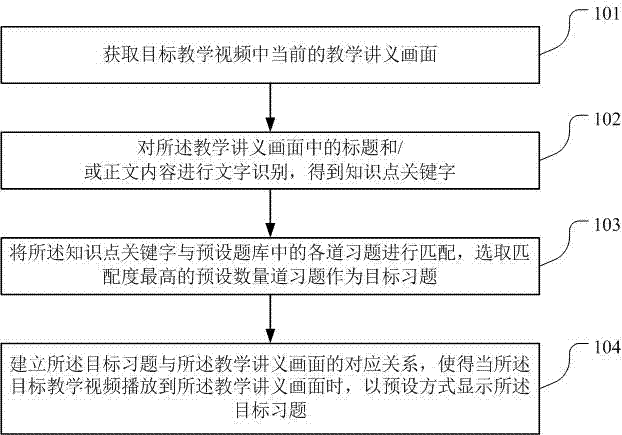

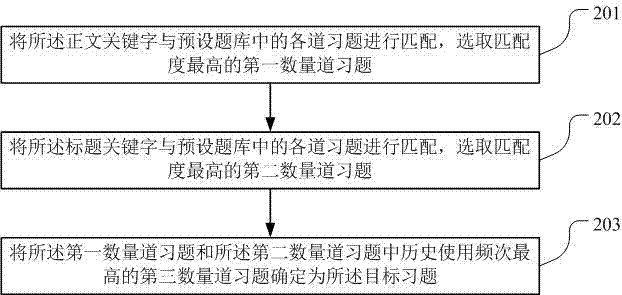

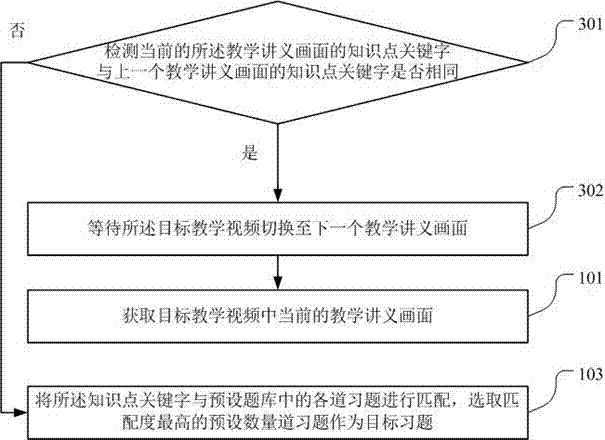

Method and device for matching exercises with teaching video, and recorded playing system

Pending; CN107247732A; Improve teaching effect; Reduce the difficulty of screening; Video data browsing/visualisation; Carrier indexing/addressing/timing/synchronising; Computer science; Data science

The embodiment of the invention discloses a method for matching exercises with a teaching video. The method addresses the problem that manual exercise screening is difficult and inefficient. The method disclosed by the embodiment of the invention comprises the following steps: a current teaching material picture in a target teaching video is acquired; character recognition is conducted on a title and / or main body contents in the teaching material picture to obtain knowledge point keywords; the knowledge point keywords are matched with each exercise in a preset exercise base, and a preset number of exercises with the highest matching degree are taken as target exercises; and corresponding relations between the target exercises and the teaching material picture are established, so that the target exercises can be displayed in a preset mode when the teaching material picture is played in the target teaching video. The embodiment of the invention also provides a device for matching the exercises with the teaching video, and a recorded playing system.

Owner:广州盈可视电子科技有限公司

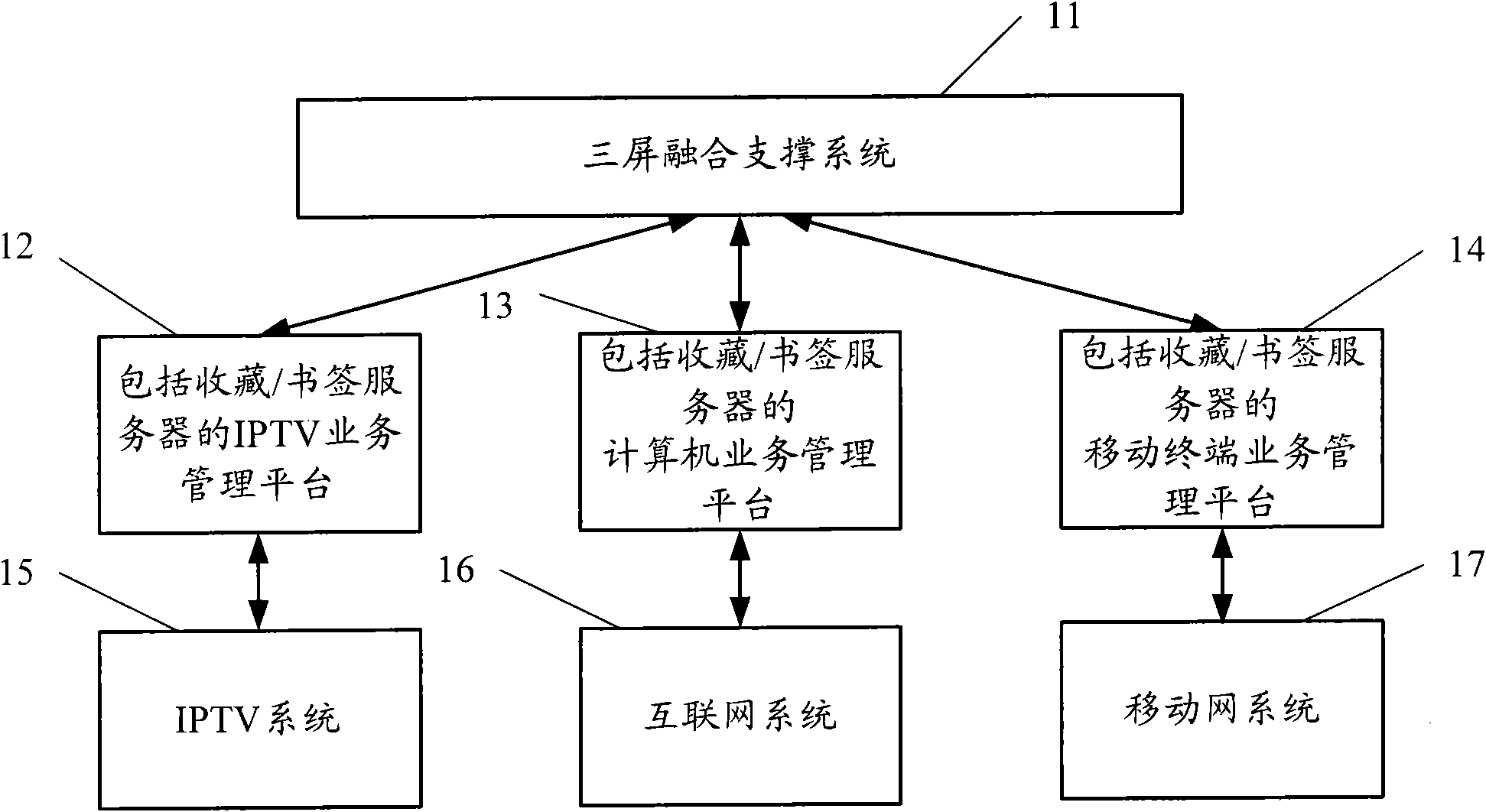

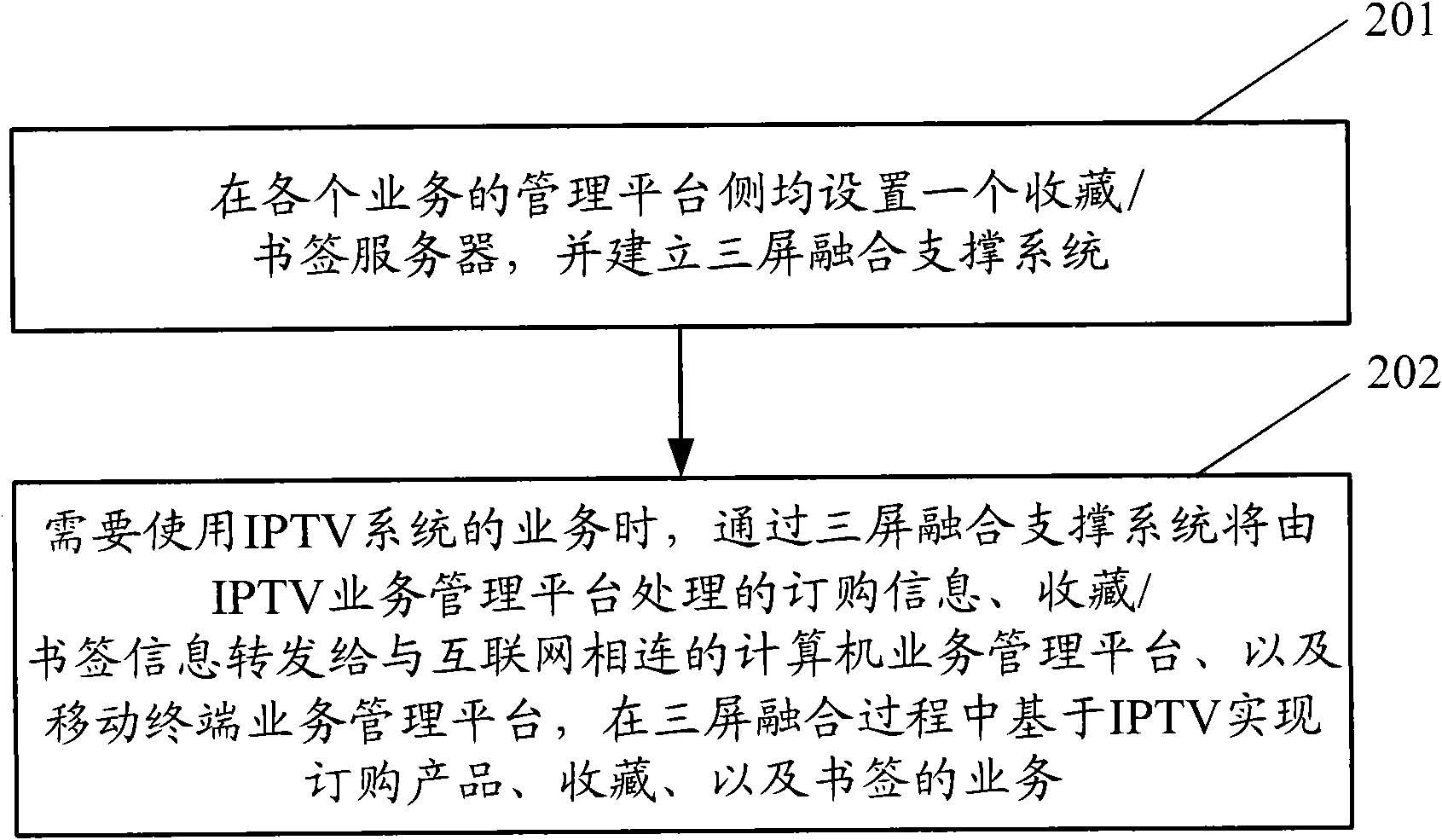

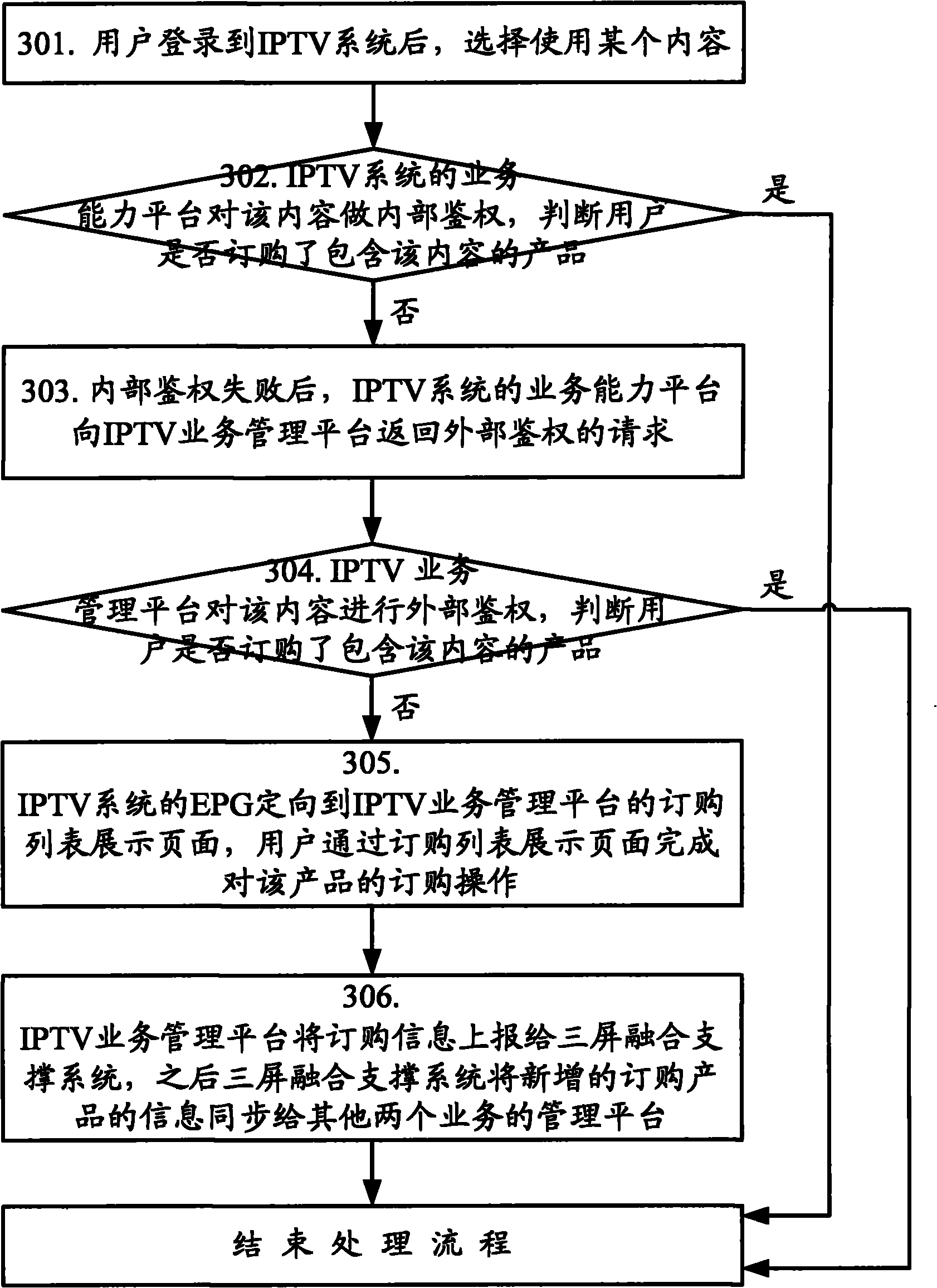

Internet protocol television-based implementing method in three-screen fusion and system

Inactive; CN101789950A; Improve experience; Shorten the time; Video data browsing/visualisation; Two-way working systems; Supporting system; Product order

The invention discloses an IPTV-based implementing method in three-screen fusion, which comprises that: when the service of an IPTV system needs to be used, a three-screen fusion support system forwards order information and collection / bookmark information processed by a collection / bookmark server of an IPTV service management platform to a collection / bookmark server of a computer service management platform and a collection / bookmark server of a mobile terminal service management platform. Meanwhile, the invention discloses an IPTV-based implementing system in the three-screen fusion. The method and the system realize the services of product order, collection and bookmark based on IPTV in the three-screen fusion process, can form good video counseling delivery service among three screens, and can realize the services of three-screen fusion and simultaneous three-screen view so as to bring more opportunities for the development and popularization of the IPTV.

Owner:ZTE CORP
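
The forwarding behaviour described in the abstract above can be sketched as follows. This is an illustrative model only: the class names `BookmarkServer` and `FusionSupportSystem`, the record fields, and the in-memory storage are all assumptions, since the abstract does not describe interfaces or message formats.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class BookmarkServer:
    """Hypothetical collection/bookmark server of one service management platform."""
    platform: str
    records: List[Dict[str, str]] = field(default_factory=list)

    def store(self, record: Dict[str, str]) -> None:
        self.records.append(record)


class FusionSupportSystem:
    """Hypothetical three-screen fusion support system that fans records out."""

    def __init__(self, targets: List[BookmarkServer]) -> None:
        self.targets = targets

    def forward(self, record: Dict[str, str]) -> int:
        """Forward a record processed on the IPTV side to every target platform."""
        for server in self.targets:
            server.store(dict(record))  # copy so platforms do not share state
        return len(self.targets)


# Hypothetical usage: a bookmark made on the IPTV screen reaches the other two.
pc_server = BookmarkServer("computer")
mobile_server = BookmarkServer("mobile terminal")
support = FusionSupportSystem([pc_server, mobile_server])

iptv_record = {"user": "u1", "type": "bookmark", "content": "movie-42", "position": "00:31:05"}
forwarded_to = support.forward(iptv_record)
```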

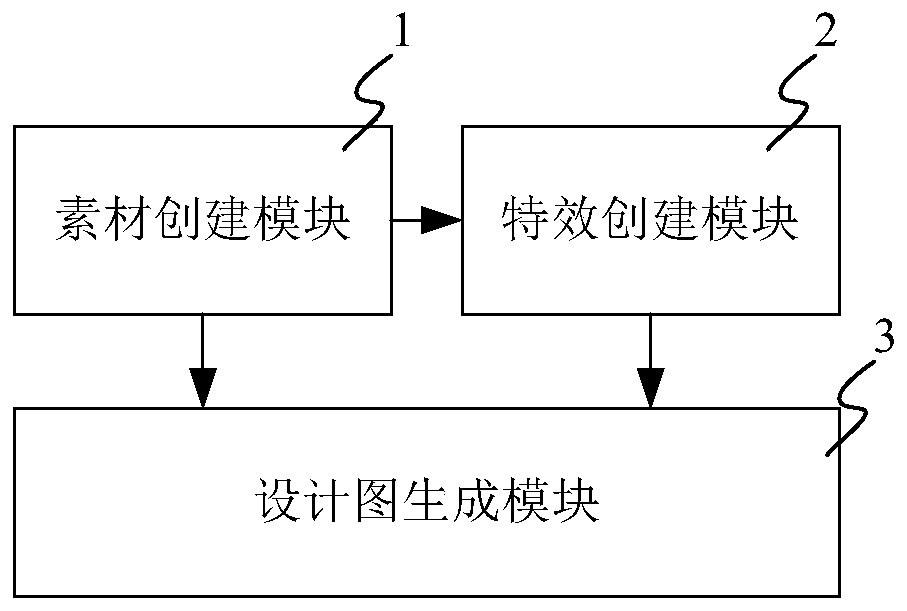

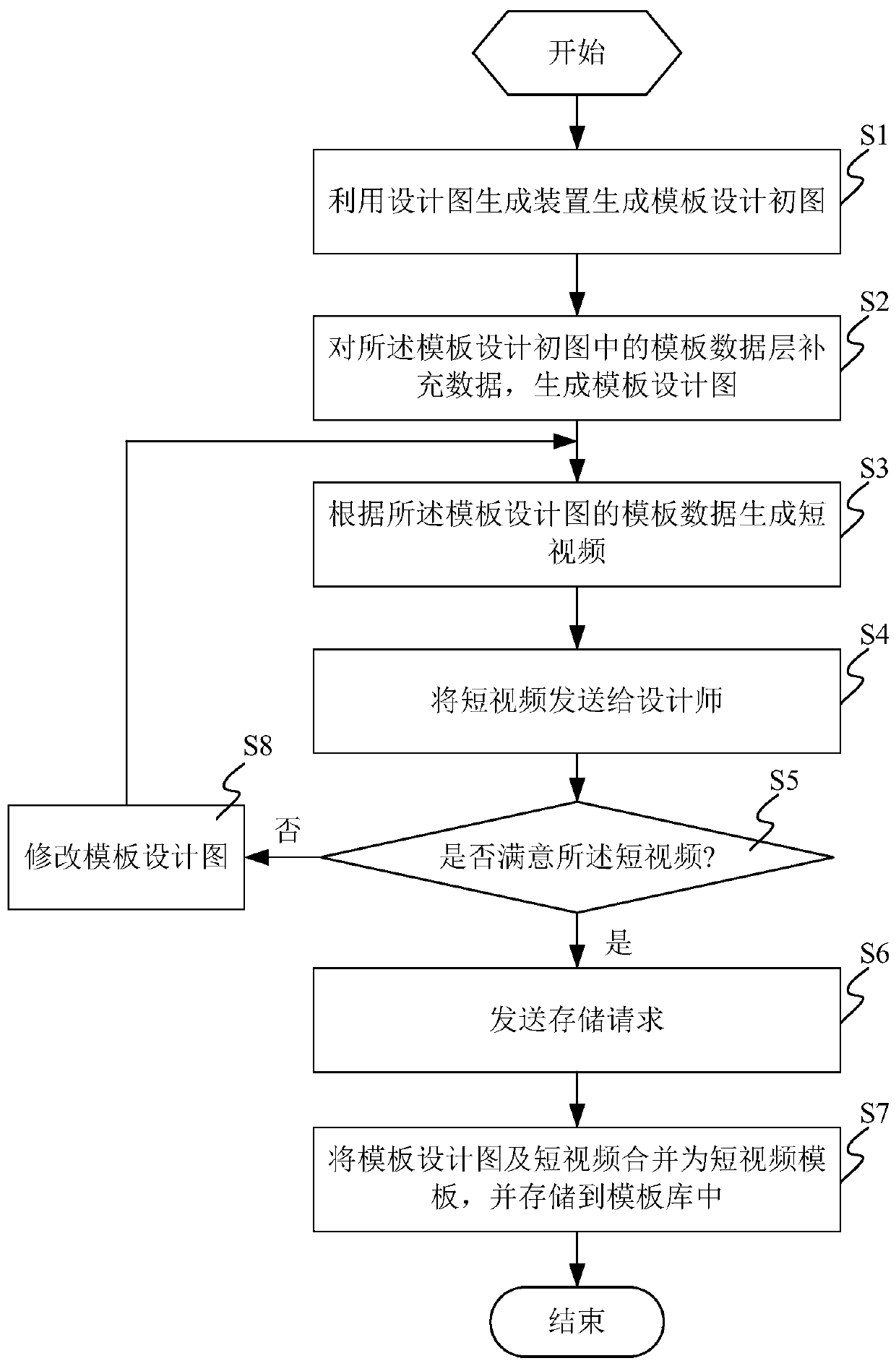

Short video template design drawing generation device and short video template generation method

Active | CN110287368A | Reduce workload | Structured | Video data indexing | Video data browsing/visualisation | Template design | Computer module

The invention relates to a short video template design drawing generation device and a short video template generation method. The device comprises: a material creation module configured to obtain materials and their description information from the input data corresponding to the material parameters; a special effect creation module configured to provide a visual editing interface and obtain special effect information from the corresponding special effect parameter input data; and a design drawing generation module configured to generate an initial template design drawing in a predetermined format from the materials, their description information, and the special effect information. With this visual device, a designer can see the design effect directly while designing materials and special effects, which reduces the designer's workload.

Owner:上海萌鱼网络科技有限公司
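
The three modules in the abstract above can be illustrated with a small sketch. The abstract only says the design drawing follows "a predetermined format"; JSON is assumed here purely for illustration, and the function names, field names, and the rule that every effect must target an existing material are hypothetical.

```python
import json
from typing import Any, Dict, List


def create_material(name: str, kind: str, description: str) -> Dict[str, Any]:
    """Material creation step: a material plus its description information."""
    return {"name": name, "kind": kind, "description": description}


def create_effect(name: str, target: str, params: Dict[str, Any]) -> Dict[str, Any]:
    """Special effect creation step: effect information from parameter input."""
    return {"name": name, "target": target, "params": params}


def generate_design_draft(materials: List[Dict[str, Any]],
                          effects: List[Dict[str, Any]]) -> str:
    """Design drawing generation step: combine both into one JSON document."""
    material_names = {m["name"] for m in materials}
    for effect in effects:
        # Assumed consistency rule: an effect must refer to a known material.
        if effect["target"] not in material_names:
            raise ValueError(f"effect {effect['name']!r} targets unknown material")
    draft = {"materials": materials, "effects": effects}
    return json.dumps(draft, indent=2, sort_keys=True)


# Hypothetical usage
materials = [create_material("title_text", "text", "Opening title, centered")]
effects = [create_effect("fade_in", "title_text", {"duration_s": 0.5})]
draft_json = generate_design_draft(materials, effects)
```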

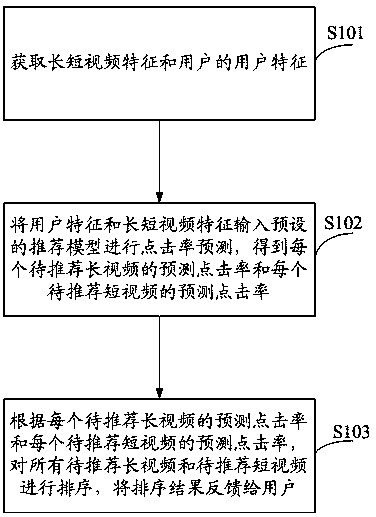

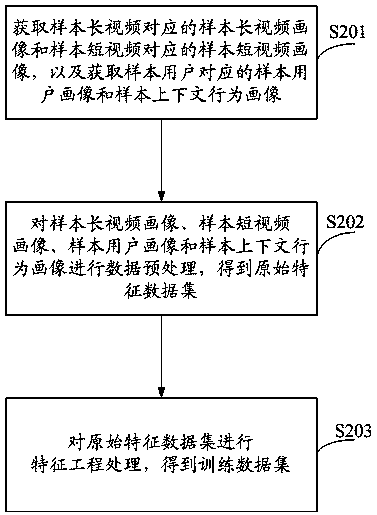

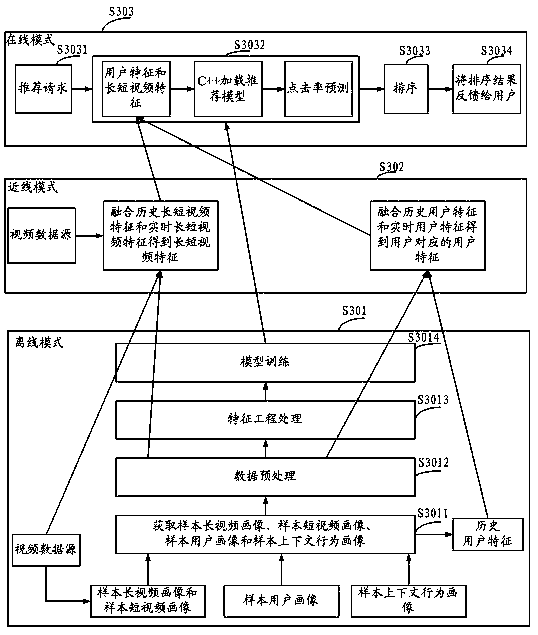

Video recommendation method and system

Inactive | CN111339355A | Improve user experience | Achieve recommendation | Video data querying | Video data browsing/visualisation | Recommendation model | Click-through rate

The invention provides a video recommendation method and system. The method comprises: obtaining long and short video features and the user features of a user; inputting the user features and the long and short video features into a preset recommendation model for click rate prediction, to obtain a predicted click rate for each to-be-recommended long video and each to-be-recommended short video; and sorting all the to-be-recommended long videos and short videos according to their predicted click rates, and feeding the sorting result back to the user. In this scheme, the user features and the long and short video features, which are obtained by combining the long video features with the short video features, are input into the pre-trained recommendation model to obtain the predicted click rates of the to-be-recommended long videos and short videos. The to-be-recommended long videos and short videos are then sorted by predicted click rate and the sorting result is fed back to the user, thereby realizing recommendation of different types of information and improving both the user experience and the accuracy of information recommendation.

Owner:北京搜狐新动力信息技术有限公司
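
The ranking step in the abstract above can be sketched as follows. The patent's recommendation model is not described, so a logistic function over the dot product of user and video feature vectors stands in for it here; this stand-in, the feature vectors, and the names `predict_click_rate` and `rank_candidates` are assumptions for illustration. The sketch also scores each video from its own feature vector rather than from combined long and short video features.

```python
import math
from typing import Dict, List, Tuple


def predict_click_rate(user_features: List[float],
                       video_features: List[float]) -> float:
    """Stand-in model (assumption): sigmoid of the feature dot product."""
    z = sum(u * v for u, v in zip(user_features, video_features))
    return 1.0 / (1.0 + math.exp(-z))


def rank_candidates(user_features: List[float],
                    long_videos: Dict[str, List[float]],
                    short_videos: Dict[str, List[float]]) -> List[Tuple[str, str, float]]:
    """Score long and short candidates together and sort by predicted click rate."""
    candidates = [(vid, "long", feats) for vid, feats in long_videos.items()]
    candidates += [(vid, "short", feats) for vid, feats in short_videos.items()]
    scored = [(vid, kind, predict_click_rate(user_features, feats))
              for vid, kind, feats in candidates]
    scored.sort(key=lambda item: item[2], reverse=True)
    return scored


# Hypothetical usage with made-up two-dimensional features.
user = [1.0, 0.0]
ranking = rank_candidates(
    user,
    long_videos={"L1": [2.0, 0.0], "L2": [-1.0, 0.0]},
    short_videos={"S1": [0.5, 0.0]},
)
ordered_ids = [vid for vid, _kind, _score in ranking]
```

Sorting both video types in a single list is what lets one ranking mix long and short videos, which is the point the abstract emphasizes.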


© 2025 PatSnap. All rights reserved.